Sketching Imaginative Experiences: From Operation to Reflection via Lively Interactive Artifacts

Kenny K. N. Chow

The Hong Kong Polytechnic University, Hong Kong, China

This article presents a cognitive approach to designing for reflection via lively interactive artifacts, which contingently present dynamic information metaphorically, stimulating the imagination through conceptual blends. Operating a lively artifact initially echoes a familiar scenario from an apparently unrelated domain, triggering an imaginative blend between the current and the familiar scenarios to promote immediate understanding; the lively artifact then shows contingent changes that prompt reinterpretation and reflection through successive blends with alternate scenarios. Grounded in ideas related to the embodied cognition thesis from cognitive science, the liveliness framework includes a protocol of cognitive processes with a diagrammatic tool that assists designers and/or researchers in sketching intended immediate and successive blends, scrutinizing sensorimotor correspondences, projecting potential reflection, and planning experiments for collecting samples of cognitive responses from participants. By comparing the predicted with the sampled, one can construct a spectrum of possible interpretations. The application of the protocol is demonstrated through two case studies of lively interactive artifacts, an existing mobile interface and a newly designed digital-tangible interface, which aim to encourage users to reflect on battery and tobacco consumption, respectively.

Keywords – Embodied Cognition, Blending Theory, Imagination, Sensorimotor Experience, Reflective Design, Interaction Design.

Relevance to Design Practice – The article presents the liveliness framework as an approach to stimulating people’s imagination and reflection on habitual behavior, including a protocol of cognitive processes with a diagrammatic tool for designing, predicting, and examining the user experiences of lively interactive artifacts.

Citation: Chow, K. K. N. (2018). Sketching imaginative experiences: From operation to reflection via lively interactive artifacts. International Journal of Design, 12(2), 33-49.

Received Jun. 3, 2016; Accepted Nov. 21, 2017; Published Aug. 30, 2018.

Copyright: © 2018 Chow. Copyright for this article is retained by the author, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: sdknchow@polyu.edu.hk

Kenny K. N. Chow is an Associate Professor in the School of Design at The Hong Kong Polytechnic University. Chow received a Ph.D. in Digital Media from the Georgia Institute of Technology, U.S.A., an M.F.A. from the City University of Hong Kong, and an M.Sc. and a B.Sc. from the University of Hong Kong. He has published in journals such as Leonardo, Interacting with Computers, Animation: An Interdisciplinary Journal, and Information Design Journal, and in conference proceedings such as Persuasive Technology, the International Design and Emotion Conference, and others. Chow’s latest book is Animation, Embodiment, and Digital Media: Human Experience of Technological Liveliness, published by Palgrave Macmillan.

Introduction

User experience includes not only usability but also other design concerns such as aesthetics, affect, and meaning. Many researchers and theorists have proposed theoretical frameworks revolving around these aspects (Forlizzi & Battarbee, 2004; Locher, Overbeeke, & Wensveen, 2010; McCarthy & Wright, 2004; Schifferstein & Hekkert, 2008). Recent studies have investigated more specific experiences, such as serendipity (Liang, 2012) and surprise (Lin & Cheng, 2014). This article looks at the experience of interpretation and imagination, from the operational to the reflective level. When operating a device, people understand the meaning or effect of their actions based on the feedback or changes they bring about. A desktop computer user clicks and drags the mouse slightly to the left and sees the window on the screen move in the same direction. One may have a sense of grasping something on the virtual desktop via the mouse, much as one does with a remote control. Different effects, however, can imply nuances in meaning. When using a mobile navigation application (e.g., Google Maps) with compass mode enabled to check one’s current geographical position, one turns the mobile phone to the right and sees the displayed map rotate around the current position, revealing content on the right. The action and effect look similar to pointing a handheld radar device to inspect the surroundings. When one launches the built-in calculator on the same phone and turns the phone to landscape orientation, the basic calculator rotates and changes into a scientific one, echoing physical scientific calculators with wide keypads. The meaning of similar actions thus varies from turning one’s viewpoint to manipulating an object. In either case, the user understands the digital operation logic through metaphorical mapping from varied mundane physical experiences, resulting in different interpretations.

This kind of imaginative interpretation can be explained by conceptual blending, a theory introduced by Fauconnier and Turner (2002). Blending is a basic cognitive operation that combines two or more existing concepts, which range from purely abstract thoughts to experientially and physically grounded ideas, into one with a new structure formed partially from each input. It is pervasive in our everyday meaning making. Consider the saying “You are digging your own grave,” which describes a person unwittingly causing his or her own failure (p. 131). It combines the concept of “digging the grave” with that of “unwitting failure.” From the former, elements like the act of digging and the dead person to be buried, but not the living digger, constitute part of the blend. From the latter, the act of causing failure and the person who is responsible are brought in. The act of digging corresponds to the act of causing failure, and the dead person is the responsible person. The resulting blended concept has a new structure that includes the same person who causes and suffers the failure. Blending is also commonly employed in creative activities like poetry (Harrell, 2007; Hiraga, 2005), interface and interaction design (Benyon, 2012; Imaz & Benyon, 2007; Markussen & Krogh, 2008), and digital art (Chow & Harrell, 2012).

Apart from conscious thought, blends can work quickly and go unnoticed, especially after repeated practice. Fauconnier (2001) explicates the immediate, successive blends in using the computer desktop interface, including those between the mouse and the pointer on the screen, between the click-and-drag action and grasping things, between the drag-and-drop action and putting things into a container, and so on. In these blends, one input includes the computer interface and the actions performed on it, while the other comes with familiar concepts or mundane experiences from the physical domain, like the manipulation of objects. There are similarities and differences between these inputs. Through the immediate blends, however, users feel that they are directly manipulating windows and icons on the virtual desktop.

Blends can integrate material objects and related actions with seemingly unrelated concepts or scenarios, yielding small but vivid imaginative narratives. Coulson and Fauconnier (1999) analyze the imaginative blends in the common game of trash can basketball. One input is the game scenario, including crumpled paper, a trash can, and the action of throwing the crumpled paper into the trash can. The other input is common knowledge about basketball or a remembered scenario of playing (or watching) the game. The correspondences, connecting the crumpled paper to the basketball, the trash can to the hoop, and the act of throwing trash to shooting the ball, are all compressed into an imagined scenario of playing basketball in the workplace.

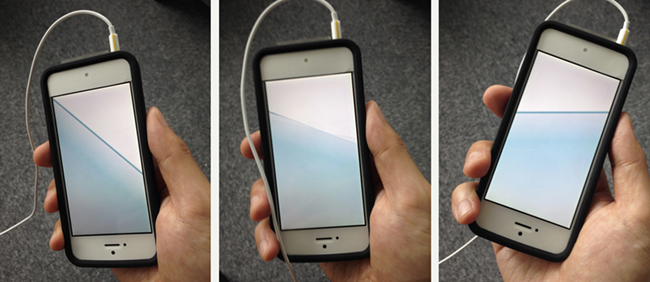

This research starts with similar kinds of immediate, imaginative blends combining material artifacts and related user actions with apparently unrelated yet familiar experiences from another domain, resulting in what Chow and Harrell (2012) call material-based imagination. When the material artifacts become digital and dynamic, the imagined, blended scenarios can continue to unfold with contingent changes, prompting inference of their causes through blends that succeed the earlier ones, and then narrative imagining with the changes and the inferred causes. The outcomes serve not only operational understanding but also reflective meaning making. An illustrative example is the mobile phone NEC FOMA N702iS (designed by Oki Sato and Takaya Fukumoto). It features an intriguing battery meter on the screen in the form of an image that looks like water. Holding and tilting the phone triggers an animation in which the water seemingly flows in response (see Figure 1 for an implementation by the author’s project team). The initial interaction resembles swaying a container filled with liquid, triggering an immediate blend in the user and resulting in the image of a phone filled with water. After some time, the water level drops. The change prompts one to infer that the drop results from consumption: the scenario of using the phone is combined with a scenario of consuming water from a bottle. This blend yields an imaginative narrative in which using the phone consumes the water inside it, which may lead to reflection on one’s consumption behavior.
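The meter’s core mapping can be sketched in a few lines. The sketch below is not the N702iS software or the author’s implementation; the function name, its inputs, and the assumption that the device’s rotation within the plane of the display is available (e.g., from an accelerometer) are hypothetical. It shows how an augmented reading (remaining charge) can be rendered as something that behaves like liquid: the level tracks the battery, while the water line counter-rotates against the tilt so that it appears to stay level with the ground.

```python
from dataclasses import dataclass


@dataclass
class WaterSurface:
    """Hypothetical render state for a water-style battery meter."""
    fill_height: float        # fraction of the display filled with "water" (0.0 to 1.0)
    surface_angle_deg: float  # water line angle relative to the display's horizontal axis


def water_surface(battery_fraction: float, device_roll_deg: float) -> WaterSurface:
    """Map a battery reading and the device tilt onto a water surface.

    battery_fraction: remaining charge, expected in [0, 1] (hypothetical input).
    device_roll_deg: rotation of the device within the plane of its display,
        assumed to come from a motion sensor such as an accelerometer.
    """
    # The water level simply mirrors the battery level, clamped to [0, 1].
    level = min(max(battery_fraction, 0.0), 1.0)
    # Real liquid stays level with the ground, so on a display rotated by
    # +theta degrees the water line appears rotated by -theta degrees.
    return WaterSurface(fill_height=level, surface_angle_deg=-device_roll_deg)


print(water_surface(0.8, 15.0))
# WaterSurface(fill_height=0.8, surface_angle_deg=-15.0)
```

Sloshing dynamics and the phone’s actual geometry are deliberately left out; only the two correspondences that drive the blend (tilt to water movement, battery level to water level) are modeled.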

Figure 1. An implementation of the water-level battery meter originally on the mobile phone N702iS.

The battery level is indicated via the illusion of water inside the phone.

The water-level battery meter is an exemplar of lively interactive artifacts, whose interface or appearance features dynamic phenomena (e.g., water waves) that initially look unrelated yet familiar (e.g., a water container), supporting immediate understanding (e.g., tilting to move the water); the artifact then shows contingent changes (e.g., the level drops) that stimulate further interpretation, imagination, and even reflection (e.g., on resource consumption). Liveliness in this article, grounded in cognitive science theories including the concept of animacy, refers to the kind of dynamic and contingent phenomena that stimulate imagination and interpretation. One way to create this stimulation is through conceptual blending. This research thus explores the potential of successive, imaginative blends for reflection in both designers and users, with lively artifacts as anchors. The liveliness framework informs an approach that includes a protocol of cognitive processes with a diagrammatic tool, facilitating the prediction of cognitive responses in users and the collection of real, particular instances via experiments involving participants. The application of the protocol is demonstrated through two case studies of lively interactive artifacts. One is the water-level battery meter, an existing design; the other is a new design generated from a project led by the author, which aims to help users quit smoking through imagination and reflection.

Related Concepts

Lively interactive artifacts enable meaning making at multiple cognitive levels during different moments of use. The whole meaning-making journey involves operation, imagination, interpretation, and reflection. There are a few major concepts from design and cognitive science related to different levels of understanding.

From Operation and Understanding

Krippendorff and Butter (2008) define design semantics as the understanding of how others come to understand and interact with designed artifacts. Based on Gibson’s (1977) notion of affordances and Martin Heidegger’s hammer analogy in terms of present-at-hand and ready-to-hand (Dourish, 2001), they delineate three stages of understanding of an artifact, namely recognition of affordances of the artifact, exploration of the sequence of use, and reliance on the artifact like second nature. This operational sense of understanding can be compared with the idea of usability, which concerns whether a design is easy for users to understand, learn, remember, and use. Donald Norman, in The Design of Everyday Things (2002), introduces a set of usability principles. The principles of perceived affordances and visible feedback are required for the above recognition and exploration stages. Norman’s natural mapping of interface controls and system results, for example via spatial analogy, makes an interface easy for users to learn and remember, and thus one may arrive at Krippendorff and Butter’s reliance stage wherein the operation becomes natural and direct.

While Norman’s natural mapping calls for bridging the cognitive gaps between controls and results, Wensveen, Djajadiningrat, and Overbeeke’s (2004) framework for natural coupling of user action and product function suggests ways of bridging these gaps in six aspects, namely time, location, direction, dynamics (continuous or discrete motion), modality (sight, sound, or touch), and expression (related to users’ emotional states). The bridging is achieved through feedback (information returned about the result of an action) and feedforward (information offered about an action before it is taken), and the information in each can be of three types: inherent (the natural consequence of the action taken), augmented (additional signals, usually added by design), and functional (the user-intended effect). Natural and direct coupling often takes place in all six aspects through inherent information when people use mechanical products (e.g., scissors), whereas electronic products (e.g., alarm clocks) and computer interfaces (e.g., GUIs) rely more on augmented information and couple fewer aspects. Wensveen and colleagues use their alarm clock design to demonstrate how to enrich action possibilities in electronic products, giving users more embodied freedom in performing motor actions and making the interaction more direct and intuitive. This freedom and directness in operation also opens up possibilities for expressing emotions, demonstrating connections between experiences at the sensorimotor and the affective levels (Locher et al., 2010).
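Researchers who analyze existing products against this framework may find it useful to record, for each of the six aspects, which type of information couples action and function. The following minimal sketch assumes such a record, simplified to one dominant information type per aspect; the enum and function names are hypothetical, the scissors profile follows the description above, and the GUI profile is an invented contrast case rather than an analysis from Wensveen and colleagues.

```python
from enum import Enum


class Aspect(Enum):
    TIME = "time"
    LOCATION = "location"
    DIRECTION = "direction"
    DYNAMICS = "dynamics"      # continuous or discrete motion
    MODALITY = "modality"      # sight, sound, or touch
    EXPRESSION = "expression"  # related to users' emotional states


class Info(Enum):
    INHERENT = "inherent"      # natural consequence of the action
    AUGMENTED = "augmented"    # additional signals, usually added by design
    FUNCTIONAL = "functional"  # the user-intended effect


def coupling_profile(couplings: dict[Aspect, Info]) -> dict[str, int]:
    """Summarize how many aspects are coupled and through which information type.

    `couplings` maps each coupled aspect to the information type that couples it;
    aspects left out of the dict are treated as uncoupled.
    """
    profile = {"coupled_aspects": len(couplings)}
    for info in Info:
        profile[info.value] = sum(1 for used in couplings.values() if used is info)
    return profile


# From the text: scissors couple all six aspects through inherent information.
scissors = {aspect: Info.INHERENT for aspect in Aspect}
# Invented contrast case: a GUI coupling only two aspects via augmented information.
hypothetical_gui = {Aspect.TIME: Info.AUGMENTED, Aspect.MODALITY: Info.AUGMENTED}

print(coupling_profile(scissors))
print(coupling_profile(hypothetical_gui))
```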

With advances in multimodal technologies such as touchscreens, motion sensing, and location tracking, electronic products with digital interfaces (e.g., smartphones) can present or represent augmented information that looks inherent. The aforementioned water-level battery meter displays images whose reaction seems to be a natural consequence of the user’s tilting action. This kind of dynamic and metaphorical representation makes augmented information seem inherent in various aspects of natural coupling, and it offers an alternative way of enriching action possibilities, eliciting user responses at a level other than operational understanding. The liveliness framework introduced below particularly focuses on the link from sensorimotor experiences to imagination, interpretation, and reflection.

To Interpretation and Reflection

A few design notions have been proposed in relation to meaning making at a level other than the operational. The following is a cursory overview related to the liveliness framework.

Hallnäs and Redström (2001) argue that technology can be designed to be less efficient so that people have more time to think and reflect. They metaphorically call this agenda slow design because, instead of compressing the time needed to finish tasks, technology can supply time for thinking. The agenda comes with two design guidelines: make the form or expression complex for users to understand, while keeping the material or function simple.

Bolter and Gromala (2004) also see potential in designing interfaces that are not always transparent but sometimes reflective. While transparency directs the user’s attention to the immediate tasks, reflectivity draws attention to the interface, the medium, or the instrument itself. Designers should consider the rhythm of transparency and reflectivity at the designed interface, that is, the temporal or situational balance between the two qualities.

“Ludic” design (Gaver et al., 2004) attempts to balance meaning production and utility by engaging users with playful elements. Bill Gaver and colleagues believe that people can learn and reflect through play. Sengers and colleagues look at the reflective potential of computing and introduce reflective design (Sengers, Boehner, David, & Kaye, 2005), which emphasizes critical reflection as a means of exposing people’s unconscious assumptions about everyday technologies and inviting them to look at possibilities other than the norm, from the perspectives of both designers and users. It integrates a range of related approaches, including critical design (Dunne & Raby, 2001) and ludic design, and proposes a set of design principles and guidelines, such as defamiliarizing the interface (rather than using common design patterns) and incorporating ambiguity, which designers can follow not only to question entrenched practices themselves but also to make users aware of those practices.

Fleck and Fitzpatrick (2010) offer a definition of reflection in terms of four levels. The ground level is not reflective but only describes actions or happenings in an event. The first level is providing explanations or justifications for the actions, which can involve interpretation. The second level is considering and comparing different explanations or interpretations. The third level is challenging personal assumptions, leading to a change in practice; Sengers and colleagues’ reflective design seems to aim at this level. Finally, the fourth level is relating the event to a wider social context.

The liveliness approach uses blends to generate ideas for metaphorical representations, which echo familiar concepts from apparently unrelated domains. This unusual familiarity at interfaces underpins the balance between operation, which should be easier to understand, and reflection, which can be less direct, resonating with Bolter and Gromala’s rhythm of transparency and reflectivity, as well as ludic design’s endeavor. The representations at times show contingent changes with uncertain causes, prompting users to consider different interpretations and even challenge their initial assumptions. This intended uncertainty is also in line with Hallnäs and Redström’s slow design and Sengers and colleagues’ reflective design, aiming at Fleck and Fitzpatrick’s third level of reflection or higher.

Embodied Cognition: Schemas, Movement, Animacy, and Blends

The liveliness framework is grounded in embodied cognition, an emerging philosophical position in cognitive science that offers an alternative to cognitivism and connectionism (Varela, Thompson, & Rosch, 1991). It includes a substantial set of theories supporting the bodily basis of human cognition in terms of understanding, reasoning, categorizing, imagining, and even feeling. The theories that underpin the connection from sensorimotor experiences to imagination and interpretation in liveliness include image schemas, animacy, and blends.

Image Schemas and Conceptual Metaphors

Johnson (1987) and Lakoff and Johnson (1999) introduce the idea of image (or embodied) schemas, which are spatial structures and dynamic motion patterns recurring in our bodily, sensorimotor experiences (e.g., VERTICALITY/UP-DOWN: things up above usually require more effort to access) and underlying many entrenched but often unnoticed abstract concepts in our minds. The background knowledge of a concept is called a domain, and schemas provide structure for source domains in what Lakoff and Johnson (2003) call conceptual metaphors (e.g., in Good Is Up, VERTICALITY/UP-DOWN structures the concrete source domain and quality is the abstract target domain). Numerous examples can be found in our everyday expressions and practices (e.g., we describe something good as being of high quality, and we literally place the champion higher than the runner-up).

Movement and Temporality in Schemas

Johnson (2008) points out that life is inextricably tied to movement and temporality. From the perspective of bodily experience, movement refers to bodily motions and interactions with moving objects, which exist in many image schemas, such as SOURCE-PATH-GOAL: a path is a means of moving from one location to another. Temporality, or the passing of time, can also be felt through the movements of both our bodies and other objects, as illustrated by the two fundamental space-time conceptual metaphors. One is the moving time metaphor: when we say “the coming Friday,” time is a moving object. The other is the moving observer metaphor: when we speak of having two more days to go, the speaker is moving. In the context of design, both user actions and observed movements or changes at interfaces become central to the liveliness framework.

Animacy and Liveliness: Contingent Movements or Changes

Animacy refers to the concept people use to separate the animate from the inanimate in the everyday world. Mandler (1992) and Turner (1996) point out that animacy is a complex image schema of observed movements, including self-motion (something starting to move on its own), caused motion (motion caused by other objects through direct physical contact), and, most importantly, contingent motion, whose causes act indirectly at a distance and thus require perceptual analysis. This motion schematic of animacy resonates with Arnheim’s (1974) degrees of liveliness, which describe the level of complexity in observed movements: pure movement (i.e., mere displacement) as the first, internal change as the second, and self-movement as the third. The fourth level further involves movements motivated by others, either through direct contact or across some spatial gap, which correspond to the caused and contingent motions, respectively, in Mandler’s schematic. In other words, contingent movements or changes look complex, prompting one to interpret and imagine, which is an integral part of the liveliness framework.
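The cause-based distinctions in Mandler’s schematic can be written as a small classifier. This is an illustrative sketch only: the names are hypothetical, the correspondence to Arnheim’s degrees follows the summary above, and Arnheim’s first two degrees (pure movement and internal change) are not covered because they do not depend on an attributed cause.

```python
from enum import Enum
from typing import Optional


class Motion(Enum):
    SELF = "self-motion"              # starts to move on its own
    CAUSED = "caused motion"          # moved by another object through direct contact
    CONTINGENT = "contingent motion"  # cause acts indirectly, across a gap


# Correspondence to Arnheim's degrees of liveliness as summarized above:
# self-movement is the third degree; movement motivated by others, whether by
# contact or across a gap, is the fourth.
ARNHEIM_DEGREE = {Motion.SELF: 3, Motion.CAUSED: 4, Motion.CONTINGENT: 4}


def classify_motion(cause: Optional[str], direct_contact: bool) -> Motion:
    """Classify an observed movement by whether, and how, a cause is perceived."""
    if cause is None:
        return Motion.SELF
    return Motion.CAUSED if direct_contact else Motion.CONTINGENT


def requires_perceptual_analysis(motion: Motion) -> bool:
    """Following the text, contingent motion is the case whose cause must be inferred."""
    return motion is Motion.CONTINGENT


print(classify_motion("cue ball", direct_contact=True))        # a struck billiard ball
print(classify_motion("battery drain", direct_contact=False))  # a water level that drops
```

A struck billiard ball is classified as caused motion, whereas a water level that drops while its cause acts from a distance is classified as contingent motion, the case the liveliness framework relies on.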

Blends: Immediate, Successive, and Imaginative

The notion of blending builds on ideas including mental spaces (Fauconnier, 1985) and frames (Fillmore, 1985; Minsky, 1974). Mental spaces are small conceptual models that an individual temporarily constructs for local understanding and action, representing particular scenarios as perceived, imagined, or remembered (Fauconnier & Turner, 2002; Coulson, 2001; Lakoff & Johnson, 2003). A mental space typically contains elements of the scenario and relations between them, which are structured by a frame (cf. schema) based on background knowledge of a long-term concept (i.e., domain) (Coulson, 2001). One accesses a frame (like a template) from memory and fills in local information as elements and relations to form a mental space. By integrating two or more mental spaces through correspondences between their elements and relations, one generates a new blended mental space whose structure is partially projected from each input.
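The template-filling step can be made concrete with a toy data structure. In the hypothetical sketch below, a `Frame` holds roles and relations from long-term knowledge, and `instantiate` fills the roles with local information to produce a `MentalSpace`; the example reuses the trash can scenario discussed earlier. The names and representation are assumptions for illustration, not a formalism proposed by Fauconnier, Coulson, or the author.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """Long-term background knowledge: named roles and relations among them."""
    name: str
    roles: tuple[str, ...]
    relations: tuple[tuple[str, str, str], ...]  # (relation, role_a, role_b)


@dataclass
class MentalSpace:
    """A short-lived model of one particular scenario, structured by a frame."""
    frame: Frame
    elements: dict[str, str]               # role -> local filler
    relations: list[tuple[str, str, str]]  # frame relations restated over the fillers


def instantiate(frame: Frame, local: dict[str, str]) -> MentalSpace:
    """Fill a frame's roles with local information to form a mental space."""
    missing = [role for role in frame.roles if role not in local]
    if missing:
        raise ValueError(f"unfilled roles: {missing}")
    elements = {role: local[role] for role in frame.roles}
    relations = [(rel, elements[a], elements[b]) for rel, a, b in frame.relations]
    return MentalSpace(frame, elements, relations)


throwing = Frame(
    name="throwing something into a container",
    roles=("thrower", "object", "container"),
    relations=(("throws", "thrower", "object"), ("lands_in", "object", "container")),
)
office = instantiate(throwing, {"thrower": "office worker",
                                "object": "crumpled paper",
                                "container": "trash can"})
print(office.relations)
# [('throws', 'office worker', 'crumpled paper'), ('lands_in', 'crumpled paper', 'trash can')]
```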

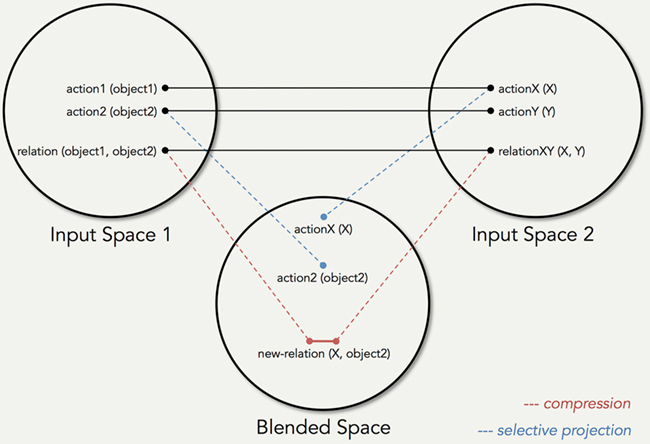

Figure 2 shows a typical conceptual integration diagram. It consists of circles representing mental spaces, each of which contains elements of a scenario, such as actors, objects, and their spatiotemporal relations structured by a frame. A relation can also be an action performed by an actor on an object, represented as a function with brackets. The two horizontally aligned spaces are the inputs to the blend, while the one below them is the output. The horizontal solid lines are links mapping counterparts between the two input spaces. These outer-space links are compressed into inner-space relations inside the blend. Other elements are only selectively projected from either input to the blend, as shown by the dotted lines.

Figure 2. A typical conceptual integration diagram. The circles represent mental spaces containing actors and objects of scenarios. The functions with brackets represent relations or actions between actors and objects. The horizontal lines are mappings of counterparts between the two inputs, while the dotted lines indicate elements partially selected from either input, which together constitute the so-called blend.
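The bookkeeping in Figure 2 (cross-space mappings compressed into single elements, plus selective projection) can be mirrored in a toy function, shown here with the “digging your own grave” example from the introduction. This is a hypothetical sketch: it records which elements enter the blend but does not model the emergent structure that arises when a blend is run, and projecting “failure” from the second input is this sketch’s reading of the example.

```python
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass
class Space:
    name: str
    elements: set[str]


def blend(a: Space, b: Space, mappings: list[tuple[str, str]],
          project_a: Iterable[str] = (), project_b: Iterable[str] = ()) -> Space:
    """Build a toy blended space from two input spaces."""
    for x, y in mappings:
        if x not in a.elements or y not in b.elements:
            raise ValueError(f"mapping {x!r} -> {y!r} refers to a missing element")
    # Cross-space counterparts are compressed into single fused elements.
    fused = {f"{x} = {y}" for x, y in mappings}
    # Other elements enter the blend only if they are selectively projected.
    projected = (set(project_a) & a.elements) | (set(project_b) & b.elements)
    return Space(f"blend of {a.name} and {b.name}", fused | projected)


grave = Space("digging the grave",
              {"living digger", "act of digging", "grave", "dead person"})
failure = Space("unwitting failure",
                {"responsible person", "act of causing failure", "failure"})
own_grave = blend(grave, failure,
                  [("act of digging", "act of causing failure"),
                   ("dead person", "responsible person")],
                  project_b={"failure"})
print(sorted(own_grave.elements))
# ['act of digging = act of causing failure', 'dead person = responsible person', 'failure']
```

The living digger is not projected, so the blend contains a single person who both causes and suffers the failure.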

Blending is emergent in that the output of one blend can become the input to another, forming a conceptual integration network with successive blends. As mentioned, blends can be immediate, such as the successive blends in using the computer desktop interface. Blends may also involve material objects and related actions, like the imaginative blends in playing trash can basketball. In sum, blends based on material and action can be quick, imaginative, and successive, and they form the skeleton of dynamic and metaphorical interface representations in the liveliness approach.
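Because the output of one blend can feed another, a conceptual integration network can be represented as a tree of spaces. The hypothetical sketch below encodes the two successive blends described earlier for the water-level battery meter (a phone filled with water, then using the phone as consuming that water) and reports how many blending steps lie behind the final interpretation. Only the shape of the network is modeled, not the content of each blend.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Space:
    """A mental space; if it has inputs, it is the output of a blend."""
    label: str
    inputs: tuple[Space, ...] = ()

    def depth(self) -> int:
        """Number of successive blending steps behind this space (0 for an input space)."""
        return 0 if not self.inputs else 1 + max(s.depth() for s in self.inputs)

    def lineage(self) -> list[str]:
        """Labels of every space feeding into this one, depth-first, ending with itself."""
        labels: list[str] = []
        for s in self.inputs:
            labels.extend(s.lineage())
        labels.append(self.label)
        return labels


tilting = Space("tilting the phone")
swaying = Space("swaying a container of water")
water_phone = Space("a phone filled with water", (tilting, swaying))
drinking = Space("drinking water from a bottle")
consuming = Space("using the phone consumes its water", (water_phone, drinking))

print(consuming.depth())  # 2
print(consuming.lineage())
```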

The Liveliness Framework

Based on the embodied cognition view of animacy, liveliness in this article refers to dynamic phenomena that echo life, including reaction to stimuli, which obviously depends on direct causes; autonomous transformation, which is independent of others; and contingent changes, which lie between these two ends, with some uncertainty about their causes due to indirectness (Chow, 2013). To make sense of lively phenomena, particularly contingent ones, people sometimes perform blends. For example, we understand a sudden backward jump of a cat by blending it with our own reaction to surprise and infer that the cat is startled (Turner, 1996). Recent advances in digital technology have brought liveliness to material artifacts in the form of dynamic and metaphorical interfaces, whose understanding requires blending with phenomena in not only humans or animals but also plants and even physical or natural environments. For instance, a user of an Apple MacBook might see a light slowly and repeatedly glowing and dimming (in some versions of the product) as something (not necessarily a human) sleeping with a steady breathing rate, and understand that the computer is asleep. We might see the background of a mobile game (e.g., Pokémon Go) change over time from light blue to dark blue as a virtual sky synchronized with the real one. These interactive artifacts feature dynamic phenomena via constant or frequent updates of information (e.g., the status of the computer or the current time of day) at the interface. Sometimes the updates look curious and contingent on uncertain, indirect causes, like the water loss in the aforementioned water-level battery meter, prompting one to reason them out by invoking alternate frames to reanalyze the situation, what Coulson (2001) calls frame-shifting, such as attributing the water loss to consumption or to leaking. One then elaborates the successive blends into imaginative narratives.

The notion of frame-shifting also pertains to Lin and Cheng’s (2014) later wow, which looks at the connection between surprise and meaning making in the use of metaphorical products. Drawing on Krippendorff’s (2006) interaction protocol and Todorov’s (1981) narrative model, Lin and Cheng propose a circular interaction model, which they illustrate with a series of design examples, including a mood lamp that looks like a large baby formula bottle when it is off and like a condom when it is turned on. The act of turning the lamp on and off shifts the frame between love-making and baby-feeding, inviting one to think about their possible connection. This research develops a counterpart that examines the cognitive processes of experiencing metaphorical interfaces that contingently present dynamic information. Since the representations are dynamic and contingent owing to digital technology, frame-shifting may occur at moments that users do not initiate, which can be stimulating in addition to inviting. The liveliness framework thus focuses on the dynamic stimulation of metaphorical representations.

Hence, lively interactive artifacts are digitally enabled material artifacts with the following features:

- presenting information in a metaphorical yet seemingly natural way;

- showing constant or frequent updates on information via changes in the representations;

- exhibiting changes that at times seem contingent on indirect causes, which stimulate frame-shifting.

The interaction with a lively artifact at the sensorimotor level is designed to echo scenarios from a seemingly unrelated domain (i.e., it is metaphorical), triggering imaginative blends for immediate understanding of the operation. At times, updates or changes with uncertain causes (i.e., contingent changes) prompt reinterpretation. One tries to account for the changes by associating them with a comparable scenario from yet another domain with a different frame (i.e., frame-shifting), and then blends that scenario with the initial blended output. Different frames and successive blends result in different imaginative parables that let the user reflect differently on the situation.
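Frame-shifting can be sketched as a lookup: if the frame currently in use cannot account for an observed change, the alternatives that can account for it become candidate frames for the next blend. In this hypothetical sketch, frames are reduced to sets of changes they explain, and the consumption and leaking frames come from the water-meter example above. Returning every fitting frame, rather than choosing one, reflects the uncertainty of contingent causes, which is what keeps several interpretations open.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """A frame reduced to the kinds of change it can account for (a simplification)."""
    name: str
    explains: frozenset[str]


def candidate_frames(current: Frame, change: str, alternatives: list[Frame]) -> list[Frame]:
    """Return the frame(s) under which an observed change makes sense.

    If the current frame already accounts for the change, no shift is needed.
    Otherwise every alternative that accounts for it is kept, because the cause
    of a contingent change is uncertain and several readings may remain open.
    """
    if change in current.explains:
        return [current]
    return [frame for frame in alternatives if change in frame.explains]


container = Frame("swaying a container of water", frozenset({"water moves when tilted"}))
consumption = Frame("drinking water from a bottle", frozenset({"water level drops"}))
leaking = Frame("a leaking container", frozenset({"water level drops"}))

for change in ("water moves when tilted", "water level drops"):
    names = [f.name for f in candidate_frames(container, change, [consumption, leaking])]
    print(change, "->", names)
```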

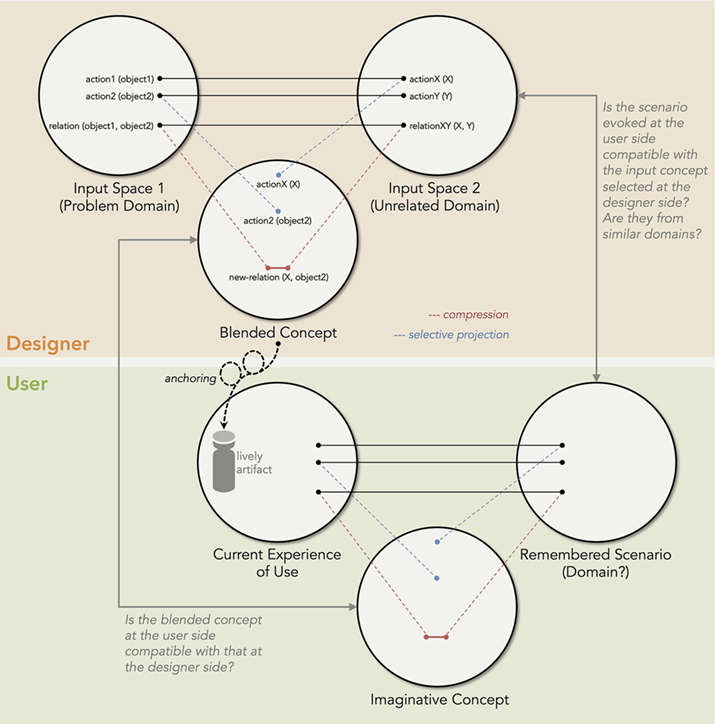

In the post-structuralist thought that followed Barthes and Heath’s Death of the Author (1977), the meaning of a text is no longer dictated by the author but can instead be produced by the reader. By analogy, designers do not have full control over how people understand a design and the way they actually use it (Krippendorff & Butter, 2008), not to mention what reflective meaning they make (Almquist & Lupton, 2009; Sengers & Gaver, 2006). Yet this does not declare the death of the designer. Designers, who start by working in one problem domain (e.g., designing a battery meter for mobile phones), look for mundane concepts from another domain (e.g., holding a bottle of water) to blend, via methods like body-storming, resulting in novel concepts (e.g., a water-filled mobile phone). These are materialized as lively artifacts (e.g., the water-level mobile interface), potentially evoking memories in users (e.g., not having enough drinking water) and hopefully leading to reflection. Users, for their part, recall scenarios from alternate domains when using the artifacts, and these are then blended with their current experiences of use, producing imaginative and embodied concepts. Figure 3 shows the conceptual integration propagated from designers, through lively artifacts, to users. The lively artifacts contingently presenting dynamic information act as anchors of designers’ blended concepts for users’ possible blends, what Chow and Harrell (2013) call elastic anchors.

Figure 3. A template of conceptual integration propagated from designers, through lively artifacts, to users. In the designer (upper) part, Input Space 2 is the mental space constructed by the designer based on a concept selected from a seemingly unrelated domain. The designer’s blended concept is anchored to the user (lower) part via the lively artifact (the spiral anchoring line implying the iterative process), around which the user constructs a mental space of the scenario of use, which is reminiscent of another scenario in the user’s mind.

The concepts selected by designers for the blends may not easily come to users’ minds during use. To align the two ends, designers need to anchor their blended concepts in the artifacts appropriately so that the intended blends can emerge in users. The artifacts should be able to evoke scenarios that are compatible with those intended by the designers. In fact, Figure 3 only represents the basis of the designer-user conceptual integration. With contingent changes, lively artifacts are able to trigger successive blends. Based on these embodied cognition ideas, the liveliness framework pursues two goals: (1) coherent designer-user conceptual integration; and (2) successive blends for reflection.

First, mundane concepts come from everyday life, which is fundamentally tied to our sensorimotor experience of the everyday world, including bodily movements, interactions with moving objects, and perception of changes, as discussed by Johnson (2008) from the perspective of embodied cognition. The liveliness framework includes a protocol of cognitive processes based on user action and the perception of movements and changes at the interface. The protocol allows designers and researchers to identify the sensorimotor phenomena involved in using an artifact and to scrutinize the correspondences in the intended blends, which can then be compared with empirical findings from experiments involving users. The results highlight crucial factors in achieving coherent conceptual integration propagated from designers to users.

Secondly, contingent changes are integral to our experience of the everyday world. In the concept of animacy, contingency is the indirect, uncertain link between entities across spatial or temporal gaps (Mandler, 1992). This uncertainty prompts people to consider different reasons and perspectives. Through frame-shifting, one looks for another scenario with similar changes and matches it with the current uncertain scenario, resulting in a new blend at the next level. Through different frames and blends, one inevitably looks at the situation from different perspectives and considers other assumptions. This corresponds to the second level of reflection in Fleck and Fitzpatrick’s (2010) terms. If the user is shocked by the new frame compared with the initial one, he or she may even reach the third level of reflection, challenging previously unconscious assumptions.

For coherent designer-user conceptual integration wherein successive blends lead to reflection, the introduction and use of lively interfaces are delineated in two stages, each of which involves four cognitive processes, resulting in different levels of understanding. This protocol assists designers or researchers in predicting possible user experiences and designing experiments for collecting samples from target users.

At the initial stage of use, immediate understanding of operation takes place:

- 1.1 Knowing action possibilities—The user becomes aware of possible motor actions on the artifact via perception, recognition, or exploration. This process is comparable to Krippendorff and Butter’s (2008) recognition and exploration stages, Norman’s (2002) perceived affordances, as well as Wensveen and colleagues’ (2004) augmented feedforward.

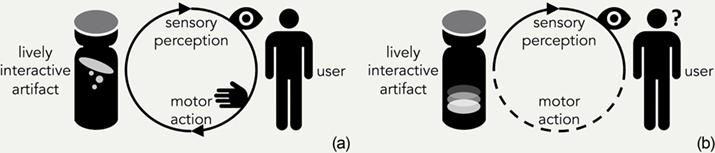

- 1.2 Receiving quick feedback—The user perceives quick sensory feedback based on the action taken, forming a feedback loop at the sensorimotor level [Figure 4(a)], which adds immediacy to Norman’s (2002) visible feedback. Although mainly augmented, the feedback looks inherent, in Wensveen and colleagues’ (2004) terms.

- 1.3 Triggering immediate blends—The augmented yet seemingly inherent sensorimotor experience echoes a remembered or mundane scenario from a different domain, triggering an immediate blend and yielding an imaginative concept of the operation. Details of the blend are introduced in the next section.

- 1.4 Becoming second nature—After repeated use, the operation becomes second nature, akin to Heidegger’s ready-to-hand and Krippendorff and Butter’s (2008) reliance stage. Gaps between action and feedback are bridged by the blend and go unnoticed, which offers an alternative approach to Wensveen and colleagues’ (2004) natural coupling. The user perceives a sense of directness at this stage.

Figure 4. (a) A sensorimotor feedback loop between the user and the artifact at the initial stage.

(b) Contingent changes cause the user to become curious at a later stage.

At a later stage of use, further imagination and reflection come about:

- 2.1 Noticing contingent changes—The user notices changes over time, and becomes curious about the causes or meaning [Figure 4(b)] in accordance with the imaginative concept in Stage 1.3. This is comparable to Heidegger’s present-at-hand (Dourish, 2001) or Lin and Cheng’s (2014) later wow.

- 2.2 Invoking interpretive frames—From a different domain, the user recalls another remembered or imagined scenario with similar changes and causes, in an attempt to account for the perceived changes.

- 2.3 Elaborating successive blends—The remembered or imagined scenario is analogically mapped onto the imaginative and embodied concept from Stage 1.3 plus the perceived changes. Both become inputs to the next blend, yielding a narrative elaborated from the concept together with the causes and the resultant changes. The next section illustrates this blending process.

- 2.4 Reflecting on the situation—By invoking different frames, the user reviews possible explanations for the situation, which invite him or her to see it from different perspectives.
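For designers or researchers who want to keep predictions and interview codes aligned with the protocol, the two stages can be recorded as a simple lookup structure. The following is a minimal Python sketch under our own assumptions: the names `Stage`, `Process`, `PROTOCOL`, `processes_in`, and `missing_predictions` are hypothetical and not part of the framework; only the stage codes and process names come from the protocol above. The helper lists the processes for which no prediction has been written yet.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    INITIAL = "immediate understanding of operation"
    LATER = "further imagination and reflection"


@dataclass(frozen=True)
class Process:
    code: str    # protocol code, e.g. "1.3"
    stage: Stage
    name: str


# The eight cognitive processes of the protocol, in order.
PROTOCOL = (
    Process("1.1", Stage.INITIAL, "Knowing action possibilities"),
    Process("1.2", Stage.INITIAL, "Receiving quick feedback"),
    Process("1.3", Stage.INITIAL, "Triggering immediate blends"),
    Process("1.4", Stage.INITIAL, "Becoming second nature"),
    Process("2.1", Stage.LATER, "Noticing contingent changes"),
    Process("2.2", Stage.LATER, "Invoking interpretive frames"),
    Process("2.3", Stage.LATER, "Elaborating successive blends"),
    Process("2.4", Stage.LATER, "Reflecting on the situation"),
)


def processes_in(stage):
    """Return the processes belonging to one stage, in protocol order."""
    return [p for p in PROTOCOL if p.stage is stage]


def missing_predictions(predictions):
    """Return codes of processes that still lack a written prediction.

    `predictions` maps a protocol code (e.g. "1.1") to free-text notes.
    """
    return [p.code for p in PROTOCOL if not predictions.get(p.code)]


# Usage: a partial draft of predictions for the water-level case.
draft = {
    "1.1": "tilts the phone to see if the water reacts",
    "1.3": "blend with holding a bottle of water",
}
print(missing_predictions(draft))
# ['1.2', '1.4', '2.1', '2.2', '2.3', '2.4']
```

Keeping the protocol as data rather than prose makes it easy to reuse the same codes later when transcripts are coded; the limitation that Stage 2 may iterate is not modelled here.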

Sketching Conceptual Integration Diagrams

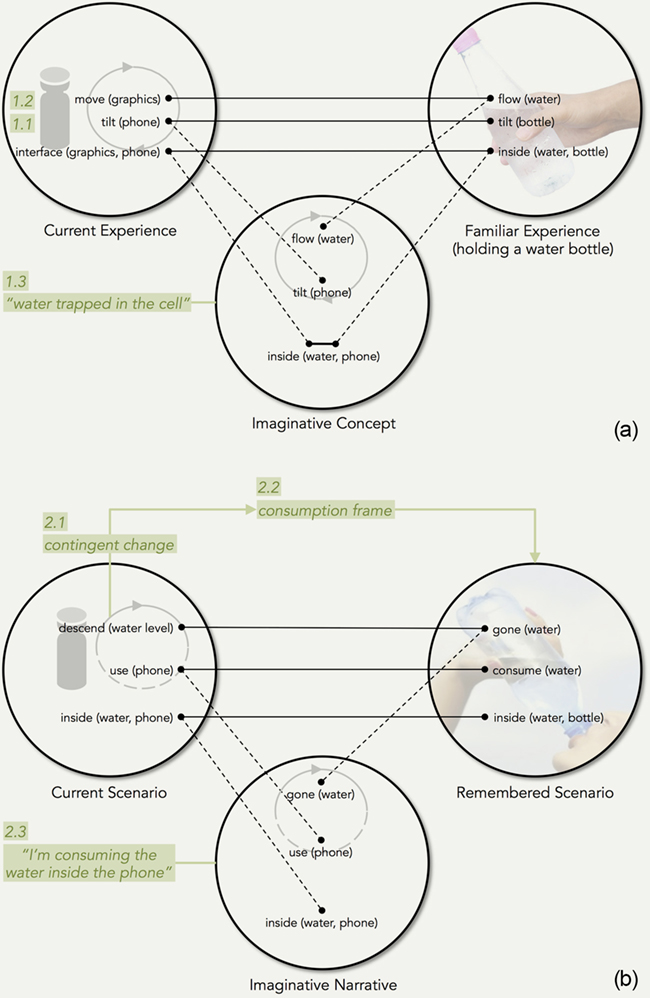

To identify the cognitive processes that may take place in the use of a lively interactive artifact, designers or researchers need an analytical tool to scrutinize the possible perception, action, and conceptual integration. For this purpose, Fauconnier and Turner’s (2002) conceptual integration diagrams (see Figure 2) are extended. To sketch an immediate blend taking place at Stage 1.3 of the protocol, one adds to the integration diagram, as in Figure 5(a), the sensorimotor feedback loop [from Figure 4(a)], which envelops motor action (Stage 1.1, e.g., tilting the cell phone) and sensory perception (Stage 1.2, e.g., seeing the waves) in the mental space. The left input space is the current experience of using a lively artifact, which is analogous to a familiar experience from another domain (e.g., holding a bottle of water), denoted by the right input space. The result of the blend is an imaginative and embodied concept (e.g., water trapped in the cell phone).

At a later stage, the user elaborates successive blends (Stage 2.3). To sketch the possible blends, one continues with the blended output in Figure 5(a), which becomes an input (the left input space) to the next blend, as illustrated in Figure 5(b). The feedback loop from Stage 1.2 is fading in the user’s mind [as shown in Figure 4(b)], but contingent changes (Stage 2.1, e.g., the water level descends) emerge from the lively artifact and prompt the user to invoke an interpretive frame (Stage 2.2, e.g., resource consumption). One may recall a remembered or imagined scenario structured by the frame, the right input space in Figure 5(b), as represented by the arc from left to right, such as checking how much water is left in a bottle and then assessing whether it is enough for the rest of the day. This remembered or imagined scenario and the current experience of contingency form a new blend, giving rise to an imaginative narrative (Stage 2.3, e.g., consuming too much water or battery and realizing that what remains is not enough for the day) and eliciting emotions (e.g., anxiety) according to the user’s concerns (e.g., needing water or battery power during the day).

Figure 5. (a) An immediate blend at the initial stage of using the water-level battery meter.

(b) A possible blend at a later stage of using the water-level battery meter.
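The extended diagrams can also be written down as nested data, which makes explicit that the output of the immediate blend becomes the left input of the successive blend. The sketch below is hypothetical: `MentalSpace` and `blend` are illustrative names, and joining counterpart fillers with a slash is only a bookkeeping device for the diagram, not a model of how blending happens in the mind. The example fillers are taken from the water-level case described above.

```python
from dataclasses import dataclass


@dataclass
class MentalSpace:
    label: str
    elements: dict  # role -> filler, e.g. {"device": "cell phone"}


def blend(left, right, mapping, label):
    """Record a blended space built from two input spaces.

    `mapping` pairs a role in `left` with its counterpart role in `right`.
    Counterparts are fused into one element; unmapped elements of either
    input are projected unchanged (the left one is kept on a name clash).
    """
    mapped_right = set(mapping.values())
    out = {
        l_role: f"{left.elements[l_role]} / {right.elements[r_role]}"
        for l_role, r_role in mapping.items()
    }
    for role, filler in left.elements.items():
        out.setdefault(role, filler)
    for role, filler in right.elements.items():
        if role not in mapped_right:
            out.setdefault(role, filler)
    return MentalSpace(label, out)


# Figure 5(a): immediate blend at Stage 1.3.
current = MentalSpace("Using the phone", {
    "device": "cell phone",
    "action": "tilting the phone",
    "percept": "seeing the waves",
})
familiar = MentalSpace("Holding a bottle of water", {
    "container": "bottle",
    "action": "tilting the bottle",
    "content": "water moving",
})
immediate = blend(current, familiar,
                  {"device": "container", "action": "action", "percept": "content"},
                  "Water trapped in the cell phone")

# Figure 5(b): the blended output plus the contingent change (Stage 2.1)
# becomes the left input of the successive blend.
left2 = MentalSpace(immediate.label,
                    {**immediate.elements, "change": "water level descends"})
remembered = MentalSpace("Checking the water left in a bottle", {
    "change": "water running low",
    "concern": "enough for the rest of the day?",
})
successive = blend(left2, remembered, {"change": "change"},
                   "Not enough water or battery for the day")
print(successive.elements["change"])  # water level descends / water running low
```

Which elements get projected is, in practice, a judgment the analyst makes while sketching; the "project everything unmapped" rule here is only a convenient default.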

Two Case Studies

To illustrate the application of the protocol, two design cases are studied: the water-level battery meter interface, which inspired the liveliness framework, and a newly designed system from a project led by the author. Both cases aim to provoke reflection on consumption, one of limited resources and the other of harmful substances. Each case study starts with the designer’s analogical concept and the designed interface, followed by a prediction of user experiences according to the protocol. To investigate possible imagination and reflection in users, a study may invite participants to experience a prototype in a laboratory setting or in the field. Interviews are then conducted with the participants during or after use. The protocol provides an outline for the interview questions. For instance, for Stage 1.1 the interviewer may ask: “What did you do on the interface when the phone rang the first time? Why?” This probes what the participant has perceived, assumed, known, and felt. For Stage 1.3, the interviewer may ask: “Please describe what you saw after you did that.” This shows how the participant’s verbal response may reveal imagined scenarios that have come to mind. For Stage 2.1, the interviewer may ask: “Did you notice anything about the level? What did you think?” This checks how the participant relates to the contingent changes. In the laboratory, participants’ immediate responses and behavior during use can also be observed, and the observational data can supplement the interviews. The interviews and observations are transcribed, and the data are coded according to keywords in the protocol: motor action for Stage 1.1, sensory feedback for Stage 1.2, scenario from a different domain for Stage 1.3, scenario with similar changes for Stages 2.1 and 2.2, blended narrative with inferred causes for Stage 2.3, and different perspectives for Stage 2.4. The coded data under each keyword (e.g., similar changes) can then be clustered into groups, implying different frames invoked among the participants.
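The coding and clustering step can be sketched as follows, assuming each transcribed segment has already been coded by an analyst and given a cluster label. The codebook mirrors the protocol keywords listed above; the function name `cluster` is hypothetical, and the segments in the usage example are toy input for illustration, not data from the studies.

```python
from collections import defaultdict

# Protocol keywords used as codes, with the stage(s) each one covers.
CODEBOOK = {
    "motor action": ("1.1",),
    "sensory feedback": ("1.2",),
    "scenario from a different domain": ("1.3",),
    "scenario with similar changes": ("2.1", "2.2"),
    "blended narrative with inferred causes": ("2.3",),
    "different perspectives": ("2.4",),
}


def cluster(segments, code):
    """Group participants by cluster label for one code.

    `segments` is an iterable of (participant, code, cluster_label) tuples;
    the cluster label is assigned by the analyst, not inferred here.
    """
    if code not in CODEBOOK:
        raise KeyError(f"unknown code: {code!r}")
    groups = defaultdict(set)
    for participant, seg_code, label in segments:
        if seg_code == code:
            groups[label].add(participant)
    return {label: sorted(members) for label, members in groups.items()}


# Toy input for illustration only; not data from the studies.
segments = [
    ("P1", "scenario with similar changes", "consumption"),
    ("P2", "scenario with similar changes", "time passing"),
    ("P3", "scenario with similar changes", "consumption"),
    ("P1", "scenario from a different domain", "handheld container"),
]
print(cluster(segments, "scenario with similar changes"))
# {'consumption': ['P1', 'P3'], 'time passing': ['P2']}
```

Each resulting group stands for one frame invoked among the participants; counting members per group gives figures such as "slightly more than half of the participants".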

The experiments involving participants are not meant to verify the liveliness framework, which is grounded in tenable embodied cognition ideas, but to investigate the potential of each lively artifact to provoke imagination and reflection, and to compare the designer’s intent with particular users’ responses. The two sides may align perfectly, or the user side may span a spectrum of imagination that, though unintended by the designer, can still be coherent as far as the reflection is concerned. Designers or researchers thus become aware of various possible interpretations leading toward the intended reflection and are able to reinforce the corresponding dimensions in the designs. In worse cases, the successive blending process halts at the user side, which probably points to design issues in the contingent changes. Hence, the main value of this approach is to provide a way to analyze and improve a design intended for reflection, which also justifies the relatively small number of participants in each study. Empirical findings of the studies have been published elsewhere (Chow, 2016; Chow, Harrell, Wong, & Kedia, 2015). This article does not detail the experiments and findings but presents the major results of the data clustering, which illustrate the spectrum of imagination, interpretation, and reflection among the participants. This demonstrates how the protocol guides the prediction of user experiences and the collection of samples for better anchoring of designers’ blended concepts via lively artifacts.

The Water-Level Battery Meter: Reflection on Resource Consumption

The mobile phone featuring the water-level battery meter was a project of the Japanese design studio, nendo. The official web page of the project describes the design concept of the phone, which is based on “a drinking glass, a form familiar to the hand” (for more details, please check http://www.nendo.jp/en/works/n702is-2/). The designers’ imaginative blends can be seen in many parts of the text, such as “the earphone jack lets the user ‘drink up’ music like you would put a straw in your glass”, “when you shake it, the alarm stops”, and “the ‘water level’ goes down as the battery runs out”. Based on the text, the cognitive processes taking place during use can be delineated as follows.

At the initial stage of use:

- 1.1 Knowing action possibilities—The user perceives the subtle water movement on the interface of the phone in hand, and impulsively tilts the phone to see if the water is reactive.

- 1.2 Receiving quick feedback—The user sees the water moving in response to the tilt, forming a sensorimotor feedback loop. When the phone rings, the user shakes it until the alarm stops, which is another sensorimotor experience.

- 1.3 Triggering immediate blends—The tilt action and the water movement resemble the mundane experience of holding a bottle of water. The phone corresponds to the bottle, and the water graphics (including the waves and bubbles) to the real water. The immediate blend [see Figure 5(a)] is an imagined act of moving the water inside the phone. The main gap is the lack of weight shifting during the tilt.

- 1.4 Becoming second nature—After receiving a few unwanted incoming calls, the user gets used to shaking the phone to stop the ringing. The operation becomes second nature. Gaps such as the lack of weight shifting go unnoticed.

At a later stage of use:

- 2.1 Noticing contingent changes—The user notices the drop in the water level and becomes curious about how and why the water is gone.

- 2.2 Invoking interpretive frames—The drop prompts the user to invoke a frame about consumption, which structures the mental space of a remembered or imagined scenario, such as having very little water left now because of drinking too much earlier.

- 2.3 Elaborating successive blends—The scenario of insufficient water is compared to the current battery situation. The water left inside the phone corresponds to the battery power. Based on the blended space in Stage 1.3, a possible successive blend [see Figure 5(b)] results in a narrative that the power-water, or juice, left in the phone is insufficient for now because of earlier over-consumption.

- 2.4 Reflecting on the situation—The consumption frame and the resulting narrative suggest that the user has consumed too much juice. One may regret, feel anxious, and try to save the juice.

Laboratory experiments are conducted with 20 participants (6 females and 14 males; 6 aged between 18 and 25, 9 between 25 and 35, and 5 above 35). Each participant is asked to stay alone in a sitting-room environment with an iPhone playing audio content intended to engage the participant. The phone runs a simulated implementation of the water-level battery meter (see Figure 1). The participant is asked to cancel any incoming calls from an Unknown caller by shaking the phone. The participant is also reminded to “pay attention to the interface, which shows the battery level” (exact wording). The water surface starts at a level of 70% on the screen and continuously descends to the bottom in only 16 minutes. Interviews then follow. The clustered observation and interview data can be summarized as follows.
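As a rough check on how compressed the simulated drain is, a drop of 70 percentage points in 16 minutes works out to about 4.4 points per minute if the descent is linear. The text states only the start level and the duration, so the linear profile in this sketch is an assumption, and `water_level` is a hypothetical name rather than part of the actual prototype.

```python
START_LEVEL = 70.0   # level at which the water surface starts (from the text)
DURATION_MIN = 16.0  # minutes until the surface reaches the bottom (from the text)


def water_level(t_min):
    """Simulated level after t_min minutes, assuming a linear descent."""
    if t_min <= 0:
        return START_LEVEL
    if t_min >= DURATION_MIN:
        return 0.0
    return START_LEVEL * (1 - t_min / DURATION_MIN)


rate = START_LEVEL / DURATION_MIN  # percentage points lost per minute
print(rate, water_level(8))  # 4.375 35.0
```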

Different domain. Eleven out of 20 participants seem to recall liquid containers implying hand interactions, while 8 participants mention fixed containers. The lack of hand interactions in the latter associations is probably due to incomplete modalities represented by the interface. The interface shows visuals reactive to hand movements, yet there is no sound or force feedback. The visual perception overshadows the motor action.

Similar changes. Almost half of the participants infer that the drop results from leaking, draining, or the passing of time, as indicated by an hourglass, in which the gradual loss of sand is independent of the user’s behavior. Slightly more than half of the participants associate it with consumption, although the resources consumed vary from water or oil to food or even money.

Blended narrative. Participants metaphorically speak of draining, drinking, eating, consuming intangible (e.g., money and time) or even virtual (game energy) resources. The variation in the forms of resources is surprising. A few of them even imagine how to replenish the water.

The major findings here are that the imagined liquid container may not be handheld, and that the interpretive frames invoked, such as time passing, can involve a kind of passive consumption. Sample quotes from the interviews are included in Table 1.

Table 1. Sample quotes from interviews of participants who have experienced the water-level interface in the laboratory.

| Stage & Code | Clustering |
| --- | --- |
| 1.3 | Recalling liquid containers that are usually held in hand; recalling liquid containers that are not handheld |
| 2.1 & 2.2 | Invoking a frame about consumption; invoking a frame about leaking or draining; invoking a frame about time passing |
| 2.3 | Imagining draining or leaking; imagining drinking; imagining eating; imagining consuming virtual resources; imagining replenishment after consumption |

Lock Up: Reflection on Substance Consumption

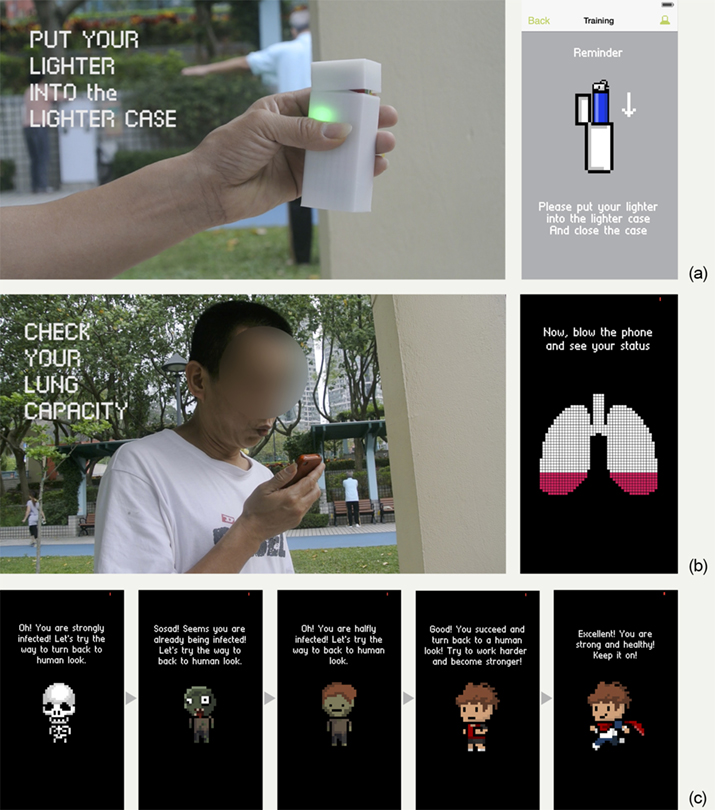

Lock Up is an interactive system, comprising a mobile app and a smart case, that aims to assist users in controlling their smoking habit. The initial idea originated from a design student’s (Ms. Lui Yan Yan, School of Design, The Hong Kong Polytechnic University) graduation project, which was supervised and then further developed by the author following the liveliness framework. Lock Up requires the user to put his or her lighter in the case and lock it up, a heuristic way to estimate how much time has passed since the user last smoked. After closing the hinged cover firmly, the user sees the LED (light-emitting diode) lights on the case start to flash [Figure 6(a)]. Meanwhile, the user blows at the smartphone for a lung capacity test [Figure 6(b)]. The app responds with a default result: a skeleton character image (i.e., avatar) appears with a message saying that the user has been seriously infected. After doing some physical exercise, the user sees improvement in the app, from the skeleton to a zombie, then a half-zombie-half-human, a healthy human, and so on [see Figure 6(c)]. An immediate understanding arises in the user’s mind that locking up the lighter and exercising translate into progress. The user may take out the lighter at any time, but the lung capacity test result then quickly relapses to the skeleton, even if the user keeps exercising. One may infer that the lighter is the culprit and want to stay away from it. The design draws on the concept that releasing the demon is detrimental.

Figure 6. (a) Lock Up requires the user to put the lighter into the case, and the lights on the case start flashing. (b) Lock Up asks the user to blow at the smartphone for a lung capacity test. (c) The lung test result starts with a skeleton, followed by a zombie, a half-zombie-half-human, a healthy human, and so on.
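The avatar progression in Lock Up behaves like a small state machine. The Python sketch below is one possible reading of the behavior described above, with our assumptions flagged: it advances one avatar per round of exercise while the lighter is locked, keeps the avatar unchanged when the user has not exercised (a case the text does not specify), and returns to the skeleton whenever the lighter is out. Only the four named avatars are modelled; `next_avatar` and `replay` are hypothetical names, not the app’s actual code.

```python
# Avatars named in the text, from worst to best; stages beyond these
# ("and so on") are not modelled.
AVATARS = ("skeleton", "zombie", "half-zombie-half-human", "healthy human")


def next_avatar(current, lighter_locked, exercised):
    """Lung-test result after one round (hypothetical rules, see above)."""
    if not lighter_locked:
        return AVATARS[0]  # relapse, even if the user kept exercising
    i = AVATARS.index(current)
    if exercised:
        return AVATARS[min(i + 1, len(AVATARS) - 1)]  # one step, capped
    return current  # assumption: no exercise, no change


def replay(rounds, start=AVATARS[0]):
    """Apply next_avatar over a list of (lighter_locked, exercised) rounds."""
    history = [start]
    for locked, exercised in rounds:
        history.append(next_avatar(history[-1], locked, exercised))
    return history


print(replay([(True, True), (True, True), (False, True)]))
# ['skeleton', 'zombie', 'half-zombie-half-human', 'skeleton']
```

The relapse rule is what creates the contingent change at Stage 2.1: the user keeps exercising, yet the result drops back, which invites a search for another cause.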

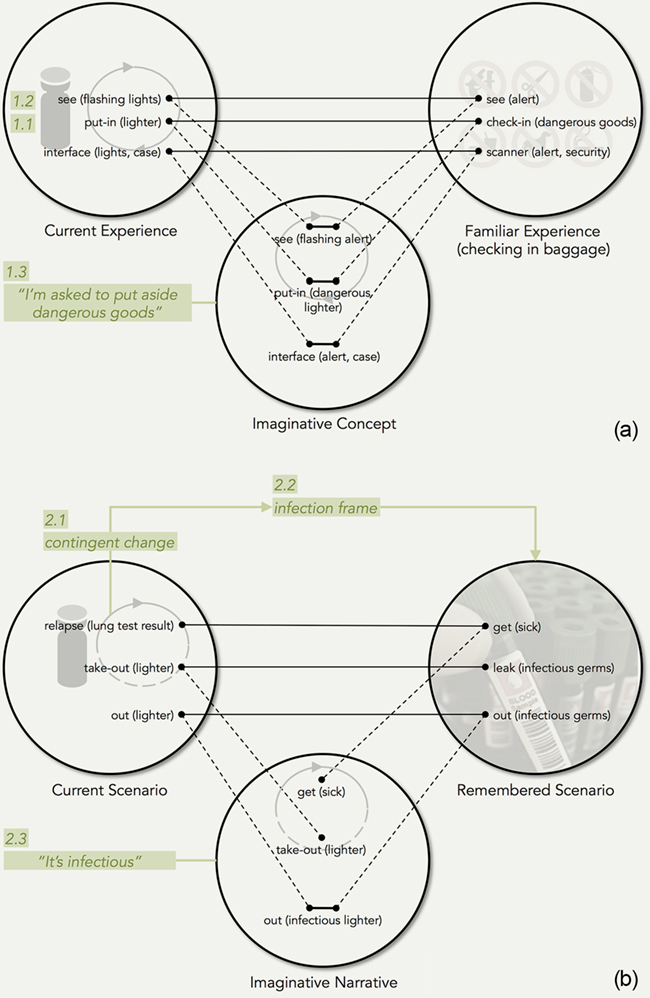

At the initial stage of use:

- 1.1 Knowing action possibilities—Seeing the size and shape of the smart case, the user knows that the cavity fits the lighter.

- 1.2 Receiving quick feedback—The user is then invited to blow on the phone for a lung capacity test. The app displays the blow volume graphically in real time over a lung-shaped image, followed by a skeleton avatar as the result, which may shock the user. The user is advised to put the lighter into the case and run away. Closing the cover tightly makes the lights on the case flash.

- 1.3 Triggering immediate blends—The acts of putting the lighter into the case, covering it firmly, and running are reminiscent of one’s experience of being separated from the lighter on some occasions, such as checking in baggage. The flashing lights seem to suggest that the lighter is still active. The immediate blend is an imagined situation that the lighter needs to be locked up [see Figure 7(a) for a possible blend].

- 1.4 Becoming second nature—The user keeps doing exercise and checking lung capacity. The health status is improving gradually, from the skeleton, to the zombie, and so on. The user is motivated.

If the user takes the lighter out of the case, the flashing lights go off. The user’s lung capacity test result quickly relapses to the skeleton, even though one keeps doing exercise. At this later stage:

- 2.1 Noticing contingent changes—The user’s avatar turns back to the skeleton, which makes the user curious about the reason. One may realize that the lighter is not in the case.

- 2.2 Invoking interpretive frames—The user attributes the curious changes to the lighter and invokes a frame about infection, which structures the mental space of a remembered or imagined scenario, for example, leaking an infectious virus or hazardous chemicals.

- 2.3 Elaborating successive blends—The constructed scenario involving something hazardous and the current puzzling situation, developed from the blend in Stage 1.3, in which the lighter is out of the case, become inputs to the next blend [see Figure 7(b)]. The output is an imaginative narrative that the infectious lighter is dragging the user’s health status down.

- 2.4 Reflecting on the situation—The assumption that the lighter is just a device is challenged. The user now sees the lighter differently, as the actual source of infection, and may want to stay away from it.

Figure 7. (a) An immediate blend taking place at the initial stage of using Lock Up.

(b) A possible succeeding blend at a later stage of using Lock Up.

The laboratory experiment first asks a participant to launch the app prototype and blow at the smartphone. The lung test result is scripted to be the skeleton. The participant is then told to put his or her lighter into the case and close the hinged cover firmly by wrapping it with medical tape. The lights on the case then start to move from top to bottom and back. The participant next runs on a treadmill for two rounds of about three minutes each, each round followed by a lung test. The results are scripted to be the zombie and then the half-zombie. The participant is intermittently tempted to take the lighter out. The subsequent interview is divided into two sessions corresponding to the two stages of the protocol. After the first session, the participant takes the lung test again and gets the skeleton, because the lighter is not in the case. The second session then follows. Six participants (all male smokers; one aged between 18 and 25, and the others above 25 and having smoked for more than 3 years) have taken the test. The questionnaire results show that one of them is preparing to quit smoking and the others are contemplating it. After clustering, the major findings are as follows.

Different domain. Three participants mention moments of having no access to lighters on planes, where lighters are classified as dangerous goods and forbidden. The immediate blend between putting the lighter into the case and the experience of passing through airport security results in the message that one should put aside the dangerous lighter. Two participants relate the flashing lights to mundane machine operation. Yet the lights still invite moments of vigilance in most participants.

Similar changes. Nearly all participants initially attribute the relapse to the break in exercising. One of them believes that the chemicals generated by exercise have faded away. All participants later realize, with some shock, that the lighter brings disease and makes them sick.

Blended narrative. One participant quickly realizes that the lighter is infecting him and thinks that putting it in the case could save him. In addition, some participants see different roles of the lighter in relation to cigarettes (e.g., key-and-lock partnership) or exercise (e.g., demon vs. angel).

The major findings from these experiments include that the flashing lights may suggest possible danger. With frame-shifting, the danger becomes the disease that demonizes the lighter. Sample quotes from the interviews are listed in Table 2.

Table 2. Sample quotes from interviews of participants who have experienced Lock Up in the laboratory.

| Stage & Code | Clustering |
| --- | --- |
| 1.3 | Relating to moments on planes; relating to machine operation |
| 2.1 & 2.2 | Invoking a frame about chemicals; invoking a frame about weight control |
| 2.3 | Seeing the infectious lighter; seeing different roles of the lighter |

The two design cases above show that nuances between the predicted and the sampled experiences are common. In the water-level battery meter case, the designers’ concept is based on a drinking glass familiar to the hand, while some blends in participants involve fixed containers. The interpretive frames in our prediction concern resource consumption, yet those invoked in some participants concern passive consumption, such as the passing of time. In the case of Lock Up, the flashing lights are intended to represent the lighter’s resistance to being locked up, but in general they look like a machine signal (e.g., processing or alert) to the participants. The nuances in fact point to the causes of those interpretations, such as incomplete modalities in sensory feedback (e.g., the drop in water level inside the phone produces no change in weight) and subtleties in animation dynamics (e.g., the lights on the case move too mechanically). The findings motivate iteration in the anchoring process (see Figure 3). For example, in Lock Up, the immediate understanding of fast-flashing lights as an alert signal can support frame-shifting toward reinterpreting the lighter as the source of infection, which still leads to a user reflection similar to that intended by the design.

Conclusion and Future Work

This article introduces the liveliness framework with a protocol of cognitive processes for designers or researchers to predict possible imaginations and reflections in users and to formulate plans for experiments involving participants. The experiments and participant interviews generate real and particular instances of interpretive frames and successive blends, with reasons articulated in terms of sensorimotor phenomena enabled by lively artifacts. By comparing the participant responses with the design intents, we can identify potential alternate frame-shifting and blending in users, which may deviate slightly from the original design intents but still lead to the intended reflection. In other words, designers propose or predict possible cognitive journeys, whereas participants may counter-propose alternate paths toward the same destination.

There are limitations in the current approach. First, imagination and reflection stimulated by lively artifacts may take place at any moment of use. The proposed protocol of cognitive processes is currently a heuristic model that roughly divides the meaning-making journey into two stages. In fact, blending is emergent, so the elaboration or shifting of blends may continue. In other words, the second stage of the protocol can iterate as long as contingent changes continue to unfold. Secondly, because meaning making unfolds over time, the laboratory experiments suffer not only from the isolated physical setting but also from the short and segmented time frame. The former is a well-known issue of laboratory research. The latter prevents the accumulation of data that emerge over a prolonged period of use. Much therefore depends on heuristics in the design of the experiments: one needs to thoughtfully condense a typical journey of use into a viable and sensible time frame in the laboratory. Ideally, one would build minimum viable prototypes for participants to use in daily life for a period of time and sample the experience data via different means.

As mentioned, user experience research has developed to cover specific facets. Wensveen et al. (2004) explore how far users may directly act on and make sense of electronic products as they do with mechanical ones. Liang (2012) looks at unexpected yet meaningful experiences in the use of interactive artifacts. Lin and Cheng (2014) focus on surprise elicited at different stages of interaction with metaphorical products. This research fills a gap by addressing the imaginative meaning-making processes of operating interactive artifacts with dynamic and metaphorical interfaces. Liveliness, as embodied by the latest multimodal sensing and actuating technologies in the form of lively artifacts, can present personally relevant information (e.g., personal device status, as in the water-level battery meter, or records of user behavior, as in Lock Up) in a metaphorical yet natural way that stimulates user reflection at different moments in daily life. As the two presented design cases have shown, the liveliness framework can be applied to designing reflective representations of information concerning users’ habitual behaviors, such as consumption, choice, or even addiction. Designing lively artifacts and interfaces for user reflection on behavior is part of the research agenda of liveliness.

The research currently focuses on the later part of the designer-user conceptual integration network via lively artifacts (Figure 3), including the predicted and sampled user experiences. The presented approach assumes that designers working in a problem domain select a known concept from another domain for blending and then anchor the blended concept in a lively artifact. One might ask: How do designers identify an appropriate concept and domain for the blend? How can one compare different concepts from different domains? What dimensions of sensorimotor phenomena should be considered in the comparison? How can one design the contingent changes? What are the guidelines for anchoring the blended concept at the designer side before testing at the user side? These and similar questions concern the upper part of Figure 3, that is, the cognitive processes taking place at the designer side. This research will continue to look into these aspects in the future.

Acknowledgements

I gratefully acknowledge the grant from Hong Kong General Research Fund (PolyU 5412/13H).

References

- Almquist, J., & Lupton, J. (2009). Affording meaning: Design-oriented research from the humanities and social sciences. Design Issues, 26(1), 3-14.

- Arnheim, R. (1974). Art and visual perception: A psychology of the creative eye. Berkeley, CA: University of California.

- Barthes, R., & Heath, S. (1977). Image, music, text. London, UK: Fontana.

- Benyon, D. (2012). Presence in blended spaces. Interacting with Computers, 24(4), 219-226.

- Bolter, J. D., & Gromala, D. (2004). Transparency and reflectivity: Digital art and the aesthetics of interface design. In P. A. Fishwick (Ed.), Aesthetic computing (pp. 1-7). Cambridge, MA: MIT.

- Chow, K. K. N. (2013). Animation, embodiment, and digital media: Human experience of technological liveliness. London, UK: Palgrave Macmillan.

- Chow, K. K. N. (2016). Lock up the lighter: Experience prototyping of a lively reflective design for smoking habit control. In Proceedings of the 11th International Conference on Persuasive Technology (pp. 352-364). Cham, Switzerland: Springer.

- Chow, K. K. N., & Harrell, D. F. (2012). Understanding material-based imagination: Cognitive coupling of animation and user action in interactive digital artworks. Leonardo Electronic Almanac, 17(2), 50-65.

- Chow, K. K. N., & Harrell, D. F. (2013). Elastic anchors for imaginative conceptual blends: A framework for analyzing animated computer interfaces. In M. Borkent, B. Dancygier, & J. Hinnell (Eds.), Language and the creative mind (pp. 427-444). Stanford, CA: CSLI.

- Chow, K. K. N., Harrell, D. F., Wong, K. Y., & Kedia, A. (2015). Provoking imagination and emotion with a lively mobile phone: A user experience study. Interacting with Computers, 28(4), 451-461.

- Coulson, S. (2001). Semantic leaps: Frame-shifting and conceptual blending in meaning construction. Cambridge, UK: Cambridge University.

- Coulson, S., & Fauconnier, G. (1999). Fake guns and stone lions: Conceptual blending and privative adjectives. In B. Fox, D. Jurafsky, & L. Michaelis (Eds.), Cognition and function in language (pp. 143-158). Stanford, CA: CSLI.

- Dourish, P. (2001). Where the action is: The foundations of embodied interaction. Cambridge, MA: MIT.

- Dunne, A., & Raby, F. (2001). Design noir: The secret life of electronic objects. Basel, Switzerland: Birkhäuser.

- Fauconnier, G. (1985). Mental spaces: Aspects of meaning construction in natural language. Cambridge, MA: MIT.

- Fauconnier, G. (2001). Conceptual blending and analogy. In D. Gentner, K. J. Holyoak, & B. N. Kokinov (Eds.), The analogical mind: Perspectives from cognitive science (pp. 255-285). Cambridge, MA: MIT.

- Fauconnier, G., & Turner, M. (2002). The way we think: Conceptual blending and the mind’s hidden complexities. New York, NY: Basic Books.

- Fillmore, C. J. (1985). Frames and the semantics of understanding. Quaderni di Semantica, 6(2), 222-254.

- Fleck, R., & Fitzpatrick, G. (2010). Reflecting on reflection: Framing a design landscape. In Proceedings of the 22nd Conference of the Computer-Human Interaction Special Interest Group of Australia on Computer-Human Interaction (pp. 216-223). New York, NY: ACM.

- Forlizzi, J., & Battarbee, K. (2004). Understanding experience in interactive systems. In Proceedings of the 5th Conference on Designing Interactive Systems (pp. 261-268). New York, NY: ACM.

- Gaver, W. W., Bowers, J., Boucher, A., Gellersen, H., Pennington, S., Schmidt, A., …Walker, B. (2004). The drift table: Designing for ludic engagement. In Proceedings of the Extended Abstracts of the Conference on Human Factors in Computing Systems (pp. 885-900). New York, NY: ACM.

- Gibson, J. J. (1977). The theory of affordances. In R. Shaw & J. Bransford (Eds.), Perceiving, acting, and knowing: Toward an ecological psychology (pp. 67-82). Hillsdale, NJ: Lawrence Erlbaum Associates.

- Hallnäs, L., & Redström, J. (2001). Slow technology: Designing for reflection. Personal and Ubiquitous Computing, 5(3), 201-212.

- Harrell, D. F. (2007). GRIOT’s tales of haints and seraphs: A computational narrative generation system. In P. Harrigan & N. Wardrip-Fruin (Eds.), Second person: Role-playing and story in games and playable media (pp. 177-182). Cambridge, MA: MIT.

- Hiraga, M. (2005). Metaphor and iconicity: A cognitive approach to analysing texts. Basingstoke, UK: Palgrave Macmillan.

- Imaz, M., & Benyon, D. (2007). Designing with blends: Conceptual foundations of human-computer interaction and software engineering. Cambridge, MA: MIT.

- Johnson, M. (1987). The body in the mind: The bodily basis of meaning, imagination, and reason. Chicago, IL: University of Chicago.

- Johnson, M. (2008). The meaning of the body: Aesthetics of human understanding. Chicago, IL: University of Chicago.

- Krippendorff, K. (2006). The semantic turn: A new foundation for design. Boca Raton, FL: CRC/Taylor & Francis.

- Krippendorff, K., & Butter, R. (2008). Semantics: Meanings and contexts of artifacts. In H. Schifferstein & P. Hekkert (Eds.), Product experience (pp. 353-376). San Diego, CA: Elsevier Science.

- Lakoff, G., & Johnson, M. (1999). Philosophy in the flesh: The embodied mind and its challenge to Western thought. New York, NY: Basic Books.

- Lakoff, G., & Johnson, M. (2003). Metaphors we live by. Chicago, IL: University of Chicago.

- Liang, R.-H. (2012). Designing for unexpected encounters with digital products: Case studies of serendipity as felt experience. International Journal of Design, 6(1), 41-58.

- Lin, M. H., & Cheng, S. H. (2014). Examining the “later wow” through operating a metaphorical product. International Journal of Design, 8(3), 61-78.

- Locher, P., Overbeeke, K., & Wensveen, S. (2010). Aesthetic interaction: A framework. Design Issues, 26(2), 70-79.

- Mandler, J. M. (1992). How to build a baby: II. Conceptual primitives. Psychological Review, 99(4), 587-604.

- Markussen, T., & Krogh, P. G. (2008). Mapping cultural frame shifting in interaction design with blending theory. International Journal of Design, 2(2), 5-17.

- McCarthy, J., & Wright, P. (2004). Technology as experience. Cambridge, MA: MIT.

- Minsky, M. (1974). A framework for representing knowledge (Tech. Rep.). Cambridge, MA: MIT.

- Norman, D. A. (2002). The design of everyday things. New York, NY: Basic Books.

- Schifferstein, H., & Hekkert, P. (2008). Product experience. San Diego, CA: Elsevier Science.

- Sengers, P., Boehner, K., David, S., & Kaye, J. J. (2005). Reflective design. In Proceedings of the 4th Decennial Conference on Critical Computing: Between Sense and Sensibility (pp. 49-58). New York, NY: ACM.

- Sengers, P., & Gaver, B. (2006). Staying open to interpretation: Engaging multiple meanings in design and evaluation. In Proceedings of the 6th Conference on Designing Interactive Systems (pp. 99-108). New York, NY: ACM.

- Todorov, T. (1981). Introduction to poetics. Minneapolis, MN: University of Minnesota.

- Turner, M. (1996). The literary mind. Oxford, UK: Oxford University.

- Varela, F. J., Thompson, E., & Rosch, E. (1991). The embodied mind: Cognitive science and human experience. Cambridge, MA: MIT.

- Wensveen, S. A. G., Djajadiningrat, J. P., & Overbeeke, C. J. (2004). Interaction frogger: A design framework to couple action and function through feedback and feedforward. In Proceedings of the 5th Conference on Designing Interactive Systems (pp. 177-184). New York, NY: ACM.