Pioneering Online Design Teaching in a MOOC Format: Tools for Facilitating Experiential Learning

Jaap Daalhuizen 1,* and Jan Schoormans 2

1 Technical University of Denmark, Kgs. Lyngby, Denmark

2 Delft University of Technology, Delft, The Netherlands

Providing online design education offers a unique opportunity for learning, by providing high quality learning experiences to distributed audiences for free. It has its challenges as well, particularly when the aim is to use ‘active learning’ strategies (Biggs & Tang, 2011), which are necessary when teaching design. In this paper, we report on the development of one of the first massive open online courses (MOOC) in the field of product design. We provide insight into the way the course was designed to stimulate active learning, highlighting the tools that were developed to engage students in a mode of experiential learning (Kolb, 1984). We present the results of the course evaluation, through (post-course) surveys and interviews, focusing on the way the newly developed active learning tools were experienced by the students. We found that experiential learning strategies are applicable to the MOOC context, and that dedicated didactic tools were evaluated more positively in terms of stimulating reflection, motivation and learning than conventional ones. We conclude with an analysis of the outlook on future developments for online design education.

Keywords – Design Education, Design Methods, Massive Open Online Learning.

Relevance to Design Practice – Design courses that integrate dedicated didactic tools for facilitating reflection, such as the ones presented in this paper, can offer high quality learning experiences to practitioners without the burdens that often characterize traditional courses for professionals: costly programs and relatively time-consuming and inflexible offerings.

Citation: Daalhuizen, J., & Schoormans, J. (2018). Pioneering online design teaching in a MOOC format: tools for facilitating experiential learning. International Journal of Design, 12(2), 1-14.

Received Apr. 13, 2016; Accepted Nov. 27, 2017; Published Aug. 31, 2018.

Copyright: © 2018 Daalhuizen & Schoormans. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: jaada@dtu.dk

Dr. Jaap Daalhuizen (1980) is an Assistant Professor at the Department of Management Engineering, Technical University of Denmark, Kgs. Lyngby, Denmark. His research focuses on design theory & methodology, and innovation processes, with a particular focus on the development and use of methods in relation to human behavior and organizational context. His research on design methods has a diverse range of application areas, for example in health innovation, behavioral design and agility in the manufacturing industry. He has published in Design Studies and International Journal of Design and is co-editor of the Delft Design Guide.

Prof dr. Jan Schoormans (1956) is a Professor of Consumer Behavior at the Faculty of Industrial Design Engineering, Delft University of Technology, Delft, the Netherlands. His research focuses on consumer preferences and behavior towards (the design of) new products. He has published on these topics in marketing journals like the International Journal of Research in Marketing, Psychology and Marketing, the Journal of Product Innovation Management, in psychological journals like Perception and the British Journal of Psychology, and in design journals like International Journal of Design, Design Studies, Journal of Engineering Design and the Design Journal.

Introduction

Massive Open Online Courses (MOOCs) offer free access to high-quality educational resources using a digital platform. They are a type of Open Educational Resources (OER) that offer versatile and free access to high-quality education, even to people residing in remote or disadvantaged areas (Daradoumis, Bassi, Xhafa, & Caballé, 2013). Access to high quality educational resources has been acknowledged as a key to building global prosperity (UNESCO, 2016). People lacking the opportunity to attend on-campus education for reasons of finances, geographical distance or time availability now have an accessible, flexible, cheap and location-independent means to pursue an education. In this way, MOOCs provide a distinct alternative to education in the traditional university model. However, online education also has its own distinct set of challenges, particularly in areas where active learning strategies (Biggs & Tang, 2011) are required for effective teaching. Glance, Forsey and Riley (2013) concluded that most online courses are characterized by the presence of video lectures, formative quizzes and automated assessment and/or peer- and self-assessment. Such elements resemble what might be referred to as classic didactic tools that are often criticized for their emphasis on students as passive knowledge consumers and their limited suitability in the pursuit of active learning strategies (see e.g., Biggs & Tang, 2011). Daradoumis et al. (2013) urged MOOC designers to take a learner-centered approach, allowing some degree of content customization. For example, allowing for variation in the content that students work on and deliver is key in the context of design, where outcomes should be to some extent unique by definition. Thus, active learning strategies might be even more important in design, raising the question of whether the current MOOC format is suitable for design education. That is, in design, learning through reflection on one’s own experience is considered to be a key didactic mechanism (Schön, 1983, 1987), and Kolb’s model of experiential learning (Kolb, 1984) is often used to describe the process underlying that mechanism. Yet the ‘classic didactic tools’ still often used in MOOCs do not provide means to facilitate this type of learning.

In this paper, we describe and evaluate a MOOC in the field of design. We describe a number of dedicated tools that were developed and implemented in the course to facilitate experiential learning. Furthermore, we evaluate the extent to which the course was able to facilitate key didactic mechanisms. The contribution of this paper is twofold. First, we present the design of a MOOC on design in terms of its structure and content, including a number of tools that were developed to facilitate reflection and experiential learning. Second, we present an evaluation of the students’ experience of the course and the tools presented, following the questions below:

- Did students experience a coherent design process?

- Did students learn to perform the different design activities?

- Did students reflect on their experiences?

Insights from the development of the didactic tools as well as their evaluation in the MOOC context can be used to inform the development of future distance learning courses in areas where direct experience and reflection are key for effective learning.

Massive Open Online Courses

MOOCs have gained massive popularity in recent years and represent a relatively new form of education (Pappano, 2012). They have sprung from a much longer tradition of distance learning, which includes blended formats combining face-to-face education with online learning technologies (Nguyen, 2015). For example, the Open University in the United Kingdom has long been offering paid distance learning courses on design, with considerable success. One of the key benefits of these courses is the access to a tutor who can provide, in part, face-to-face feedback and discussion. In recent years, more providers of online design courses have emerged, typically offering relatively small, paid courses that aim to teach specific (design) skills like graphic design, UX principles, etc. Some examples of such providers are Lynda.com and Udemy.

Scholars and practitioners put forward a number of arguments for the benefits of online learning. The most important arguments are: (1) its effectiveness in educating students, (2) its usefulness in enabling life-long learning for professionals, (3) its cost-effectiveness in terms of reducing the student-to-cost ratio of courses, and (4) the opportunity to provide world-class education to anyone with a fast internet connection (Daradoumis et al., 2013; Nguyen, 2015). Comparisons between online education and traditional face-to-face education have produced mixed results, with the more rigorous meta-analyses (Bernard, Borokhovski, Schmid, Tamim, & Abrami, 2014; Means, Toyama, Murphy, Bakia, & Jones, 2009) pointing to equal performance while uncovering several moderating factors such as selection bias, students’ characteristics and which generation of online courses was used in the studies (Zhao, Lei, Lai, & Tan, 2005).

MOOCs are a relatively new phenomenon in the field of distance and e-learning and there is still much uncertainty concerning whether they can be regarded as an extension of the second generation of online education courses or should be seen as a qualitatively different category (Nguyen, 2015). Two key characteristics set them apart from most other online courses in design: MOOCs are free of charge for students to register and follow, and MOOCs offer education that is handled completely through the online platform and without direct tutoring. McAuley, Stewart, Siemens, Cormier, & Commons (2010) have described MOOCs as follows:

A MOOC integrates the connectivity of social networking, the facilitation of an acknowledged expert in a field of study, and a collection of freely accessible online resources. Perhaps most importantly, however, a MOOC builds on the active engagement of several hundred to several thousand “students” who self-organize their participation according to learning goals, prior knowledge and skills, and common interests. Although it may share in some of the conventions of an ordinary course, such as a predefined timeline and weekly topics for consideration, a MOOC generally carries no fees, no prerequisites other than Internet access and interest, no predefined expectations for participation, and no formal accreditation. (p. 6)

Challenges in Bringing Design Education Online

Given the reliance on experiential learning in the field of design, the chance of successfully teaching design in a MOOC format depends heavily on the development of appropriate tools. Such tools ought to address a number of key issues. First, in order to facilitate experiential learning, a MOOC should allow students to experience design themselves, i.e., by designing something themselves, and to reflect on their own experiences in a productive way. Second, a MOOC on design should teach a design process that can be applied to many different problems, rather than focusing on how to solve a specific design problem and evaluating students on how well they solved that particular problem. Third, in order to allow students to learn to design, they should experience all of the basic activities along the main phases of the design process in an integrated manner, rather than being offered snippets of the design process. In the following sections, we elaborate on each of these challenges.

Facilitating Students to Have Concrete Design Experiences and Reflect on Them

Design is seen to primarily deal with unstructured, open-ended problems (Akin, 1986; Buchanan, 1992; Coyne, 2005; Rittel & Webber, 1973) and the studio is commonly considered to be a key tool to facilitate problem-driven, experiential learning (Demirbas & Demirkan, 2007). Itin (1999) defined experiential learning as ‘changes in the individual based on direct experience’ (p. 99). A key quality of design teaching in the studio is the nature of the interaction between teacher and students (Goldschmidt, Hochman, & Dafni, 2010; Little & Cardenas, 2001; Wang, 2010; Ward, 1990). That is, teachers share knowledge and expertise through reflecting on the students’ work in progress, and guide the co-evolution of problem and solution (Dorst & Cross, 2001; Wiltschnig, Christensen, & Ball, 2013). In those cases, students learn about a topic through ‘reflection on doing’. Thus, direct teacher-student interaction in which both parties engage in reflective behavior regarding the students’ work is regarded as a key to good design education (Adams, Turns, & Atman, 2003; Schön, 1987).

Kolb (1984) developed a widely used model of experiential learning. This model describes four basic activities for learning: (1) having a concrete experience, (2) observing the experience and reflecting on what is being observed, (3) forming abstract concepts and generalizations about what has been observed and (4) active experimentation with the new understanding, resulting in new experiences. In an educational context, the model is typically used to direct courses and course material in such a way that students are likely to go through a complete learning cycle that is grounded in their own experiences with different design activities. Some claim that experiential learning does not need a teacher per se (Itin, 1999) as it is grounded in the student’s own experience. Schön (1983, 1987), however, emphasized the important role of the expert teacher in guiding students’ learning processes. Following Schön’s (1983) model of reflective practice, design teachers or coaches have an obvious role in the traditional studio setting for design education, directing the process of experiential learning.

Facilitating experiential learning is not straightforward in the context of a MOOC, as MOOCs are characterized by the absence of direct teacher feedback on student work. That is, the number of students in a MOOC can run into the thousands (at least at the start of a course), which means that the teacher’s attention is an extremely scarce resource for students at the individual level. This brings with it the risk that students do not learn through reflection on action, because there is no expert feedback to guide them in this process.

Facilitating Students to Learn about Design Process

Design education in a university context is often characterized by a focus on the design process (Daalhuizen, Person, & Gattol, 2014; Roozenburg & Cross, 1991). That is, design education typically aims to teach students how to go about designing, in addition to being occupied with the outcome of design activity, i.e., the design itself. This is well illustrated by the central concept of ‘design thinking’ (Adams, Daly, Mann, & Dall’Alba, 2011; Brown & Rowe, 2008; Cross, 2006; Dorst, 2011; Dym, Agogino, Eris, Frey, & Leifer, 2005; Oxman, 2004; Roozenburg, 1993), which points to the importance of focusing on teaching certain patterns of reasoning in addition to, for example, formulas about materials, production technology or mathematics. Indeed, when thinking about teaching design, it is recognized that a student’s focus should be on mastering the design process. In this light, the reasoning process is typically supported by a set of methods and tools. A focus on the design process in teaching design implies that students are not supposed to be—and cannot be—evaluated solely on the basis of their design outcome and that a large emphasis is placed on evaluating how students perform design activities themselves. Furthermore, as the outcomes of a design process are unique to a high degree, it is difficult to assess outcomes in an unambiguous way. In the context of a MOOC, students’ work is typically assessed through an automated process that determines whether the outcome of their activity is correct. Even in cases where a mechanism like peer-reviewing is used, outcomes are typically expected to be of a uniform nature, allowing evaluation to happen based on a set of rubrics related to expected qualities of the outcome.

Facilitating Students to Learn about a Full Design Cycle

In addition, in order to teach process knowledge on design in a meaningful way, students should be guided through a complete design process so that they can see the interrelationships between the different basic phases in any design process and the consequences of decisions made in one stage for activities in later stages. A widely used phase model for design is the ‘double-diamond’ model by the British Design Council, which describes the phases of ‘discover’, ‘define’, ‘develop’ and ‘deliver’ (Design Council, 2005). Each of these four phases calls for a number of specific design activities. Only when students are offered the opportunity to experience the design process with its four phases, at least once, can they be expected to grasp what it means to design something in a comprehensive manner, i.e., from beginning to end.

The Delft Design Approach MOOC

The Delft Design Approach MOOC ran for the first time on the edX platform, from October 2014 until January 2015. The core teaching material comprised 27 video lectures, 17 quizzes, 7 design exercises and accompanying templates. In addition, 7 benchmark videos, 5 expert videos, 8 sofa session videos and 8 peer review modules (see next sections for a detailed description) were produced. The course content was organized in terms of a design project. Students were asked to explore a pre-set domain defined as ‘morning rituals’, to define a design problem within that domain, to develop ideas and to conceptualize promising ideas and test them with users (see Figure 1). As a final step, students were asked to deliver their work in a final presentation. For each step in the process, the students were taught one or more design methods. In total, seven design methods were adapted to the MOOC context and introduced in video lectures. Each method was adapted to fit the desired learning experience in an iterative process between the topic experts and the core staff of the MOOC. The following design methods were used: Vision in Design (Hekkert & Van Dijk, 2011), Context mapping (Sanders & Stappers, 2008; Visser, 2009; Visser, Stappers, van der Lugt, & Sanders, 2005), Defining a problem definition (Roozenburg & Eekels, 1995), Creating ideas by analogy, Creating a list of requirements (Roozenburg & Eekels, 1995), and a Harris profile in concept choice (Harris, 1961). In addition, expert teachers illustrated concept sketching and prototyping skills in a practical, applied manner.

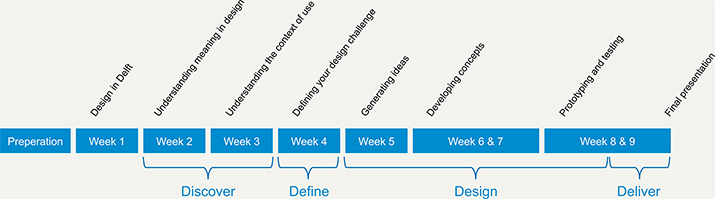

Figure 1. Timeline of the course along the four phases of the double diamond model.

A total of 18 academic staff and industrial topic experts were involved in the creation of the course material. The main goal of the MOOC was to create a situation in which students could learn to design through reflection-on-action (Schön, 1983). That is, they were challenged to learn about design not only by gaining theoretical knowledge, but also by experiencing designing ‘by doing’ and subsequently reflecting on their own experiences. A number of didactic tools were developed specifically for this purpose. In designing these tools, Kolb’s model of ‘Experiential learning’ (Kolb, 1984) was used to guide decisions, which are explained in the next section. Furthermore, in order to create a coherent learning experience, the students were offered the opportunity to experience a full design process along the main phases of design.

In order to achieve the above-mentioned goal, the MOOC was divided into weekly or bi-weekly modules that were designed according to a structure for experiential learning. The modules were organized along the four basic phases of design, ‘Discover’, ‘Define’, ‘Develop’ and ‘Deliver’ (see Figure 1), following the double diamond model (Design Council, 2005). A number of didactic tools were designed to facilitate the learning process in each module. In the following sections, we describe the module structure and the way the didactic tools fitted in. We elaborate on the decisions that were made along the way in designing the tools.

A Structure for Experiential Learning in a MOOC Context

The MOOC structure was designed in such a way as to facilitate and support a form of experiential learning. This entailed that each module was designed according to a fixed structure that guided students through an experiential learning loop. For this purpose, a number of didactic tools were developed and used in combination with existing MOOC tools. The module structure and didactic tools are discussed below in detail.

A Structure for Experiential Learning for the Weekly or Bi-weekly Modules

Each of the weekly or bi-weekly modules focused on a single step in the design process. Students were taught about each step through one or more design methods. Taken together, the modules formed a coherent design process. Each module guided students through a complete learning cycle. That is, each module offered material, tools and guidance for the student to: (1) have a concrete experience with using the design method to take the specific step at hand, (2) observe their own experience and reflect on what was being observed, (3) form abstract concepts and generalizations about what had been observed and (4) actively experiment with the new understanding.

Each module consisted of the same set of content elements: an introduction lecture, one or more video lectures, quizzes, a design exercise, a hand-in template, a peer review, benchmark videos, a self-evaluation module, and a sofa session. The format and use of the design exercise, hand-in template, benchmark videos and sofa session were newly designed for this MOOC. In the following sections, we describe the content elements in terms of their design and the way in which they supported remote, experiential learning.

Introduction Lecture

The introduction lecture aimed to increase coherence and overview for the students. It presented the aim and contents of the coming module and linked back to the work done in previous modules. The introduction lecture was offered by the same staff member, and thus provided a familiar face across the modules, aiming to increase student commitment (see Figure 2).

Figure 2. Screenshot from the introduction video of week 2 in the Delft Design Approach MOOC.

Design Exercise

The design exercise formed the cornerstone of each module and instructed the students to take a step in their design project, in which one or more methods needed to be applied. It allowed the students to experience design activities themselves, in a concrete, step-by-step manner. Each exercise was accompanied by a template that helped students to shape their expectations of the format in which the results needed to be delivered. This helped them to imagine what they ought to be working towards and was intended to boost their confidence in being able to perform the task and to increase their motivation. Each exercise ended with a presentation of the results the students had created. In terms of experiential learning, the exercise asked the students to have a concrete experience with designing themselves.

Each exercise was a building block for the design project that ran across the whole MOOC (see Figure 1). By building up the students’ design project in concrete tasks, guided by methods and a template, the students were offered a relatively ‘safe’ learning process. The aim was to create a learning experience for the students that evoked a limited amount of uncertainty, and still offered open-ended problems that allowed for innovation. Furthermore, the concrete tasks also allowed the students to be able to reflect on their own work in a meaningful way, particularly in the course forum and peer review, as their process and outcomes were similar enough to allow for discussion and feedback in many cases.

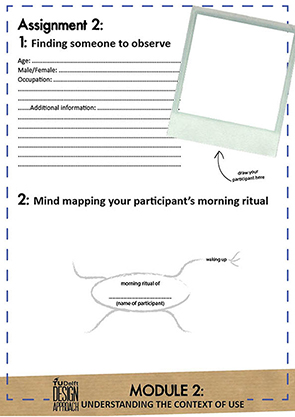

Design Exercise Template

The exercise template (Figure 3) was an important element in achieving the aim of providing concrete tasks and opportunity for fruitful reflection and discussion. The template provided a structure for the students’ outcomes, without unnecessarily limiting the direction in which the individual student wanted to take their project. That is, the template guided students in terms of the type and depth of their content, but not the content itself. In other words, the template did not guide in terms of what insights were gathered, what design direction was chosen, or which ideas were developed.

Figure 3. Template that guided students through the first steps of the second design exercise “understanding the context of use”.

Theory Lecture

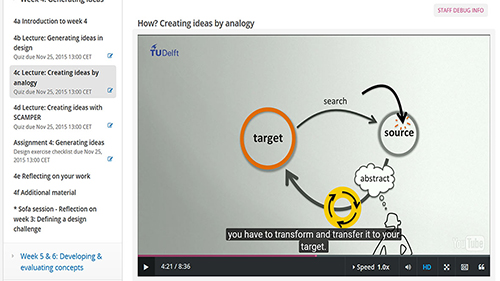

The theory lectures explained the method(s) and underlying theory. This was followed by a quiz to test the students’ knowledge. The videos typically included some background theory and a specific method the students were to use for the design exercise (see Figure 4 for an illustrative example). In terms of experiential learning, the lectures provided input to help form abstract concepts and generalizations about what had been observed during the exercise.

Figure 4. Screenshot from the theory lecture on the edX platform, informing the students about the ‘Analogy’ method, used for idea generation in the MOOC.

Peer Reviewing

The peer review allowed students to receive reviews of their work by their peers. The students were provided with a set of rubrics, which they used to review each other’s work and provide feedback. The rubrics were designed in conjunction with the exercise templates. Similar to the exercise templates, the rubrics for peer reviewing guided the students to review the steps taken and the extent to which meaningful content was presented, rather than assessing the ideas presented or insights generated (i.e., the quality of the design work itself). This left space for students to explore the direction of their design on an individual level. Discussion of the quality of, for example, the ideas themselves was left to the course forum, where students could choose to post their outcomes and discuss these with peers.

The peer reviewing had a double function: it not only provided reviews of one’s own work to evoke reflection-on-action, but also allowed students to see and evaluate the work of others. This widened their scope for what could be possible design directions, insights and ideas, and provided a frame of reference for the quality of their own work, similar to the one associated with design work in the studio format (Little & Cardenas, 2001). In terms of experiential learning, the peer reviewing helped students to observe their own experiences and reflect on them, both by receiving reviews and by seeing others’ work. Both mechanisms might open their eyes to aspects of their own work they might not be aware of, and enrich their reflection. Peer grades have been shown to be relatively close to staff grades when rubrics are formulated according to specific guidelines (Kulkarni, Wei, Le, Chia, Papadopoulos, Cheng, & Klemmer, 2015). A limitation of peer reviewing in a MOOC context is that it involves non-experts reviewing non-experts (see e.g., Glance et al., 2013), often leading to poor quality feedback.

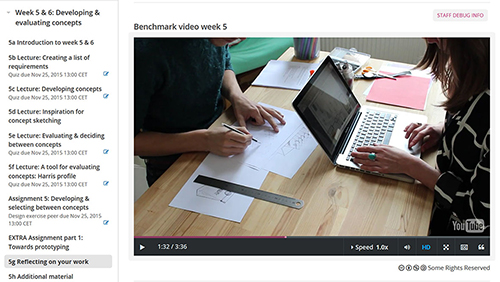

Benchmark Video

A key feature of design education in a studio environment is the presence of benchmarks or references of the work of other students and the examples and feedback of a teacher. In the MOOC context, the benchmark video allowed students to compare the way they worked themselves with the way two qualified master students from the faculty of Industrial Design Engineering had worked on the same exercise.

The benchmark videos featured two master students who showed intermediate results of their work, and commented on the challenging issues they faced while working (see Figure 5 for an illustrative example). The video was intended both to show the MOOC students how one might go about the exercise and to offer a discussion between the two master students about the most challenging issues related to the exercise. The benchmark also showed how the course theory could be implemented well. By ‘benchmarking’ themselves against the master students, the MOOC students were able to reflect on the level of quality of their own way of working. In terms of experiential learning, the benchmark video helped students to observe their own experiences and reflect on them, comparing them to a benchmark that they could be certain was of good quality.

Figure 5. Screenshot from a Benchmark video page on the edX platform, showing two master students from the faculty of Industrial Design Engineering doing concept development.

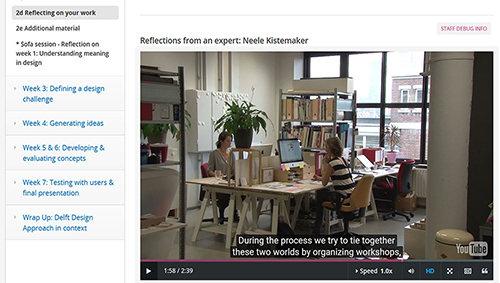

Expert Video

The expert video allowed students to gain more advanced insights into the particular step they took and the method(s) they applied in that step. The industrial experts were all successful alumni from the Faculty of Industrial Design Engineering at TU Delft with a minimum of 5 years of experience in industry and with applying the specific method in their own practice. They reflected on the step the students had taken based on their experience in practice (see Figure 6 for an illustrative example). In doing so, the expert videos aimed to evoke reflection amongst the students on a higher level, for example on the applicability and use of the design methods in a more general sense. The expert videos were aimed mostly at the more advanced students, to trigger reflection on the course content and their own experiences in a broader sense.

Figure 6. Screenshot of the Expert Reflection page on the edX platform, showing how industrial experts reflected upon the methods that the MOOC students also used.

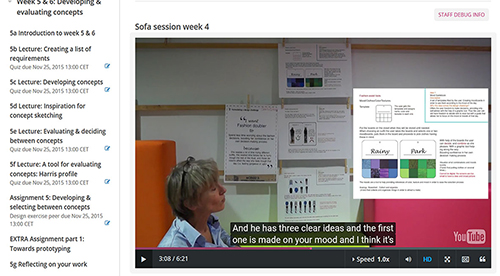

Sofa Session Video

The ‘Sofa session’ video provided near real-time feedback on students’ participation in the course. In the video, a member of the course staff discussed a number of pieces of student work, hand-picked from the MOOC for their suitability to highlight particularly challenging issues in the step the students had taken (see Figure 7 for an illustrative example). Furthermore, student work that stood out was used as an exemplar.

Figure 7. Screenshot from the Sofa Session page on the edX platform, showing a staff member presenting and discussing student work that had been uploaded in the preceding week.

Evaluation of the MOOC: Students’ Experiences

The goal of the MOOC was to provide students with the experience of going through a full design cycle and reflecting on their own experiences to facilitate learning. In order to assess the extent to which the MOOC succeeded in reaching this goal, the course evaluation was guided by the following questions: 1) Did the MOOC succeed in creating an experiential learning environment in which students can experience a coherent design process? 2) Did the MOOC succeed in enabling students to learn how to perform the different design activities? 3) Did the MOOC encourage students to reflect on their experiences in a meaningful way?

Method & Data

Four types of data were collected both during and after the MOOC was delivered: 1) Self-reported demographics provided at the time of enrolment. 2) Performance metrics collected through the edX platform. 3) Information posted by students on the course forum and the course’s social media channel on Twitter. 4) Demographic data and assessments of the students’ experiences through both a pre- and post-course survey and a number of in-depth interviews.

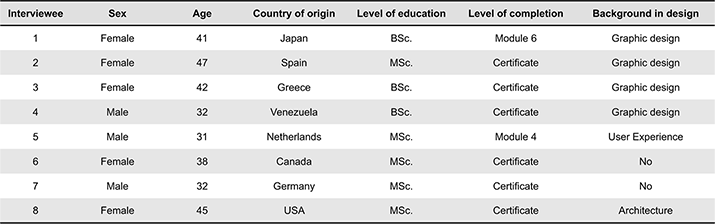

The post-course questionnaire was sent through the edX platform to students who had enrolled in the course. It contained questions about study behavior as well as evaluation questions on a number of topics. 74 students from 31 countries completed the questionnaire, 65% of whom had finished the course successfully. Furthermore, we performed in-depth interviews with eight students who finished the course. The interview results have also been reported elsewhere (Cascales, 2015). Students were asked to evaluate different elements of the course, with an emphasis on their learning experiences. At the end of the course, the course forum contained 2480 comments on 639 topics from 736 different students. The post-course interview participants are described in Table 1 and are referred to by their interviewee number in the remainder of the paper.

Table 1. Demographic information of interview participants in the post-course interviews.

Student Population

The number of students enrolled in the Delft Design Approach MOOC was 13,503. The students reportedly resided in 155 different countries, with the majority of students reporting that they were from the United States (18%) and India (14%). The reported median age of the students was 29 years and 30.1% of them were female. The percentage of students who stated they had a high-school diploma or less upon enrolling was 20%, while 38.6% reported that they had a BSc and 32.5% an MSc or higher. In the following sections, we first present results on the participation and retention of students, then the degree of learning as reported, and finally how they evaluated the reflection tools.

Active Participation and Retention

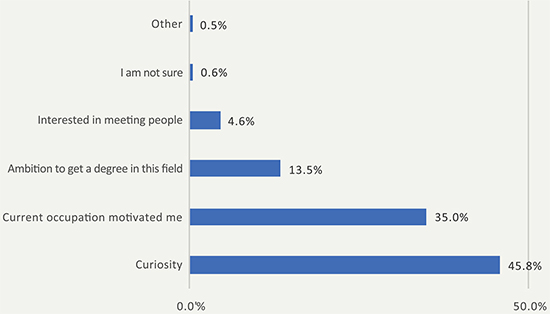

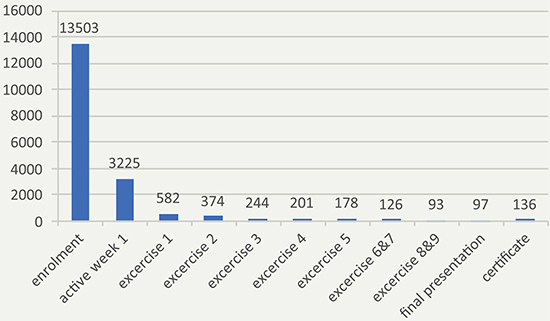

The number of students who actually commenced the course, defined as students who visited the course at least once, was 6,905 (51.1% of all enrollments). The number of students who were active in the first week was 3,225 (23.9% of total enrollments). Active participation was defined as any student actively engaging with one or more pages on the course platform (e.g. watched a video, read through instructions, took a quiz). Of the active students, 582 submitted the first exercise (18.1%). Of the students that started the course, 2.0% passed the course and received a course certificate. 23.4% of students that submitted the first exercise passed the course and received a course certificate (see Figure 9). Although this might seem a very low retention rate, it followed a pattern similar to that of other MOOCs, which are characterized by massive enrolment, a modest-sized population of active students and a low percentage of students passing the course, often between 1 and 5 percent (see e.g. Jordan, 2015; Rayyan, Seaton, Belcher, Pritchard, & Chuang, 2013). Significant reasons for the low retention rates are that MOOCs offer free enrolment and registration with no requirement to commit to course work (Skrypnyk, Hennis, & Vries, 2015), as well as a mismatch between the expectations of students and what the course offers. Furthermore, student populations participating in a MOOC are known to have a more diverse set of intents and motivations compared to students in a university course. The pre-course survey (n = 786) showed different motivations to enroll (Figure 8).

Figure 8. Reported main motivation for enrolling.

Figure 9. Enrolment and participation rate for the DDA MOOC. Enrolment refers to the total number of people who enrolled in the course. Active week 1 refers to the students who engaged with at least some content in the first week of the course.
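The participation funnel reported above can be recomputed from the stated counts. The following is a minimal sketch in Python, not part of the original analysis: the stage labels and the function name `conversion_rates` are shorthand introduced here, and only counts that appear in the text are used. Small differences in the last decimal relative to the reported percentages can arise from rounding.

```python
# Counts as reported in the text; the stage labels are shorthand introduced here.
funnel = [
    ("enrolled", 13503),
    ("visited at least once", 6905),
    ("active in week 1", 3225),
    ("submitted first exercise", 582),
]


def conversion_rates(stages):
    """Return (label, count, % of total enrolment, % of previous stage) per stage."""
    total = stages[0][1]
    previous = total
    rows = []
    for label, count in stages:
        rows.append((label, count, 100 * count / total, 100 * count / previous))
        previous = count
    return rows


rates = conversion_rates(funnel)
for label, count, of_total, of_previous in rates:
    print(f"{label:<26}{count:>7}{of_total:>8.1f}%{of_previous:>8.1f}%")
```

Reporting each stage both as a share of total enrolment and as a share of the preceding stage makes explicit which denominator a given percentage refers to, which matters when comparing retention figures across MOOCs.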

Additionally, hierarchical clustering of pre-course survey data (n = 558) resulted in four meaningful student profiles. The first group consisted of what we refer to as ‘Relevant professionals’, who enrolled because of their occupation. Students in this group mostly had a Master’s degree and had at least some experience in the design field. Therefore, the course was highly relevant to them and they typically planned the most engagement with the course beforehand. The second group, which we refer to as ‘students’, had a Bachelor’s or Master’s degree and had at least some experience in the field of design. This group also had the least experience with online courses. The third group, which we refer to as ‘curious professionals’, enrolled because of their curiosity. They had a Bachelor’s or Master’s degree and had the least relevant educational background of all groups. Students in this group had taken online courses before, and the reputation of the university and the course staff as well as recommendations by others were important factors for enrolment. This group was less interested in attaining a certificate. The fourth group, which we refer to as ‘Others’, consisted of working professionals, people between jobs and others. Students in this group had taken online courses before. Attaining a certificate and recommendations by others were less important for them.
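To illustrate the general idea of hierarchical (agglomerative) clustering, the sketch below groups a handful of respondents into four profiles. It is an illustration under stated assumptions, not the procedure used in the study: the paper does not report the distance measure, the linkage criterion or the survey variables, so the average-linkage rule, the Euclidean distance, the three feature names and all data values are hypothetical.

```python
from itertools import combinations
from math import dist


def average_linkage(a, b, points):
    """Mean pairwise Euclidean distance between two clusters of point indices."""
    return sum(dist(points[i], points[j]) for i in a for j in b) / (len(a) * len(b))


def agglomerative(points, k):
    """Repeatedly merge the two closest clusters until only k clusters remain."""
    clusters = [[i] for i in range(len(points))]
    while len(clusters) > k:
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda pair: average_linkage(clusters[pair[0]], clusters[pair[1]], points),
        )
        merged = clusters[a] + clusters[b]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (a, b)] + [merged]
    return sorted(sorted(c) for c in clusters)


# Hypothetical respondents, each scored 0-1 on three made-up features:
# (design experience, prior online courses, interest in a certificate).
respondents = [
    (0.9, 0.5, 0.9), (0.8, 0.5, 0.9),
    (0.6, 0.1, 0.6), (0.6, 0.0, 0.6),
    (0.1, 0.9, 0.2), (0.1, 0.8, 0.2),
    (0.3, 0.9, 0.9), (0.3, 0.8, 0.9),
]

profiles = agglomerative(respondents, k=4)
print(profiles)
```

In practice the number of clusters is usually chosen by inspecting the merge distances (for example in a dendrogram) rather than fixed in advance, and the resulting groups are then interpreted and labelled by the researchers.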

A key reason for the loss of students during the first weeks of this course might be a technical one. In the first module of this MOOC, students were asked to make and upload a video of their morning ritual. In the discussion forum, a large number of students indicated that they had technical issues with making a video and especially with uploading it, which is illustrated by the feedback from one of the survey respondents:

I loved the course. It was helpful for my own work and it was all very professional and entertaining. So no complaints about that. The problem was the videos. It took me so much time to get the first one done and I did manage to finally learn iMovie. But by the second video assignment my laptop’s memory started playing up and crashing constantly and I was just spending an insane amount of time trying to get this video done. So I decided to only do the lessons and weekly quiz. (survey respondent)

Furthermore, the fact that the course was about design and asked students to engage in actual design work meant that the students were required to spend a relatively large amount of time on their course work. Although the respondents reported that they spent 8.27 hours on average per week (n = 62, SD = 3.0), which was close to the workload intended by the course staff, 32% of the respondents (n = 72) indicated that the MOOC required too much of a time investment. This might be explained by the diverse background of the students: some of them had little or no existing knowledge or experience and therefore had to spend a relatively large amount of time on course work. Additionally, even motivated students had trouble completing the course, as many of them had a full-time job, as illustrated by a survey respondent:

I started the course with good intentions and completed the first few exercises but unfortunately work pressure meant I could not dedicate the time required. (survey respondent)

General Evaluation of the Course

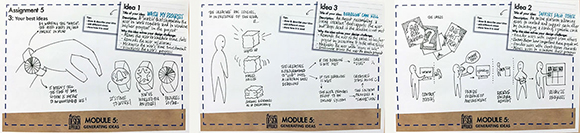

In general, respondents were positive about the MOOC, rating its overall quality with a mean score of 4.5 (n = 74, SD = 0.62) on a 5-point scale (1 = very poor, 5 = very good). 71% of these respondents indicated that they would recommend our MOOC to another person. Furthermore, 61% (n = 73) of the respondents indicated that the MOOC inspired them to continue to study in the field of design. 54% (n = 70) of the respondents indicated that the course had exceeded their expectations, for instance in terms of how practical the MOOC would be. This is illustrated by interviewee 7, who expected to encounter a course on design theory but was positively surprised: “Focusing on the design practice makes much more sense” (see Figure 10, for an example of student design work).

Figure 10. Illustrative example of student work delivered in week 5 ‘Idea Generation’. Courtesy of Odesliva.

As mentioned before, many students did not know what to expect from the MOOC and for many this was a reason to drop the course early on, or to not even start with the coursework. However, some students were positively surprised. For example, one student enrolled because she expected a MOOC offered by TU Delft to be of high quality. Even with her high expectations, as she mentioned in the interview, she was positively surprised: “I got much more than I was expecting” (interviewee 1).

Also, a number of the interviewees indicated that they remained motivated during the course. A reason for this enduring motivation might be that we used a set of new learning tools that aimed to motivate people and facilitate reflection on their own work. The lectures, benchmark videos and expert videos proved to be especially motivating.

Did Students Experience a Coherent Design Process?

As we stated in the previous sections, our aim was to let students experience a full design cycle, as opposed to teaching them bits and pieces of design theory. During the interviews, the students were asked how they experienced the course and its modules. They typically emphasized the value of organizing the course in terms of a single design project. For example, when asked which module stood out from the rest, one interviewee responded with: “You cannot compare actually, it is a process … Each module makes a total” (interviewee 1). Another interviewee commented on the motivational aspect of having to work on a single design project: “Finishing the project was also a very good incentive. I found very interesting that you have a project from the start to the end, and that keeps you going on” (interviewee 2).

Furthermore, due to the summative nature of the project, the students reflected on the value of seeing an end result that ties all the course work together. For example, interviewee 3 felt she learnt the most in the last weeks because then she finally saw how her ideas turned into something more tangible.

The design project was organized around a design theme to provide an anchor point between students in terms of the content they worked on and to motivate students by providing a clear domain to work on. In the post-course questionnaire, we included questions (5-point scale, 1 = strongly disagree, 5 = strongly agree) about the value of the design theme. The respondents indicated that the morning ritual theme helped them to structure the assignments (Mean = 4.26), to create a coherent flow throughout the course (Mean = 4.30) and to be more creative (Mean = 4.00), and that it kept them motivated to go on and finish the MOOC (Mean = 3.87). Despite these positive reactions, some students indicated that they would have liked to choose their own design topic. For example, one of the interviewees suggested that allowing students to choose their own theme would have helped her to finish the course, as she could have done the project on a topic relevant to her. That is, she did not expect the course to have a predefined theme and would have liked to have her own theme, but underlined that she also understood the choice of having a design theme: “It makes sense, because you can have all the people on the same page” (interviewee 5).

Did Students Learn to Perform the Different Design Activities?

To gain a more in-depth understanding, we asked students to indicate to what extent they experienced the different modules (i.e., each focusing on a specific design activity and method) as contributing to their learning experience. Typically, interviewees mentioned that all modules contributed to their learning experience, albeit not to an equal extent. For example, although interviewee 2 mentioned she learnt from all modules, the module on ‘understanding the context of use’ was her personal favourite. She explained that it stood out because the other modules were more familiar in terms of what she had done before.

Interviewee 6 agreed and described her learning experience as follows. She reported that at first she was not convinced about the task in module 3 as she did not understand its necessity: “Why can’t we just get in the define part and start designing?” However, after having experienced the fieldwork that included user observations, she felt that she learnt so much about the user that she completely changed her mind about this topic. Another interviewee emphasized that she felt most motivated during the last modules because she finally saw something more tangible emerging and greatly enjoyed the sketching in modules 6 & 7 (developing and evaluating concepts). Interviewee 4 reported a similar experience; he mentioned that modules 6 & 7 were the most enjoyable for him. He also reflected on the difficulty of prototyping at home, as he did not have the proper tools available.

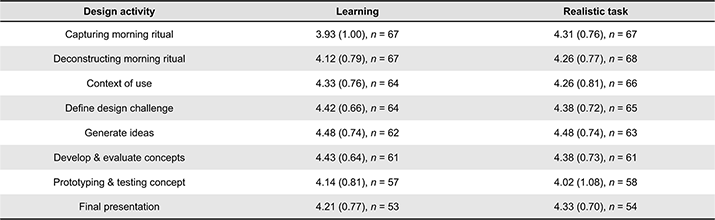

The survey included statements regarding the learning experience of students during the different modules. Respondents were asked to state their agreement with a number of statements regarding the different modules (1 = strongly disagree, 5 = strongly agree). The scores indicated that the different modules all contributed to their learning experience and were seen as more or less realistic tasks, as the mean score for all activities was higher than 3 (see Table 2). Statistical testing (one-sample t-tests) also showed that the prototyping activities and the final presentation were evaluated significantly less positively than the other five activities, in terms of both the learning aspect and the degree to which the activities were regarded as realistic.

Table 2. Reported impact of the course modules on students’ learning experience.
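For readers less familiar with the test named above, the general form of the one-sample t statistic is given below as a reminder. The paper does not state which reference value the module means were compared against, so the reference value $\mu_0$ is an assumption here (it could, for instance, be the pooled mean of the other activities); $\bar{x}$, $s$ and $n$ denote the mean, standard deviation and number of ratings for one activity.

```latex
t = \frac{\bar{x} - \mu_0}{s / \sqrt{n}}, \qquad \mathrm{df} = n - 1
```

A large negative value of $t$ would indicate that the activity was rated below the reference value by more than sampling variation alone would suggest.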

Did Students Reflect on Their Experiences?

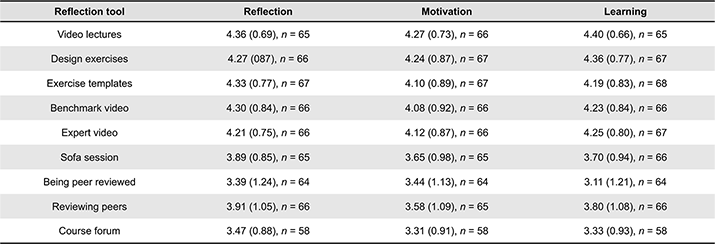

The degree to which these tools enabled students to review and reflect on their knowledge and progress is perhaps the most relevant question to answer in this paper, as the quality of learning is highly related to reflection. We asked the respondents in the post-course questionnaire to respond to statements regarding the quality of the reflection tools, using a 5-point scale (1 = strongly disagree, 5 = strongly agree). Each tool was assessed on its helpfulness in reflecting on the students’ work, the extent to which it helped to motivate students and the extent to which it helped students to learn (see Table 3). The mean scores for all reflection tools were 3 or higher. This indicates that all tools were regarded as working satisfactorily or, in many cases, were even perceived to be working well (> 4).

Table 3. Reported impact of reflection tools on students’ reflection, motivation and learning experience.

When asked which reflection tool stood out, one interviewee responded: “One completes the other, there’s not a best” (interviewee 1). However, she indicated that she liked the benchmark videos because she could compare her work to others and see their different approaches and not lose her focus.

The tool that was evaluated most positively was the template (Mean = 4.33), followed by the benchmark and expert videos (Mean = 4.30 and Mean = 4.21). Indeed, a statistical test showed that these newly designed reflection tools were evaluated more positively than well-known MOOC reflection tools such as peer reviews and the course forum. Interviewee 4 reflected on the templates as a very helpful tool during the course: “The templates were very well put together so they could really guide you. […] I loved them” (interviewee 4). The positive evaluation of the templates can probably be explained by the fact that they gave students clear guidance on what information to upload.

The benchmark videos and especially the presence of experts in the field both motivated students and provided a good opportunity for reflection. One student indicated during the interview that she “Felt very motivated with the benchmark videos”. Another student indicated that she could compare her work to others and see their different approaches and not lose her focus.

Evaluations of the peer reviews and the sofa sessions were less positive. In both tools, the information that was provided to the students was evaluated as being too general. Indeed, in the sofa session, general first feedback was given and some bad and good results were shown and discussed. The peer reviews that the students received were evaluated the lowest (Mean = 3.39). It was clear that the feedback students received from other MOOC students was often disappointing. This topic was also mentioned often in the interviews. For example, interviewee 1 reported being disappointed in the peer-reviewing system: “Feedback is the most important thing in this course and with this I got disappointed”. Students missed well-founded feedback and feedback on specific details, as was indicated by one of the interviewed students: “I think people need a more immediate interaction”.

Discussion

In this paper, we have showcased one of the first Massive Open Online Courses on design, developed and delivered by TU Delft on the edX platform. We have outlined the course structure and content, emphasizing how experiential learning was facilitated through a number of didactic tools specifically developed for this purpose. Furthermore, we presented an evaluation of students’ learning experience, focusing on their experience of the design process as a whole, the specific design activities and the extent to which the didactic tools helped to evoke reflection and learning.

The idea behind the Delft Design Approach MOOC was to bring a university-based design course to a global audience. This idea echoes two of the TU Delft goals, namely to educate the world and enhance the quality of online and campus education and to contribute to innovation that fosters worldwide development. Design is recognized to be a relevant factor in successful innovation (Roy & Riedel, 1997).

The MOOC reached a global audience. The course’s massive enrolment shows that there is an interest in accessible design education among a worldwide audience. At the same time, only a limited number of students finished the course completely. It is a well-reported fact that MOOCs have relatively low retention rates, with only a limited number of students finishing a course after enrolment (Greene, Oswald, & Pomerantz, 2015; Hone & El Said, 2016; Xiong, Li, Kornhaber, Suen, Pursel, & Goins, 2015). Firstly, in many cases, low retention rates can be explained at least in part by students’ intentions when they start (Reich, 2014), as described earlier in this paper. The DDA MOOC was no exception to this rule. However, when comparing the number of active students in the first week to the number of students finishing the course, there was a relatively high retention rate, particularly given that the DDA MOOC offered rather open-ended exercises, requiring the students to deal with high amounts of (necessary) uncertainty along the way. Furthermore, since its first run, the MOOC has been run three more times (once as a self-paced MOOC) in 2015 and 2016, with over 600 students attaining a certificate. Indeed, one of the advantages of a MOOC is that it can be run many times with a relatively small teaching staff, and thus without the need to invest large sums of money in every run. Secondly, in regular education students most often need to meet minimum requirements to finish a course. Such requirements do not need to be met in the MOOC. This means that students react differently to MOOCs than to “regular” courses. Some students follow the entire MOOC without submitting their final assignment, or without submitting work at all. This is supported by the number of ‘unique views’ of the lecture videos. For example, the prototyping video lecture in the last module still received 250 unique views, even though only 84 students submitted the prototyping assignment. That is, throughout the course, substantially more people watched videos than handed in exercises, which illustrates the different motivations students had for participating in the course. Many other students followed only parts of the course, e.g., one or two modules. Next, many students enrolled based on pure interest or curiosity, for example because they were in a process of orienting themselves on their next step in education and/or because they were working as professionals and wanted to enhance their skills. These students were not always interested in completing the course to gain a certificate (see the section on Active Participation and Retention).

The discussion above also brings to the fore that students of a MOOC have different levels of background knowledge and experience with design. The fact that students have different levels of pre-knowledge and experience was challenging in designing the course, as the same content will be too easy for some students and at the same time too difficult for other students. On the other hand, an online course offers new possibilities for creating flexible course content and structures in which the students themselves can choose how to go about learning. We tried to overcome this expected difference in background and experience by offering a mix of course material that catered to both novice and advanced learners, and to students from within and outside of the field of design. For example, for each topic that was addressed in the lecture videos, we offered additional material in the form of articles and videos for students who wanted to explore the topic in more detail (advanced learners). Also, we assumed that by offering an open theme of ‘morning rituals’, people from different educational, social, and cultural backgrounds would be able to relate to and choose a design challenge that was in tune with their interests and capabilities.

The main aim of the Delft Design Approach MOOC was to offer a hands-on experience of learning to design to a global audience through the edX platform.

In this paper, we presented the structure and content of one of the first MOOCs on design as well as an assessment of how its students experienced the course.

Based on the results, we can state that the course enabled students to learn how to design on at least a basic level. The Delft Design Approach MOOC showed that it is possible to teach design online, outside the studio, at least to the extent that a significant number of learners were happy with the learning experience they were offered. Furthermore, students indicated that they experienced a coherent process that took them through a complete design cycle.

In terms of facilitating experiential learning (Kolb, 1984), the MOOC incorporated a number of didactic tools. These tools seem to have induced a process of experiential learning, with the newly developed tools reportedly helping students to reflect on their own work, motivating them and contributing to learning. In particular, the benchmark and expert videos seem to show that students benefit from seeing others do the same work they did, or reflect on the same work they did. That is, these kinds of tools seem to be a promising solution to the absence of studio reflection. What remains missing is the reflection of experts on detailed decisions the students made. Our study also shows that peer review in this setting is not well suited to take over the role of the teacher in the studio. The design results that students present to each other in the peer review setting are too different and too ambiguous for peers to reflect on them in a valid way. A more extended use of experts who react to these results and to the threads in the discussion forum could be a possibility, although this is time consuming and expensive. Overall, through developing a number of specific didactic tools, we succeeded in helping students to experience design work themselves and, perhaps more importantly, to reflect on their own work and build a frame of reference to assess their own design capabilities. These elements of our MOOC can be an example for other courses in which experiential learning is a prerequisite.

One limitation of this work is that we present details related to a single MOOC, with limited comparisons to other available MOOCs on design or similar topics.

Furthermore, we include students’ statements on how they experienced the course and its specific elements, yet do not present a controlled study on learning effectiveness of a design MOOC compared to a traditional studio course. This places limitations on the ability to generalize from our findings. As such, our work is intended to contribute to the debate on the value of online education for both universities and industry in general, and the ways in which experiential learning can be facilitated in an online context in particular.

Experiential learning is crucial for offering effective learning experiences in skill-based disciplines like design and architecture. Most MOOCs, however, are not designed for experiential learning, which has limited the experience gained so far with online experiential learning. We tried to add to this experience through the development and running of our MOOC. It goes without saying that much more knowledge in this field is needed. Future work could, for example, focus on the development of technologies that better support the troublesome interaction between stakeholders. Specifically, in a design context, tools are needed to facilitate group work in a MOOC context. In addition, and taking one of the students’ comments further, it might be valuable to develop ‘prototyping at home’ kits that students might order so they are able to participate in all phases of design, even if they do not have access to prototyping facilities. Furthermore, more research is needed to better grasp and anticipate the cultural sensitivities of teaching a global audience. More knowledge is needed on how to respond to such sensitivities and how to design a MOOC without excluding potential learners.

The future success of MOOCs that embrace experiential learning will depend on the possibilities to facilitate the process of experiential learning. New technical possibilities will certainly add to the value of these MOOCs. We therefore suggest that MOOCs have a promising future in design education, and can offer a valuable contribution that complements on-campus education in the studio.

References

- Adams, R. S., Daly, S. R., Mann, L. M., & Dall’Alba, G. (2011). Being a professional: Three lenses into design thinking, acting, and being. Design Studies, 32(6), 588-607.

- Adams, R. S., Turns, J., & Atman, C. J. (2003). Educating effective engineering designers: The role of reflective practice. Design Studies, 24(3), 275-294.

- Akin, O. (1986). A formalism for problem restructuring and resolution in design. Environment & Planning B: Planning & Design, 13(2), 223-232.

- Bernard, R. M., Borokhovski, E., Schmid, R. F., Tamim, R. M., & Abrami, P. C. (2014). A meta-analysis of blended learning and technology use in higher education: From the general to the applied. Journal of Computing in Higher Education, 26(1), 87-122.

- Biggs, J. B., & Tang, C. (2011). Teaching for quality learning at university. London, UK: McGraw-Hill Education.

- Brown, T., & Rowe, P. G. (2008). Design thinking. Harvard Business Review, 86(6), 84-92.

- Buchanan, R. (1992). Wicked problems in design thinking. Design Issues, 8(2), 5-21.

- Cascales, D. (2015). Advancing design education online (Bachelor thesis). Lyngby, Denmark: Technical University of Denmark.

- Conole, G. (2014). A new classification schema for MOOCs. The International Journal for Innovation and Quality in Learning, 2(3), 65-77.

- Coyne, R. (2005). Wicked problems revisited. Design Studies, 26(1), 5-17.

- Cross, N. (2006). Designerly ways of knowing. London, UK: Springer.

- Daradoumis, T., Bassi, R., Xhafa, F., & Caballé, S. (2013). A review on massive e-learning (MOOC) design, delivery and assessment. In Proceedings of the 8th International Conference on P2P, Parallel, Grid, Cloud and Internet Computing (pp. 208-213). Piscataway, NJ: IEEE.

- Demirbas, O. O., & Demirkan, H. (2007). Learning styles of design students and the relationship of academic performance and gender in design education. Learning and Instruction, 17(3), 345-359.

- Design Council. (2005). A study of the design process. Retrieved from: https://www.designcouncil.org.uk/sites/default/files/asset/document/ElevenLessons_Design_Council%20(2).pdf

- Dorst, K. (2011). The core of “design thinking” and its application. Design Studies, 32(6), 521-532.

- Dorst, K., & Cross, N. (2001). Creativity in the design process: Co-evolution of problem-solution. Design Studies, 22(5), 425-437.

- Dym, C. L., Agogino, A. M., Eris, O., Frey, D. D., & Leifer, L. J. (2005). Engineering design thinking, teaching, and learning. Journal of Engineering Education, 94(1), 103-120.

- Daalhuizen, J., Person, O., & Gattol, V. (2014). A personal matter? An investigation of students’ design process experiences when using a heuristic or a systematic method. Design Studies, 35(2), 133-159.

- Glance, D. G., Forsey, M., & Riley, M. (2013). The pedagogical foundations of massive open online courses. First Monday, 18(5), 1-13.

- Goldschmidt, G., Hochman, H., & Dafni, I. (2010). The design studio “crit”: Teacher–student communication. Artificial Intelligence for Engineering Design, Analysis and Manufacturing, 24(3), 285-302.

- Greene, J. A., Oswald, C. A., & Pomerantz, J. (2015). Predictors of retention and achievement in a massive open online course. American Educational Research Journal, 52(5), 925-955.

- Harris, J. S. (1961). New product profile chart. Chemical & Engineering News, 39(16), 110-118.

- Hekkert, P., & Van Dijk, M. (2011). ViP - Vision in design: A guidebook for innovators. Amsterdam, the Netherlands: BIS Publishers.

- Hone, K. S., & El Said, G. R. (2016). Exploring the factors affecting MOOC retention: A survey study. Computers and Education, 98, 157-168.

- Itin, C. M. (1999). Reasserting the philosophy of experiential education as a vehicle for change in the 21st century. The Journal of Experiential Education, 22(2), 91-98.

- Jordan, K. (2015). MOOC completion rates: The data. Retrieved from http://www.katyjordan.com/MOOCproject.html

- Kolb, D. A. (1984). Experiential learning: Experience as the source of learning and development. Englewood Cliffs, NJ: Prentice-Hall.

- Kulkarni, C., Wei, K. P., Le, H., Chia, D., Papadopoulos, K., Cheng, J., & Klemmer, S. R. (2015). Peer and self assessment in massive online classes. In H. Plattner, C. Meinel, & L. Leifer (Eds.), Design thinking research: Building innovators (pp. 131-168). Cham, Switzerland: Springer.

- Little, P., & Cardenas, M. (2001). Use of “studio” methods in the introductory engineering design curriculum. Journal of Engineering Education, 90(3), 309-318.

- McAuley, A., Stewart, B., Siemens, G., Cormier, D., & Commons, C. (2010). The MOOC model for digital practice. Retrieved from: https://oerknowledgecloud.org/sites/oerknowledgecloud.org/files/MOOC_Final.pdf

- Means, B., Toyama, Y., Murphy, R., Bakia, M., & Jones, K. (2009). Evaluation of evidence-based practices in online learning: A meta-analysis and review of online learning studies. Washington, DC: US Department of Education.

- Nguyen, T. (2015). The effectiveness of online learning: Beyond no significant difference and future horizons. MERLOT Journal of Online Learning and Teaching, 11(2), 309-319.

- Oxman, R. (2004). Think-maps: Teaching design thinking in design education. Design Studies, 25(1), 63-91.

- Pappano, L. (2012, November 2). The year of the MOOC. The New York Times. Retrieved from https://www.nytimes.com/2012/11/04/education/edlife/massive-open-online-courses-are-multiplying-at-a-rapid-pace.html

- Rayyan, S., Seaton, D. T., Belcher, J., Pritchard, D. E., & Chuang, I. (2013). Participation and performance in 8.02x electricity and magnetism: The first physics MOOC from MITx. Retrieved from http://arxiv.org/pdf/1310.3173.pdf

- Reich, J. (2014). MOOC completion and retention in the context of student intent. Educause Review. Retrieved from http://www.educause.edu/ero/article/mooc-completion-and-retention-context-student-intent

- Rittel, H. W. J., & Webber, M. M. (1973). Dilemmas in a general theory of planning. Policy Sciences, 4(2), 155-169.

- Roozenburg, N. F. M. (1993). On the pattern of reasoning in innovative design. Design Studies, 14(1), 4-18.

- Roozenburg, N. F. M., & Cross, N. G. (1991). Models of the design process: Integrating across the disciplines. Design Studies, 12(4), 215-220.

- Roozenburg, N. F. M., & Eekels, J. (1995). Product design: Fundamentals and methods. Chichester, UK: John Wiley & Sons.

- Roy, R., & Riedel, J. C. K. H. (1997). Design and innovation in successful product competition. Technovation, 17(10), 537-548.

- Sanders, E. B.-N., & Stappers, P. J. (2008). Co-creation and the new landscapes of design. CoDesign, 4(1), 5-18.

- Schön, D. A. (1987). Teaching artistry through reflection in action. In Educating the reflective practitioner: Toward a new design for teaching and learning in the professions (pp. 22-40). San Francisco, CA: Jossey-Bass.

- Schön, D. A. (1983). The reflective practitioner. New York, NY: Basic Books.

- Skrypnyk, O., Hennis, T. A., & de Vries, P. (2015). Reconsidering retention in MOOCs: The relevance of formal assessment and pedagogy. In Proceedings of the 3rd International Conference on European MOOCs Stakeholder Summit (pp. 166-172). Mons, Belgium: Université catholique de Louvain.

- UNESCO. (2016). Guidelines for open educational resources (OER) in higher education. Retrieved from http://www.unesco.org/new/en/communication-and-information/access-to-knowledge/open-educational-resources/

- Visser, F. S. (2009). Bringing the everyday life of people into design (Doctoral dissertation). Delft, The Netherlands: Delft University of Technology.

- Visser, F. S., Stappers, P. J., van der Lugt, R., & Sanders, L. (2005). Contextmapping: Experiences from practice. CoDesign, 1(2), 119-149.

- Wang, T. (2010). A new paradigm for design studio education. International Journal of Art and Design Education, 29(2), 173-183.

- Ward, A. (1990). Ideology, culture and the design studio. Design Studies, 11(1), 10-16.

- Wiltschnig, S., Christensen, B. T., & Ball, L. J. (2013). Collaborative problem-solution co-evolution in creative design. Design Studies, 34(5), 515-542.

- Xiong, Y., Li, H., Kornhaber, M. L., Suen, H. K., Pursel, B., & Goins, D. D. (2015). Examining the relations among student motivation, engagement, and retention in a MOOC: A structural equation modeling approach. Global Education Review, 2(3), 23-33.

- Yousef, A. M. F., Chatti, M. A., Schroeder, U., & Wosnitza, M. (2014). What drives a successful MOOC? An empirical examination of criteria to assure design quality of MOOCs. In Proceedings of the 14th International Conference on Advanced Learning Technologies (pp. 44-48). Piscataway, NJ: IEEE.

- Zhao, Y., Lei, J., Lai, B. C., & Tan, H. S. (2005). What makes the difference? A practical analysis of research on the effectiveness of distance education. Teachers College Record, 107(8), 1836-1884.