Navimation: Exploring Time, Space & Motion in the Design of Screen-based Interfaces

Institute of Design, The Oslo School of Architecture and Design, Oslo, Norway

Screen-based user interfaces now include dynamic and moving elements that transform the screen space and relations of mediated content. These changes place new demands on design as well as on our reading and use of such multimodal texts. Assuming a socio-cultural perspective on design, we discuss in this article the use of animation and visual motion in interface navigation as navimation. After presenting our Communication Design framework, we refer to relevant literature on navigation and motion. Three core concepts are introduced for the purpose of analysing selected interface examples using multimodal textual analysis informed by social semiotics. The analysis draws on concepts from multiple fields, including animation studies, ‘new’ media, interaction design, and human-computer interaction. Relations between time, space and motion are discussed and linked to wider debates concerning interface design.

Keywords – Animation, Communication Design, Interface, Movement, Navigation, Social Semiotics.

Relevance to Design Practice – Examples of dynamic screen-based interfaces are presented and concepts are introduced that are applicable to the analysis and shaping of digital environments. This may help designers to see the importance of the communicative and mediating role of interfaces as cultural and mediating artefacts. A socio-semiotic approach highlights relations between time, space and motion in screen-based design.

Citation: Eikenes, J. O. H., & Morrison, A. (2010). Navimation: Exploring time, space & motion in the design of screen-based interfaces. International Journal of Design, 4(1), 1-16.

Received July 9, 2009; Accepted January 24, 2010; Published April 20, 2010.

Copyright: © 2009 Eikenes and Morrison. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: jonolav.eikenes@gmail.com

Introduction

Interfaces are part of our wider digital culture; through them we access online banks, play computer games, and communicate in social networking arenas. Digital interfaces are cultural artefacts as much as they are technological ones, and they act as arenas for communication as much as a means for finding information. Early interface development tended to focus on task-oriented work undertaken by professionals using machines. Subsequently, the field of Human-Computer Interaction (HCI) placed emphasis on usability and efficiency in studies of interfaces, leading, for example, to attention to user-centred design approaches. Today, in addition, interface design must deal with a diversity of media devices used in a variety of contexts and settings, and with their cultural significations. As computer graphics have become more sophisticated in resolution, colour, visual movement, speed, and responsiveness, so too have the devices become smaller and more portable, a well-known example being the Apple iPhone.

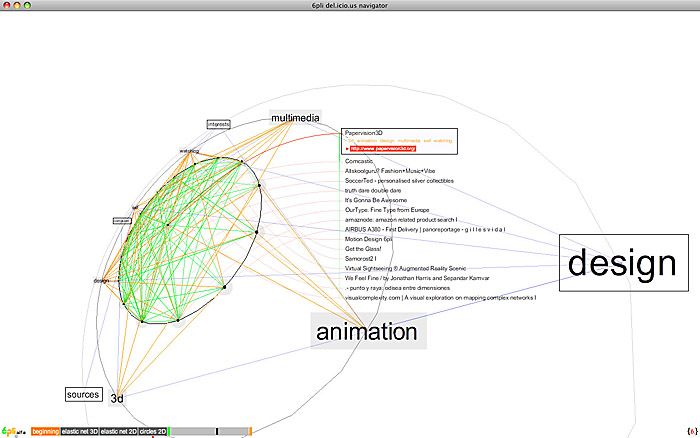

Figure 1. Bookmarks and tags from http://delicious.com/ are visualized as nodes located on circles in a three-dimensional space at http://www.6pli.com.

In many ways we might now say that ‘the interface is on the move’ (Skjulstad & Morrison, 2005). Through software such as Adobe Flash and via new operating systems, the interface no longer moves only in cases of embedded videos or animated advertising banners. Animation and motion graphics now also appear as part of the interface itself (Figure 1). As a whole, it is the interplay of time, space and motion designed as part of the interface, along with selected and accessed dynamic media and our own motivated and exploratory use, that realise meaning, informationally and communicatively (Figure 2). The interface, therefore, can be seen as not simply a graphic skin or a served, static structure that ought to disappear.

Figure 2. Cover Flow mode in the Apple iPhone interface.

On the contrary, we now encounter the interface as a dynamic, multimodal and mediational artefact that needs to be understood as a culturally constructed ‘site’ for multiple activities of situated use. Relatively few studies have looked into the emerging phenomenon of dynamic, multimodal interfaces as communicative environments that offer instances of mediation by innovators in the field. Close analysis at a textual and visual level, and, more particularly, an analysis that is centred on interface and Communication Design, is needed to more fully describe, define and interpret the phenomena of dynamic interface designs and what they, in turn, can offer us as users and designers.

Perspective, Focus & Context

In this article we adopt a Communication Design perspective of digital design that refers to the socio-cultural and technological shaping of digital tools, artefacts and environments. This view draws on humanist perspectives that place analysis of digital artefacts in contexts of multimodal textual composition, as objects and processes of social construction and with respect to their cultural situatedness and contexts of mediated use (Morrison et al., in press). Focusing on Communication Design allows us to analyse dynamic interfaces as mediating environments for cultural expression and multimodal signification. The study we present in this article is the first part of a wider one that connects such textual analysis of existing interfaces to the design of interfaces for social engagement and their location in contexts of wider use.

We analyse interfaces that are themselves concerned with experimentation and dynamic mediation in and through the interface. These have been developed by design bureaus and by commercial concerns who integrate dynamic interfaces in their professional design practice and marketing (Skjulstad, in press). Besides analysing the interface of a well-known mobile device (Figure 2), we focus mostly on web-based interfaces. We focus, furthermore, on the visual and kinetic aspects of these interfaces, even though they include other features such as sound and haptic feedback.

To account for the interplay of dynamic elements and the mediation of navigational activities, we have developed the term navimation1. Navimation refers to visual movement that is intertwined with the activity of navigation in the interface (Eikenes, 2009; Morrison & Eikenes, 2008). The concept of navimation has been developed through a reflexive interplay between design production and critical analysis in an ongoing practice-based research project called RECORD.2

A Communication Design Perspective

Interaction & Communication Design

Interface design has often been considered a subsection of interaction design (Moggridge, 2007; Löwgren & Stolterman, 2004; Bagnara & Crampton Smith, 2006). In the shift from designing objects to designing experiences, interaction design needs to investigate temporal as well as spatial form (Redström, 2001; Mazé & Redström, 2005), and to see computation as basic material.

From a social, cultural and humanistic perspective, studies of the design of interactions and their contexts of use can be understood in terms of mediated communication and the historical, social, playful and aesthetic in digital design (Blythe, Overbeeke, Monk, & Wright, 2003; Lunenfeld, 1999). This approach has been framed as Communication Design (Morrison et al., in press). This mediational perspective of digital communication is informed by studies in new media, social semiotics, socio-cultural studies of learning and work, and practice-based research into multimodal composition in which mediated discourse itself undergoes change through active use (Jones & Norris, 2005; Morrison, in press). This view is distinct from the structuralist and directional or ‘transmission’ models of communication (e.g., Crilly, Maier, & Clarkson, 2008) that are not rooted in cultural and mediational theory. From a Communication Design perspective, the interface itself mediates; it is understood as socially and culturally constructed and situated. Such a perspective is not very widely articulated in discussions of the interface in design research. Further, few studies exist of dynamic, digital interfaces and their multimodal characteristics from a specifically media and Communication Design view (e.g., Skjulstad, 2007).

In their design activity, interaction designers invest heavily in the shaping of interfaces as symbolic and cultural texts. Alongside this attention to design, and with reference to user-driven studies, we also need to unpack the features and possible functions of these emerging forms of mediated communication. The proliferation of ‘movement in the interface’ demands that we pay attention to a variety of media types, genre conventions and earlier media, and to the ways that elements of these are combined in different configurations. Social semiotics provides some means for relating the various graphical, animational and kinetic aspects of dynamic interfaces within a wider communicative perspective.3

On Social Semiotics

In contrast to studies on product semantics and semiotic views of design (Krippendorff, 1989; Vihma, 1995), social semiotics has not been applied in much design research literature. Screen interfaces are rarely addressed in terms of social semiotics (Nadin, 1988; O’Neill, 2008). However, social semiotics may account for how meaning is made in the process of adopting, using, and modifying signs in interface design and situated use (Kress & van Leeuwen, 2001). In this view, signs are not seen as fixed ‘codes,’ but rather as dynamic and ever-changing ‘semiotic resources’ that people create, use and adapt to make meaning in producing as well as interpreting artefacts in specific contexts.

Further, social semiotics accounts for meaning making practices of all types, including those involved in verbal, visual, or aural communication (e.g., van Leeuwen, 2005). These are different semiotic systems or modes that can be combined into multimodal compositions (Morrison, in press). Semiotic resources may also travel from the medium of film to an interface composition, and vice versa, a process that has been described as remediation (Bolter & Grusin, 1999).

Interface as Mediating Artefact

The notion of the interface is commonly drawn from HCI, where it is often portrayed as a flat layer existing between the user and the computer (e.g., Moran, 1981; Jørgensen & Udsen, 2005; Laurel, 1991). The introduction of Graphical User Interfaces (GUIs) in the 1970s and 80s was an important development. Graphical elements such as windows, icons and menus, combined with a pointing device such as a mouse, have been referred to as WIMP (Windows, Icons, Menus and Pointing device) interfaces. Here the notion of direct manipulation (Shneiderman, 1983) has been employed to refer to specific relations between user action (input) and presentation (output).

Existing analyses of dynamic GUIs are often technical in focus, or adopt a functionalistic approach, aimed at ease of use (usability) and efficiency. However, as Jørgensen and Udsen (2005) point out, and as is promoted in the ‘Digital Bauhaus’ (Binder, Löwgren, & Malmborg, 2009), the interface has also been taken up in research related to narrative, aesthetics, and media and communication. Laurel (1991), for example, compares the interface to the theatre, and Manovich (2001) describes it as an aesthetic experience.4 Drawing on the field of ‘new’ media as well as visual communication (Gere, 2006), we might investigate the interface in its own right as a cultural textual construct, such as has been applied to CD-ROMs, and more recently to computer games (Liestøl, 2003). The interface can be seen as an inter-semiotic object (Royce, 2006): it is a medium incorporating a mix of media and representations that ‘speak’ to us, and is thus a communicational phenomenon generated through design (Skjulstad & Morrison, 2005). Wood (2007) suggests that digital technologies are leading to an increasing appearance of competing elements in the interface, which act together to organize a viewer’s attention. She further argues that a viewer can be embodied in a spatio-temporal interface and gain agency by exerting control over the interface space.

Drawing on the work of Vygotsky (1978) on cultural mediation, a socio-cultural perspective on mediated communication and its design characterises the interface as a mediating artefact that facilitates and materializes social interaction. Further, interfaces may be labelled as primary, secondary or tertiary artefacts (Wartofsky, 1979). Primary and secondary artefacts are tools and representations, respectively, while the tertiary artefact is one that goes beyond the practical and creates an autonomous ‘world’ of play that can colour the way we see the ‘actual’ world. The interface may be seen as a tertiary artefact that provides an environment for play and engagement. It mediates as an instrumental tool for activities such as navigation and social interaction, but also mediates as a rich textual environment in which a diversity of signs and multiple media types are co-composed. Bødker and Andersen (2005), drawing on their respective expertise in activity theory and semiotics, refer to this as ‘complex mediation.’ It is via navigation that complex mediation occurs in the interface. From a Communication Design perspective, the changing mediational character and significations of dynamic interfaces need to be understood more fully both in terms of navigation as well as animation. We now turn to these.

Towards Navimation

Navigating Time & Space

Since digital information is not bound to physical location and form, the digital interface allows us to create, find, and experience information and media content in many ways.5 Benyon (2001) proposes a shift in HCI towards conceptualising people as navigators of ‘information space’; in information visualization, Spence (2007) refers to navigation as interactive movement in information space. This generates associations to ways in which we find our way in the physical world, and allows for an open approach to navigation—one of exploration, discovery, serendipity and fun. Moving through a real or a virtual space may be satisfying in itself.6 In architecture, the term wayfinding is often applied.7

Verhoeff (2008) argues that navigation is the primary paradigm driving digital screen media today, in which the relationship between narrative and the spectacular is manifested. The screen is both a site and a result of navigation: it is a screenspace in which there is a simultaneous construction and representation of navigation. Verhoeff argues that the time-space dichotomy is untenable since time necessarily includes movement through space. From a critical practice view on design, Mazé (2007) explores temporal form in relation to materials, use, and change. She refers to concepts such as ‘becoming’ and ‘in-the-making’ to open up a range of spatial-temporal relations that can be investigated through critical design practice.

Importantly, activities of navigation take place on both short and long timescales (Lemke, 2000a, 2000b), from the moment of selecting a link to the time span involved in navigating through hundreds of webpages, and also through traversals across diverse websites and interfaces, devices, and media genres (Lemke, 2005). Lemke introduces the term hypermodality to refer to hypertextual navigation that is combined with diverse modes of communication such as image, text and sound (Lemke, 2002). This connects navigation with diverse modes of communication, including visual motion.

Motion in the Interface

Since the advent of film, tremendous developments have taken place in technology as well as artistic expression of screened motion (Manovich, 2007; Pilling, 1997). Computer technology has gone on to change the possibilities of screening images in motion, as motion now can be generated in real time as it is being screened, and in response to user action. This applies to a range of screen-based devices with or without touch-screen functions, including laptops, mobile phones, GPS readers, MP3-players and PDAs.

Experimental animation and the design of film title sequences have been especially important for the development of non-figurative graphics and typography in motion. Today, designing with moving imagery and type is often referred to as ‘motion graphics’ (Gallagher & Paldy, 2007). A highly relevant field in relation to motion graphics is animation studies, from which Power (2009) asks for a more expressive visual aesthetic, one that is not driven by a naturalistic representational agenda, which has often been the aim in computer animation as well as other forms of computer-generated imagery.

As interfaces may now be designed to include elements of motion graphics and animation, the development of software tools is important as it allows for different types of dynamic interfaces to be produced. Not only do these tools allow different elements and features to be created; they also affect the creative process of developing interfaces. Currently, the most widely used software for creating dynamic interfaces is Adobe Flash. It allows the creation of simple animation and the design of interactive and graphically rich compositions, including elements such as video and audio, that can be incorporated in websites.

However, there are few studies of how motion can be applied in interface design. Chang and Ungar (1993) describe how principles of cartoon animation can be applied to the user interface, arguing that cartoon animation has much to lend to user interfaces with regard to achieving both affective and cognitive benefits. Baecker and Small (1990) propose three roles for animation in interfaces: to reveal structure, process, or function. Thomas and Calder (2001) look at how programmers can implement specific animation techniques in direct manipulation interfaces.

In exploring the aesthetics of interaction design, pliability (Löwgren, 2007) and fluency have been described as experiential qualities, where movement is one of the factors that influence the user experience through interaction. The experiential is a result of the design and properties of the interface. Skjulstad (2007) has looked at how motion graphic artists incorporate kinetic elements in their online design portfolios. In their article “Movement in the interface,” Skjulstad and Morrison (2005, p. 414) describe how motion can occur at three levels in a ‘dynamic interface’: 1) in the interface itself, 2) via flexible representation, and 3) within dynamic media types. Skjulstad (2007) further draws on the concept of montage from film studies to refer to how various parts of a website are related to each other through graphical juxtapositions, co-occurrences, and contrasts.

Navimation

We have coined the concept navimation to refer to motion in an interface that is connected to the activity of navigating that interface (Eikenes, 2009). Navimation describes the synergies that appear when the dynamics of navigation are utilized in connection with the techniques of animation. By no means should all motion in the interface be considered navimation. However, the concept provides us with a starting point for discussing these movements in interfaces, and their relations to user actions. It allows us to work towards the development of core concepts for addressing the symbolic and the cultural in dynamic interfaces as a complement to earlier notions of direct manipulation and usability. The concepts are taken up in our own design practice, and as part of the larger RECORD research project, as conceptual tools in the design process. They have been central to conveying our suggestions and communicative prototypes to companies and project partners with whom we collaborate.

Methods, Core Concepts & Selected Interfaces

Multimodal Text Analysis

In dynamic, multimodal interfaces a mix of symbolic and representational media types such as images, written text, sound, video and animation may co-occur (Skjulstad, 2007; Wood, 2007). These media types might act separately or interactively in order to ‘speak’ to us and motivate our active engagement. The interface may be analyzed through multimodal textual analysis as a method of addressing the medley of media types and the mixing of discursive norms and elements that might appear in navimation. This method is informed by the humanities, from hermeneutics, and allows the analysis of design artefacts and their symbolic, connotative and communicative innovation. It provides established means for conducting analyses of experimental designs that are geared towards finding new textual and communicative characteristics.

Such textual analysis allows us to ‘zoom in’ on the interface as a communicative, symbolic space, not only a technical one. We do this through the definition of three core concepts that we have developed through extensive critical use, design and reading of dynamic interfaces as cultural texts and with reference to other research in multimodal discourse analysis of new media texts. We have applied these concepts to a selection of dynamic interfaces that feature navimation on the web as well as to a leading mobile device that also relies heavily on navimation. In terms of integrated design and research methods, we reiterate that this is one part of a wider inquiry that the RECORD project has taken into designing for social engagement (Eikenes, 2009) and also into actual contexts of use.

The four examples are drawn from many years of our own personal, educational and professional web usage, interface design practice, and interface analysis. We have identified more than 150 interfaces with navimational features on the web and on a range of interactive media devices. The easy and free access to a large number of websites through the Internet has led us to focus on web-based interfaces, but we have also included a mobile device in order to demonstrate the relevance of navimation for other platforms and situations of use. We believe our concepts could be applied to other kinds of interfaces and contexts, such as those found in gaming, art installations, or interactive storytelling, although such a level of application is beyond the scope of this article. The terms and concepts we apply are partly drawn from film and animation production (see, e.g., Zettl, 1990). Practice-based manuals in interaction design and motion graphics (e.g., Woolman, 2004) have been especially useful in finding, categorizing and discussing core kinetic features. In addition, the concepts have been informed by discussions following a conference presentation (Morrison & Eikenes, 2008).

Three Key Concepts

The three core concepts we have developed for the multimodal textual analysis of dynamic interfaces are as follows:

- Temporal navigation. This concept denotes how, at a micro level, navigation can be seen as durational and continuous. Navigation becomes topological (Lemke, 2000b), meaning that it is realised by degree rather than discrete options. In contrast, links act as discrete and finite options when navigating traditional hypertext environments (e.g., Aarseth, 1997; Bernstein, 1998), resulting in a discontinuous form of navigation. In dynamic interfaces this discrete selection can be replaced with a continuous activity of manipulating or changing the navigational progression.

- Spatial manipulation. This concept refers to how motion can be used not only to create and enhance a sense of two- or three-dimensional space on a screen, but also to manipulate and even distort this sensation. The screen of an interface can be seen as the viewing frame of a virtual camera (Jones, 2007), with the user looking into a virtual space framed by the screen. We do not see the camera itself, but when we are presented with a virtual environment seen from a certain point of view, we might assume that there is a virtual camera at play. The notion of a virtual camera reflects the way that real film cameras have traditionally been used to frame images in motion, i.e., through zooming, panning or using a dolly (Zettl, 1990). At a different layer, the interface—and its screen—is placed in the context of a real physical space. This context may also affect the way the virtual screen space is perceived, as the users themselves also might be physically moving. Thus, the sensation of space can be manipulated and distorted not only on the screen itself, but also in relation to a physical context.

- Motional transformation. Drawing on Woolman (2004), we see that transformation involves the changing of some inherent feature of an element or elements in the interface over time. These changes might take place in colour, transparency, size, position or shape. This is one of the core features and unique possibilities of animation and motion graphics, in which elements can gradually be transformed. This transformation can be reductive, elaborative, or distortive (Woolman, 2004). Transformations are often a complex mix of these variables, and can be more or less predefined by the interface designer. Related terms that are already in use in the interaction design profession as well as in computer animation and motion graphics are ‘shape transformation,’ ‘transition,’ ‘image morphing’ and ‘tweening’ (in-betweening).
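As an illustrative aside for readers who work with code, the notion of 'tweening' named above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a description of how any of the interfaces analysed in this article are implemented: the `ElementState` type, its four properties, and the choice of plain linear interpolation are all hypothetical and chosen only for clarity.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ElementState:
    """Hypothetical snapshot of an interface element's visual properties."""
    x: float
    y: float
    size: float
    opacity: float


def lerp(start: float, end: float, t: float) -> float:
    """Linearly interpolate between start and end for t in [0, 1]."""
    return start + (end - start) * t


def tween(a: ElementState, b: ElementState, t: float) -> ElementState:
    """Return the in-between state at progress t (0 gives a, 1 gives b)."""
    t = max(0.0, min(1.0, t))  # clamp progress to the valid range
    return ElementState(
        x=lerp(a.x, b.x, t),
        y=lerp(a.y, b.y, t),
        size=lerp(a.size, b.size, t),
        opacity=lerp(a.opacity, b.opacity, t),
    )


def frames(a: ElementState, b: ElementState, steps: int) -> List[ElementState]:
    """Generate steps + 1 evenly spaced states from a to b, inclusive."""
    return [tween(a, b, i / steps) for i in range(steps + 1)]
```

If the progress value `t` is advanced by a timer, the result is a predefined transition; if it is instead driven by a continuous user input such as pointer position, the same function may loosely illustrate navigation realised 'by degree' rather than by discrete selection. That reading is an interpretation offered here, not a claim about any specific system.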

Presentation of Navimation Interfaces

In order to be able to compare and analyse navimation interfaces, it is first necessary to be able to describe them. We now apply the three concepts to four selected examples (in the order best suited for each example), before reflecting on the concepts as they apply across the examples.

A Creative Agency’s Portfolio

In addition to being designed for accessibility and ease of use, interfaces can be designed to communicate recognisable brand values and identities. This is particularly so for design agencies whose creative portfolios include innovations at the level of the interface.

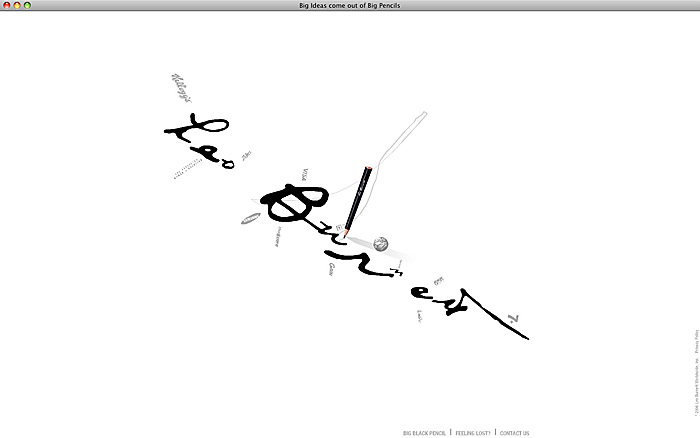

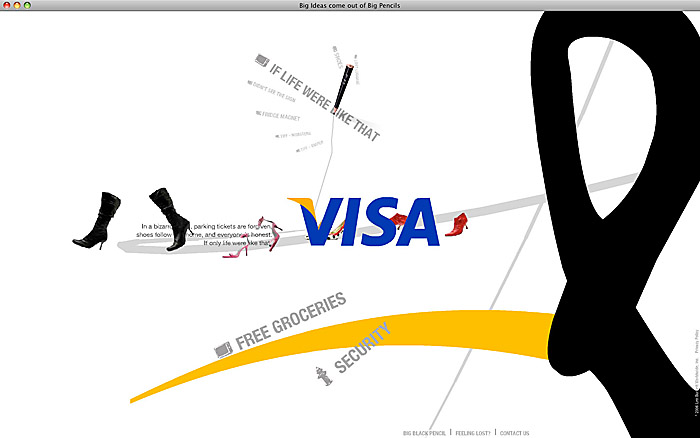

Description: http://www.leoburnett.ca

This is a website for Leo Burnett, a company that describes itself as an “idea-centric” global marketing communication agency, with 96 offices in 84 countries. This Flash-based website is first and foremost a portfolio. It presents a selection of projects conducted for a variety of customers. The majority of the projects are adverts for TV and print. The main navigational idea of the site is to arrange content around the company logo (Figure 3), and from here to allow the user to zoom in on desired areas (Figure 4). New content appears as you zoom into a new area, an approach that allows for deep hierarchies with potentially unlimited layers of information. The usual cursor on the screen has been replaced by an illustrated pencil. As this pointing device is moved, the perspective view of the pencil changes, the letters of the logo move, and lines are drawn as a trail emerging from the movement of the pencil.

Figure 3. When entering a section on the website http://www.leoburnett.ca, the whole environment is rotated before it zooms into the selected section.

Figure 4. A typical section, with sub-sections as well as short animations related to the selected project.

Navimation Applied

Motional transformation. On entering the site, the logo of Leo Burnett (including the pencil) is statically placed in the middle of the screen. Move the mouse pointer over the logo, and the letters break free from each other and float in space, accompanied by the sound of a cymbal. This transformation from a static logo to several separate letters can clearly be seen as an instance of motional transformation. This transformation comes as a surprise, catching our attention by breaking with the conventional, expected appearance of a flat and static interface. This effectively communicates and embodies Leo Burnett’s skills of creatively catching people’s attention through advertising campaigns.

Spatial manipulation. The individual letters are afloat in a simulated three-dimensional space. The construction of this space is supported when the user moves the pencil and finds that the letters move independently, depending on their position. However, this space is not spatially coherent: even if the pencil can interact with letters that are floating in a three-dimensional space, the lines drawn by the pencil are drawn on a continuing two-dimensional layer behind the letters. When the user selects a specific category item, the whole environment of letters and extensions quickly rotates to match the direction of the selected element, before zooming into the chosen element, where new sub-elements are then revealed. At the same time, the spatial environment is discreetly transformed from a three-dimensional environment into a two-dimensional one. In this way, motion is used to manipulate the sense of space by creating a mixed sensation, thereafter transforming the same space. Spatial manipulation is here used to create a spatial environment with its own rules and behaviours, constituting an original screen space that is radically distinct from what is found on the websites of other advertising agencies.

Temporal navigation. The pencil and the letters on the screen move in correspondence with how the user directs the pointer, leaving drawn lines as traces of navigation. This type of navigation is marked as durational and temporal, and the tracing suggests associations with children’s drawings or playful, artistic scribblings. Navigating between the sections or nodes in the hierarchy of the interface is based on selection followed by a transition through motion, and is therefore not an instance of temporal navigation. However, the responsiveness and quick transitions that occur give the impression of an interface that becomes highly dynamic through the process of navigation.

For creative industry players such as Leo Burnett, interfaces and portfolios are strategic tools for communicating with external partners and clients, who in turn are able to actively use these interfaces as semiotic resources in the design of their own digital marketing. In addition, such interfaces can contribute to the building of an internal corporate identity.

A Web Browser Add-on for Exploring Media Content

Some web concerns, such as Flickr, allow external actors to develop new ways of navigating the media content that they provide. As a result, independent actors can create novel interfaces that aggregate media content from different websites and services. Through these interfaces, users are able to explore media content in ways other than the service itself provides.

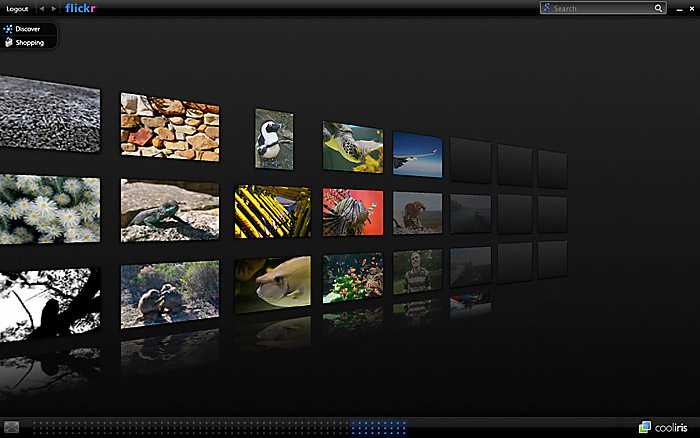

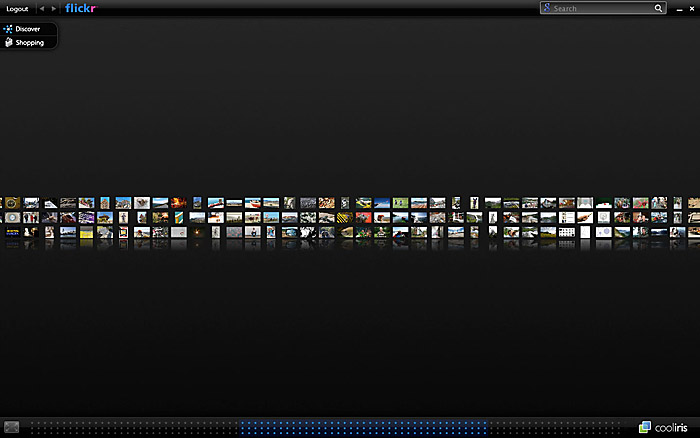

Description: Cooliris Plugin

Cooliris (http://www.cooliris.com) is a software add-on for web browsers such as Firefox, Internet Explorer and Safari (and is also available for the Apple iPhone). When installed, this software provides a new interface for exploring and watching video and image streams from sites such as www.flickr.com, www.youtube.com, and images.google.com, as well as media files located on one’s own computer. A symbol on an image in the browser window indicates that it belongs to a stream that can be viewed using Cooliris. Clicking on this symbol, or on the Cooliris one in the browser, brings the Cooliris interface to the fore; it may fill the whole screen (Figures 5, 6 and 7).

Figure 5. Cooliris (http://www.cooliris.com) in full screen. New images are loaded as the user navigates along the wall. (All photos from Flickr: http://www.flickr.com/photos/jonolave.)

Figure 6. The camera is placed at a distance from the wall.

Figure 7. The camera is placed here close to the wall, allowing the selected image to be brought forward.

This interface is typically controlled with the mouse and keyboard. The main navigational idea is to be able to arrange images on a vertical plane in a virtual three-dimensional environment, and to allow the user to browse this ‘wall’ horizontally. There are by default three potentially endless rows of images on the wall. New images are loaded as the user navigates further along the wall (Figure 5). Clicking on an image causes the virtual camera to close in on that specific image, as well as bring the image slightly forward, closer to the camera (Figure 7). The scroll wheel on the mouse or on a touch pad can be used for zooming in and out, while the arrow keys on the keyboard can be used for moving between images one by one. A scrollbar is also provided at the bottom of the screen.
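The wall arrangement described above can be made concrete with a minimal sketch. It is an illustration only, not a description of how Cooliris is actually implemented: the column-by-column fill order, the one-unit column width, and the names `slot_for` and `visible_indices` are all assumptions introduced here.

```python
import math
from dataclasses import dataclass

ROWS = 3  # the description above mentions three rows by default


@dataclass(frozen=True)
class Slot:
    index: int   # position of the image in the media stream
    column: int  # horizontal position on the wall
    row: int     # 0 is the top row


def slot_for(index: int, rows: int = ROWS) -> Slot:
    """Place the n-th image on the wall, filling column by column (assumed order)."""
    return Slot(index=index, column=index // rows, row=index % rows)


def visible_indices(camera_x: float, view_width: float, rows: int = ROWS) -> range:
    """Stream indices whose columns overlap the camera's horizontal view.

    Columns are assumed to be one unit wide, so column c covers [c, c + 1).
    Only these images would need to be loaded; the rest can stay as placeholders.
    """
    first_col = max(0, math.floor(camera_x - view_width / 2))
    last_col = max(first_col, math.floor(camera_x + view_width / 2))
    return range(first_col * rows, (last_col + 1) * rows)
```

Under these assumptions, moving the camera along the wall simply shifts the range of indices that must be loaded, which is one way of reading the 'potentially endless' rows of images.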

Navimation Applied

Spatial manipulation. The wall of images is flat and two-dimensional. However, when navigating along the wall, the virtual camera moves in three dimensions, thus giving the impression of a three-dimensional space, inviting the user to explore a cinematic and immersive environment. The camera can move close to the wall (Figure 7) or away from the wall (Figure 6). This movement can be controlled via a touch pad or the scroll wheel on a mouse. The act of moving the pointing device and seeing the resulting movement on the screen implies that the user is controlling the camera. However, when using the touch pad to scroll horizontally (Figure 5), the direction is reversed: the user appears to be controlling the wall, not the camera. This is somewhat inconsistent. Further, double-clicking an image results in it becoming aligned with the middle of the screen: it scales to optimal size, and the wall disappears into the background. Through this fading transition, the spatial metaphor is manipulated and broken as the interface enters a different spatial viewing mode.

Temporal navigation. Users can continuously navigate along the wall by clicking and dragging the pointing device. In this way, they can decide in which direction and at which speed to move. Thus, the navigation is durational and temporal. The visual composition and temporal navigation give the impression of moving along an endless stream of media content. If a user selects an image by clicking on it, the virtual camera moves automatically along the wall. This is a transition, not something controlled by the user, and is therefore not an instance of temporal navigation.

Motional transformation. When entering the Cooliris environment, the screen transforms from a traditional web page, via a black screen, into a new spatial environment through a fade-in transition. Thus, the whole screen space may be said to be transformed through motion. A similar transformation takes place when a user clicks on an image, resulting in it opening to full screen. Here, the other images fade out while the scrollbar at the bottom of the page is transformed through motion. When navigating along the wall of images, simple boxes in gradient colour serve as placeholders for images before they are loaded (Figure 5). Each time one of these boxes fades into an image, this process can be described as motional transformation.

This interface can gather media content from a variety of external sources, including videos from YouTube and amateur photographs from Flickr. The content is brought into the interface and presented in a consistent way, without much of the original metadata associated with the content. Importantly, Cooliris can be seen as a ‘meta-interface’ that supersedes existing discrete service interfaces by creating a new immersive virtual environment that allows users to access media content in a more direct, efficient, dynamic and engaging way.

Browsing Music on a Mobile Device

Digital devices are increasingly becoming smaller, more portable and more powerful. Further technological improvements have included better screen resolution, faster processing and storage, and, more recently, haptic and motional features. For mobile devices, we are seeing a growth in content designed specially for small screens on which dynamic information is served to us on the move. One area in which this is most obvious is that of music. Innovations in personal music players have now moved over to mobile devices and ‘smart phones’ with multiple functions in which interfaces are becoming important in representing and providing access to a variety of media.

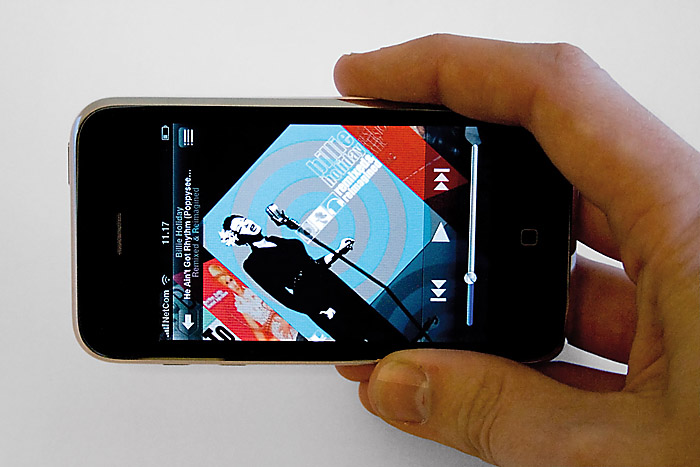

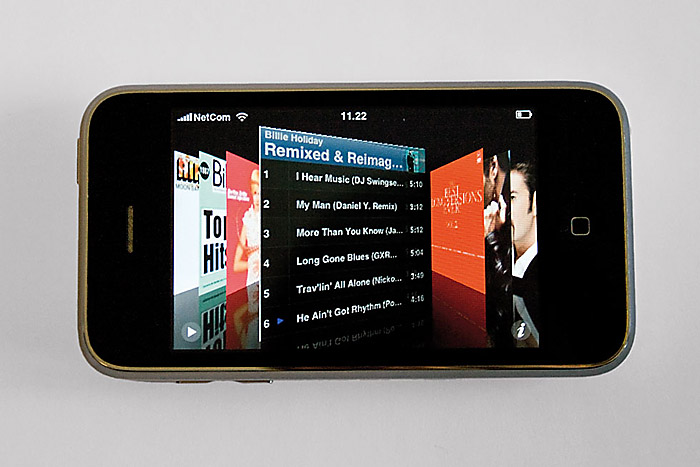

Description: iPhone Cover Flow

The Apple iPhone is a handheld device that is a mobile phone, a web browser, and a music player. Cover Flow is an integrated interface on the iPhone used for navigating the music library on the device by browsing cover artworks (Figures 8, 9 and 10). This interface appears in a similar way on iTunes and Finder on Apple computers. In the iPod mode of the phone, the main navigational idea of this interface is to arrange album covers along a line in a three-dimensional space. The user enters the Cover Flow interface by tilting the phone from vertical to horizontal mode. The iPhone has a sensitive screen that allows for multi-touch interaction. In Cover Flow, only one finger is used. To navigate the line, a finger is used on the touch-screen to ‘push’ the covers to the left or right. A selected album is presented in the middle of the screen, while the remaining albums are presented in perspective views on the left and right, partially hidden behind each other (Figure 9). To play the album, the user first taps on the cover, causing it to rotate and display the songs of the album on the reverse side of the cover artwork (Figure 10). There is a direct mapping (Shneiderman, 1983) between the finger interaction with the screen and the behaviour of the interface. Interface elements such as cover artwork display to some degree a kind of real-world physical behaviour, i.e., when the finger strokes the surface of the screen the underlying cover is pushed across the screen.

Figure 8. Rotation is used as a transition when entering the Cover Flow interface.

Figure 9. A finger is used on the touch-screen to browse album covers.

Figure 10. An album cover is rotated to show the list of songs on a particular album.

Navimation Applied

Spatial manipulation. When the user enters Cover Flow by physically rotating the device, the selected artwork rotates correspondingly (Figure 8) before it appears along a line of covers in a three-dimensional space (Figure 9). The physical and spatial rotation activity is thereby reflected in the virtual environment, assuming a common gravity. However, there is no one-to-one mapping between the physical and virtual space. First, there is a short delay before the virtual rotation takes place. Second, while the phone may be rotated freely, the virtual environment snaps automatically to a position parallel to the four sides of the device itself. In this way, the sensation of space created by the relationship between the real and the virtual space is manipulated rather than immediately given. The kinetic response is both surprising and satisfying. When navigating along the line of covers, we perceive the covers as moving and the interface camera as standing still, since we are holding the device still in our hand. As the virtual environment can be manipulated this directly with our fingers on the screen, any division between real space and the screen space is blurred or diminished.
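The snapping behaviour noted above, in which a freely rotated device yields a virtual environment aligned with one of its four sides, can be illustrated with a small sketch. This is a simplification under stated assumptions: the function name `snap_orientation` is hypothetical, the input is taken to be a single rotation angle in degrees, and the short delay mentioned in the text is ignored. It does not claim to reproduce the iPhone's actual sensor handling.

```python
import math


def snap_orientation(angle_degrees: float) -> int:
    """Snap a free physical rotation to the nearest of 0, 90, 180 or 270 degrees.

    Angles exactly halfway between two orientations are rounded upwards
    (an arbitrary choice made for this sketch).
    """
    quarter_turns = math.floor((angle_degrees % 360) / 90.0 + 0.5)
    return (quarter_turns % 4) * 90
```

The many-to-one mapping is the point of the sketch: a continuous range of physical angles collapses onto four virtual orientations, which is why the relationship between physical and virtual space is not one-to-one.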

Temporal navigation. When the user pushes the album covers sideways, navigation is continuous. In this case, the speed and direction of navigation is fully controlled by the user, within the limits of the interface. However, if a user selects an album cover by tapping on it, the interface itself takes control of the navigation through a transition and moves to the selected album. Depending on how a user chooses to navigate, the navigation may be said to be temporal or not.
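The distinction drawn above between user-controlled navigation and an interface-controlled transition can be sketched as two different update rules. Both functions are hypothetical illustrations: the names `drag_step` and `transition`, the use of a single position value along the row of covers, and the smoothstep easing curve are assumptions, not details taken from Cover Flow itself.

```python
from typing import List


def drag_step(position: float, finger_delta: float, n_covers: int) -> float:
    """Temporal navigation: the position follows the finger at every step.

    Pushing the covers to the right (positive delta) moves towards earlier
    covers; the result is clamped to the limits of the row.
    """
    return min(max(position - finger_delta, 0.0), float(n_covers - 1))


def transition(start: float, target: float, steps: int) -> List[float]:
    """Transition: once a cover is tapped, the interface decides every frame.

    The user supplies only the target; speed and pacing come from the
    easing curve (smoothstep is assumed here).
    """
    positions = []
    for i in range(1, steps + 1):
        t = i / steps
        eased = t * t * (3 - 2 * t)
        positions.append(start + (target - start) * eased)
    return positions
```

In the first function the user contributes input at every step, which corresponds to temporal navigation as the term is used here. In the second, the user's input ends with the selection, and the intermediate positions are authored by the interface.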

Motional transformation. When the user opens Cover Flow by tilting the phone, the entire screen space is transformed through rotation and fade. Another instance of motional transformation can be seen when tapping on a cover. The cover artwork rotates centrally around a vertical axis, displaying the reverse side of the artwork (Figure 10), which shows a list of songs from the given album. As a result, the album cover transforms into a different interface element through rotation, thereby supporting the metaphor of the album image being a physical object such as a CD or an LP.

The Apple iPhone represents an important change in the way we can interact with digital interfaces. Sophisticated graphical interfaces are no longer only part of stationary PCs and laptops; they are moving out into a range of different contexts in the world.

Reflections Across the Examples

We now consider the three concepts across the selected examples.

Temporal Navigation

Navimational interfaces often allow the possibility of navigating continuously over time. Temporal navigation denotes how, at a micro level, navigation may be seen as a durational, continuous and topological activity. Above we have seen this kind of navigation realised in two different ways: by changing the perspective, and by navigating along one or more spatial dimensions.

First, on the Leo Burnett website, temporal navigation is realised by slightly shifting visual elements in response to user interaction, resulting in a change in perspective that creates a sensation of depth, in a way similar to film (Zettl, 1990). Such subtle movements can drastically affect the sensation of spatial depth, and thereby allow for new spatial compositions. Second, the iPhone Cover Flow and Cooliris interfaces both allow navigation along specific spatial dimensions. The iPhone images are explored by moving the row of images horizontally, while the wall of images in Cooliris is explored by moving horizontally and by zooming in and out. This is in contrast to traditional web design, in which navigation is normally carried out by selecting hypertext links or buttons, or by moving vertically up and down a ‘flat’ web page. Further, in these examples, the user is often provided with other navigational alternatives when temporal navigation is utilised.

The application of temporal navigation challenges the assumption that dynamic information that changes over time, such as animation or video, necessarily leaves the user in an observational and static user mode (Liestøl, 1999, p. 44). Rather, the user becomes what Liestøl (1999, p. 15) calls a secondary author within the dynamic interface. This secondary author may be given a high degree of agency by increasing the possibility of continuously changing the course of events in the interface. Temporal navigation can provide new possibilities for navigation and narrative composition with dynamic features in the interface. This applies to designers as well as storytellers and other information providers in a range of fields, and increasingly in social media environments. However, the concept could lose its analytical power if applied to physical environments or virtual reality environments that mimic the real world as navigation here is always temporally continuous.

A further direction for research could be to investigate how different interaction techniques and physical devices affect temporal navigation, and to apply the concept in diverse design contexts. How can we, for example, combine full-body interaction with temporal navigation, or combine the traits of hypertext with temporal navigation, or use continuous navigation as a way of visualising and exploring information?

Spatial Manipulation

Spatial manipulation refers to how the use of motion can both create and manipulate the sensation of space in a screen interface, for example by mixing representations of two-dimensional and three-dimensional space. In the examples above, spatial manipulation is used to constitute a space that would be impossible to realise in the physical world. Further, the notion of a virtual camera becomes important. We identified two layers of interface spatiality that can be combined in various ways.

The Leo Burnett website showed that the sensation of space can be inconsistent at a given time, and can change with time, thereby constituting a virtual environment that would be impossible to recreate in the physical world. This is distinct from virtual reality interfaces and from most digital modelling software, which generally try to imitate physical reality through a coherent spatial interface (Power, 2009). The Cooliris interface makes us aware of the notion and importance of a virtual camera through which the interface environment and screen space are framed, as described by Jones (2007) for computer-generated animation. This virtual camera is partly controlled by the user and creates a sensation of three-dimensional space. However, this sensation is distorted as soon as the user opens an image in full screen. Further, the notion of the virtual camera draws attention to the differences involved in controlling the virtual camera versus the content of the screen. It is important for designers to be aware of this.

The iPhone takes spatial manipulation further when two layers of spatiality come to the fore: first, we have the screened spatialization that is created through the screen, and, second, the spatial, physical, and tangible interaction that takes place between the user and the device. These layers are highly interconnected, affecting and extending one another. The relationship can be direct, i.e. through having a shared sense of gravity, or it can be manipulated in different ways, thereby producing a more complex sensation of space in and through the interface. This example points to the need for studying how we might physically interact with navimation interfaces, including greater attention to haptic qualities and input techniques such as gestures.

We argue that spatial manipulation offers new possibilities for interface design and analysis. For designers, there is still much work to be done in combining and integrating screens with the physical world, as recent developments in mobile augmented reality technology have shown. Motion graphics could be one source of inspiration for such developments. Yet, as an analytical concept, spatial manipulation does not necessarily have to be limited to screens. For example, how might the sensation of space be manipulated through the movement of objects or by mixing diverse representation techniques in the physical world?

Motional Transformation

We use motional transformation to refer to how an inherent feature of one or several visual elements can gradually change over time. In the examples above, transformative motion is used to change visual elements into new entities, provide smooth transitions, and reveal more information about an element.

The Leo Burnett site shows how motional transformation can alter and break apart a visual element into new separate entities that constitute objects for further navigation. This transformation has signification in itself. In this specific case, the unexpected visual transformation seems to represent the conglomerate of projects and ideas that constitute the company itself. This can relate to what Eisenstein (1988) called ‘plasmatic’ and ‘metamorphose’ when describing the traits of animation, and to Chang and Ungar’s (1993) argument that interface design can learn from cartoon animation, in which objects are ‘solid’—they move about as three-dimensional, real things, reacting to external forces, as though they have mass and are influenced by inertia.

Cooliris demonstrates how subtle transformations can have an important communicational role. Means of fading, moving and resizing visual elements and even the whole screen space, act as transitions that create a sensation of seamless navigation between the different parts of the interface. Here, reference could be made to the notion of temporal continuity in ‘continuity editing’ in film making, in which the purpose is to establish a logical coherence between ‘shots’ (Zettl, 1990; Liestøl, 1999, p. 43). In interface design, the aim is often to provide continuity in navigating between different parts of an interface, and in such situations motional transformation can be used in different ways to establish pace and rhythm, drawing on different visual styles, and thereby resulting in different meanings. The iPhone Cover Flow shows a different use of motional transformation: to reveal more information about an object. Here, this is achieved through a rotational transition that supports a specific metaphor.

We argue that motional transformations can have especially rich meaning potential since a large number of visual elements can be presented and transformed in a short span of time, and even more so if combined with interaction. However, this also raises questions of ‘motion literacy’ and how much visual information different people are able to perceive in a short time. As Jenkins (2006) argues, this also relates to different genres of visual representation in popular culture and their continuous development.

As an analytical concept, motional transformation risks becoming too general when applied to the whole screen space. The screen is itself a visual element in which new elements are embedded and composed inside each other. The concept seems to be most powerful when applied to visual elements that are easy to identify as independent entities in a larger composition. The notion of transformation may also be problematic: how much change is required to call something a transformation?

Conclusions

We have argued and shown that the interface is not just a flat layer between a user and a computer, but a complex, mediating, cultural artefact. It is one that allows the combination of semiotic resources and mediated features into dynamic compositions (Morrison, in press). As the interface is itself a designed artefact, potential for meaning is built into it by remediating and alluding to other interfaces and conventions as well as to other circulating media types and their wider social and cultural contexts of communication.

In interaction design, the move from object to experience implies a move from spatial form to temporal form (Mazé & Redström, 2005). Taken together, spatial form and temporal form add up to movement through space in time. In interfaces, animation and visual movement are not only composed in the form of embedded videos or animated menus, but as part of the actual interface. They are intertwined with navigational movement in and through information space (Benyon, 2001). As digital hardware and software continue to develop, so do the dynamic features of interfaces. This raises new opportunities and challenges for design concerning the spatial and temporal form of screen spaces.

Complex mediation (Bødker & Andersen, 2005) is realised in the interface by way of these features and properties. For the user, it is through the mix of media, modes and movement that a range of dynamic activities can take place. In turn, dynamic media types are also remediated (Bolter & Grusin, 1999) as users navigate their way through information space. As information continues to proliferate in digital communication, new mediating interfaces as well as analytical concepts are needed for creating understanding of what has been designed textually and rhetorically as well as for providing communicative and cultural resources and potential for meaningful experiences. The concept navimation—the intertwining of visual movement with activities of navigation in screen interfaces—allows us to investigate these complex relations at a textual and mediational level. We have investigated features of navimation by applying three concepts that, as semiotic resources (Kress & van Leeuwen, 2001), might also apply to other domains and design contexts. Further research might investigate, for example, how different interaction techniques and physical devices affect temporal navigation, or how spatial manipulation can be applied outside the screen. Motional transformation has rich meaning potential that yet remains to be utilised, for example in visualising abstract data, a central concern in the field of information visualisation.

This investigation has concentrated on the visual and semiotic aspects of navimation. A number of further questions arise. How can navimation be used in diverse physical contexts and with different types of screening technologies? How might navimation help users understand the structure of a complex communicative system? In what ways does the depiction of navimation interfaces in visual culture, such as in science fiction movies, relate to the interfaces that we use each day, and to the future of interface design?

The conceptual approach and concepts developed here might be applicable to other types of interfaces, such as those found in gaming, installation, public advertising, and interactive storytelling. Similarly, ideas could be taken up, critiqued, applied or modified in other areas, such as film, animation and motion graphics, information visualization, augmented reality, mobile, and 3D interface design. These are domains that are becoming increasingly connected in their use of interfaces as symbolic texts and cultural media as well as in our variously motivated uses and engaged participation. Social semiotics needs to be explored more thoroughly in relation to digital design and to multimodal text analysis of dynamic interfaces.

Navimation and the concepts developed here have been devised and applied as part of a wider practice-based research project. The project continues to investigate designing for social use and engagement. From a critical design practice view, the concept of social navimation has been developed to investigate how features of navimation can be applied in social media (Eikenes, 2009). This also extends to investigations into the roles of navimation in the design of social media applications for popular, contemporary music sharing. Here navimational features and functionalities have been developed and refined through users’ responses. Further, ongoing research is taking this design experience into the context of co-designing navimation for a leading web browser concern. In each of these linked design and analysis components of the project, the concepts we have developed have shown potential as ideational tools for designing. They have also been useful as a vocabulary for analytical discussion. The terms have been successfully introduced in the graduate level teaching of interaction design, alongside the more practical approaches of sketching and prototyping dynamic interfaces.

In these diverse contexts, we see that there is a challenge to develop a more elaborate vocabulary for navimation that can be further adjusted to a more micro level and perhaps conveyed in more accessible language for design education and design practice. There are precedents for this in motion graphics and animation handbooks (e.g., Woolman, 2004). We have shown, however, that design research can generate critical terms in the manner that has been conducted in film and animation studies (e.g., Jones, 2007) and in social semiotics (e.g., Kress & van Leeuwen, 2001). This is a complex undertaking when the texts we study are themselves dynamic, and are used across timescales in a diversity of spatial environments and contexts.

Acknowledgements

For comments, thanks go to: Synne Skjulstad, Palmyre Pierroux, Jay Lemke, the design research seminar group at the Oslo School of Architecture and Design (AHO), the Communication Design Research Group (InterMedia, University of Oslo), the Transactions Research Group (Faculty of Education, University of Oslo), participants at the Multimodality and Learning Conference (2008), and members of the NORDCODE PhD School. Two anonymous reviewers provided most helpful suggestions. This article is a result of the RECORD project financed by the Research Council of Norway, to which AHO is a partner.

End Notes

1. See www.navimationresearch.net

2. The project deals with the relationships among innovations in dynamic interfaces, content mediation and aspects of social networking from within digital interaction and communication design. In the project, interfaces are designed and analysed textually as a means to better understand their meaning potential and how they might be constructed. For the project website, see: www.recordproject.org. The project has undergone different phases and covers multiple areas that involve design experimentation, analysis, and user-based studies. This article is one of a medley of research publications based on this project and it aims to highlight the need for and potential of building a tentative critical vocabulary for both describing and analysing dynamic features in interfaces that can be drawn from research and incorporated into practice and reflection in action (Schön, 1983).

3. The aim of semiotics is to understand how texts and artefacts can communicate meaning through sign systems, including the systems represented by all kinds of media types. Andersen et al. (1993) and Nadin (1988) have used semiotics to analyse the computer as a medium. From a semiotics point of view, people make meaning by using systems of signs. Influenced by psychology, critical linguistics, discourse and cultural studies, social semiotics explains meaning making as a social practice. Semiotic systems are dynamic ones shaped by and developed in specific social and cultural contexts.

4. The notion of the GUI may be extended to include the interface as a communicative text and site for mediated engagement and expression. This situates the interface as a media object that communicates meaning in itself (e.g., Bolter & Grusin, 1999) in a cultural context.

5. One of the issues explored in the field of HCI is the challenge of navigating within large amounts of digital media content, such as images, video and music files (Shneiderman et al., 2006). Typically, the goal has been to reduce the time and effort for the user in finding a specific document or element.

6. Murray (1997) describes navigation as a form of agency that can be pleasurable in itself, in that we can enjoy “orienting ourselves by landmarks, mapping a space mentally to match our experience, and admiring the juxtapositions and changes in perspective that derive from moving through an intricate environment” (p. 129).

7. The term was coined by the urban planner Lynch (1960) to refer to “a consistent use and organization of definite sensory cues from the external environment” (p. 3). The connection between navigation and architecture is evident in the digital world, where the design of digital information spaces such as webpages is often referred to as information architecture (Rosenfeld & Morville, 1998).

References

- Aarseth, E. (1997). Cybertext: Perspectives on ergodic literature. Baltimore, MD: The Johns Hopkins University Press.

- Andersen, P. B., Holmqvist, B., & Jensen, J. (Eds.). (1993). The computer as medium. Cambridge, UK: Cambridge University Press.

- Baecker, R., & Small, I. (1990). Animation at the interface. In B. Laurel (Ed.), The art of human-computer interface design (pp. 251-267). Reading, MA: Addison-Wesley.

- Bagnara, S., & Crampton Smith, G. (Eds.). (2006). Theories and practice in interaction design. Mahwah, NJ: Lawrence Erlbaum.

- Benyon, D. (2001). The new HCI? Navigation of information space. Knowledge-Based Systems, 14(8), 425-430.

- Bernstein, M. (1998). Patterns of hypertext. In Proceedings of the 9th ACM Conference on Hypertext and Hypermedia (pp. 21-29). New York: ACM.

- Binder, T., Löwgren, J., & Malmborg, L. (Eds.). (2009). (Re)searching the digital Bauhaus. London: Springer.

- Blythe, M., Overbeeke, K., Monk, A., & Wright, P. (Eds.). (2003). Funology: From usability to enjoyment. Dordrecht: Kluwer Academic Publishers.

- Bolter, J., & Grusin, R. (1999). Remediation: Understanding new media. Cambridge, MA: The MIT Press.

- Bødker, S., & Andersen, P. B. (2005). Complex mediation. Human-Computer Interaction, 20(4), 353-402.

- Chang, B., & Ungar, D. (1993). Animation: From cartoons to the user interface. In Proceedings of the 6th Annual ACM Symposium on User Interface Software and Technology (pp. 45-55). New York: ACM.

- Crilly, N., Maier, A., & Clarkson, P. (2008). Representing artefacts as media: Modelling the relationship between designer intent and consumer experience. International Journal of Design, 2(3), 15-27.

- Eikenes, J. O. (2009, August 31). Social navimation: Engaging interfaces in social media. Paper presented at the 3rd Nordic Design Research Conference, The Oslo School of Architecture and Design, Oslo, Norway. Retrieved May 15, 2009, from http://ocs.sfu.ca/nordes/index.php/nordes/2009/paper/download/246/133

- Eisenstein, S. (1988). Eisenstein on Disney. (J. Leyda, Ed.). (A. Upchurch, Trans.). London: Methuen.

- Gallagher, R., & Paldy, A. (2007). Exploring motion graphics. Clifton Park, NY: Thomson Delmar Learning.

- Gere, C. (2006). Genealogy of the computer screen. Visual Communication, 5(2), 141-152.

- Jenkins, H. (2006). Convergence culture: Where old and new media collide. New York: New York University Press.

- Jones, M. (2007). Vanishing point: Spatial composition and the virtual camera. Animation, 2(3), 225-243.

- Jones, R., & Norris, S. (2005). Discourse in action: Introducing mediated discourse analysis. London: Routledge.

- Jørgensen, A., & Udsen, L. E. (2005). From calculation to culture: A brief history of the computer as interface. In K. Bruhn Jensen (Ed.), Interface://culture: The World Wide Web as a political resource and aesthetic form (pp. 39-64). Frederiksberg: Samfundslitteratur Press.

- Kress, G., & van Leeuwen, T. (2001). Multimodal discourse: The modes and media of contemporary communication. London: Arnold.

- Krippendorff, K. (1989). On the essential contexts of artifacts or on the proposition that “design is making sense (of things)”. Design Issues, 5(2), 9-39.

- Laurel, B. (1991). Computers as theatre. Reading, MA: Addison-Wesley.

- Lemke, J. (2000a). Across the scales of time: Artifacts, activities, and meanings in ecosocial systems. Mind, Culture, and Activity, 7(4), 273-290.

- Lemke, J. (2000b). Opening up closure: Semiotics across scales. In J. Chandler & G. v. d. Vijver (Eds.), Closure: Emergent organizations and their dynamics. New York: New York Academy of Sciences.

- Lemke, J. (2002). Travels in hypermodality. Visual Communication, 1(3), 299-325.

- Lemke, J. (2005). Multimedia genres and traversals. Folia Linguistica, 39(1-2), 45-56.

- Liestøl, G. (1999). Essays in rhetorics of hypermedia design. Unpublished doctoral dissertation, University of Oslo, Oslo, Norway.

- Liestøl, G. (2003). “Gameplay”: From synthesis to analysis (and vice versa). In G. Liestøl, A. Morrison, & T. Rasmussen (Eds.), Digital media revisited: Theoretical and conceptual innovation in digital domains (pp. 389-413). Cambridge, MA: The MIT Press.

- Löwgren, J. (2007). Pliability as an experiential quality: Exploring the aesthetics of interaction design. Artifact, 1(2), 85-95.

- Löwgren, J., & Stolterman, E. (2004). Thoughtful interaction design: A design perspective on information technology. Cambridge, MA: The MIT Press.

- Lunenfeld, P. (1999). The digital dialectic: New essays on new media. Cambridge, MA: The MIT Press.

- Lynch, K. (1960). The image of the city. Cambridge, MA: The MIT Press.

- Manovich, L. (2001). The language of new media. Cambridge, MA: The MIT Press.

- Manovich, L. (2007). After Effects, or velvet revolution. Artifact, 1(2), 67-75.

- Mazé, R. (2007). Occupying time: Design, technology, and the form of interaction. Stockholm: Axl Books.

- Mazé, R., & Redström, J. (2005). Form and the computational object. Digital Creativity, 16(1), 7-18.

- Moggridge, B. (2007). Designing interactions. Cambridge, MA: The MIT Press.

- Moran, T. (1981). The command language grammar: A representation for the user interface of interactive computer systems. International Journal of Man-Machine Studies, 15(1), 3-50.

- Morrison, A. (Ed.). (in press). Inside multimodal composition. Cresskill, NJ: Hampton Press.

- Morrison, A., & Eikenes, J. O. (2008, June 19-20). The times are a-changing in the interface. Paper presented at the International Conference on Multimodality and Learning. Institute of Education, University of London, London.

- Morrison, A., Stuedahl, D., Mörtberg, C., Wagner, I., Bratteteig, T., & Liestøl, G. (in press). Analytical perspectives. In I. Wagner, T. Bratteteig, & D. Stuedahl (Eds.), Exploring digital design. Vienna: Springer.

- Murray, J. (1997). Hamlet on the holodeck: The future of narrative in cyberspace. New York: The Free Press.

- Nadin, M. (1988). Interface design: A semiotic paradigm. Semiotica, 69(3/4), 269-302.

- O’Neill, S. (2008). Interactive media: The semiotics of embodied interaction. London: Springer.

- Pilling, J. (Ed.). (1997). A reader in animation studies. Sydney: John Libbey.

- Power, P. (2009). Animated expressions: Expressive style in 3D computer graphic narrative animation. Animation, 4(2), 107-129.

- Redström, J. (2001). Designing everyday computational things. Unpublished doctoral dissertation, Göteborg University, Göteborg, Sweden.

- Rosenfeld, L., & Morville, P. (1998). Information architecture for the World Wide Web. Sebastopol, CA: O’Reilly.

- Royce, T. (2006). Intersemiotic complementarity: A framework for multimodal discourse analysis. In T. Royce & W. Bowcher (Eds.), New directions in the analysis of multimodal discourse (pp. 63-109). London: Routledge.

- Schön, D. (1983). The reflective practitioner: How professionals think in action. New York: Basic Books.

- Shneiderman, B. (1983). Direct manipulation: A step beyond programming languages. IEEE Computer, 16(8), 57-69.

- Shneiderman, B., Bederson, B., & Drucker, S. (2006). Find that photo!: Interface strategies to annotate, browse, and share. Communications of the ACM, 49(4), 69-71.

- Skjulstad, S. (2007). Communication design and motion graphics on the Web. Journal of Media Practice, 8(3), 359-378.

- Skjulstad, S. (in press). What are these? Designers’ websites as communication design. In A. Morrison (Ed.), Inside multimodal composition. Cresskill, NJ: Hampton Press.

- Skjulstad, S., & Morrison, A. (2005). Movement in the interface. Computers and Composition, 22(4), 413-433.

- Spence, R. (2007). Information visualization: Design for interaction. Harlow: Pearson Prentice Hall.

- Thomas, B., & Calder, P. (2001). Applying cartoon animation techniques to graphical user interfaces. ACM Transactions on Computer-Human Interaction, 8(3), 198-222.

- van Leeuwen, T. (2005). Introducing social semiotics. London: Routledge.

- Verhoeff, N. (2008). Screens of navigation: From taking a ride to making the ride. Refractory: A Journal of Entertainment Media, 12. Retrieved April 20, 2009, from http://blogs.arts.unimelb.edu.au/refractory/2008/03/06/screens-of-navigation-from-taking-a-ride-to-making-the-ride/

- Vihma, S. (1995). Products as representations: A semiotic and aesthetic study of design products. Helsinki: University of Art and Design Helsinki (UIAH).

- Vygotsky, L. (1978). Mind in society: The development of higher psychological processes. Cambridge, MA: Harvard University Press.

- Wartofsky, M. (1979). Models: Representation and the scientific understanding. Dordrecht, the Netherlands: Reidel Publishing.

- Wood, A. (2007). Digital encounters. London: Routledge.

- Woolman, M. (2004). Motion design: Moving graphics for television, music video, cinema, and digital interfaces. Mies, Switzerland: RotoVision.

- Zettl, H. (1990). Sight, sound, motion: Applied media aesthetics (2nd ed.). Belmont, CA: Wadsworth.