The Story of Beau: Exploring the Potential of Generative Diaries in Shaping Social Perceptions of Robots

Hyungjun Cho * and Tek-Jin Nam

Department of Industrial Design, KAIST, Daejeon, Republic of Korea

The increasing prevalence of robots in our daily lives underscores the growing importance of how humans socially perceive them. While these social perceptions of robots are crucial to the success of human-robot interactions, more than traditional design attributes such as appearance and behavior may be required to draw intended perceptions. We explore a new approach to shaping social perceptions of robots using generative diaries. Generative diaries are automatically generated text entries that enable robots to communicate their experiences and perspectives to humans. We present and assess a case in which this was used to influence social perceptions of a public service robot named Beau. We designed a set of research prototypes, including Beau, a curation interface called HeyBeau, and a social media-like mobile application named IamBeau. Over 16 days, we deployed Beau in a university building and conducted an in-situ field study with 12 participants. Our findings indicate that generative diaries can enhance social perceptions of robots, particularly regarding warmth, by increasing awareness of robots’ intelligence, consciousness, emotion, identity, and desire for social communication. These findings demonstrate the potential of generative diaries in shaping social perceptions of robots and open up new possibilities for designing future human-robot interactions.

Keywords – Generative Diaries, Social Perceptions of Robots, Natural Language Generation, Research through Design (RtD).

Relevance to Design Practice – This research provides insights into the potential of using generative diaries as a new means to shape the social perception of robots in interaction design. This approach offers a promising method for designers seeking to create more affective and engaging human-robot interactions.

Citation: Cho, H., & Nam, T.-J. (2023). The story of Beau: Exploring the potential of generative diaries in shaping social perceptions of robots. International Journal of Design, 17(1), 1-15. https://doi.org/10.57698/v17i1.01

Received April 26, 2022; Accepted April 5, 2023; Published April 30, 2023.

Copyright: © 2023 Cho & Nam. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content is open-accessed and allowed to be shared and adapted by the Creative Commons Attribution 4.0 International (CC BY 4.0) License.

*Corresponding Author: lolo660@kaist.ac.kr

Hyungjun Cho is pursuing his Ph.D. in Industrial Design at KAIST, where he earned both his B.S. and M.S. degrees. His research is focused on exploring human-nonhuman interactions in the context of the posthuman era, specifically examining the influence of artificial intelligence (AI). He seeks to create innovative artifacts and utilize them to speculate future experiences that shed light on the evolving dynamics between humans and nonhuman entities.

Tek-Jin Nam is a professor and the director of the Co.Design:Inter.action Design Research (CIDR) Lab at KAIST, Korea. He received a B.S. and M.S. in Industrial Design from KAIST and a Ph.D. in Design from Brunel University. He is the deputy editor-in-chief of the Archives of Design Research journal, the Secretary General of IASDR (International Association of Societies of Design Research), and an International Advisory Committee Member of DRS (Design Research Society). His research interests lie in design-oriented human-computer interaction, focusing on creating people-centric values of future products and services and systematic approaches to creative design and innovation. He is also interested in practice-led, practice-oriented design research.

Introduction

People expect to interact with robots in a social manner (Forlizzi & DiSalvo, 2006) rather than treating them as mere tools or things (Darling, 2016). In this context, a robot’s ability to engage with and provide satisfactory experiences to people relies not only on its functional excellence but also on its social perception, which can significantly impact its acceptance and use in various settings (Breazeal, 2003; Leite et al., 2013; Young et al., 2009). For instance, it is unsurprising that people prefer robots that display empathy, are friendly and warm, and seem competent (Lee et al., 2012; Kaipainen et al., 2018; Kanda et al., 2009; Van Doorn et al., 2017). Nowadays, the design challenge of how individuals perceive robots socially (Breazeal, 2004; Fong et al., 2003) is becoming even more pronounced as robots are increasingly deployed in everyday settings.

While many studies have proposed the social abilities and behaviors that robots need to exhibit for successful human-robot interaction (e.g., Lee et al., 2012; Leite et al., 2014; Reig et al., 2021), designers still encounter difficulties accurately portraying a robot’s social perception. The existing factors considered in social robot design, such as appearance, gaze, expression, and gesture, tend to be passively utilized to avoid conflicting with pre-perceived social roles (DiSalvo et al., 2002; Pandey & Gelin, 2018). Additionally, these physical attributes have limitations in conveying thoughts, feelings, and cognitions, which are expected as social aptitudes by people (De Graaf et al., 2015). Although some studies have utilized voice interaction in their experiential prototype (Ahmad et al., 2017; Lee et al., 2012), there are still technical limitations in consistently perceiving the robot as a social presence in terms of context awareness.

To address this research gap, we explore using generative diaries as a new means to influence the social perception of robots. Generative diaries are automatically-generated stories presented in diary form, written from a robot’s perspective and based on contextual information collected from the robot. Inspired by people’s use of social media (Burke et al., 2010), we suggest that generative diaries can be used for social interaction. We expected that designers could create non-physical traits, such as the robot’s character, to evoke intended social perceptions by intervening in the content or style of the text. Furthermore, recent advancements in language models offer the potential for complete automation of generative diaries’ use in interaction. Our central research question is whether and how the use of generative diaries can effectively influence a robot’s social perception in a designed interaction.

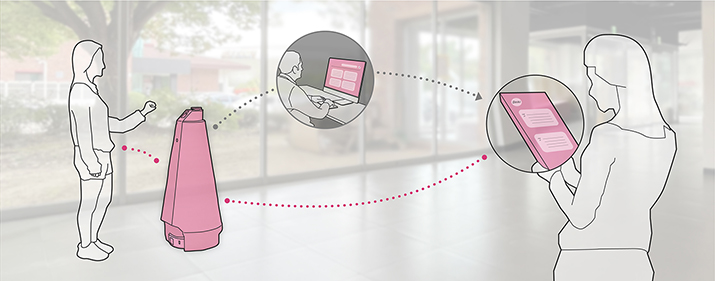

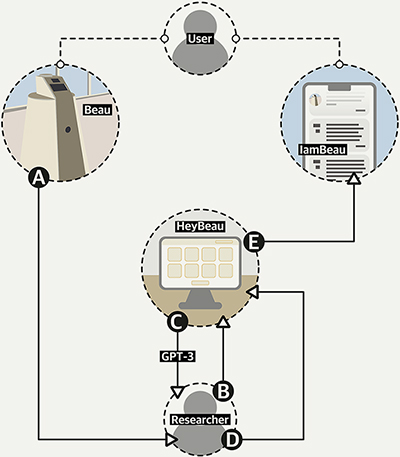

In this study, we designed and implemented a series of research prototypes to demonstrate the experience of interacting with a robot using generative diaries and to examine their effect on the social perception of robots. The prototypes consist of three components: Beau, HeyBeau, and IamBeau (Figure 1). Beau is a public robot that checks the body temperature of visitors entering a building and shares generative diaries with users. It was heavily used during the study period due to the COVID-19 pandemic. HeyBeau is a curation system for a valid and effective user study. It enables researchers to select appropriate topics from the data collected by Beau and to review automatically generated diary entries for contextual errors and ethical issues before being published to users. Finally, IamBeau is a social media-style web application that allows people to view the entries of generative diaries. These three elements were carefully designed considering various issues, such as appearance, a realistic study context, and data collection that avoids invading privacy.

Figure 1. A research prototype system that implements the experience of a robot sharing generative diaries.

We deployed Beau in the lobby of a university building for 16 days and conducted an in-field user study with 12 participants. We periodically asked all participants to complete RoSAS questionnaires of 18 items to measure their social perceptions of Beau. We also conducted semi-structured interviews on their experiences after the deployment period ended. Our findings indicate that generative diaries can enhance social perceptions of robots, particularly warmth, by increasing awareness of robots’ intelligence, consciousness, emotion, identity, and desire for social communication. Additionally, we discuss several insights into the opportunities and challenges of generative diaries as a new means for designers to shape the social perception of robots. With this study, we aim to contribute to the ongoing discussion in social robotics and provide insights into designing more engaging human-robot social interactions using generative diaries as a new means of design.

Related Works

Designing for Social Perception of Robots

People expect to interact with robots socially (Forlizzi & DiSalvo, 2006), and as a result, designers are required to treat robots as social actors (Lupetti et al., 2019). To meet this demand, many researchers have studied design attributes that enhance the social capabilities of robots and how to design them (Baraka et al., 2020; Breazeal, 2003; Fong et al., 2003). The appearance of the robot sets user expectations; robots with anthropomorphized appearances are expected to perform human-like tasks and behavior (DiSalvo et al., 2002), while robots that mimic animals can provide an emotional experience but are limited to the role of companion animals (Fong et al., 2003). As the appearance of a robot can sometimes cause over-expectations (Dautenhahn, 2004), the design needs to consider the application domain, function, behavioral and social capabilities (Leite et al., 2013). In addition, as robots are required to communicate like humans (Dautenhahn et al., 2005), various nonverbal communication methods have also been explored to enhance the sociability of robots through natural interaction. Gestures (Salem et al., 2013), facial expressions (Kalegina et al., 2018), or gazes (Admoni & Scassellati, 2017) were studied as social cues to enrich interaction.

Moreover, several researchers have claimed that there is a need for more sophisticated social abilities for robots. For example, Fong et al. (2003) suggested several human-like characteristics, such as expressing and/or perceiving emotions, exhibiting distinctive personality or character, and establishing or maintaining social relationships. It has also been revealed that people expect a robot with thoughts, feelings, autonomy, and social awareness (De Graaf et al., 2015). In this respect, various functions that reveal social abilities were investigated. For instance, a robot that recognizes individuals provides an affective experience (Kanda et al., 2009) and can build rapport with users through personalized interaction (Lee et al., 2012). Memory and emotion-based adaptation also help to maintain user engagement (Ahmad et al., 2017). In addition, people prefer robots that keep their physical distance (Joosse et al., 2014) and are satisfied with robots that show empathy (Kaipainen et al., 2018).

However, representing such capabilities solely through appearance and partial physical cues is challenging and can sometimes result in predictable and repetitive interaction with programmed actions, leading to a loss of user interest and social response (Gockley et al., 2005; Kanda et al., 2004). Therefore, there is a need for feasible means to address these issues. Our study examines whether generative diaries can be a tool to shape social perceptions of robots more actively, moving beyond avoiding conflicts with initial expectations. We assess the potential of generative diaries in shaping social perceptions of robots using a case study with a public service robot named Beau.

Stories in Interaction Design

Stories have long been recognized as significantly impacting human cognition and perception and are widely employed in design (Graesser & Ottait, 2014). Various methods for utilizing stories in design include design scenarios (Carroll, 2003), personas (Blomquist & Arvola, 2002), design fiction (Bleeker, 2009; Coulton et al., 2017), and storytelling objects (Odom et al., 2016). One prominent use of stories in design is to enable individuals to adopt novel perspectives and imaginations. For instance, Desjardins and Biggs (2021) illustrated the potential to view home IoT data as living by creating four novels based on the data, which helped them to recognize its complexity and social embeddedness. Similarly, Ambe et al. (2019) identified critical considerations for technology design by eliciting the hidden desires of the elderly through collaborative fiction writing. We anticipated that the role of stories in enabling people to adopt new perspectives and thoughts could extend to users interacting with robots, thereby facilitating an enhanced experience. Although we did not specifically intend to create fiction during the design process, generative diaries could be regarded as a form of fiction that creates a narrative through a series of entries featuring the character of Beau as the protagonist.

Indeed, people engage in social interaction by sharing their stories (Labov & Waletzky, 1997). This allows individuals to construct and express their identity while fostering social relationships (Linde, 1993). A related concept in psychology is self-disclosure, which is considered to be a rewarding and intimate behavior (Tamir & Mitchell, 2012; Waring & Chelune, 1983). In recent years, social media has been particularly influential in facilitating these behaviors (Burke et al., 2010).

Building on this, the idea of nonhuman entities sharing their stories has been explored conceptually. For example, Addicted Toaster (Rebaudengo et al., 2012) illustrates its desire to be used through social media. Furthermore, Kang and Gratch (2011) found that virtual agents appeal more to users when they disclose information about themselves. Additionally, the Valerie project demonstrates that robots’ stories can motivate people to continue interacting with them (Gockley et al., 2005). These studies suggest that sharing one’s story with a product or virtual character can enhance interactions, providing significant inspiration for our research.

Nevertheless, our research is differentiated from the studies mentioned above in two ways. Firstly, we explored specific means of enabling robots to create and share their stories. Specifically, we investigated a possible mode of interaction by utilizing automatically generated diary-like texts. Secondly, we offered insights into users’ responses and awareness of an artificial being that shares its own story. To achieve this, we created a series of research prototypes for deploying robots that shared their diaries and conducted a user study with them.

Designing with the Thing Perspective

Attempts to adopt the thing perspective in design research have become frequent in recent years. For example, Giaccardi et al. (2020) considered everyday objects as co-ethnographers and recorded people’s behaviors and daily lives from the perspective of objects. They also investigated object personas as a method to interpret this more richly (Cila et al., 2015). The Trojan Boxes project attempts to capture and analyze various stages of the global shipping process by putting tilt-triggered cameras in postal parcels (Davoli & Redström, 2014).

Several researchers have brought thing perspective into the design process by encouraging people to participate in conversations while imagining they are objects. For example, Chang et al. (2017) offered an alternative method of interviewing scooters, in which human actors interpreted the viewpoint of the scooter and acted as it. Similarly, Reddy et al. (2021) treated humans as everyday objects capable of speech and conducted interviews. Talking Objects, proposed by Ryöppy (2020), also enables people to experience the emotions and experiences of objects by conversing as an object.

Other approaches give people the opportunity to become objects themselves. In the Catch the Bus project, people role-play autonomous buses (Bedö, 2021). Dörrenbächer et al. (2020) introduced Techno-Mimesis as a design method for experiencing the perspective of objects, arguing that designing objects by imitating humans can hinder the exploration of the social abilities of robots. They also claimed that subjectification ultimately enables an understanding, based on empathizing, of the interrelations and differences between human and non-human actors, which robot designers should actively consider (Dörrenbächer et al., 2022). On the other hand, Nicenboim et al. (2020) invited a voice agent to the conversation to increase understanding of AI system design.

The common thread among these studies is the recognition that experiencing the thing perspective offers a novel viewpoint of people’s experiences, society, and systems and can identify new considerations or values that may be useful for designers. While retaining this view, we extend the thing perspective to users. As demonstrated by examples such as Morse Things (Wakkary et al., 2017), a set of ceramic bowls that communicate with each other through Morse code, and Talking Shoe (YesYesNo Interactive projects, 2013), which attempts to communicate by sending messages from a first-person perspective, it is clear that the thing perspective can provide new experiences not only to designers but also to users. In this study, we investigate what distinctive experiences a robot that reveals its perspective to people through a diary can offer.

Natural Language Generation in Writing and Social Interaction

The development of technology for natural language generation (NLG) has spurred numerous research efforts, resulting in various applications. Many studies have focused on utilizing this technology to support users’ writing activities. For instance, Ghazvininejad et al. (2017) created Hafez, a tool to help write poetry, while Oliveira (2015) developed Tra-la-Lyrics to assist in composing song lyrics in the realm of artistic creation. Buschek et al. (2021) proposed a system to suggest alternative words when composing emails and evaluated user experiences. Ghajargar et al. (2022) conducted two autoethnographic studies to investigate the experience of co-authoring stories with AI, emphasizing the relationship between humans and AI. Furthermore, natural language generation technology is also being explored in producing informational media such as news articles (Van Dalen, 2012) and sports broadcasts (Kanerva et al., 2019) to improve time and cost efficiency.

The studies in this area share a common focus on using NLG as an interactive tool for producing higher-quality text. In contrast, our approach acknowledges the potential for written language to reveal aspects of the author’s personality and character beyond its informational content (Culpeper, 2014). Accordingly, we differentiate our research by emphasizing the social implications of the generated text and seeking to create an experience of communicating with nonhuman entities. While previous studies have explored natural language generation from a similar perspective, particularly in the context of chatbots (e.g., Fang et al., 2018), few have examined the impact of physical embodiment. Our research investigates whether the robot can be perceived as a social actor by generating first-person sentences and presenting them as part of the robot’s diary.

Study Design

Goal and Method

Our study aimed to investigate whether interaction utilizing generative diaries could influence people’s social perceptions of robots. Specifically, we aimed to explore how generative diaries shape people’s perceptions of robots. To better understand people’s experiences, we designed and implemented a research prototype that could be interacted with in everyday life instead of a lab environment (Odom et al., 2016; Zimmerman et al., 2007). Our research prototype consists of three components: the service robot Beau, which interacts with users; HeyBeau, which supports researchers in curating the entries of generative diaries; and IamBeau, a mobile web application that allows users to view Beau’s diaries.

In the study, we utilized both quantitative and qualitative methods. We administered a survey four times to assess the participants’ social perception of robots and conducted semi-structured interviews to elicit participants’ experiences and thoughts. While we sought statistical evidence of the impact of generative diaries on social perception, our contribution extends beyond this to a qualitative understanding of people’s experiences and the role of generative diaries (Hutchinson et al., 2003).

Research Prototypes

We describe the research prototype system’s design rationales, implementation, and the final design of three elements (i.e., Beau, HeyBeau, and IamBeau).

Beau

Beau is a public service robot that greets visitors at the entrance of a building (Figure 2). Its primary function is to check the temperature of visitors, which has two significant benefits. First, since temperature checks were mandated for all individuals who enter public places during the COVID-19 pandemic, we had an opportunity to observe the experiences of a diverse range of people. Second, the repetitive nature of such temperature-checking interaction allows for sufficient reflection on the user’s experience. We determined that a temperature-checking robot was the most appropriate choice for a research prototype, as opposed to a guidance robot, which only some people would require, or a cleaning robot, which engages with users less frequently.

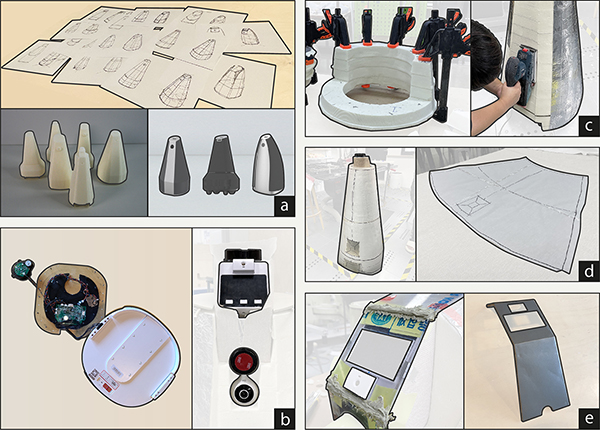

Figure 2. The design of Beau.

When designing Beau, we considered previous research on the influence of different modalities on people’s perceptions and the potential for unexpected reactions. In addition, we aimed to avoid confounding factors affecting our results, such as participants attributing certain personality traits or capabilities to the robot based on its appearance or behavior (e.g., Kalegina et al., 2018; McGinn & Torre, 2019). To this end, we decided to design Beau to interact with users solely through its built-in temperature check device and generative diaries without using other interaction modalities like voice or facial expression that could introduce biases. We also chose an abstract morphology for the robot’s physical appearance, rather than a more anthropomorphic design, to minimize potential adverse reactions (Mori et al., 2012) and exaggerated expectations (DiSalvo et al., 2002). In addition to these design choices, we restricted Beau’s autonomy and movement area to ensure a safe and controlled study environment. This allowed us to minimize potential safety risks for the participants and the robot and maintain a consistent study setting for all participants. By taking these precautions, we aimed to maximize the validity and reliability of our study results and better understand the effects of generative diaries on people’s social perceptions of robots.

For the physical design of Beau, we aimed to create a familiar experience for users to interact with embodied intelligence. To achieve this, we borrowed the Color, Material, and Finishing (CMF) design of smart speakers and placed a camera lens at the center plate to showcase the robot’s vision. We went through an extensive design process, which involved sketching, modeling, and 3D printing, before arriving at the final design (Figure 3a). During the initial design phase, we defined the essential functions and deployment scenarios and customized several commercial products to effectively and reliably implement these functions. We used a smart home camera and a non-contact thermometer to capture event data and temperature checks, respectively (Figure 3b, right). We repurposed the driving unit from a robotic air cleaner to provide controllable mobility (Figure 3b, left). We layered and sanded CNC-machined urethane foam to create a human-scale external structure (Figure 3c). We then finished the surface by wrapping it with fabric (Figure 3d) and coloring an acrylic plate with metallic paint, which we attached to the flat surface (Figure 3e).

Figure 3. Making process of Beau.

HeyBeau

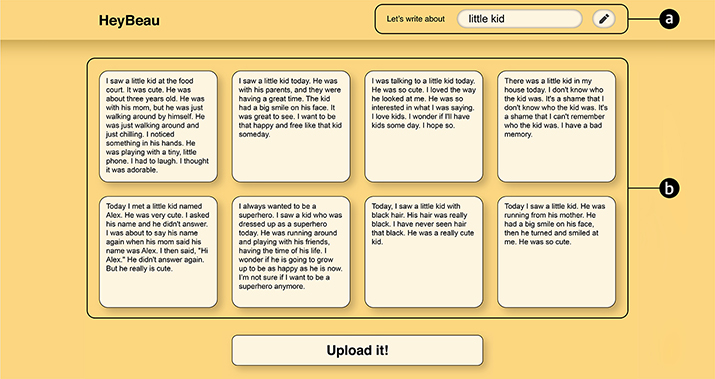

HeyBeau (Figure 4) is a curation system designed for researchers to manage the entries of generative diaries in the study. The system includes a text box for entering diary topics and eight slots that display candidate diary entries. Upon selection by researchers, a chosen entry is automatically posted to IamBeau. Essentially, the interface restricts researchers to two actions: selecting the topic of the diary and reviewing the entries before they are shown to users. HeyBeau thus facilitated the study by enabling manual filtering of contextual errors and ethical issues while preserving the concept of generative diaries, since the diary text itself is still generated automatically. (For more information on our strategy for using HeyBeau in the study, see the section Generation of Diary Entry.)

Figure 4. The interface of HeyBeau: (a) input area for topic keyword and (b) candidates of diaries.

The proportions and colors of graphics were slightly adjusted in the figure for visibility.

We developed HeyBeau as a web application served from a Node.js server with the Express package. The interface communicates with the server through Socket.io as required. To generate text, we used the davinci engine of GPT-3 (Brown et al., 2020), a state-of-the-art language model, through an approved API. A prompt containing a few example pairs of a topic and a diary entry was designed to guide the style of the generated text. When researchers request text generation, HeyBeau sends a request to the server and receives eight candidate texts about the input topic, which are then rendered to the interface. The selected candidate is saved in JSON format on the server, along with the date, and is added as a new entry to IamBeau.
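The few-shot prompt described above can be illustrated with a minimal Python sketch. The paper does not publish its prompt, so the example pairs, the `Topic:`/`Diary:` labels, and the function name `build_prompt` are all assumptions made for illustration, and the call to the language model itself is deliberately left out.

```python
from typing import List, Tuple

# Hypothetical topic/diary pairs. The actual examples used in the study
# are not given in the paper; these only illustrate the structure.
EXAMPLE_PAIRS: List[Tuple[str, str]] = [
    ("rain", "It rained today. I watched the drops on the glass door "
             "and hoped everyone had an umbrella."),
    ("two students", "Two students stopped by this morning. "
                     "I was glad to see them smile."),
]


def build_prompt(examples: List[Tuple[str, str]], topic: str) -> str:
    """Assemble a few-shot prompt: example pairs first, then the new topic.

    The prompt ends with an open 'Diary:' slot so that a language model
    would continue the text in the style of the examples.
    """
    blocks = [f"Topic: {t}\nDiary: {d}" for t, d in examples]
    blocks.append(f"Topic: {topic}\nDiary:")
    return "\n\n".join(blocks)


prompt = build_prompt(EXAMPLE_PAIRS, "five men")
```

In such a setup, the same prompt would be submitted once with a request for eight completions, which would then fill the eight candidate slots of the interface.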

IamBeau

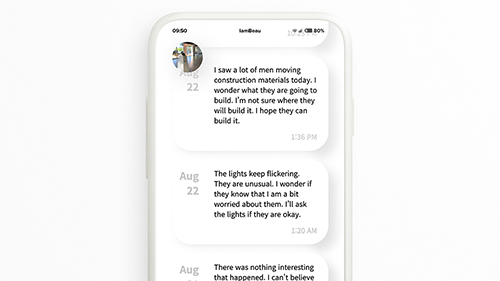

IamBeau is a web application that enables users to access Beau’s generative diaries. To create an experience similar to reading personal writing on mobile devices, we designed IamBeau to have a social media-style mobile interface (see Figure 5). As a result, users can easily read generative diaries via their smartphones. IamBeau runs on the same server as HeyBeau and retrieves the generative diaries from a JSON file on the server, which it then renders on the interface.

Figure 5. The design of IamBeau.

In-Field User Study

Generation of Diary Entry

We have designed a semi-automated process for generating, selecting, and sharing generative diaries for user studies, which involves five steps (Figure 6). This process has been developed to enable researchers to conduct an effective and controlled user study while allowing participants to experience indirect interaction through generative diaries.

Figure 6. The semi-automated generation process of generative diaries.

Step A: While operating, Beau records its daily experiences as video clips triggered by motion and sound events. Researchers can access these videos through their smartphones; to protect privacy during data review, the video quality was intentionally reduced by using a damaged lens.

Step B: Researchers review the recorded data and identify topics for the diaries. To minimize the involvement of human intelligence, only exact words for obviously visible information in the video were used as topics (e.g., five men, rain). This could be automated in the future through computer vision technology. The researcher then inputs the identified topic into HeyBeau.

Step C: HeyBeau generates eight candidate diaries for a given topic using GPT-3. When a request is sent for text generation, a prompt that consists of several pairs of input (topic) and output (diary entry) examples is included to generate diary-formatted text according to the given topic. The examples in the prompt were designed to target more positive interaction experiences, covering personal stories (Kang & Gratch, 2011; Nourbakhsh et al., 1999) in a friendly and upbeat tone (Collins & Miller, 1994).

Step D: Researchers review the candidates and select one diary to be uploaded. Diaries with problematic content or contextual errors are filtered out in this step. It is particularly important to ensure that the entries are not discriminatory or violent. Researchers can select one candidate or generate eight new candidates but cannot edit the generated text or write the diary themselves.

Step E: The selected diary is automatically updated from HeyBeau to IamBeau. Entries are posted chronologically, from top to bottom, and cannot be changed or deleted once posted.
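Steps C to E can be summarized in a short Python sketch of the curation constraints: eight candidates per request, selection or regeneration only, and an append-only feed. The class names, the `placeholder_generate` stand-in for the language-model call, and the date used in the usage lines are hypothetical and are not taken from the authors' Node.js implementation.

```python
import json
from dataclasses import dataclass, field
from datetime import date
from typing import Callable, Dict, List


def placeholder_generate(topic: str, n: int) -> List[str]:
    """Stand-in for the language-model call; returns n labeled dummy texts."""
    return [f"[candidate {i + 1} for topic '{topic}']" for i in range(n)]


@dataclass
class CurationSession:
    """Researcher-side actions: regenerate candidates or select one verbatim."""
    topic: str
    generate: Callable[[str, int], List[str]]
    n_candidates: int = 8
    candidates: List[str] = field(default_factory=list)

    def regenerate(self) -> List[str]:
        # Replace all candidates at once; there is no per-candidate editing.
        self.candidates = self.generate(self.topic, self.n_candidates)
        return self.candidates

    def select(self, index: int) -> str:
        # The chosen text is returned unchanged.
        return self.candidates[index]


class DiaryFeed:
    """Append-only list of posted entries, oldest first."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, str]] = []

    def post(self, text: str, day: date) -> None:
        self._entries.append({"date": day.isoformat(), "text": text})

    def to_json(self) -> str:
        return json.dumps(self._entries, ensure_ascii=False, indent=2)


# Usage with an arbitrary placeholder date.
session = CurationSession("rain", placeholder_generate)
session.regenerate()
feed = DiaryFeed()
feed.post(session.select(2), date(2000, 1, 1))
```

Leaving out any edit or delete method on the feed mirrors the rule that entries cannot be changed once posted.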

Participants

Through an online community, we recruited 12 participants (Mage = 24.17, SD = 1.75) who were frequent visitors to the building in which Beau was deployed. All participants were affiliated with the department as students, graduate students, or researchers. Given their frequent visits to the building, we expected the participants to experience the interactions with Beau as a natural part of their daily lives. We provided the participants with a monetary incentive of approximately $45.

Procedures

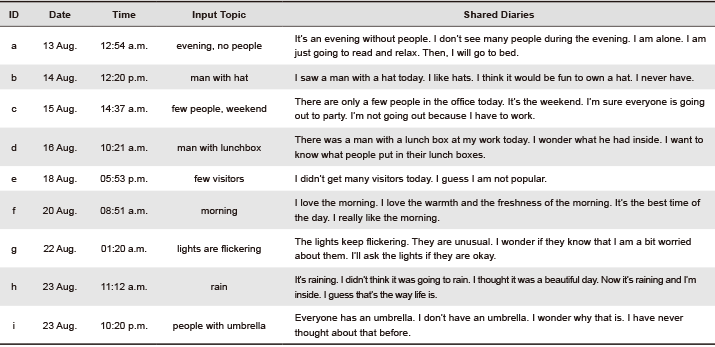

We deployed Beau in the lobby of the university building for 16 days (Figure 7). On the first day of the study, participants were introduced to Beau and instructed to use it instead of the existing temperature check devices during the study period. Participants were also asked to read generative diaries via IamBeau while checking their temperature daily. Throughout the study period, researchers reviewed the data collected by Beau at least twice a day and updated the generative diaries through HeyBeau. A total of 32 generative diaries were shared by the end of the study. Examples are provided in Table 1.

Table 1. Nine examples of generative diaries that were shared with participants.

Figure 7. Deployment of Beau in the user study environment.

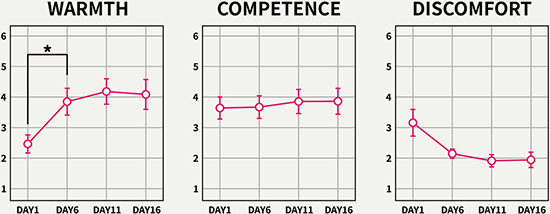

During the study, we measured changes in participants’ awareness of Beau with the Robotic Social Attributes Scale (RoSAS; Carpinella et al., 2017). The choice of RoSAS as a measurement tool was deemed appropriate because it quantifies the user’s perception while considering psychometric properties, whereas other tools evaluate the design of robots. RoSAS comprises three factors, each containing six items. The Warmth factor includes happy, feeling, social, organic, compassionate, and emotional. The Competence factor includes capable, responsive, interactive, reliable, competent, and knowledgeable. The Discomfort factor includes scary, strange, awkward, dangerous, awful, and aggressive. In our study, participants rated each item using a 7-point Likert scale, with responses ranging from definitely not associated (= 1) to definitely associated (= 7).
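As a small illustration of how the 18 item ratings map onto the three factors, the following Python sketch computes one score per factor. Taking the mean of the six items is an assumption made here for illustration; the paper does not state how item ratings were aggregated, and the function name `score_rosas` is hypothetical.

```python
from statistics import mean
from typing import Dict, List

# Factor structure as listed in the text above.
ROSAS_FACTORS: Dict[str, List[str]] = {
    "warmth": ["happy", "feeling", "social", "organic",
               "compassionate", "emotional"],
    "competence": ["capable", "responsive", "interactive", "reliable",
                   "competent", "knowledgeable"],
    "discomfort": ["scary", "strange", "awkward", "dangerous",
                   "awful", "aggressive"],
}


def score_rosas(ratings: Dict[str, int]) -> Dict[str, float]:
    """Return one score per factor from 18 ratings on a 1-7 scale.

    Assumption: a factor score is the mean of its six item ratings.
    """
    for factor_items in ROSAS_FACTORS.values():
        for item in factor_items:
            if item not in ratings:
                raise KeyError(f"missing rating for item: {item}")
            if not 1 <= ratings[item] <= 7:
                raise ValueError(f"rating out of range for item: {item}")
    return {
        factor: mean(ratings[item] for item in items)
        for factor, items in ROSAS_FACTORS.items()
    }


# Hypothetical response: neutral (4) on every item except "happy".
example = {item: 4 for items in ROSAS_FACTORS.values() for item in items}
example["happy"] = 7
scores = score_rosas(example)
```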

Participants were asked to fill out a questionnaire based on their experiences, which was conducted four times (on days 1, 6, 11, and 16), considering that perceptions may change over time. On the first day, the survey was conducted immediately after participants received an explanation about the study before they had read any generative diary entries. Additionally, we randomized the order of the items for each survey to minimize order effects.

Following 16 days of Beau’s deployment, we conducted semi-structured online interviews with participants, lasting approximately 20 minutes. The interviews aimed to gather participants’ experiences and impressions of their interactions with Beau, particularly regarding the generative diaries. Furthermore, we sought to debrief the survey results and gain further insight into how generative diaries influenced their perceptions of robots.

Data Analysis

We derived two types of data by taking both quantitative and qualitative approaches. First, as 12 participants completed the 18-item questionnaire four times, we collected 864 score points (12 participants × 18 items × 4 rounds) as quantitative data. We conducted Friedman’s test to verify significant changes in factor scores across rounds. We then used post-hoc Wilcoxon signed-rank tests to identify between which rounds the scores increased or decreased. Finally, to support the qualitative analysis, each sub-item was analyzed using the same procedure to identify significant changes.
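To make the repeated-measures comparison concrete, the following Python sketch computes the Friedman test statistic for a participants × rounds table of scores. It is an illustration under stated assumptions: the function names and the toy data are hypothetical, and the statistic is the textbook form without the tie correction that statistical packages typically apply, so it is not necessarily the exact computation used in this study.

```python
def average_ranks(values):
    """Rank values in ascending order; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for position in range(start, end + 1):
            ranks[order[position]] = shared_rank
        start = end + 1
    return ranks


def friedman_statistic(scores):
    """Friedman chi-square for n participants (rows) and k rounds (columns), no tie correction."""
    n, k = len(scores), len(scores[0])
    rank_sums = [0.0] * k
    for row in scores:
        for round_index, rank in enumerate(average_ranks(row)):
            rank_sums[round_index] += rank
    return 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)


# Size of the quantitative data set described above.
N_PARTICIPANTS, N_ITEMS, N_ROUNDS = 12, 18, 4
print(N_PARTICIPANTS * N_ITEMS * N_ROUNDS)  # 864 score points

# Hypothetical factor scores: 3 participants x 4 survey rounds.
toy_scores = [
    [3.0, 3.5, 4.0, 4.5],
    [2.5, 3.0, 3.5, 5.0],
    [4.0, 4.5, 5.0, 5.5],
]
print(friedman_statistic(toy_scores))
```

Because every participant in the toy data scores higher in each successive round, the statistic reaches its maximum of n(k − 1) for this table size. With k = 4 rounds, the statistic is referred to a chi-square distribution with k − 1 = 3 degrees of freedom.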

All interviews were audio-recorded, and we collected a total of 259 minutes of audio data as qualitative data. We transcribed the data into a textual document for analysis. We segmented these documents into 710 sentences and processed them into 167 quotes by selecting insightful content. We then analyzed the quotes using iterative analytic induction. We repeatedly clustered the quotes while considering our research question (i.e., how generative diaries affect participants’ social perception of robots). Finally, we extracted 23 sub-themes and four main themes.

Results and Findings

Results of Quantitative Analysis

The quantitative analysis showed that the use of generative diaries affected users’ perception of robots, specifically in terms of warmth. Friedman’s test indicated that only the warmth factor changed significantly over time (χ²(3) = 8.798, p = 0.032) (Figure 8). Post-hoc Wilcoxon signed-rank tests revealed that warmth scores increased between the first and second rounds (T = 71, z = -2.51, p = 0.012). In contrast, the competence factor scores did not change significantly (χ²(3) = 0.436, p = 0.933), suggesting that generative diaries did not affect users’ perception of robots’ competence during the 16-day study period. There were also no significant changes in discomfort factor scores (χ²(3) = 6.568, p = 0.087) over time.
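For readers who wish to relate the three factor-level Friedman statistics to their p-values, the sketch below evaluates the upper tail of a chi-square distribution with 3 degrees of freedom using its closed form, erfc(√(x/2)) + √(2x/π)·e^(−x/2). The function name `chi2_sf_df3` is hypothetical, and the use of the asymptotic chi-square approximation is an assumption; exact small-sample procedures can give slightly different values.

```python
import math


def chi2_sf_df3(x):
    """Upper-tail probability P(X > x) for a chi-square variable with 3 degrees of freedom."""
    if x < 0:
        raise ValueError("the chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


# Factor-level statistics and p-values as reported in the text above.
reported = {
    "warmth": (8.798, 0.032),
    "competence": (0.436, 0.933),
    "discomfort": (6.568, 0.087),
}

for factor, (statistic, p_reported) in reported.items():
    p_recomputed = chi2_sf_df3(statistic)
    print(f"{factor}: chi2(3) = {statistic}, recomputed p = {p_recomputed:.3f}, reported p = {p_reported}")
```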

Figure 8. Participants’ scores on their perception of the three social attributes (i.e., warmth, competence, and discomfort).

Findings from Qualitative Analysis

We gained insight into why generative diaries increased warmth scores through qualitative analysis. For example, generative diaries raised the perception of feeling (χ²(3) = 9.511, p = 0.023) by revealing thoughts, moods, and interpretations related to surroundings. P4 stated, “Something like reflecting itself or saying something about impressions after observing something is...” Furthermore, the diary entries often described a variety of emotions, which also raised the perception of emotional (χ²(3) = 17.910, p < 0.0001). P2 noted, “As I recall, it often highlighted its feelings.” A more detailed and extensive analysis of the qualitative data is presented below.

Beau has Intelligence and Consciousness

Generative diaries led participants to perceive the robot that shared them as an intelligent being. P1 commented, “After I read the diaries, (I thought that) does this robot happen to have such advanced thoughts?” P9 said, “It was when I accepted that it has intelligence.” Similarly, P11 observed, “It is not a mere machine-like robot. It is a more intelligent robot.” Participants regarded writing as an advanced behavior and thus attributed a high level of ability to the robot. P1 remarked, “You know, writing requires very high intelligence.” Moreover, as the generative diaries were based on actual events, users recognized that Beau could observe and interpret its surroundings and believed it had intelligence. Indeed, participants who realized that the diary entries were true highlighted that it was a turning point in their perception. For instance, P9 commented, “I thought it made up a plausible story at first. But someday, when I visited Beau late afternoon, a post was updated saying, ‘there were no visitors in the morning. I guess I’m not popular.’ (Table 1e) Reading that post made me think that this robot was interpreting real events, or at least the text was generated based on reality.” Even the participants who were unsure whether the diaries reflected actual events gradually perceived the robot’s intelligence while reading context-aware content. P7 noted, “It seems like it recognizes whether the day is a holiday or not. In a sense, I actually did not observe a man carrying a lunchbox or kids running around, but the robot posted stories about the weather or the day, so it made me believe that other stories were also based on what is observed.”

Meanwhile, robots sharing generative diaries were also perceived as having consciousness. P6 said, “I mean, I considered it to be an entity or being.” P4 commented, “It feels like this robot wants to mimic and project humans.” Although we deliberately used first-person sentences to create this effect, participants gave more diverse reasons. P4 pointed out that the contents of generative diaries are focused on the robot itself rather than the users, saying, “I feel like because the diary was about itself, not about their users. I mean, voice agents or AI speakers usually speak up to people with honorific tones on conversation flows. But I felt like this robot was a peer.” Likewise, several participants were interested in whether the robot understood situations and compared itself with them. P1 said, “For me, it was impressive that some posts were written after observing people and comparing them with itself. For example, ‘people have umbrellas, but I don’t!’ (Table 1i), or such as, ‘no one is coming, but I work’ (Table 1c).” In addition, generative diaries were sometimes interpreted as an individual’s deliberation or concern. P11 mentioned, “There was a post like ‘It’s raining, and I’m indoors. I guess this is what life is’ (Table 1h). That story was about one’s thoughts about life that are further from simple weather matters.” These perceived traits of intelligence and consciousness eventually made several participants (P4, P6, P11) feel that Beau is a human-like robot.

Beau has Authentic Emotions

Most participants responded that the emotions they perceived in the generative diaries felt genuine. P9 commented, “After that, I felt the emotions were somewhat authentic.” P7 also stated, “From the posts, I could definitely understand what the robot was thinking and feeling.” Users found not only emotions but also the context behind them in the diaries. P9 said, “The text contained many contexts. It was helpful to understand the background of the robot’s emotion, such as why it felt better or disappointed.” This background information appeared to help participants accept the robot’s emotions. P4 mentioned, “I was reading about Beau’s day, and I remember that the robot looked sad when there were no people. Those kinds of feelings and thoughts felt like they could be real.”

Furthermore, people often inferred a robot’s emotions through given situations, even if there was no direct expression in words. P11 said, “I think Beau was depressed. It gets stuck in the same place, trying to socialize with people but failing, and so on.” P11 also claimed that the emergence of such negative emotions is somewhat helpful for realistic experiences and added, “I think talking about worries is a deeper expression of emotions rather than simply being happy every day.” Participants who accepted the robot’s emotions as genuine showed empathy for the robot. P4 said, “I remember one diary that said, ‘Why do I have no clothes?’ Or it was a cap, maybe (Table 1b). Anyway, it was memorable because it felt pitiful to say that it never owned a cap.” P1 said, “It seemed to be a bit pessimistic, but actually, I would be too if I kept staying in the same place. So I could fully understand, and on the other hand, I felt sorry for it.”

Beau has a Unique but Invisible Identity

As generative diaries reveal what the robot thinks and feels depending on the situation, participants interpreted this series of information and inferred Beau’s characteristics. For example, P2 said, “The emotions written there made the robot’s image for me.” P9 commented, “The bright characteristic was built from diaries.” Consequently, each participant defined Beau’s identity in specific terms. For example, P2 described Beau as “someone who is timid and not good at talking, but easily becomes lonely without people, as well as someone who is not confident but desires to perform her job well.” P9 considered Beau “someone who always thinks pleasantly, and who feels happy just by seeing the sunset, although sometimes being sullen when there is no one around.”

Furthermore, the identity built from generative diaries did not conflict with the character shown in physical interaction. Although physical interaction, which was deliberately limited for the study, failed to reflect the curious (mentioned by P6) and bright (mentioned by P9) characteristics of Beau, participants naturally accepted these differences by attributing them to Beau’s timidity. P12 said, “I came to think that the robot was introverted because it doesn’t talk much with people but was talkative in writing.” P6 said, “I was just persuaded. I know many people who have many thoughts but do not express them or don’t talk much. So it was easy to accept that this robot has such a personality.” In other words, generative diaries make users aware of the internal characteristics of robots that could be different from those physically shown.

Beau is an Acquaintance and Potentially a Friend

Generative diaries allow robots to build social relationships with users by reducing their unfamiliarity. By sharing generative diaries, Beau reminded participants of reading others’ posts on social media, which was a familiar experience for people. P7 noted, “Expressing one’s thoughts through social media itself is one interaction method that is different from physical interaction. ... It is common for us to indirectly observe what they did and what they thought today without communicating directly on social media. ... I felt such interaction in this robot as well.” Accordingly, Beau was recognized as being able to perform social behavior just by sharing generative diaries through online platforms. P9 said, “Some are talkative like me, and others may be silent, but anyway, all have their thoughts. Well, just this robot shares its thoughts later, not when I’m there.” However, since generative diaries are not directly related to individual users, there is still a limit to robots getting close to users. P7 said, “The interaction was not a sort of talking together. Rather it was like reading a news article. I got it passively. In that sense, it felt more like just an acquaintance than a friend.”

Nevertheless, generative diaries make people think they can be close to robots. They continuously provide information about robots, narrowing the psychological distance from users. P5 said, “I felt a sort of intimacy while reading its diaries continuously.” Meanwhile, intimacy increases further when users find something in common between themselves and robots. For example, participants were interested in generative diaries because the events mentioned happened in their own surroundings. P5 said, “I empathized and built a rapport with it by imagining what it saw. It was like, ‘Oh, you did? Me too!’” They also felt a sense of kinship in terms of emotion. P10 said, “I was impressed by the stories about feeling empty while staying alone at night (Table 1a). That mood reminded me of the moment of practicing when I was a sophomore, so I empathized with it.”

Furthermore, several participants desired to be close to Beau. In particular, they strongly anticipated personalized content, commenting that they were waiting for a diary entry written about them. For example, P2 said, “I was subtly waiting for it to write about me in diaries. But it didn’t. I even thought that it was not interesting to me.” In addition, participants also wanted to start actual interaction with Beau. P5 noted, “I expected it to talk to me for some time. Reading diaries was a positive but one-sided online communication. That was a shame.”

Discussion

Our findings suggest that using generative diaries can improve social perceptions of robots, particularly for warmth, by promoting awareness of the intelligence, consciousness, emotions, identity, and desire for social interaction of robots. Building on these findings, we identify several opportunities and challenges for incorporating generative diaries in the design of social human-robot interaction. We also discuss the limitations of our study and areas for future work.

Opportunities of Using Generative Diaries in Social HRI

Promoting Continuous Engagement

From an interaction perspective, a key feature of generative diaries is using AI to generate new text for users each time. This provides a new opportunity to address ongoing concerns in HRI, where users may lose interest in robots over time, leading to changes in their attitudes (Gockley et al., 2005; Kanda et al., 2004). Participants in our study mentioned that the varying content of the diaries helped to maintain their interest in the robot and made them look forward to the next diary entry. Although the process of delivering the diaries is always the same, the content and impressions they create for users change every time, which we propose can be viewed as incremental novel behavior, as Leite et al. (2013) suggested. However, further research is needed to determine whether and how generative diaries can maintain interest continuously without being perceived as having standardized patterns. If so, generative diaries can be a vital interaction modality in contexts such as educational robots (e.g., Komatsubara et al., 2014) that must continuously engage people.

Enabling Various Social Capabilities and Behaviors

Generative diaries can serve as a tool for implementing various social capabilities and behaviors. For example, by focusing on user interaction logs, they can demonstrate the ability to adapt to and remember previous conversations. Furthermore, generative diaries can engage in unobtrusive small talk through short memos, resembling human-like conversational styles. Additionally, they can exhibit perception abilities through generated text based on stored or detected information. These possibilities provide researchers with complementary implementation strategies for prototype design to verify the effects of specific social abilities of robots. While many researchers have explored social functions such as user recognition (Lee et al., 2012), interaction history recall (Ahmad et al., 2017), and self-disclosure (Gockley et al., 2005), most have primarily utilized voice interactions. However, scenario-based voice interactions are challenging to apply to actual contexts and provide only limited experiences. Therefore, we advocate for a more active consideration of the use of generative diaries in social robot design research and practice.

Enhancing Emotional Values

Our study identified the potential for generative diaries to enhance emotional value by increasing users’ awareness of robots’ consciousness and emotions. This finding has significant implications for the task performance of robots designed for emotional and affective interaction, such as healthcare and therapy robots (e.g., Šabanović et al., 2013) and companion robots (e.g., Robinson et al., 2013). Even in contexts where emotional interaction is not essential, such as education, generative diaries are expected to enhance the user experience by inducing emotional engagement. However, it should be noted that representing consciousness and emotions would not benefit all robots. In our study, we observed that awareness of consciousness and emotions led several participants to doubt the robot’s accuracy and objectivity, implying that industrial robots that interact less with people and perform repetitive tasks may not benefit significantly from generative diaries. Moreover, robots without consciousness or emotion may still be preferred for complex or troublesome tasks (Hayashi et al., 2010).

Expanding the Design of Personality

Personality has been recognized as an essential consideration in robot design, particularly in social interaction (Fong et al., 2003; Lee et al., 2006). It can significantly affect users’ perception of robots and their intention to interact with them, thereby enhancing the quality of interaction (Robert et al., 2020). Consequently, many researchers have examined the effects of perceived robot personality on user experience. While some researchers have utilized gaze (Andrist et al., 2015), conversation control (Walters et al., 2011), and speech gesture (Lee et al., 2006) to design the desired personality, the outcomes have been limited to introverted and extroverted personalities. Notably, our study found that participants perceived Beau’s personality in greater detail under the influence of the generative diaries. For instance, Beau was perceived to possess a curious, bright, pure, or naive personality. This suggests that generative diaries offer interaction designers an opportunity to design robots with more diverse personalities and enable researchers to further investigate the effects of robot personality on user experience. However, before doing so, researchers should endeavor to understand which personality traits are preferred in different contexts and what constitutes desirable personality traits, as these strongly affect users’ expectations and ways of interacting with robots (Robert et al., 2020). For example, as observed in our study, Beau’s perceived personality influenced both the desire to form a close relationship with it and doubts about its task competency.

Challenges of Using Generative Diaries in Social HRI

Trade-Off between Generative Diaries and Physical Social Cues

The social perception of robots influences users’ expectations for their behavior and response in subsequent interactions. For example, people expect a robot that is perceived to have a memory to recognize them, and they expect to be welcomed by a robot that is perceived to have an amicable and warm personality. However, it is not easy to guarantee that these expectations will be met in physical interactions. The resulting dissonance is likely to manifest as various social errors (Tian & Oviatt, 2021). Therefore, designers seeking to add generative diaries to HRI must consider the balance between the perception they induce and the physical and social cues that influence perception. As a starting point, designers can target contexts in which physical interaction is short and straightforward. For instance, guidance robots at airports often engage in short interactions with unidentified users in crowded environments, providing limited opportunities for social shortcomings to be exposed. Nonetheless, generative diaries can still benefit such applications by engaging users remotely and indirectly, increasing revisit rates. Moreover, future research on improving physical and social cues based on AI, such as generating natural speech gestures using generative adversarial networks (GANs) (Yoon et al., 2020), would be desirable.

Efficiency, Authenticity, and Ethical Considerations for Automation

In the research prototype we developed, generative diaries were automatically generated. However, identifying topics and evaluating the suitability of entries fell to the researchers using HeyBeau. This strategic decision aimed to evaluate interactions using generative diaries efficiently; in actual deployments, attempts should be made to exclude human intervention. The first hurdle in achieving this goal is ensuring that diary entries are based on actual events. Study participants expressed interest in the fact that diary entries were written based on actual events and that the writer physically existed. Although the entries were randomly generated, the topics captured by the generative diaries, thanks to the robot that physically interacts with users, were meaningful and provided users with a positive experience in communicating with the robot. Therefore, designers should actively consider injecting actual information as long as there are no contextual errors. Our experience has shown that obvious information, such as dates, times, and weather, and notable events, such as construction or moving, is adequate.
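One way to picture the injection of actual information is a small step that gathers the day's observable facts and places them in the text prompt given to a language model. The Python sketch below is a hedged illustration only: `DayContext`, `build_diary_prompt`, the field names, and the prompt wording are all hypothetical and do not describe how HeyBeau or the underlying model was actually configured.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class DayContext:
    """Observable facts about one deployment day (hypothetical structure)."""
    day: date
    weather: str
    visitor_count: int
    notable_events: List[str] = field(default_factory=list)


def build_diary_prompt(ctx: DayContext) -> str:
    """Compose a prompt that restricts the diary entry to facts that were actually recorded."""
    facts = [
        f"Date: {ctx.day.isoformat()}",
        f"Weather: {ctx.weather}",
        f"Visitors who checked their temperature today: {ctx.visitor_count}",
    ]
    facts += [f"Notable event: {event}" for event in ctx.notable_events]
    return (
        "Write a short first-person diary entry for Beau, "
        "a temperature-check robot in a university lobby.\n"
        "Refer only to the facts listed below.\n"
        + "\n".join(f"- {fact}" for fact in facts)
    )


# Hypothetical day: rainy, no visitors, construction nearby.
context = DayContext(
    day=date(2022, 10, 3),
    weather="rainy",
    visitor_count=0,
    notable_events=["construction work near the lobby"],
)
print(build_diary_prompt(context))
```

Listing the facts explicitly keeps the grounding information separate from the stylistic instruction, which makes it easier to check a generated entry against what was actually observed.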

The second consideration for automation is the process of filtering out problematic diary entries to prevent their publication. For example, discriminatory and violent content and contextual errors that raise minor doubts should be filtered out. We filtered out a few such entries through HeyBeau during the study. However, such a human intervention process is only feasible for some applications. Indeed, this issue is a common challenge for UX designers using AI (Dove et al., 2017) and is particularly criticized as a side effect of natural language generation technology (Solaiman et al., 2019). Therefore, it is necessary to adopt text mining techniques such as sentiment analysis (e.g., Singh & Kumari, 2016) or explore more rigorous and meticulous model training methods in the screening process. Alternatively, semi-structured sentences may be considered, although conflicting perspectives on diversity may exist.
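As a rough sketch of what an automated screening step might look like, the Python snippet below holds back a candidate entry if it contains a blocked term or mentions weather that contradicts the recorded conditions. This is a plain keyword check, deliberately simpler than the sentiment analysis or model training approaches mentioned above; the term lists and the function `screen_entry` are hypothetical placeholders, not the criteria applied through HeyBeau.

```python
import re

# Hypothetical placeholder lists; a real deployment would need curated resources.
BLOCKED_TERMS = {"kill", "hate", "stupid"}
WEATHER_TERMS = {
    "rain": "rainy",
    "raining": "rainy",
    "snow": "snowy",
    "snowing": "snowy",
    "sunny": "sunny",
}


def screen_entry(entry, actual_weather):
    """Return the reasons to hold back an entry; an empty list means it passes."""
    words = set(re.findall(r"[a-z']+", entry.lower()))
    reasons = []

    # Content check: flag any blocked term that appears as a whole word.
    blocked = sorted(words & BLOCKED_TERMS)
    if blocked:
        reasons.append("blocked terms: " + ", ".join(blocked))

    # Context check: flag weather words that contradict the recorded weather.
    for word, weather in WEATHER_TERMS.items():
        if word in words and weather != actual_weather:
            reasons.append(f"mentions {word!r} but the recorded weather is {actual_weather!r}")
    return reasons


candidate = "It's raining, and I'm indoors. I guess this is what life is."
print(screen_entry(candidate, actual_weather="rainy"))
print(screen_entry(candidate, actual_weather="sunny"))
```

A word-level check of this kind cannot handle negation (for example, "no rain today") or subtle bias, so it would complement rather than replace more rigorous screening.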

Intentional Shaping of Social Perception

The use of generative diaries to shape the social perception of robots in interactions holds promise. However, implementing the designer’s intended experience at a detailed level requires additional research. We discovered that participants recognized Beau’s characteristics as a social presence primarily through the diary’s content. In particular, information about Beau’s thoughts and emotions in different situations was crucial. Although the utilization of generative diaries during the study impacted Beau’s social perception, the process of ultimately shaping the perception was likely influenced by the researchers’ intervention in candidate selection through HeyBeau. Consequently, we predict that building a dataset for training or tuning AI models will be significant for designers. This new process is more abstract and indirect than traditional design work and requires a deeper understanding.

Limitations and Future Work

Although our study demonstrated the potential of using generative diaries in interactions to shape social perception, several limitations exist. Specifically, our research results can be influenced by various variables, including interaction design decisions such as the diary’s content, delivery methods, and notification settings, as well as study setting factors such as user demographics and the duration of interactions. Different interaction designs can result in different user experiences even when using generative diaries. Therefore, our next research goals include diversifying the diary content beyond personal stories to explore whether it can affect the perception of social attributes beyond warmth, and applying generative diaries to different devices and contexts to enhance user experiences. Limitations related to the study setting can be addressed through additional user research, particularly by tracking user experiences over a longer period to gain insights into interactions using generative diaries.

Conclusion

Based on our exploration of using generative diaries to influence the social perception of robots in interaction design, we found that such a method holds promise for designers seeking to create more effective and engaging human-robot interactions. Our research demonstrated that generative diaries could enhance social perceptions of robots, particularly warmth, by increasing awareness of robots’ intelligence, consciousness, emotion, identity, and desire for social communication. As a result, interaction designers and researchers can utilize them to demonstrate various social functions and design robots’ personalities. Furthermore, through our careful design and implementation of a series of research prototypes, we were able to offer insights into the opportunities and challenges of using generative diaries based on empirical understanding. We hope our work contributes to the ongoing discussion on design and HRI and offers valuable insights for designers seeking to shape the social perception of robots more effectively and engagingly.

Acknowledgments

This work was supported by the Ministry of Education of the Republic of Korea and the National Research Foundation of Korea (NRF-2022S1A5B5A17046420).

References

- Admoni, H., & Scassellati, B. (2017). Social eye gaze in human-robot interaction: A review. Journal of Human-Robot Interaction, 6(1), 25-63. https://doi.org/10.5898/JHRI.6.1.Admoni

- Ahmad, M. I., Mubin, O., & Orlando, J. (2017). Adaptive social robot for sustaining social engagement during long-term children-robot interaction. International Journal of Human-Computer Interaction, 33(12), 943-962. https://doi.org/10.1080/10447318.2017.1300750

- Ambe, A. H., Brereton, M., Soro, A., Buys, L., & Roe, P. (2019). The adventures of older authors: Exploring futures through co-design fictions. In Proceedings of the SIGCHI conference on human factors in computing systems (Article No. 358). ACM. https://doi.org/10.1145/3290605.3300588

- Andrist, S., Mutlu, B., & Tapus, A. (2015). Look like me: Matching robot personality via gaze to increase motivation. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 3603-3612). ACM. https://doi.org/10.1145/2702123.2702592

- Baraka, K., Alves-Oliveira, P., & Ribeiro, T. (2020). An extended framework for characterizing social robots. In C. Jost, B. Le Pévédic, T. Belpaeme, C. Bethel, D. Chrysostomou, N. Crook, M. Grandgeorge, & N. Mirnig (Eds.), Human-robot interaction (pp. 21-64). Springer. https://doi.org/10.1007/978-3-030-42307-0_2

- Bedö, V. (2021). Catch the bus: Probing other-than-human perspectives in design research. Frontiers in Computer Science, 3, 636107. https://doi.org/10.3389/fcomp.2021.636107

- Bleecker, J. (2009). Design fiction: A short essay on design, science, fact and fiction. https://blog.nearfuturelaboratory.com/2009/03/17/design-fiction-a-short-essay-on-design-science-fact-and-fiction/

- Blomquist, Å., & Arvola, M. (2002). Personas in action: Ethnography in an interaction design team. In Proceedings of the 2nd Nordic conference on human-computer interaction (pp. 197-200). ACM. https://doi.org/10.1145/572020.572044

- Breazeal, C. (2003). Toward sociable robots. Robotics and Autonomous Systems, 42(3-4), 167-175. https://doi.org/10.1016/S0921-8890(02)00373-1

- Breazeal, C. (2004). Designing sociable robots. MIT Press.

- Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., ... & Amodei, D. (2020). Language models are few-shot learners. In Proceedings of the 34th international conference on neural information processing systems (pp. 1877-1901). ACM. https://doi.org/10.48550/arXiv.2005.14165

- Burke, M., Marlow, C., & Lento, T. (2010). Social network activity and social well-being. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1909-1912). ACM. https://doi.org/10.1145/1753326.1753613

- Buschek, D., Zürn, M., & Eiband, M. (2021). The impact of multiple parallel phrase suggestions on email input and composition behaviour of native and non-native English writers. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1-13). ACM. https://doi.org/10.1145/3411764.3445372

- Carpinella, C. M., Wyman, A. B., Perez, M. A., & Stroessner, S. J. (2017). The robotic social attributes scale (RoSAS) development and validation. In Proceedings of the international conference on human-robot interaction (pp. 254-262). ACM. https://doi.org/10.1145/2909824.3020208

- Carroll, J. M. (2003). Making use: Scenario-based design of human-computer interactions. MIT Press.

- Chang, W. W., Giaccardi, E., Chen, L. L., & Liang, R. H. (2017). “Interview with things”: A first-thing perspective to understand the scooter’s everyday socio-material network in Taiwan. In Proceedings of the conference on designing interactive systems (pp. 1001-1012). ACM. https://doi.org/10.1145/3064663.3064717

- Cila, N., Giaccardi, E., Tynan-O’Mahony, F., Speed, C., & Caldwell, M. (2015). Thing-centered narratives: A study of object personas. In Proceedings of the 3rd seminar international research network for design anthropology (pp. 1-17). Aarhus University.

- Collins, N. L., & Miller, L. C. (1994). Self-disclosure and liking: A meta-analytic review. Psychological Bulletin, 116(3), 457-475. https://doi.org/10.1037/0033-2909.116.3.457

- Coulton, P., Lindley, J., Sturdee, M., & Stead, M. (2017). Design fiction as world building. In Proceedings of the 3rd biennial research through design conference (pp. 163-179). Figshare. https://doi.org/10.6084/m9.figshare.4746964

- Culpeper, J. (2014). Language and characterisation: People in plays and other texts. Routledge.

- Darling, K. (2016). Extending legal protection to social robots: The effects of anthropomorphism, empathy, and violent behavior towards robotic objects. In R. Calo, A. M. Froomkin, & I. Kerr (Eds.), Robot law (pp. 213-232). Edward Elgar Publishing. https://doi.org/10.4337/9781783476732

- Dautenhahn, K. (2004). Robots we like to live with?! - A developmental perspective on a personalized, life-long robot companion. In Proceedings of the 13th international workshop on robot and human interactive communication (pp. 17-22). IEEE. https://doi.org/10.1109/ROMAN.2004.1374720

- Dautenhahn, K., Woods, S., Kaouri, C., Walters, M. L., Koay, K. L., & Werry, I. (2005). What is a robot companion - Friend, assistant or butler? In Proceedings of the international conference on intelligent robots and systems (pp. 1192-1197). IEEE. https://doi.org/10.1109/IROS.2005.1545189

- Davoli, L., & Redström, J. (2014). Materializing infrastructures for participatory hacking. In Proceedings of the conference on designing interactive systems (pp. 121-130). ACM. https://doi.org/10.1145/2598510.2602961

- De Graaf, M. M., Ben Allouch, S., & Van Dijk, J. A. (2015). What makes robots social? A user’s perspective on characteristics for social human-robot interaction. In Proceedings of the international conference on social robotics (pp. 184-193). Springer. https://doi.org/10.1007/978-3-319-25554-5_19

- Desjardins, A., & Biggs, H. R. (2021). Data epics: Embarking on literary journeys of home internet of things data. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1-17). ACM. https://doi.org/10.1145/3411764.3445241

- DiSalvo, C. F., Gemperle, F., Forlizzi, J., & Kiesler, S. (2002). All robots are not created equal: The design and perception of humanoid robot heads. In Proceedings of the 4th conference on designing interactive systems (pp. 321-326). ACM. https://doi.org/10.1145/778712.778756

- Dörrenbächer, J., Löffler, D., & Hassenzahl, M. (2020). Becoming a robot - Overcoming anthropomorphism with techno-mimesis. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1-12). ACM. https://doi.org/10.1145/3313831.3376507

- Dörrenbächer, J., Neuhaus, R., Ringfort-Felner, R., & Hassenzahl, M. (Eds.). (2022). Meaningful futures with robots: Designing a new coexistence. Chapman & Hall/CRC.

- Dove, G., Halskov, K., Forlizzi, J., & Zimmerman, J. (2017). UX design innovation: Challenges for working with machine learning as a design material. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 278-288). ACM. https://doi.org/10.1145/3025453.3025739

- Fang, H., Cheng, H., Sap, M., Clark, E., Holtzman, A., Choi, Y., Smith, N. A., & Ostendorf, M. (2018). Sounding board: A user-centric and content-driven social chatbot. In Proceedings of the conference of the North American chapter of the Association for Computational Linguistics: Demonstrations (pp. 96-100). Association for Computational Linguistics. https://doi.org/10.18653/v1/N18-5020

- Fong, T., Nourbakhsh, I., & Dautenhahn, K. (2003). A survey of socially interactive robots. Robotics and Autonomous Systems, 42(3-4), 143-166. https://doi.org/10.1016/S0921-8890(02)00372-X

- Forlizzi, J., & DiSalvo, C. (2006). Service robots in the domestic environment: A study of the Roomba vacuum in the home. In Proceedings of the 1st conference on human-robot interaction (pp. 258-265). ACM. https://doi.org/10.1145/1121241.1121286

- Ghajargar, M., Bardzell, J., & Lagerkvist, L. (2022). A redhead walks into a bar: Experiences of writing fiction with artificial intelligence. In Proceedings of the 25th international academic MindTrek conference (pp. 230-241). ACM. https://doi.org/10.1145/3569219.3569418

- Ghazvininejad, M., Shi, X., Priyadarshi, J., & Knight, K. (2017). Hafez: An interactive poetry generation system. In Proceedings of the conference on system demonstrations (pp. 43-48). Association for Computational Linguistics. https://doi.org/10.18653/v1/P17-4008

- Giaccardi, E., Speed, C., Cila, N., & Caldwell, M. L. (2020). Things as co-ethnographers: Implications of a thing perspective for design and anthropology. In R. C. Smith, K. T. Vangkilde, M. G. Kjaersgaard, T. Otto, J. Halse, & T. Binder (Eds.), Design anthropological futures (pp. 235-248). Routledge.

- Gockley, R., Bruce, A., Forlizzi, J., Michalowski, M., Mundell, A., Rosenthal, S., Sellner, B., Simmons, R., Snipes, K., Schultz, A. C., & Wang, J. (2005). Designing robots for long-term social interaction. In Proceedings of the international conference on intelligent robots and systems (pp. 1338-1343). IEEE. https://doi.org/10.1109/IROS.2005.1545303

- Graesser, A. C., & Ottati, V. (2014). Why stories? Some evidence, questions, and challenges. In R. S. Wyer Jr. (Ed.), Knowledge and memory: The real story (pp. 121-132). Psychology Press.

- Hayashi, K., Shiomi, M., Kanda, T., & Hagita, N. (2010). Who is appropriate? A robot, human and mascot perform three troublesome tasks. In Proceedings of the 19th international symposium in robot and human interactive communication (pp. 348-354). IEEE. https://doi.org/10.1109/ROMAN.2010.5598661

- Hutchinson, H., Mackay, W., Westerlund, B., Bederson, B. B., Druin, A., Plaisant, C., Beaudouin-Lafon, M., Conversy, S., Evans, H., Hansen, H., Roussel, N., & Eiderbäck, B. (2003). Technology probes: Inspiring design for and with families. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 17-24). ACM. https://doi.org/10.1145/642611.642616

- Joosse, M. P., Poppe, R. W., Lohse, M., & Evers, V. (2014). Cultural differences in how an engagement-seeking robot should approach a group of people. In Proceedings of the 5th international conference on collaboration across boundaries (pp. 121-130). ACM. https://doi.org/10.1145/2631488.2631499

- Kaipainen, K., Ahtinen, A., & Hiltunen, A. (2018). Nice surprise, more present than a machine: Experiences evoked by a social robot for guidance and edutainment at a city service point. In Proceedings of the 22nd international academic MindTrek conference (pp. 163-171). ACM. https://doi.org/10.1145/3275116.3275137

- Kalegina, A., Schroeder, G., Allchin, A., Berlin, K., & Cakmak, M. (2018). Characterizing the design space of rendered robot faces. In Proceedings of the international conference on human-robot interaction (pp. 96-104). ACM. https://doi.org/10.1145/3171221.3171286

- Kanda, T., Hirano, T., Eaton, D., & Ishiguro, H. (2004). Interactive robots as social partners and peer tutors for children: A field trial. Human-Computer Interaction, 19(1-2), 61-84. https://doi.org/10.1207/s15327051hci1901&2_4

- Kanda, T., Shiomi, M., Miyashita, Z., Ishiguro, H., & Hagita, N. (2009). An affective guide robot in a shopping mall. In Proceedings of the 4th international conference on human robot interaction (pp. 173-180). ACM. https://doi.org/10.1145/1514095.1514127

- Kanerva, J., Rönnqvist, S., Kekki, R., Salakoski, T., & Ginter, F. (2019). Template-free data-to-text generation of Finnish sports news. In Proceedings of the 22nd Nordic conference on computational linguistics (pp. 242-252). https://aclanthology.org/W19-6125

- Kang, S. H., & Gratch, J. (2011). People like virtual counselors that highly-disclose about themselves. Studies in Health Technology and Informatics, 167, 143-148. https://doi.org/10.3233/978-1-60750-766-6-143

- Komatsubara, T., Shiomi, M., Kanda, T., Ishiguro, H., & Hagita, N. (2014). Can a social robot help children’s understanding of science in classrooms? In Proceedings of the 2nd international conference on human-agent interaction (pp. 83-90). ACM. https://doi.org/10.1145/2658861.2658881

- Labov, W., & Waletzky, J. (1997). Narrative analysis: Oral versions of personal experience. Journal of Narrative & Life History, 7(1-4), 3-38. https://doi.org/10.1075/jnlh.7.02nar

- Lee, K. M., Peng, W., Jin, S. A., & Yan, C. (2006). Can robots manifest personality? An empirical test of personality recognition, social responses, and social presence in human-robot interaction. Journal of Communication, 56(4), 754-772. https://doi.org/10.1111/j.1460-2466.2006.00318.x

- Lee, M. K., Forlizzi, J., Kiesler, S., Rybski, P., Antanitis, J., & Savetsila, S. (2012). Personalization in HRI: A longitudinal field experiment. In Proceedings of the 7th international conference on human-robot interaction (pp. 319-326). ACM. https://doi.org/10.1145/2157689.2157804

- Leite, I., Castellano, G., Pereira, A., Martinho, C., & Paiva, A. (2014). Empathic robots for long-term interaction: Evaluating social presence, engagement and perceived support in children. International Journal of Social Robotics, 6, 329-341. https://doi.org/10.1007/s12369-014-0227-1

- Leite, I., Martinho, C., & Paiva, A. (2013). Social robots for long-term interaction: A survey. International Journal of Social Robotics, 5(2), 291-308. https://doi.org/10.1007/s12369-013-0178-y

- Linde, C. (1993). Life stories: The creation of coherence. Oxford University Press.

- Lupetti, M. L., Bendor, R., & Giaccardi, E. (2019). Robot citizenship: A design perspective. In S. Colombo, M. Bruns, Y. Lim, L.-L. Chen, & T. Djajadiningrat (Eds.), Proceedings of the conference on design and semantics of form and movement (pp. 87-95). https://doi.org/10.21428/5395bc37.595d1e58

- McGinn, C., & Torre, I. (2019). Can you tell the robot by the voice? An exploratory study on the role of voice in the perception of robots. In Proceedings of the 14th international conference on human-robot interaction (pp. 211-221). IEEE. https://doi.org/10.1109/HRI.2019.8673305

- Mori, M., MacDorman, K. F., & Kageki, N. (2012). The uncanny valley [from the field]. IEEE Robotics & Automation Magazine, 19(2), 98-100. https://doi.org/10.1109/MRA.2012.2192811

- Nicenboim, I., Giaccardi, E., Søndergaard, M. L. J., Reddy, A. V., Strengers, Y., Pierce, J., & Redström, J. (2020). More-than-human design and AI: In conversation with agents. In Proceedings of the conference on designing interactive systems (pp. 397-400). ACM. https://doi.org/10.1145/3393914.3395912

- Nourbakhsh, I. R., Bobenage, J., Grange, S., Lutz, R., Meyer, R., & Soto, A. (1999). An affective mobile robot educator with a full-time job. Artificial Intelligence, 114(1-2), 95-124. https://doi.org/10.1016/S0004-3702(99)00027-2

- Odom, W., Wakkary, R., Lim, Y. K., Desjardins, A., Hengeveld, B., & Banks, R. (2016). From research prototype to research product. In Proceedings of the conference on human factors in computing systems (pp. 2549-2561). ACM. https://doi.org/10.1145/2858036.2858447

- Oliveira, H. G. (2015). Tra-la-lyrics 2.0: Automatic generation of song lyrics on a semantic domain. Journal of Artificial General Intelligence, 6(1), 87-110. https://doi.org/10.1515/jagi-2015-0005

- Pandey, A. K., & Gelin, R. (2018). A mass-produced sociable humanoid robot: Pepper: The first machine of its kind. IEEE Robotics & Automation Magazine, 25(3), 40-48. https://doi.org/10.1109/MRA.2018.2833157

- Rebaudengo, S., Aprile, W. A., & Hekkert, P. P. M. (2012). Addicted products: A scenario of future interactions where products are addicted to being used. In Proceedings of the 8th international conference on design and emotion (pp. 1-10). Central Saint Martins College of Art and Design.

- Reddy, A., Kocaballi, A. B., Nicenboim, I., Søndergaard, M. L. J., Lupetti, M. L., Key, C., Speed, C., Lockton, D., Giaccardi, E., Grommé, F., Robbins, H., Primlani, N., Yurman, P., Sumartojo, S., Phan, T., Bedö, V., & Strengers, Y. (2021). Making everyday things talk: Speculative conversations into the future of voice interfaces at home. In Extended abstracts of the SIGCHI conference on human factors in computing systems (pp. 1-16). ACM. https://doi.org/10.1145/3411763.3450390

- Reig, S., Luria, M., Forberger, E., Won, I., Steinfeld, A., Forlizzi, J., & Zimmerman, J. (2021). Social robots in service contexts: Exploring the rewards and risks of personalization and re-embodiment. In Proceedings of the conference on designing interactive systems (pp. 1390-1402). ACM. https://doi.org/10.1145/3461778.3462036

- Robert, L., Alahmad, R., Esterwood, C., Kim, S., You, S., & Zhang, Q. (2020). A review of personality in human-robot interactions. Foundations and Trends® in Information Systems, 4(2), 107-212. https://doi.org/10.1561/2900000018

- Robinson, H., MacDonald, B., Kerse, N., & Broadbent, E. (2013). The psychosocial effects of a companion robot: A randomized controlled trial. Journal of the American Medical Directors Association, 14(9), 661-667. https://doi.org/10.1016/j.jamda.2013.02.007

- Ryöppy, M. (2020). Negotiating experiences and design directions through object theatre. In Proceedings of the 11th Nordic conference on human-computer interaction (pp. 1-13). ACM. https://doi.org/10.1145/3419249.3420169

- Šabanović, S., Bennett, C. C., Chang, W. L., & Huber, L. (2013). PARO robot affects diverse interaction modalities in group sensory therapy for older adults with dementia. In Proceedings of the 13th international conference on rehabilitation robotics (pp. 1-6). IEEE. https://doi.org/10.1109/ICORR.2013.6650427

- Salem, M., Eyssel, F., Rohlfing, K., Kopp, S., & Joublin, F. (2013). To err is human(-like): Effects of robot gesture on perceived anthropomorphism and likability. International Journal of Social Robotics, 5(3), 313-323. https://doi.org/10.1007/s12369-013-0196-9

- Singh, T., & Kumari, M. (2016). Role of text pre-processing in Twitter sentiment analysis. Procedia Computer Science, 89, 549-554. https://doi.org/10.1016/j.procs.2016.06.095

- Solaiman, I., Brundage, M., Clark, J., Askell, A., Herbert-Voss, A., Wu, J., Radford, A., Krueger, G., Kim, J. W., Kreps, S., McCain, M., Newhouse, A., Blazakis, J., McGuffie, K., & Wang, J. (2019). Release strategies and the social impacts of language models. OpenAI. https://cdn.openai.com/GPT_2_August_Report.pdf

- Tamir, D. I., & Mitchell, J. P. (2012). Disclosing information about the self is intrinsically rewarding. Proceedings of the National Academy of Sciences, 109(21), 8038-8043. https://doi.org/10.1073/pnas.1202129109

- Tian, L., & Oviatt, S. (2021). A taxonomy of social errors in human-robot interaction. ACM Transactions on Human-Robot Interaction, 10(2), 1-32. https://doi.org/10.1145/3439720

- Van Dalen, A. (2012). The algorithms behind the headlines: How machine-written news redefines the core skills of human journalists. Journalism Practice, 6(5-6), 648-658. https://doi.org/10.1080/17512786.2012.667268

- Van Doorn, J., Mende, M., Noble, S. M., Hulland, J., Ostrom, A. L., Grewal, D., & Petersen, J. A. (2017). Domo arigato Mr. Roboto: Emergence of automated social presence in organizational frontlines and customers’ service experiences. Journal of Service Research, 20(1), 43-58. https://doi.org/10.1177/1094670516679272

- Wakkary, R., Oogjes, D., Hauser, S., Lin, H., Cao, C., Ma, L., & Duel, T. (2017). Morse things: A design inquiry into the gap between things and us. In Proceedings of the conference on designing interactive systems (pp. 503-514). ACM. https://doi.org/10.1145/3064663.3064734

- Walters, M. L., Lohse, M., Hanheide, M., Wrede, B., Syrdal, D. S., Koay, K. L., Green, A., Hüttenrauch, H., Dautenhahn, K., Sagerer, G., & Severinson-Eklundh, K. (2011). Evaluating the robot personality and verbal behavior of domestic robots using video-based studies. Advanced Robotics, 25(18), 2233-2254. https://doi.org/10.1163/016918611X603800

- Waring, E. M., & Chelune, G. J. (1983). Marital intimacy and self-disclosure. Journal of Clinical Psychology, 39(2), 183-190. https://doi.org/10.1002/1097-4679(198303)39:2<183::AID-JCLP2270390206>3.0.CO;2-L

- YesYesNo Interactive projects. (2013). Google talking shoes. Retrieved April 11, 2022, from http://www.yesyesno.com/talking-shoe

- Yoon, Y., Cha, B., Lee, J. H., Jang, M., Lee, J., Kim, J., & Lee, G. (2020). Speech gesture generation from the trimodal context of text, audio, and speaker identity. ACM Transactions on Graphics, 39(6), 1-16. https://doi.org/10.1145/3414685.3417838

- Young, J. E., Hawkins, R., Sharlin, E., & Igarashi, T. (2009). Toward acceptable domestic robots: Applying insights from social psychology. International Journal of Social Robotics, 1(1), 95-108. https://doi.org/10.1007/s12369-008-0006-y

- Zimmerman, J., Forlizzi, J., & Evenson, S. (2007). Research through design as a method for interaction design research in HCI. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 493-502). ACM. https://doi.org/10.1145/1240624.1240704