Method Use in Behavioural Design: What, How, and Why?

Philip Cash 1,*, Xènia Vallès Gamundi 1,2, Ida Echstrøm 1,3, and Jaap Daalhuizen 1

1 DTU Management, Technical University of Denmark, Lyngby, Denmark

2 SSAB, Design and Digitalization Engineer, Oxelösund, Sweden

3 Digitalisering Socialforvaltningen Københavns Kommune, Copenhagen, Denmark

Behavioural design is an important area of research and practice, key to addressing behavioural and societal challenges. Behavioural design reflects a synthesis of design and behavioural science, which draws together aspects of abductive, inductive, and deductive reasoning to frame, develop, and deliver behaviour change through purposefully designed interventions. However, this synthesis creates major questions as to how methods are selected, adapted, and used during behavioural design. To take a step toward answering these questions, we conducted fifteen interviews with globally recognised experts. Based on these interviews, we deliver three main contributions. First, we provide an overview of the methods used in all phases of the behavioural design process. Second, we identify behavioural uncertainty as a key driver of method use in behavioural design. Third, we explain how this creates a tension between design and scientific concerns (related to interactions between abductive, inductive, and deductive reasoning) that must be managed across the behavioural design process. We bring these insights together in a basic conceptual framework explaining how and why methods are used in behavioural design. Together, these findings take a step towards closing critical gaps in behavioural design theory and practice. They also highlight several directions for further research on method use and uncertainty, as well as on behavioural design expertise and professional identity.

Keywords – Behavioural Design, Design Methods, Uncertainty, Method Catalogue, Design for Behaviour Change, Design process.

Relevance to Design Practice – This work describes an overview of methods used across all phases of the behavioural design process, as well as a framework for understanding their selection, adaption, and use. This provides concrete guidance for identifying relevant methods and subsequently applying them in behavioural design.

Citation: Cash, P., Vallès Gamundi, X., Echstrøm, I., & Daalhuizen, J. (2022). Method use in behavioural design: What, how, and why?. International Journal of Design, 16(1), 1-21. https://doi.org/10.57698/v16i1.01

Received May, 2020; Accepted March, 2022; Published April 30, 2022.

Copyright: © 2022 Cash, Vallès Gamundi, Echstrøm, & Daalhuizen. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content is open-accessed and allowed to be shared and adapted in accordance with the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) License.

*Corresponding Author: pcas@dtu.dk

Philip Cash received a Ph.D. degree in engineering design from the University of Bath, Bath, U.K., in 2012. He is an Associate Professor in Engineering Design with DTU Management, Technical University of Denmark. His research focuses on design activity and design team behaviour as well as research quality in design. This is complemented by applied research in design for behaviour change in close collaboration with industry. He has authored or co-authored articles in journals including Design Studies, Journal of Engineering Design, and Design Science.

Xènia Vallès Gamundi received a Master of Science in Design and Innovation from the Technical University of Denmark in 2020. She is currently employed at SSAB, where she analyses user needs to design and develop digital services that support industry growth. She is also involved in strategic decisions relating to these services. Her background in mechanical engineering and product development, together with her interest in behavioural and user-centered design, gives her the expertise to bridge people and technology.

Ida Echstrøm’s chosen focus for her education as a user-centered design engineer has been on the socio-technical aspects of design and product/service development. This has given her a deep understanding of the interaction between humans and technology. She presented her master’s thesis, Usage of Behavioural Design Methods, in 2020, in collaboration with Xènia Vallès Gamundi, at the Technical University of Denmark’s Department of Management. Ida Echstrøm works as an IT project manager for the social department of the Municipality of Copenhagen, where she identifies the needs and requirements for new IT solutions in a complex organisation with many actors and arenas.

Jaap Daalhuizen received a Ph.D. degree in industrial design engineering from the Delft University of Technology in 2014. He is an Associate Professor in Design Methodology with DTU Management, Technical University of Denmark. His research focuses on design and innovation processes and design methodology. He also works on issues of research quality in design research, particularly focused on the research on design methods and their impact in practice and education. He is co-editor of the Delft Design Guide, a widely used textbook on design methods, which has been translated into Chinese and Japanese. He is also interested in applied research on design for behavioural change. He has published in journals including Design Studies and International Journal of Design.

Introduction

Behavioural design is key to addressing major behavioural and societal challenges in areas ranging from health to sustainability (Kelders et al., 2012; Niedderer et al., 2016; Schmidt & Stenger, 2021). To achieve this, behavioural designers integrate insights and work practices from design and behavioural science (Bucher, 2020; Niedderer et al., 2017). This synthesis of mindsets, processes, and methods forms the basis for framing, developing, and delivering interventions that produce positive, ethical behaviour change (Mejía, 2021; Tromp et al., 2011). However, such synthesis is not without challenge. Despite a growing body of work describing processes and methods in this context (Cash et al., 2017; Niedderer et al., 2016; Wendel, 2013), major questions remain as to how methods are actually selected, adapted, and used in behavioural design.

At the heart of these questions is the duality introduced by the integration of design and behavioural science (Mejía, 2021; Schmidt, 2020). Here, the essential value of each aspect stems from radically different mindsets, with design emphasising creative (re)framing and abductive reasoning (Dorst, 2011; Kolko, 2010) and behavioural science emphasising theory-motivated evidence and inductive/deductive reasoning (Dolan et al., 2014; Michie et al., 2008). These differences are concretely embodied in the mindsets, procedures, and goals of design versus behavioural science methods, with behavioural designers having to integrate methods ranging from design creativity (Cash et al., 2017) to small group workshops (French et al., 2012) and Randomised Controlled Trials (RCTs) (Kelders et al., 2012). Hence, while much of the value of behavioural design is derived from integrating the best of design and behavioural science, many projects fall back on either a design-led or a behavioural science-led framing (Khadilkar & Cash, 2020; Reid & Schmidt, 2018). To clearly differentiate this specific approach, we define the integration and careful balancing of methods and mindsets from design and behavioural science as Integrative Behavioural Design. Thus, central to unlocking the value of behavioural design is understanding one of the key supports for design work: methods and their use (Van Boeijen et al., 2020).

In this context, current understanding faces two major questions. First, almost all current method repositories reflect a single main framing in either design or behavioural science, without addressing possible interactions between these (Van Boeijen et al., 2020; Kumar, 2013; Michie et al., 2015). This provides a huge scope of potential methods with little insight into which are selected in practice. Such vagueness in scope is a critical barrier to understanding how methods and insights from one domain might generalise to others (Wacker, 2008). As such, it is unclear to what degree prior research on methods can be applied to integrative behavioural design. Thus, our first research question is: what methods are selected during behavioural design and when?

Second, Daalhuizen and Cash (2021) highlight how alignment between driving purpose and mindset is essential to method adaption and use. Here, while Daalhuizen (2014) identifies uncertainty as a potential unifying driver for method use in design, this has not been examined in the context of behavioural design and the different mindsets embodied in design and behavioural science methods (Khadilkar & Cash, 2020; Reid & Schmidt, 2018). Thus, our second research question is: how and why are methods adapted and used during behavioural design?

Given these questions, we aim to explore method use in behavioural design, taking an initial starting point in uncertainty (Daalhuizen, 2014). To achieve this, we conducted fifteen interviews with globally recognised behavioural design experts from across the behavioural design field. These provided the basis for thematic analysis related to what, when, how, and why methods were used in this context, as well as for the initial compilation of major methods used across all phases of the behavioural design process. Based on this, we develop an overview of methods in behavioural design, and propose a conceptual framework explaining how and why they are adapted and used in practice. Thus, we take a step towards explaining how design and behavioural science methods can be used in harmony in integrative behavioural design. This provides major theoretical and practical insights for behavioural design.

Background

In order to provide a basis for this research, we first outline the characteristics of behavioural design, followed by examining current understanding regarding design method use. Due to the emerging nature of theory in this area (Khadilkar & Cash, 2020; Mejía, 2021), we employ a scoping review to establish the initial domain, variables, and relationships informing our empirical work (Cash, 2018; Grant & Booth, 2009).

Behavioural Design and Uncertainty

Behavioural design deals with the process of designing for behaviour change, typically facilitated by multiple design artefacts (Khadilkar & Cash, 2020). This has been described in terms of a diverse set of process models and frameworks (Bay Brix Nielsen et al., 2018; Bhamra et al., 2011; Fogg, 2002; Tromp et al., 2011), all formulated with the intent to support designers in creating positive, ethical changes in behaviour within a specified scope (Lockton et al., 2013; Schmidt, 2020), balancing individual and collective concerns (Tromp et al., 2011). The nature of this design challenge has recently been defined by Khadilkar and Cash (2020) as:

Behavioural design has the goal to explicitly and ethically realise positive behaviour, desired by both the individual and society; the object of design is behaviour itself, which is explicitly understood and designed for using behavioural theories and brought into effect by actively changing user psychology with the help of artefacts. (p. 521)

This positions behavioural design at the intersection of design and behavioural science (Reid & Schmidt, 2018), with a focus on working with psychological, social, and behavioural effects that stem from human-product or other human-artefact interactions (Bucher, 2020; Fokkinga & Desmet, 2013). Behavioural design draws together the generative potential of design with the theory- and data-grounded problem solving of behavioural science (Reid & Schmidt, 2018; Schmidt, 2020). The generative, abductive reframing emphasised by design is essential to circumventing complex, wicked behavioural and social problems that often defy neat analytical solutions (Schmidt, 2020; Schmidt & Stenger, 2021). Conversely, the analytical inductive/deductive approach emphasised by behavioural science is essential to understanding and influencing behaviour in a predictable manner (Bay Brix Nielsen et al., 2021; Schmidt, 2020). For example, Khadilkar and Cash (2020) explain how it is common for behavioural design projects to include both abductive reframing and inductive/deductive work with theory and data. Therefore, behavioural designers use both design-led and behavioural science-led methods, ranging from framing a vision for the product (Tromp & Hekkert, 2018; Wendel, 2013) to lists of behaviour change techniques (Dolan et al., 2014; Michie et al., 2011). Thus, much of the value of behavioural design is derived from integrating design and behavioural science methods around a common focus on behaviour change (Khadilkar & Cash, 2020; Reid & Schmidt, 2018).

In this context, many of the core challenges encountered during behavioural design cannot be easily addressed by the inductive/deductive use of behavioural theory (Reid & Schmidt, 2018; Schmidt & Stenger, 2021). Therefore, designers draw heavily on their own understanding and intuition in the critical generative work of framing and reframing behavioural problems and solutions (Tromp et al., 2011). Such difficulties in understanding are directly connected to perceived or epistemic uncertainty in design (Tracey & Hutchinson, 2016; Wiltschnig et al., 2013). Here, Cash and Kreye (2017) broadly define perceived uncertainty as: “a designers’ perceived lack of understanding with respect to the design task and its context” (p. 3), linking to wider discussions of uncertainty in the management literature (O’Connor & Rice, 2013). This is further nuanced by Christensen and Ball (2018), who describe epistemic uncertainty as: “a designer’s experienced, subjective and fluctuating feelings of confidence in their knowledge and choices” (p. 134). For simplicity, we henceforth refer to these similar conceptualisations as uncertainty. Uncertainty has been shown to drive all types of design work, ranging from generative sketching, problem/solution co-evolution, and creative analogising (Christensen & Ball, 2016, 2018; Scrivener et al., 2000) to more analytical information processing and knowledge sharing (Cash & Kreye, 2018; Lasso, Kreye et al., 2020), as well as general method use (Daalhuizen et al., 2009). Thus, uncertainty in general forms a potential driver for both abductive and inductive/deductive design work, making it an ideal lens for investigating integrative behavioural design.

Decomposing this general driver, a number of recent empirical studies have demonstrated that differences in the actions taken by designers can be explained by variation in the level or type of uncertainty (e.g., technical or market uncertainty; O’Connor & Rice, 2013; Kreye et al., 2020; Lasso et al., 2020). While the majority of the design literature addresses the level of uncertainty in general (Cash & Kreye, 2017; Christensen & Ball, 2017), management scholars have highlighted the predominance of technical and organisational uncertainty in new product development (O’Connor & Rice, 2013; Song & Montoya-Weiss, 2001). Given this decomposition of uncertainty, it is relevant to differentiate behavioural design from other types of design work and to propose that practitioners also experience behavioural uncertainty as a driver of their actions. This, in turn, might explain how disparate methods are incorporated into a single harmonious process. Thus, the question becomes what types of uncertainty behavioural designers face and how these drive their use of methods across the behavioural design process.

As a starting point for answering this question, the characteristic challenge of behavioural design can be framed in terms of the difficulties in understanding behavioural problems and solutions. Specifically, behavioural designers are confronted with many, varied behavioural theories (Michie et al., 2014), substantial complexity in understanding and predicting behavioural outcomes (Fogg & Hreha, 2010; Kelly & Barker, 2016), and frequent unexpected side effects (Michie et al., 2015). In particular, interventions often fail due to complex interactions with the wider context over time, which cannot easily be resolved by traditional behavioural science-led methods, such as controlled experimental validation (Lambe et al., 2020; Schmidt & Stenger, 2021). All of these challenges can be linked to descriptions of behavioural uncertainty in psychology (Kipnis, 1987; Redstrom, 2006), i.e., the uncertainty associated with being able to predict or explain a person’s behaviour (Redstrom, 2006). While this type of uncertainty has not previously been described in the design or management literature (Cash & Kreye, 2017; Christensen & Ball, 2017; O’Connor & Rice, 2013), it does correspond to empirical descriptions of the challenges faced by behavioural designers (Khadilkar & Cash, 2020). Thus, behavioural uncertainty provides a potential starting point for understanding method use in behavioural design.

Design Methods

Methods bridge theory and practice, and help designers work with uncertainty by extending their abilities, enhancing reflection, and supporting learning (Daalhuizen, 2014). Methods act as intermediates that allow people to learn from others more efficiently and with greater effect (Daalhuizen, 2014), and many companies claim that design methods are central to their activities (Gericke et al., 2020). Design methods are assumed to improve design performance by transferring know-how between people over time and space (Daalhuizen, 2014). Methods thus function as an important means to transfer procedural knowledge to designers.

The information that methods contain can originate from both practice and academia. A method can be defined as a formalised representation of an activity that functions as a mental tool to support designers to (learn how to) achieve a certain goal, in relation to certain circumstances and available resources (Daalhuizen & Cash, 2021; Daalhuizen et al., 2019). As such, methods can be conceptualised as mental tools providing structure for designers’ thinking and behaviour (Daalhuizen et al., 2019). Critical to this is the core procedural information needed to use a method, including its goal, description in practice, and intended outcome (Andreasen, 2003; Daalhuizen et al., 2014; Roozenburg & Eekels, 1995). However, this procedural information must also be processed, made sense of, and ideally translated into new or changed design practices. Thus, methods also need to convey the underlying mindset (Andreasen et al., 2015; Daalhuizen, 2014), their general role in the overarching design process, and how and when they ought to be used to support design practices (Daalhuizen, 2014; Dorst, 2008). These key elements of method content have been crystallised in the recent work of Daalhuizen and Cash (2021) as: Method Framing, Method Rationale, Method Goal, Method Procedure, and Method Mindset.

Typically, method repositories (see, e.g., Van Boeijen et al., 2020; Chasanidou et al., 2015; Kumar, 2013) deal with method framing and mindset only implicitly, for example via the method label, overall goal description, information about when to use the method, and expected input/output (Kumar, 2013). This implicit focus is further compounded by the link between mindset and identity, with designerly method use and characteristic traits such as creativity and empathy constituting an important part of operationalising designers’ professional identity (Andreasen et al., 2015; Kunrath et al., 2020a). Hence, methods must be understood in the context of designers’ expertise and approach to design work itself (Dong, 2009). This is also reflected in a connection between designers’ expertise development and their ability to deal with uncertainty (Tracey & Hutchinson, 2016). This is not normally an issue, because most projects deal with a single dominant mindset, such as design thinking (Brown, 2008) or gated product development (Ulrich & Eppinger, 2003). However, it becomes more critical in the context of integrative behavioural design. Here, at least two mindsets need to be harmonised across the design process (Reid & Schmidt, 2018), and in some cases the more inductive/deductive focus of scientific problem solving can appear to be in tension with the nature of design (Cross, 2001; Stolterman, 2008). Thus, in order to understand how current methods and repositories might apply to behavioural design, it is necessary to specifically characterise method use, its purpose, and the methods’ originating sources and associated mindsets in this context.

Methods in Behavioural Design

Given the above framing, it is important to situate understanding of method use with respect to current processes and methods in behavioural design. Following its integrative nature, the behavioural design process combines design-led (re)framing, creativity, development, and iteration with behavioural science-led characterisation of behaviour, integration of theory, and validation of proposed interventions (Fogg, 2009a; Wendel, 2013). Therefore, while user studies, prototyping, and testing are typical process elements (Van Boeijen et al., 2020; Ulrich & Eppinger, 2003), their character and degree of focus in behavioural design are distinctive (Wendel, 2013). For example, Cash et al. (2017, Figure 6) illustrate how deep behavioural understanding influences every phase of the behavioural design process, as well as its interaction with wider development work. Khadilkar and Cash (2020) formalise this focus in terms of the design of a behavioural object realised via potentially multiple and varied artefacts.

Corresponding to this focus there are five distinct phases that characterise behavioural design in context. With respect to the core design process, both Wendel (2013) and Cash et al. (2017) describe four phases: i) identification and framing of the behavioural problem based on an understanding of both design and behavioural science; ii) mapping and description of relevant behaviours, actors, and outcomes; iii) framing and development of intervention(s), which are iv) refined via iterative testing. Broadly similar phases are also described in Tromp and Hekkert’s (2016) Social Implication Design (SID) method: i) (re)frame the project; ii) define a desired effect; iii) identify possible influences; and iv) develop these into concepts. However, if interventions are to be effective in real world contexts over the long term, a fifth phase is needed to deal with the complexities of scaling up, launching, monitoring, and maintaining behaviour (Schmidt & Stenger, 2021; Wendel, 2013). This phase is essential to dealing with unexpected side effects or other emergent ethical issues (Kelly & Barker, 2016; Michie et al., 2015), and has been highlighted in both the design (Fogg, 2009b; Tromp & Hekkert, 2016) and behavioural science literature (Michie et al., 2015; OECD, 2019). Across all phases, designers draw on different lenses for understanding and influencing behaviour (Bay Brix Nielsen et al., 2021; Lockton et al., 2010; Michie et al., 2015). Further, behavioural designers continuously evaluate potential ethical issues, which are embedded throughout the process with specific attention and tools in all phases (Berdichevsky & Neuenschwander, 1999; Lilley & Wilson, 2013). Thus, while containing many familiar elements, the behavioural design process differs significantly from traditional design or product development processes.

In this context, a number of specific methods have been developed to aid designers in dealing with the complexity and uncertainties inherent to behaviour and behavioural interventions (Wendel, 2013). Notable examples include the Design with Intent Method (Lockton et al., 2010), Behavioural Prototype (Kumar, 2013), Behavioural Mapping (Hanington & Martin, 2017), Social Implication Design (Tromp & Hekkert, 2014, 2018), and Behavioural Problem/Solution matrix methods (Cash et al., 2020). The scope of the process and the need to integrate design and behavioural science insights mean that behavioural designers draw on a wide array of methods, including traditional design (e.g., personas), development (e.g., desk research), behavioural science (e.g., cognitive mapping), and even design research methods (e.g., behavioural pattern mapping). These have potentially very different goals and procedural characteristics, as well as underlying mindsets and staging, ranging from highly constrained behavioural science methods, such as RCTs (Kelders et al., 2012), to design methods emphasising creative identification of behavioural interventions (Lockton et al., 2010), all of which need to be weighed against the ethical implications, aims, and values underpinning the desired behaviour change. However, there is no current overview of the methods used in behavioural design, and the field is yet to be rigorously codified (Schmidt, 2020). Thus, there is a need to better understand how methods are harmoniously applied across an integrative behavioural design process.

Methodology

Given the lack of prior theory and the open-ended research questions, we take a theory building approach to establish the main relationships between uncertainty and method use in behavioural design (Cash, 2018) and deliver insights for both design theory and practice in this little-formalised area. As such, we used in-depth interviews with internationally recognised experts in behavioural design to build up a picture of practices across the behavioural design space. This approach is suitable due to i) the lack of extant theory, ii) the need to explore the complex interactions between methods and behavioural design uncertainty across the whole process, and iii) the need to connect theoretical and practical insights (Robson & McCartan, 2011). Further, this approach limits potential ethical issues to the provision of informed consent and data confidentiality.

As a starting point for this investigation, we adopted a general conceptual framework linking uncertainty experienced by the designer to the way methods were used across the behavioural design process (Andreasen et al., 2015; Daalhuizen, 2014). Furthermore, we explored key elements of the methods in terms of their specific goal, procedure, mindset, convergent or divergent stance, and expected outcome (Daalhuizen & Cash, 2021). Based on this framework we were able to define a research sample and carry out a multi-stage interview study as outlined in the following sections.

Sampling

We follow the best-practice guidelines of Cash et al. (2022) to outline our major sampling considerations. First, the scope of behavioural design is very broad, with a potential population including both designers and behavioural scientists (Reid & Schmidt, 2018). To limit the scope, and based on the framework of Reid and Schmidt (2018), we excluded those working at the extreme ends of the behavioural design spectrum, i.e., fully design-led or fully behavioural science-led, to focus on the core population of practitioners dealing with integrative behavioural design.

Second, given the diverse nature of this population and the limitations of current theory regarding how it might vary and how this might impact method use, we aim for analytical generalisability by the abstraction of theoretical insights regarding uncertainty and method use from the specific interview data (Robson & McCartan, 2011). This allows for the development of robust insights that can form the basis for subsequent evaluation of the wider population in future research (Onwuegbuzie & Leech, 2007).

Third, given these considerations, a purposive sampling schema is appropriate (Onwuegbuzie & Leech, 2007). Specifically, we aimed to obtain a sample reflecting the diverse range of practitioners working in integrative behavioural design, and thus adopted a variation sample supported by snowball recruitment of participants from an initial set (Cash et al., 2022, Figure 2). Here, the unit of analysis was the individual behavioural designer, based on prior research showing that most behavioural design teams are small and typically comprise only a single main behavioural design expert (Khadilkar & Cash, 2020). Further, the population of behavioural designers is both small and includes many novice practitioners, as the field is still rapidly developing, whereas we required our sample to be able to reflect on and describe method use across the whole process. As such, we focused on experts. Here we draw on the works of Richman et al. (1996) and Ericsson et al. (2006), who both describe expertise based on approximately ten years of practice (although this threshold is lower in still-developing fields, as in this case) coupled with special skills or knowledge as recognised by peers. Correspondingly, the major theoretical sampling criterion was experience in leading the whole process of integrative behavioural design, and expertise in this context. Specifically, we considered an individual to be an expert in behavioural design when he/she is socially recognised as such and can be distinguished by experience and knowledge in the domain. This also aligned with our variation/snowball sampling schema.

Finally, given the need to develop both qualitative saturation and rich insights, we followed prior work in limiting the sample size to a small set of interviewees whom we could work with in depth (Onwuegbuzie & Collins, 2007; Sandelowski, 1995). Prior work has highlighted circa 12 participants as appropriate for this type of research, and we followed this to recruit 15 interviewees (Cash et al., 2022; Onwuegbuzie & Collins, 2007).

Identifying and Screening the Sample

The sample was defined in three stages. First, individuals were identified via online search (e.g., Google, LinkedIn) using keywords (e.g., behavioural design) and a location, to ensure the coverage of multiple geographical areas and approaches to design practice. Potential experts known through the research team’s network were also included at this stage.

Second, identified individuals were assessed based on the above expertise criteria, in addition to their field, education, teaching work, publications, claims of expertise, and recognition by other acknowledged experts in the field. Once an individual was identified as an expert, he/she was contacted to evaluate willingness to participate. The approached experts were asked for further recommendations of other experts in a snowball sampling approach (Teddlie & Yu, 2007). Recommended experts were again evaluated according to the above criteria.

Third, experts were identified and interviewed until saturation was achieved, i.e., further data collection only confirmed results already identified (Sandelowski, 1995), whilst also providing multiple insights and data related to each main finding. Based on this process, 49 potential experts were initially contacted, of which 8 declined and 26 did not reply, could not participate, or were excluded as not sufficiently expert after initial contact. In total, we interviewed 15 globally recognised design experts situated in a professional context. An overview of the sample is given in Table 1.

Table 1. Overview of expert sample.

| Criteria | Overview (numbers refer to number of interviewees) |
|---|---|
| Country | 1-Australia, 6-Denmark, 2-the Netherlands, 1-United Kingdom, 2-United States of America, 1-South Africa, 1-Sweden, 1-Switzerland |
| Academic background (may have multiple backgrounds) | 2-Biology & Biochemistry, 5-Business & Economics, 5-Design, 1-Graphic Design, 2-Marketing, 1-Law, 2-Literature & Linguistics, 1-Philosophy, 4-Psychology |
| Years of practice experience in integrative behavioural design | Average of 7.4 years |
| Current employment | 3-Independent consultant, 6-Consultancy founder, 4-Consultancy employee, 2-Internal behavioural team in a company or ministry |
| Publications (may have multiple types of publications) | 5-Formal (journal articles, books, conference papers), 4-Informal platforms (webpages, blogs, podcasts, videos) |
| Teaching (may teach in both categories) | 12-Education for corporations and practitioners, 5-University education of students |

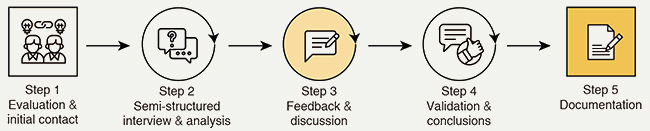

Multi-Round Interviews and Analysis

A multi-round interview approach was selected due to the richness of data that it generates (Harvey-Jordan & Long, 2001) and because it offered the best fit with the research aim. Data was primarily collected via individual, semi-structured interviews lasting approximately one hour. Individual interviews were chosen to ensure that experts were not biased by others’ opinions. After each round, insights and findings were presented back to individual interviewees to increase validity and obtain further feedback (Miles et al., 2014). A visualisation of this multi-round process is given in Figure 1 and detailed below.

Figure 1. Representation of the followed procedures in the multi-round interview approach.

Step 1. Evaluation & Initial Contact

Experts were first approached by telephone or online (e.g., via email or LinkedIn), and a first interview was scheduled. Prior to the meeting, information was sent including working definitions and a consent form, which was to be returned before the interview. The consent form also informed the experts about the objectives and procedures of the study, contact details for the research team, the time commitment, and ethical issues around consent and privacy.

Step 2. Semi-Structured Interview & Analysis

The interviews were conducted via an online video call service and followed a semi-structured interview guide. In accordance with prior qualitative studies of uncertainty (O’Connor & Rice, 2013), uncertainty was addressed in terms of the challenges and issues of understanding faced by the designers throughout the behavioural design process. The guide covered the following main topics:

- The key stages, events, and decisions taken across the behavioural design process.

- The methods used at each stage, where they had originated from, and how they had been adapted for the behavioural design context (if at all).

- The specific goal, method description, mindset, when to use, convergent or divergent nature, outcome, and rationale for each identified method.

All interviews were recorded for later analysis. In addition, one researcher took detailed field notes during each interview to further contextualise the findings. The interviews themselves were supported by an online co-creation tool used to visualise the expert’s insights. This was based on the Architecture of Design Doing framework (Daalhuizen et al., 2019), and helped in capturing and describing design practices in a coherent and consistent manner, ensuring that the context-sensitive nature of methods was kept intact. Finally, secondary data in the form of process templates, method descriptions, and method templates were also collected to triangulate insights.

Based on this data, a multi-round inductive analysis was undertaken (Miles et al., 2014). First, all identified methods were listed and used as a basis for open coding in order to distil initial insights (Neuman, 1997). Second, axial coding and thematic analysis were used to distil critical patterns and to refine and exemplify insights (Braun & Clarke, 2006; Neuman, 1997). During this process the research team iteratively moved between the data and the literature in order to ensure conceptual validity of the themes (Miles et al., 2014).

Step 3. Feedback & Discussion

The analysis in Step 2 was then used as the basis for a second round of feedback and elaboration with the individual interviewees. Feedback was elicited for each major finding either via written comments on a PowerPoint summary or via a further 20-minute online meeting (again recorded for subsequent analysis). This feedback was then used as the basis for another analytical iteration of the themes from Step 2.

Steps 4 and 5. Validation & Conclusions, and Documentation

In a final round of validation, the refined findings from Step 3 were again presented to the experts. At this stage, no further challenges were raised and no further insights were obtained. As such, the interview process was concluded and the results were documented.

Findings

A total of 71 methods were identified and characterised across the span of the behavioural design process (summarised in the Appendix). These cover the five phases outlined in the background section: i) identification and framing of the behavioural problem, based on an understanding of both design and behavioural science; ii) mapping and description of relevant behaviours, actors, and outcomes; iii) framing and development of intervention(s), which are iv) refined via iterative testing; and v) scaling up, launching, monitoring, and maintenance of behaviour. The usage of these methods provided the foundation for the thematic analysis. Based on this, we identify three main findings: the distribution and nature of current methods used in all phases of the behavioural design process; how methods from other fields and from design have been developed and used, shaped by a tension between design and scientific concerns; and how behavioural uncertainty drives this development and use.

Method Use across the Behavioural Design Process

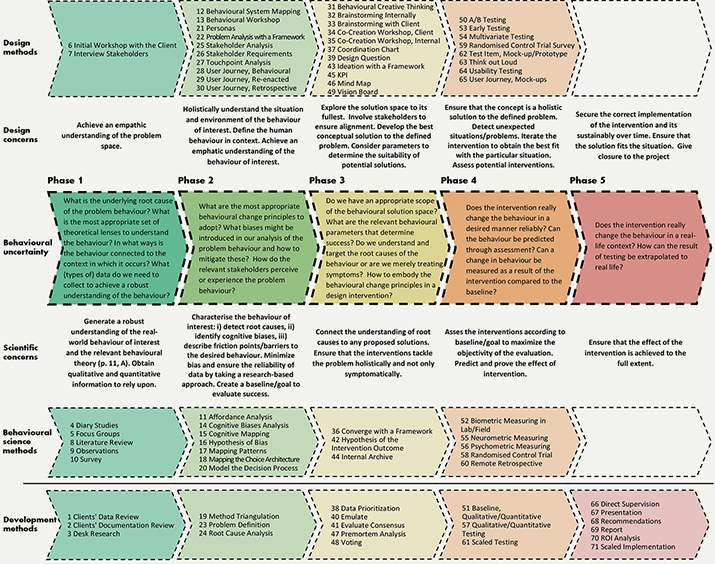

In order to map method usage across the behavioural design process, methods were allocated to one or more of the five phases identified above. Allocation was based on the experts’ statements and the visualisation co-created during the interview. The main methods used in each phase are detailed in the following sections, and their distribution across the process is illustrated in Figure 2.

Phase 1. Identification and Framing

The process begins with establishing an initial project definition (typically with a client), with a focus on collecting information and compiling existing knowledge on the subject. Ten methods were commonly used in this phase, the majority adapted from either generic development methods (three methods) or behavioural science (five methods). Of these, the most important were literature review (8), clients’ documentation review (2), interview stakeholders (7), focus groups (5), and observations (9); here and below, numbers in parentheses refer to the methods listed in Figure 2. A major aim of these methods was to reveal assumptions held by clients, because their understanding of the behavioural problem is typically perceived to be tacit, vague, and incomplete, as illustrated by Expert B: “People have a tendency to think they have defined something when it is, actually, very vaguely defined.” Critical to this phase was identifying a framing for the project that would narrow the focus enough to enable work in Phase 2, as well as support later intervention development and behaviour change. This is illustrated by quotes from Expert F: “As we define the problem, we are also defining the scope of the field research” and “We don’t know what we are going to make, because we don’t know what we are going to find.” Here, observations were particularly critical to confronting assumptions and framing the true problem behaviour, as explained by Expert G: “We are strong believers in understanding people’s real behaviour, we prefer to go into the context and observe.” Overall, this helped establish the nature of the process, as explained by Expert K: “We saw two domains that were really interesting to us… the lean start-up domain… and design thinking, where we fell in love with the methodology. By combining these two things with a deeper understanding of psychology we basically tinkered with behavioural design as a discipline.”

Phase 2. Mapping and Description

In this phase, experts used the framing from Phase 1 to bound the characterisation of the problem behaviour. This primarily built on a systematic approach, described as problem analysis using a theoretical framework (22). The frameworks used ranged from those drawn from the scientific literature to self-created ones. Irrespective of the specific framework, three main outputs were consistently highlighted: i) the detection of problem root causes, ii) the identification of relevant cognitive biases of the user, and iii) the description of friction points or barriers to the desired behaviour. This also helped identify potential for additional value creation, i.e., through creative re-framing of the problem by utilising multiple perspectives, as explained by Expert K: “The beauty of the framework is the shared space in which you map your psychological insight… and by engaging your client and your team in building up that framework… to get everyone to diverge or converge towards understanding the essence of the problem.” This is further exemplified by Expert H: “We find the model that allows us to understand the behaviours and what stands in the way of those behaviours.” Such analysis and re-framing was typically complemented by the development of various types of user journey (28, 29, 30), cognitive biases analysis (14), and behavioural system mapping (12). In general, experts sought to combine insights from multiple methods in order to both reveal alternative perspectives and triangulate evidence to support any conclusions. Expert P: “If you just rely on academic literature it could be that it is not relevant to the particular context you are working in. If you just rely on the context you could be biased by the Hawthorne effect... If you just rely on data, you might be inferring the wrong assumptions.” As such, method triangulation (19) was identified as key. In addition to these behaviour-specific methods, a number of traditional design methods were used.
However, these were typically adapted to focus on the behaviour of interest, using the experts’ behavioural science knowledge to do so. An example is described by Expert A in the use of a behavioural user journey (28) adapted “with a focus on mapping every aspect of the behaviour”, linking each aspect to emotional states, cognitive biases and drivers, as well as to other behaviours. Another such adaptation is the re-enacted user journey (29), in which behaviours are reproduced in context.

Phase 3. Framing and Development of Interventions

With the problem behaviour defined, sixteen methods were used for ideation purposes. Most common amongst these were types of brainstorming (32 and 33), vision board (49), and mind map (46). However, these were often complemented and supported by an archive of successfully applied behaviour change principles that several experts had compiled, allowing them to revisit past projects for inspiration. Seven experts described this as an explicit method, describing their creation and use of an internal archive (44). Here, a critical creative element was translating behaviour change principles into designed interventions, which was considered a key differentiator between behavioural design and behavioural science, as explained by Experts H: “There are a lot of behavioural scientists, but not very many behavioural designers” and M: “This is not very scientific, but we are half creative half science. There has to be some room for messiness.” Accordingly, the experts also highlighted how their creative approach often did not follow a stringent set of methods, instead emphasising diverse engagement and creativity, as stated by Expert H: “One [of] the things I found from working in the creative industry is that having a lot [of] different people, lots of different backgrounds, is usually more helpful than having three behavioural scientists in a room that all went to university, studying the same topic.” All experts emphasised the importance of linking the problem to the solution, with the goal of developing potential solutions aligned with the analysis results. This is exemplified by Experts K: “We make sure that we have the best possible insight as a point of departure for ideation, because ideation should be about what is the psychological problem that we need to solve” and M: “We spend a lot of time saying, ok, we know what behavioural principle can help but how can we make this into something that is creative and fun.”

Phase 4. Iterative Testing and Refinement

Across all experts, there was an acknowledgement that iterative testing was essential due to the unpredictability of behavioural effects, as highlighted by Expert C: “Tests are a behavioural designer’s best friend.” As such, a wide variety of testing methods were used, including test item mock-up/prototype (62), either digital or physical, coupled with qualitative and/or quantitative testing (57). The most commonly used methods were RCTs (58), survey (59), A/B testing (50), and multivariate testing (40). All experts emphasised their preference for testing in the real-world context, as illustrated by Expert O: “If you are not actually testing with real users, you are not actually testing.” Importantly, testing served both to inform creative design and refinement (e.g., via iterative prototyping) and to support more validation-focused behavioural evaluation (e.g., via RCTs). This dual purpose is highlighted by the contrasting quotes of Experts F: “We find that just creating the prototype and discussing and ending it, is its own creative process, separate from ‘testing’” and E: “We are constantly testing up against what our initial objectives were.” Typically, this phase concluded with field tests, which allowed analysis of how users interacted with the solution in the actual situation. Whatever testing method was applied, all experts emphasised the need for a qualitative and/or quantitative baseline (51) in order to assess the final success of an intervention. This focus on testing was typically linked to a large number of iterations, as explained by Expert N: “We might loop back to deliberate or design, depending on whether we are seeing successful results; so, if we are finding either, it is not working or it is working in a way that we didn’t expect we want to go back.”

Phase 5. Scaling up, Launching and Maintenance

The final phase deals with planning how to implement the intervention sustainably and give closure to the project. Six methods were identified here. The most commonly mentioned were recommendations (68) and presentations (67) to stakeholders, which were typically supported by data from the testing phase as well as ROI analysis (70). There was also a general acknowledgement that, due to the context-specific nature of behaviour and behavioural interventions, each project often demands a specific method. However, there was a common focus on making sure that the solution fits the client and is aligned with considerations such as brand strategy and identity. This is exemplified by Expert M: “the messenger effect. If the message doesn’t fit the messenger, something is going to go wrong.”

Method Development and Use Balancing Design and Scientific Concerns

Following the analysis of the used methods, it became apparent that there were two major sources for methods in behavioural design: traditional design methods dealing with primarily design related concerns and behavioural science methods dealing with primarily scientific research concerns. The two sources reflect a general tension that was expressed by many experts: between scientific conservatism in evidencing, explaining, and quantifying claims on the one hand, and the need for creativity and design thinking in actually developing and realising solutions on the other. This is illustrated by the way in which the experts adapted or combined methods from both sources, making the behavioural science methods more designerly, and the design methods more scientific. This was evident when contrasting method adaptation and use in Phases 1 and 2 with Phases 3 and 4, as illustrated in Figure 2.

Methods adapted from the behavioural sciences were key to collecting information in Phase 1. These methods were used both to characterise the qualitative aspects of users’ behaviour and to provide quantitative information on, for example, the frequency of a certain behaviour. This was particularly important in framing the design space as well as in creating quantifiable objectives that could later be evaluated, as explained by Expert A: “We try to work with a key metric, resulting in statements or goals like; we want to reduce sick leave by 20%.” Adding to these qualitative and quantitative methods, Phase 2 also incorporated a number of adapted design methods in order to understand the context and environment of the behaviour of interest and to empathise with the user, often forming the basis for re-framing the problem. This is illustrated by Expert P: “We frame the root causes as ‘how might we…’ —design questions—… as a way to generate interventions that are in line with the root causes.” That is, these adapted design methods helped connect the scientific and creative aspects of the process, and helped frame and develop interventions based on the resulting insights. Expert L, when describing behavioural design, stated: “cognitive psychology and design thinking, that is the core engine.” For example, the experts developed three modified versions of user journeys in order to provide a basis for both re-framing and triangulation of insights: behavioural user journey (28), re-enacted user journey (29), and retrospective user journey (30), each dealing with a distinct aspect of the behavioural problem.
Importantly, the combination of methods used in this phase was focused on developing a frame that could provide a basis for more focused scientific analysis and understanding of the problem behaviour, in conjunction with its antecedents and consequences, whilst still making progress through creative conceptualisation, as explained by Expert H: “A lot of behavioural scientists are absolutely terrible behavioural designers (...) but it is hard to find a designer that truly respects the psychology.”
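To make concrete what a quantifiable objective such as Expert A’s “reduce sick leave by 20%” involves, the following minimal Python sketch checks an observed value against a pre-intervention baseline. It is illustrative only: the function names and the example figures (10 sick days per employee per year at baseline, 7.5 after the intervention) are hypothetical and not drawn from the interviews, and the study does not report how the experts computed such metrics.

```python
# Hypothetical sketch: evaluating a quantifiable behavioural objective
# (e.g. "reduce sick leave by 20%") against a pre-intervention baseline.


def relative_change(baseline: float, observed: float) -> float:
    """Return the fractional change from baseline; negative values are reductions."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) / baseline


def meets_reduction_target(baseline: float, observed: float, target: float) -> bool:
    """True if observed is at least `target` (e.g. 0.20) below the baseline."""
    return -relative_change(baseline, observed) >= target


if __name__ == "__main__":
    # Hypothetical figures: average sick days per employee per year.
    baseline_days = 10.0
    observed_days = 7.5
    change = relative_change(baseline_days, observed_days)
    print(f"Change vs baseline: {change:.0%}")  # Change vs baseline: -25%
    print(meets_reduction_target(baseline_days, observed_days, 0.20))  # True
```

The sketch also shows why a baseline is needed: without a pre-intervention measurement, a percentage reduction cannot be computed at all.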

In terms of the iterative design and testing characterising Phases 3 and 4, more traditional design methods were widely adapted. However, in Phases 2 and 3 they were often combined with methods specifically focused on behavioural aspects. These were frequently adapted by the experts to ensure a consistent focus on behaviour, for example via the combination of ideation with a framework (43) and behavioural workshops (13). In many cases, experts explained how the problem behaviour had been characterised so precisely in Phases 1 and 2 that the relevant behaviour change principles were obvious to them, although substantial design work was still required to develop the actual interventions. For example, Expert D stated: “Once you understand the behaviour that drives the problem, the solution is often very easy to come by”, yet Expert M emphasised: “Usually (we talk) about how to combine a creative approach with insights from behavioural science.” In particular, Expert P highlighted a common challenge amongst those not experienced in behavioural design, which illustrates the central connection between behavioural science and design concerns: “When they [non-experts] jump from the second to the third phase, they don’t carry with them those root causes… As a result, a lot of the ideas aren’t linked back to what was driving them, the problem.” All experts based their creative work on previously validated behavioural insights using some form of internal archive (44). Similarly, user-centred design methods formed the basis for much of the testing in Phase 4. However, as shown in Figure 2, there was an increased focus on testing with end-users in the real-world context when possible. Here, design methods were combined with quantitative testing methods adapted from the behavioural sciences, such as RCTs (58), in order to ensure a behavioural focus as well as the robustness and validity of the results and subsequent claims.
As highlighted by Expert I: “If you can’t measure it, you can’t really know if you’ve had an impact.”

The final phase was characterised by the use of generic development and project management methods, such as presentations (67) and recommendations (68) to plan for implementation. This was particularly important as the experts were typically not directly involved in implementation, instead acting as advisors. Hence, there was a need for clarity in communication and explanation of the problem behaviour, behaviour change principles, and design approach derived in the prior phases, such that the implementation team was able to understand, launch, evaluate, and maintain the behavioural intervention. This hand-over was explained by Expert O: “Basically, we are giving them a list ‘to do’.”

Across phases, the majority of methods were adapted from other fields—primarily behavioural science—and design methods were either adapted or combined with these in order to deliver both scientific robustness and creative insight in the behavioural design process, as illustrated in Figure 2. Common to all of these methodological adaptations was the underlying focus on understanding behaviour and incorporating behavioural insights and behavioural theory into the design process in order to ensure explicability and predictability in proposed interventions, as well as the ability to quantify and document concrete behaviour change in the final phase. This points to behavioural uncertainty as a key driver for method usage in behavioural design.

Handling Behavioural Uncertainty through Methods

Central to the experts’ balance between design and scientific concerns, and hence their use of methods across the design process, was resolving the specific uncertainties they faced in understanding and designing for human behaviour. In particular, they sought to connect understanding of root causes to any proposed solutions, in order to generate effective interventions that tackle the problem holistically and not only symptomatically. This took a number of forms across the process.

First, all experts described the design process as focused on generating a robust understanding of both the real-world problem behaviour and the relevant behavioural theory. To do this, they combined creative reframing and abduction, which bounded and focused the scope of the work, with hypotheses proposed on the basis of literature review (8) and desk research (3). This was repeated in multiple phases, with different frames being established and hypotheses being iteratively tested. Concrete examples include the use of hypothesis of bias (16) and hypothesis of the intervention outcome (42), coupled with, for example, co-creative workshops (34) in Phases 2 and 3. In addition, the experts drew heavily on empirical data in their conceptualisation and decision-making in order to minimise potential ambiguity, as highlighted by Expert G: “Taking a research-based and evidence-led approach counters decision making based on assumptions and potentially biased judgements,” and as reflected in the predominance of behavioural science methods in Phases 1 and 2. Further, all experts employed method triangulation (19), as explained by Expert B: “We like to use at least two different kinds of data collection, because we know that methods are faulty.” While the above considerations emphasise how experts dealt with behavioural uncertainty linked to scientific concerns, design concerns were also important, requiring a thorough understanding of design methods and the ability to use them appropriately. This design need was highlighted by Expert H: “A lot of behavioural scientist are—I suppose—‘scientised’ by design, but not very good designers… I often think that behavioural scientists can take academic insights but find it really hard to translate them into interventions and things in the real world like artefacts or communications that actually change behaviour.”

Second, the experts made extensive use of behavioural theory in directing the focus of their work, as well as their interpretation of the problem and development of solutions. For example, Expert M explained how they aimed to minimise biases, and reveal underlying cognitive mechanisms by using theory informed observational methods: “… to not just go with our own intuition, we don’t want to just go by the way we see it.” This approach linked closely to traditional behavioural science research techniques. This was reflected in a focus on producing quantitative data via methods such as quantitative baseline (51), cognitive biases analysis (14), diary studies (4), and observations (9), in order to establish clearly defined goals and basis for comparison. For example, Expert C considered that “The essential scientific method is statistics, pairing psychological theory with statistics.” However, all experts agreed that qualitative data was also important in supporting the design work by providing insights into contextual factors, real-world effects, and user perceptions. As highlighted by Expert M: “If a certain principle works, behavioural science will say, let’s apply it; whereas a brand will say; maybe some might fit if we do it the right way.” Critically, this points to the need to balance methods to address both design and scientific concerns, allowing for framing and a creative focus as well as a productive narrowing of scope to support targeted intervention development. Expert A summarised this as: “(The methods used in designing interventions are) like if you combine behavioural insights with the scientific method and design thinking.” Ultimately, the design methods allowed the frame to be sufficiently limited as to enable the behavioural science methods, whilst also providing the potential for broadening the scope back out during exploration, development, and iteration.

Third, once the experts had established a strong design frame, and hence were able to collect and analyse more focused insights, they perceived themselves as having a strong foundation for understanding what might be causing or preventing a behaviour, which they regarded as important for developing interventions. Expert I described a common mistake of novice behavioural designers, who “don’t carry with them those root causes and, as a result, the ideas aren’t linked back to what was driving them.” This knowledge was considered essential during ideation and solution development, with behavioural insights translated into interventions via methods such as personas (21) and touchpoint analysis (27). As illustrated by Expert L: “By the time we get to the design development, we know what behavioural change principles to build up solutions upon.” There was a general consensus that the problematic behaviour was normally analysed in such depth that the intervention became obvious in principle, yet extensive creative design work and iteration were required to translate that principle into real interventions, as highlighted in the Phase 4 results above. As Expert A explained: “We need to match the solution to the problem”, with Expert K emphasising that “[after insight analysis] it becomes easy to craft a good problem statement, and then say: for the rest of the sprint are we going to design to solve this problem.” This link between behavioural problem and solution intervention was typically achieved through insights from cognitive science, supported by an internal archive (44), ideation with a framework (43), and the practitioners’ experience and expertise.
As illustrated by Expert H: “Over the last 10 years, consistently I have found that if you can use one of these three interventions, you can solve a problem quickly and usually without too many financial resources involved.” These insights were referred to as behaviour change principles, mental shortcuts, or biases, with common examples including social proof, awareness, and commitment. Ultimately, the experts aimed to ensure that the defined assumptions materialise when the interventions are applied and tested in the real world. As Expert H stated: “Ultimately all insights are absolutely useless if we don’t do anything with them.”

Given these findings, it is apparent that issues regarding the ability to explain or predict a person’s behaviour were decisive in the experts’ selection, adaptation, and use of methods in behavioural design. In particular, this was attributed to the complexity and ambiguity of human behaviour in the real world, and to the difficulty of evaluating, understanding, and predicting it. Bringing our findings together, Figure 2 illustrates the core behavioural design process, the progression of behavioural uncertainty across its phases, the balancing of design and scientific concerns, and the methods adapted from the behavioural sciences or design in response. Importantly, methods from both design and behavioural science are adapted so that they align harmoniously with each other, within each phase, and across the overall process.

Figure 2. Overview of behavioural uncertainty, design and scientific concerns, and methods used across the five phases of the behavioural design process.

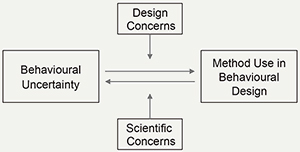

Discussion

Our research provides three main contributions with implications for theory and practice. First, we provide a comprehensive overview of the methods used in all phases of the behavioural design process. This forms an important collection for practitioners, educators, and students seeking to better understand the practices and peculiarities of behavioural design, and answers our first research question: what methods are selected during behavioural design and when are they selected? Second, we identify and explain behavioural uncertainty (i.e., “the uncertainty associated with being able to predict or explain a person’s behaviour” (Redstrom, 2006)) as a key driver of method use in the behavioural design context. This is a novel type of uncertainty in the design literature, and its characterisation provides an important link between prior work on uncertainty in design (Cash & Kreye, 2017; Christensen & Ball, 2017) and behavioural design (Khadilkar & Cash, 2020; Schmidt, 2020). Third, we explain how behavioural uncertainty informs a tension between design and scientific concerns, and how this tension fluctuates across the behavioural design process. This extends the scope of considerations that need to be accounted for when studying behavioural design (Tromp et al., 2011), as well as elaborating how behavioural designers move between design- and behavioural-science led framings in order to integrate the best of design and behavioural science methods (Mejía, 2021; Reid & Schmidt, 2018). We distil these insights into the framework illustrated in Figure 3, which answers our second research question: how and why are methods adapted and used during behavioural design? In combination with the overview in Figure 2, this relates the major concepts under study and highlights the critical relationship between behavioural uncertainty, design and scientific concerns, and method use in behavioural design.

Figure 3. Relationship shaped by tension between design and scientific concerns.

Central to our understanding of method use in behavioural design is the emergence of behavioural uncertainty as a specific driver. In psychology, behavioural uncertainty is associated with difficulties in explaining and predicting behaviour (Redmond, 2015). This substantially extends discussions regarding uncertainty in design. Prior work in design has highlighted uncertainty—in general—as a driver for all aspects of design work (Cash & Kreye, 2018; Christensen & Ball, 2018; Daalhuizen et al., 2009). However, such a general conceptualisation does not allow for substantial differentiation between various types of design work. In contrast, the management literature has highlighted how development processes differ significantly depending on the type of uncertainty they face (O’Connor & Rice, 2013), yet such differentiation has not been directly evidenced in the design context. As such, our work takes a step towards demonstrating how the type of uncertainty can differentiate design work, by distinguishing a key driver of behavioural design from, for example, technical uncertainty in new product development (Song & Montoya-Weiss, 2001). This expands Daalhuizen’s (2014) description of uncertainty as a determinant of method use in general by showing how the distinctive use of methods in behavioural design can be explained by the distinctive focus on behavioural uncertainty. This highlights the need to extend theorising of design uncertainty to include its potential type(s), in addition to level, in order to understand how it can drive such a wide variety of design work. This also suggests the need for further study of behavioural design, as behavioural uncertainty differs significantly from prior work (Cash & Kreye, 2018; Christensen & Ball, 2018; O’Connor & Rice, 2013), and therefore has the potential to require new or adapted methods, process, and management approaches. 
Thus, the characterisation of the relationship between behavioural uncertainty and method use in behavioural design points to a number of important areas for further theory development.

Together with our detailed findings (Figure 2), the relationship between behavioural uncertainty and method use also helps explain previously described challenges in understanding and designing for complex behaviour (Fogg & Hreha, 2010; Khadilkar & Cash, 2020) and predicting the impact of interventions in context (Lambe et al., 2020; Schmidt & Stenger, 2021). Further, behavioural uncertainty provides a common driver linking design and behavioural science methods (Figure 3). Specifically, behavioural uncertainty motivates integrative behavioural design by requiring generative, abductive methods to (re)frame problems and solutions, such that they can be feasibly addressed by inductive/deductive methods, to achieve both impactful and explicable behaviour change. In Dorst’s (2011) terms, methods supporting abductive work are essential to establishing the value and frame, while methods supporting inductive/deductive work are essential to concretising and evidencing the how (i.e., the working principles). This takes a step toward dissolving the apparent tension between methods emphasising abductive reasoning (Dorst, 2011; Kolko, 2010) and those emphasising inductive/deductive reasoning and scientific theory (Michie et al., 2008) in the context of behavioural design. Thus, the identification of behavioural uncertainty provides a potential foundation for understanding the harmonious use of methods from across fields in integrative behavioural design.

In this context, design concerns were more related to exploring the scope of conceptualisation, potentially increasing uncertainty, while scientific concerns were particularly related to uncertainty reduction via empirical research and the development of theoretical insights from the scientific literature. As such, these two sets of concerns, and their associated methods, were observed in a dynamic balance across the behavioural design process (Figure 2). The interviewees generally sought this balance by moving between design- and behavioural-science led methods whilst maintaining a common mindset and focus, which takes a step towards explaining how designers productively integrate such distinct fields (Mejía, 2021; Reid & Schmidt, 2018). This highlights the need for behavioural designers to consciously reflect on and integrate the diverse mindsets and goals embodied in each method, with their own mindset and focus on behavioural uncertainty. Despite the observed importance of achieving this alignment, current research on the nature and content of methods and how differences in these might lead to conflicts and error, especially across fields, is still sparse (Daalhuizen & Cash, 2021). This also emphasises the need to critically evaluate the assumptions embodied in method repositories developed in a single field. For example, mindset is often only addressed implicitly in the titling and goal descriptions of a method (Van Boeijen et al., 2020). This can lead to confusion and error, particularly where terms have different meanings, similar methods are used in different modes, or underlying reasoning is left implicit (Andreasen, 2003), all of which occur in the interaction between design and behavioural science methods in behavioural design. 
For instance, behavioural design itself has multiple distinct characterisations (Reid & Schmidt, 2018; Schmidt, 2020), and both design and behavioural science method repositories include multiple variants of framing, user analysis, or prototyping methods (Kumar, 2013; Van Boeijen et al., 2020; Wendel, 2013). Thus, the balancing of concerns (Figure 3) serves to highlight an important aspect of behavioural design practice, and points to the need for further theory development in understanding how methods are interpreted and processed in an integrative process.

Finally, this expanded scope of methods, and tension between design and scientific concerns in the shaping of their use (Figure 3), point to a potential challenge in understanding behavioural design mindset through traditional design expertise lenses. Specifically, while authors such as Dong (2009) highlight designers’ ability to understand and work with complex problems, Tracey and Hutchinson (2016) to manage uncertainty, and Kouprie and Visser (2009) to empathise with users, it is apparent that methods and their usage in behavioural design also draw heavily on social and behavioural science skills and mindset. These go beyond the major attributes and skills identified in the recent review of design expertise and professional identity by Kunrath et al. (2020b), and highlight the centrality of balancing scientific and designerly ways of thinking in the behavioural design context (Mejía, 2021; Schmidt, 2020). This is reflected in the fact that the interviewed experts all identified themselves as both behavioural designers and behavioural scientists or strategists. Thus, there is significant potential for further exploration of how behavioural designers combine these aspects of scientific and design mindset in order to shape both their practice and professional identity.

Limitations and Further Work

Before discussing implications, it is important to consider the main limitations of this work. First, the empirical approach drew on a limited number of expert interviews. This limits the degree to which insights can be generalised directly to other contexts or design settings, particularly given that both theory and practice are still developing in behavioural design. However, by working towards analytical generalisation (Robson & McCartan, 2011) we have been able to connect our insights to basic theoretical mechanisms in design, and hence provide a basis for further study in this area. Moreover, the robustness of our findings is supported by the degree of commonality found across a diverse group of experts. Importantly, this work represents one of only a few studies of globally recognised expert behavioural designers working in practice. Further work is needed to examine how these insights play out in real behavioural design projects across the scope of behavioural design (Reid & Schmidt, 2018), including sustainability, health, safety, and other application areas (Niedderer et al., 2017). In addition, further work is needed to better understand the development and impact of behavioural design expertise on method use, and how this differs from traditional understandings of design expertise.

Second, our conceptual framework focused on behavioural uncertainty as the major type of uncertainty emerging from the data. However, research on uncertainty outside of the design domain has also highlighted other types that could influence behavioural designers, such as technical and organisational uncertainty related to intervention development (O’Connor & Rice, 2013), or relational uncertainty related to communication with the client (Kreye, 2017). Thus, while we provide several insights into the influence of behavioural uncertainty on method use in behavioural design, further work could expand the scope of this investigation to examine the relevance and potential impact of other types of uncertainty.

Implications

This research forms the first overarching study of method use in behavioural design. We have traced how behavioural uncertainty informs a tension between design and scientific concerns, which in turn influences the selection, adaption, and use of a wide array of methods across the behavioural design process. As such, our work has several implications for both behavioural design theory and practice.

In terms of theory, the identification of behavioural uncertainty is an important contribution to understanding the use of methods in the behavioural design context (Figure 3) and constitutes a first description of this uncertainty type in the design literature. This points to the need to further examine the impact of varying uncertainty types on design work, expanding the scope of prior conceptualisations that treat uncertainty in general. Also, the description of the relationship between behavioural uncertainty, on the one hand, and design and scientific concerns, on the other, extends understanding of the mechanisms driving design processes in this context, complementing prior descriptions of behavioural concerns and of the balance between design- and behavioural-science led framings of behavioural design. Finally, the expanded scope of methods and the tension between design and scientific concerns challenge traditional understanding of designers’ professional identity and expertise, with further work needed to better understand how the behavioural design mindset develops and shapes practice, in contrast to prior work on general design expertise.

In terms of practice, the development of a comprehensive overview of methods as well as the main concerns and uncertainty driving their use in all phases of the behavioural design process (Figure 2, Appendix) provides a foundational library relevant to practitioners, educators, and students working in the behavioural design context. Importantly, we also highlight how these methods go beyond traditional design method repositories, to incorporate many methods from the social and behavioural sciences. In addition, we point to key design and scientific concerns relevant at each stage of the behavioural design process and their connection to specific methods, providing best practice insights for behavioural designers and students. Ultimately, by explaining how these methods are used harmoniously based on the common driver of behavioural uncertainty, we provide concrete guidance on how to move between design- and behavioural-science led framings in order to integrate the best of design and behavioural science methods.

Conclusions

Behavioural design is an important area of research and practice key to addressing behavioural and societal challenges. However, major questions remain as to how design methods are selected, adapted, and used during behavioural design. To take a step toward answering these questions we conducted fifteen interviews with globally recognised experts. Based on these interviews we identify three main contributions. First, we provide an overview of the methods used in all phases of the behavioural design process. Second, we identify behavioural uncertainty as a key driver of method use in behavioural design. Third, we explain how this creates a tension between design and scientific concerns—related to interactions between abductive, inductive, and deductive reasoning—which must be managed across the behavioural design process. We bring these insights together in a basic conceptual framework explaining how and why methods are used in behavioural design. This holds several implications for theory and practice. However, substantial further work is needed to examine how method use and behavioural uncertainty interact in practice, as well as how this relates to critical aspects of designers’ mindset and professional identity development.

Acknowledgments

We thank all the experts that supported us in collecting the data used in this study. We also thank the reviewers for helping develop the final manuscript. Finally, we would like to thank DTU Management for funding this research.

References

- Andreasen, M. M. (2003). Improving design methods usability by a mindset approach. In U. Lindemann (Ed.), Human behaviour in design (pp. 209-218). Springer. https://doi.org/10.1007/978-3-662-07811-2_21

- Andreasen, M. M., Thorp Hansen, C., & Cash, P. (2015). Conceptual design: Interpretations, mindset and models. Springer.

- Bay Brix Nielsen, C. K. E., Cash, P., & Daalhuizen, J. (2018). The behavioural design solution space: Examining the distribution of ideas generated by expert behavioural designers. In Proceedings of the international design conference (pp. 1981-1990). The Design Society. https://doi.org/10.21278/idc.2018.0212

- Bay Brix Nielsen, C. K. E., Daalhuizen, J., & Cash, P. (2021). Defining the behavioural design space. International Journal of Design, 15(1), 1-16.

- Berdichevsky, D., & Neuenschwander, E. (1999). Toward an ethics of persuasive technology. Communications of the ACM, 42(5), 51-58. https://doi.org/10.1145/301353.301410

- Bhamra, T., Lilley, D., & Tang, T. (2011). Design for sustainable behaviour: Using products to change consumer behaviour. The Design Journal, 14(4), 427-445. https://doi.org/10.2752/175630611X13091688930453

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77-101. https://doi.org/10.1191/1478088706qp063oa

- Brown, T. (2008, June). Design thinking. Harvard Business Review, 1-9.

- Bucher, A. (2020). Engaged: Designing for behavior change. Rosenfeld Media.

- Cash, P. (2018). Developing theory-driven design research. Design Studies, 56, 84-119. https://doi.org/10.1016/j.destud.2018.03.002

- Cash, P., Gram Hartlev, C., & Durazo, C. (2017). Behavioural design: A process for integrating behaviour change and design. Design Studies, 48, 96-128. https://doi.org/10.1016/j.destud.2016.10.001

- Cash, P., Isaksson, O., Maier, A., & Summers, J. D. (2022). Sampling in design research: Eight key considerations. Design Studies, 78, Article 101077. https://doi.org/10.1016/j.destud.2021.101077

- Cash, P., Khadilkar, P., Jensen, J., Dusterdich, C., & Mugge, R. (2020). Designing behaviour change: A behavioural problem/solution (BPS) matrix. International Journal of Design, 14(2), 65-83.

- Cash, P., & Kreye, M. E. (2017). Uncertainty driven action (UDA) model: A foundation for unifying perspectives on design activity. Design Science, 3(e26), 1-41. https://doi.org/10.1017/dsj.2017.28