Collaborative 3D Workspace and Interaction Techniques for Synchronous Distributed Product Design Reviews

Department of Industrial Design, KAIST, Daejeon, Republic of Korea

This paper presents a collaborative 3D workspace that makes use of newly developed interaction techniques for synchronous distributed product design reviews. These new techniques were developed to enhance collaboration in regard to shared objects and shared workspaces. The requirements for such collaboration were determined through a literature review and an observational study. An augmented reality based collaborative design workspace was developed in which the interaction techniques, the use of Sync-turntables and Virtual Shadows, were created to support shared manipulation, awareness of the actions of remote participants, and tele-presence. The Sync-turntable is a rotating synchronized table that provides intuitive physical representation of shared virtual 3D models. It also provides a physical cue of the remote participants’ manipulation. Virtual Shadows project body and hand silhouettes of remote participants, which provide natural and continuous awareness of the location, gestures and pointing of collaborators. A lab-based user study was conducted, and the results show that these interaction techniques support awareness of general pointing and facilitate discussion in 3D product design reviews. The proposed workspace and interaction techniques are expected to facilitate more natural communication and to increase the efficiency of collaboration on virtual 3D models between distributed participants (for example, designers working with other designers, engineers, or modelers) in collaborative design environments.

Keywords – Collaborative Design, Shared 3D workspace, Tangible Interaction, Tele-presence, Augmented Reality, Interaction Techniques.

Relevance to Design Practice – Collaboration has become an essential part of the design practice. The collaborative 3D workspace and interaction techniques proposed here support synchronous collaboration in distributed product design reviews. The research provides reflections on how a design researcher can create better collaborative design environments and on what should be considered for the application of the workspace.

Citation: Nam, T., & Sakong, K. (2009). Collaborative 3D workspace and interaction techniques for synchronous distributed product design reviews. International Journal of Design, 3(1), 43-55.

Received April 29, 2008; Accepted December 29, 2008; Published April 15, 2009.

Copyright: © 2009 Nam & Sakong. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: tjnam@kaist.ac.kr.

Introduction

Collaboration has become an essential part of the design process due to increased complexity in design projects and internationalized business environments. There are many cases where professionals from different organizations have to collaborate in order to develop a competitive product in a short period of time at a low cost. Moreover, the advancement in transportation and communication technologies together with the changes in modern lifestyles have made it common for design experts in need of collaboration to be dispersed geographically.

One of the characteristics of collaboration in product design is that the main content usually involves 3D models. As a means of expression for designers to visualize their abstract ideas, 3D models are iteratively used throughout the design process. Moreover, as a communication medium, they enable design managers, clients, users and other stakeholders to participate in the design process. Especially since the introduction of CAD, physical 3D models have been substituted by virtual 3D models that provide accuracy and efficiency (Tovey, 1989). However, compared to other collaborative work, design collaboration centered around a virtual 3D model presents many technological problems, as well as problems of interaction (Harrison & Minneman, 1996).

In general, 3D model data used in product design is large and complex, so there are many difficulties in synchronous collaboration. Furthermore, the existing 2D desktop based CAD interface is problematic in dealing with 3D models in distributed collaborative environments. Nonverbal communication methods such as one’s gaze and gestures have a great effect on tele-collaboration (Vertegaal, 1999; Buxton, 1992; Ishii & Kobayashi, 1998). However, the existing tele-collaboration environments, where the 3D model is presented in a 2D desktop environment, do not allow for this type of communication, thus decreasing efficiency in collaboration. Therefore it is necessary to solve these issues and to investigate a new interaction method for collaborative design using 3D models.

The aim of this research is to investigate ways that would make the collaboration between designers, or designers and expert collaborators of other fields in different locations, more efficient. In particular, the objective is to support synchronous distributed product design reviews by developing a new collaborative workspace with interaction techniques that would allow for a smoother collaboration. It is also intended to evaluate what effects the workspace has on design collaboration. As a research method to achieve these objectives, an observational study on the design collaboration behavior and environment was conducted to identify the requirements for a 3D remote design collaboration environment. Based on this, an augmented reality-based 3D tele-collaboration workspace using Sync-turntables and Virtual Shadows was developed to support awareness of remote users’ actions and contributions. A laboratory based user study was conducted to examine feasibility, as well as to reveal areas for improvement of the proposed workspace and interaction techniques.

Related Works

A collaborative 3D design environment is different from typical collaborative environments in terms of communication (Turoff, 1991; Baeker, 1993), problem solving (Nelson, 1999) and collaborative document production (Leland, Fish, & Kraut, 1988; Baeker, Nastos, Posner, & Mawby, 1993). Because designers have to make, edit, express and review 3D models, a tele-collaboration session for design involving a 3D model presents a lot of challenges in user interface (Nam & Wright, 2001; Pang & Wittenbrink, 1997; Gribnau, 1999). Related works of design collaboration can be classified into visualization-based design systems, where the focus is on reviewing a virtual 3D model (see http://www.sgi.com/products/software/vizserver/), and co-design systems which support real-time modeling and design editing (Gisi & Sacchi, 1994; Kao & Lin, 1996; Nam & Wright, 2001; Li, Lu, Fuh, & Wong, 2005).

One of the technological problems of a distributed 3D design collaboration environment is the data transfer of large 3D models and shared visualization. Therefore, a great amount of research related to design collaboration focuses on technical issues such as effective data transfer (Azernikov & Fischer, 2004), construction of a database for co-management (Qiu, et al., 2004) and visualization of a 3D model that is shared smoothly in real-time (Szykman, Racz, Bochenek, & Sriram, 2000). Interface issues are also considered important in computer supported cooperative work related research. These include usability issues for multiple users (Gutwin & Greenberg, 1998) and support for tele-presence in collaboration (Minsky, 1980; Steuer, 1995).

Research on using new technology such as augmented reality to enable a group of people to review a 3D model together is being carried out as well (Billinghurst & Kato, 1999). With augmented reality, designers can keep the existing workspace and incorporate the added virtual information on top of it. Augmented reality provides the benefit that designers can understand the size, volume and spatiality of the virtual information in the real world. MagicMeeting (Regenbrecht & Wagner, 2002) provides an augmented reality based collaboration environment in which a user can review the 3D model in a 3D environment. This system, however, is limited to supporting collocated collaboration. Tang and others (Tang, Neustaedter, & Greenberg, 2006) introduced mixed presence groupware that allows co-located and distributed teams to work together on a shared 2D visual workspace. To solve presence disparity problems in mixed presence groupware systems, they developed an embodiment technique called VideoArms that captures and reproduces people’s arms as they work over large displays.

Another concern for dispersed collaboration is tele-presence, which is integral to collaboration in that it helps geographically separated members establish a feeling of existence and of shared space. To establish tele-presence, the sharing of contexts (Churchill, Snowdon, Munro, 2001), and a natural synthesis of the work space and communication space (Buxton, 1992; Ishii & Kobayashi, 1998) have been studied. For example, systems such as Hydra (Buxton, 1992) and Clearboard (Ishii & Kobayashi, 1998) were found to support collaboration related to decision making, 2D note taking or sketching. Designer’s Outpost was developed originally as a collaborative web design tool using Post-it notes as a medium (Klemmer, Newman, Farrell, Bilezikjian, & Landay, 2001), but then grew into a remote collaboration system (Everitt, Klemmer, Lee, & Landay, 2003). It provides a shared workspace where a user can manage both real objects and virtual objects at the same time. The presence of participants is expressed in this space with shadows. This research focused on 2D based web design, so there are limits to its extension into collaborative environments using 3D models. It was found, however, that the combined use of both real objects such as Post-it notes and digital images of remote collaborators can be an effective communication tool. In-touch (Brave, Ishii, & Dahley, 1998), a remote communication tool focusing on the conveying and sharing of touch, introduced the importance of sharing real and physical feeling in personal communication. With this system, two remote users can sense each other’s direction, strength, and speed of control. Moreover, through an analog control of force, the user’s intention can be transmitted qualitatively. Such an application of physicality in the control of virtual information could be a useful element, especially in tele-collaboration environments controlling virtual 3D models.

It has been asserted frequently in related research that supporting tele-presence is an important element in tele-collaboration environments. However, challenges and opportunities with distributed synchronous design collaboration environments involving 3D models have not been fully addressed, with research concentrating mostly on solving technological problems. It is clear, though, that there are great possibilities for applying augmented reality and tangible interaction (Ulmer & Ishii, 2000) to tele-collaboration using 3D models.

Observation of Design Collaboration Behavior

In order to understand what was needed to support collaboration involving 3D models between people in different locations, an observation of a design collaboration process was carried out. It was intended to identify at which stage of the design review process collaboration using 3D models is carried out, and what support the collaboration needs at this stage.

Method

The observation was carried out on a project with graduate level design student participants. The goal of their project was to design a new digital camera. Three teams, made up of three participants each, worked on the project. The design teams progressed through three stages of meetings, each a couple of days apart. The first of these meetings was a design brief meeting, followed by a design sketch review meeting, and then ending with a modeling review meeting. The project was conducted so that everyone carried out individual design work based on the content of the discussion from the previous meeting, with the results of this work being reviewed together in the next meeting.

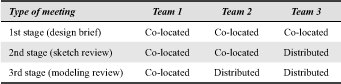

The focus of the observation was on the collaboration that occurred in design review meetings, since design work is often carried out individually and since collaboration occurs more intimately and frequently in these review stages. It was designed so that the three design teams would proceed with the design review meeting in different environments, as shown in Table 1. The intention was to understand the difference in design review stages of the co-located and distributed teams. At the same time, the focus of the observation was on how collaboration behavior changed when the object of collaboration was a 2D sketch and when it was a 3D model. Finally, it was hoped to illuminate what kind of limits and problems arise with remote collaboration. The first meetings were all conducted in co-located settings for introduction and instruction purposes.

Table 1. Meeting environment for each team

In the co-located meetings, the basic work environment consisted of a table with paper, pen, pencils, and reference images. In the distributed meetings, each individual’s computer served as a workspace where Microsoft NetMeeting was used to support video communication, voice sharing, text based chatting, whiteboard, program sharing and desktop sharing.

Analysis Method

Exploratory and qualitative analysis of the meetings was used to understand how the workspace was used depending on the environment (co-located vs. distributed) and on the property of the subject matters (2D or 3D subjects). The patterns of collaborative interactions were also interpreted. For the analysis, the collaborative interactions were classified into subject-centered and interpersonal interactions. Pointing, gesture and eye-contact were considered as interpersonal interactions. Sketching and modeling were considered as subject-centered interactions. The meetings were video recorded and interaction patterns were compared between the settings. After the project, a questionnaire and an interview were carried out to get participant feedback on the perceived importance of interaction types.

Results

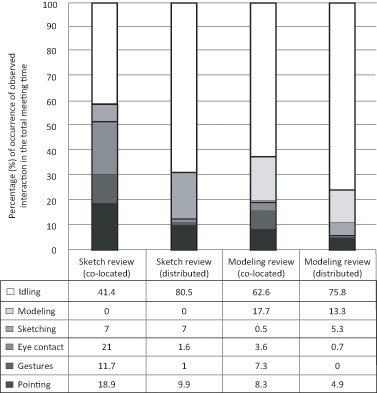

Figure 1 shows the frequency patterns of the different types of collaborative interactions observed in the meetings. In the co-located sketch review meeting, the types of nonverbal communication occurring most were gestures (11.7%), eye contact (21%) and pointing (18.9%). In distributed collaboration, gestures (1%) and eye contact (1.6%) decreased considerably, as did the frequency of pointing (9.9%). The frequency of sketching, however, more than doubled in the distributed environment. These sketches in the distributed environment were used more for pointing at a particular area or marking the direction than for expressing design ideas. The frequency of eye contact also showed a marked decrease in modeling reviews in the distributed environment. Participants were also observed spending some time in the meetings for individual thinking or being idle without collaborative and subject-centered interactions. This idle time can be explained by the difficulties of collaboration in the distributed conditions.

Figure 1. Percentage (%) of occurrence of observed interaction in the total meeting time.
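
To make the size of these drops easier to compare, the reported percentages can be expressed as relative changes. The following is a minimal sketch using only the figures quoted above for the sketch review meetings; the function and variable names are illustrative and not part of the original study.

```python
# Percentages of total meeting time reported for the sketch review meetings.
co_located = {"gestures": 11.7, "eye contact": 21.0, "pointing": 18.9}
distributed = {"gestures": 1.0, "eye contact": 1.6, "pointing": 9.9}


def relative_change(before, after):
    """Change from `before` to `after`, as a percentage of `before`."""
    return (after - before) / before * 100.0


changes = {name: relative_change(co_located[name], distributed[name])
           for name in co_located}

for name, change in changes.items():
    print(f"{name}: {change:.1f}%")
```

Read this way, gestures and eye contact each fell by roughly nine tenths in the distributed setting, while pointing fell by roughly half.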

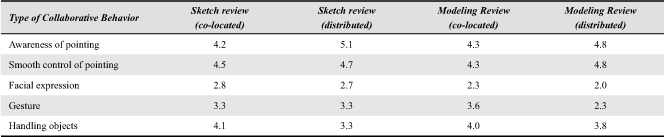

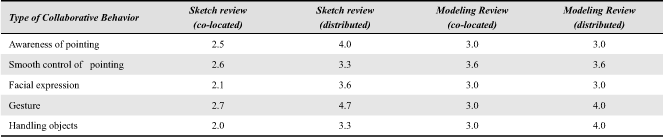

The participants were asked to rate the importance of the collaboration behaviors pointing, facial expression, gestures, and changes made to the shared object on a 5-point scale (Table 2). They were also asked how difficult these collaboration behaviors were during the meetings, also on a 5-point scale (Table 3).

Table 2. Perceived importance of collaborative behaviors (0: low importance; 5: high importance on a 5-point scale)

Table 3. Perceived difficulty of collaborative behaviors (0: low difficulty; 5: high difficulty on a 5-point scale)

In sketch review meetings, the awareness of the partner’s pointing (co-located 4.2, distributed 5.0) and also the smooth control of pointing to the subject (co-located 4.5, distributed 4.7) were evaluated as the most important factors in collaboration. Recognizing others’ facial expressions had the lowest importance in both the co-located setting (2.8) and the distributed one (2.7). In general, the level of collaboration difficulty was much higher in the distributed setting, especially for noticing others’ gestures (co-located 2.7, distributed 4.7).

In modeling review meetings as well, the participants evaluated awareness of others’ pointing (co-located 4.3, distributed 4.8) and smooth sharing of one’s own pointing (co-located 4.3, distributed 4.8) as the most important. As in the sketch reviews, recognizing facial expressions was rated the least important, and its ratings were even lower than in the sketch reviews (co-located 2.3, distributed 2.0). Compared to the sketch review meeting, the handling of shared objects was considered to be more difficult in the modeling review for both co-located and distributed groups. This may be explained by the fact that the whiteboard function of the NetMeeting software worked well for sketching together, whereas sharing and manipulating a 3D model together in CAD was not smooth because of long delays in the shared visualization.

Interpretation of the Results

As stated, the goals of this observation were to find how best to support collaboration amongst distributed participants. Our findings can be organized into the areas which follow.

Shared Manipulation of the Actual Subject of Collaboration is Essential

As understood from the experimental results, the participants mentioned pointing and control of the shared object as the most important elements in collaboration, whereas the importance of facial expression recognition was valued low. In the distributed meetings, the participants mainly focused on the subject of design collaboration. In doing so, the use of the webcam screen for communication was rare. This suggests that while video conferencing is the main means of collaboration for decision making in distributed collaboration, sharing the actual object of collaboration itself while providing information directly related to pointing at the object or gestures around it is considered to be more important in design collaboration.

Importance of Sharing of 3D Models

The time delays in the display of images of the 3D model, as well as the unclear images being displayed, caused problems for collaboration in the distributed setting, and were pointed out as the main reasons behind communication disturbances. The participants also commented that this inconvenience sometimes caused unexpected results after the session. For example, one participant in the distributed setting reported that the color of the model was different from what they discussed during the session. A smooth discussion was generally deemed to be difficult because the object was not shared fully.

Limited Computer Screen Resource and Awareness of Others

When the modeling review took place under the distributed setting, the participants used several software applications concurrently. It was frequently observed that the participants lost track of what the others were controlling while moving and rearranging the different windows on their screen (Figure 2). Due to the limited screen resources of the desktop, it was difficult for the participants to be aware of what other participants were doing with the shared software tools. Therefore, it is important to provide awareness of others in individual workspaces. Further, in 3D model review meetings, it is important to support workspaces in which participants can organize individual tasks, shared tasks and other information for collaboration.

Figure 2. Confined work space in which it is difficult to understand the required information.

Supporting Tangibility and Sense of Real Scale of the 3D Model

There were situations in the co-located settings where objects in the real world were used as a quick reference for collaboration that then became a means of explaining ideas concerning the 3D models. For example, the participants used a kettle shaped object next to the designed model to estimate its real volume and size. Tangibility and a sense of real scale were provided with the model, but this was not possible in the distributed setting. When confirming the final design in the modeling review meeting, there was a discussion on the real size of the model. In the distributed setting, this collaboration was limited to within the desktop and the shared whiteboard. This demonstrates that a smooth transition between the real world and the digital world is required for the distributed setting.

Augmented Reality Based Collaborative 3D Design Workspace

Requirements

In creating a new augmented reality based 3D workspace to account for these findings, the following requirements were identified from the observational study:

- Shared object: The 3D model which is the object of collaboration needs to be shared among all participants in real-time. Control interface with the 3D model should be natural. The time delay of shared control should be minimized.

- Workspace: The collaboration space needs to support the sense of tangibility and scalability so that one can understand the real size and volume of the virtual model. This calls for a seamless connection between the real physical environment and the virtual model.

- Awareness: It is necessary for the participants of the collaboration to be continuously aware of where other participants are and what they are controlling. In particular, information directly related to the shared object, such as where participants are pointing, spatial information, and gestures explaining the workings of the shared object, needs to be provided naturally without interfering with the main task at hand.

Concept

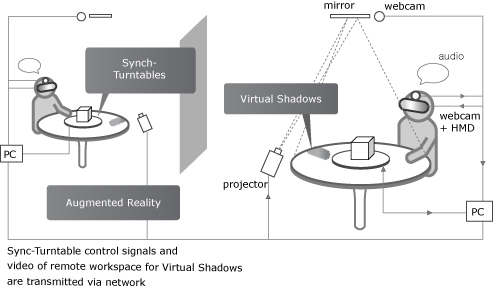

To meet these requirements, an augmented reality based collaborative design workspace was developed using two new interaction techniques. These techniques, using Sync-turntables and Virtual Shadows, were incorporated to support awareness of remote participants, shared manipulation and tele-presence. Sync-turntables, rotating and synchronized turntables, provide intuitive physical representation of shared virtual 3D models. They also provide a physical cue of the remote participants’ manipulation. Virtual Shadows, which are projected body and arm silhouettes of remote participants, provide natural and continuous awareness of the location, gestures and pointing of collaborators.

The workspace supports the reviewing stage and the discussion of points of modification to a virtual 3D model between geographically distributed designers participating in product design review meetings. Notably, it enhances a distant collaborator’s sense of physicality. Further, it facilitates an understanding of the real volume of the 3D model while reviewing and also provides continuous awareness of participants’ activities.

Augmented reality technology has been used to make it easy to interpret the real size and volume of a virtual 3D model (Billinghurst & Kato, 1999). A user wearing a visualizing head mounted display (HMD) is given a view that combines the real world with the virtual 3D model, making it seem like the user is controlling the 3D model in real life. It is possible for the user to naturally perceive its size and volume in the real world.

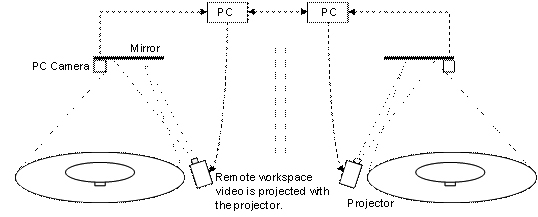

The augmented reality based collaboration 3D design workspace is configured as shown in Figure 3. The users collaborate sitting around a round table outfitted with a Sync-turntable and Virtual Shadows, which enables physical control of the virtual object and increases its tangibility. The round table environment was chosen because it is common to sit around a round table to collaborate effectively.

Figure 3. Tele-collaboration 3D design workspace summary.

The Sync-turntable control signals and the video of the remote workspace for Virtual Shadows are transmitted via the network.

Sync-turntable: Physically Synchronized Rotating Turntable

As a means of controlling and sharing the virtual 3D model in the new tele-collaboration environment, the Sync-turntables were applied. They use a simple rotating interface which allows an intuitive inspection of the virtual 3D model in all directions, and gives physical feedback similar to controlling the virtual model itself. The collaborating users’ turntables are connected through a network, and the movements are synchronized in real-time. The control of the rotation is open to all users. Users wearing an HMD can see the 3D model turn with the turntable as if it were actually placed on the turntable (Figure 4). Simultaneous connection of the turntables visualizes the distant collaborator’s control actions, enhancing the sense of existence of that collaborator. The application of this physical medium, the turntable, smoothly incorporates the virtual 3D object into the real world. It enhances the user’s sense of tangibility by vesting a physical property to the control of a virtual object.

Figure 4. User with an augmented reality video see-through HMD looking at the virtual model on the turntable (left); view displayed to the user through the augmented reality video see-through HMD (right).

Virtual Shadows: Projected Body Silhouette of Remote Collaborators

As discussed earlier in our observational study, continuous awareness of a collaborator’s position and gestures in a tele-collaboration workspace is very important. Other researchers have reported on the importance of non-verbal communication methods such as gaze (Vertegaal, 1999) and gesture (Ishii, Kobayashi, & Grudin, 1995) in tele-collaboration. Tang and others (2006) suggested that the embodiments in a tele-collaboration environment should visually portray people’s interaction with the work surface using direct input mechanisms. They noted the importance of the fine-grain movement of hand gestures and a collaborator’s positional relationship with the workspace. This awareness of remote collaborators is given through the use of Virtual Shadows.

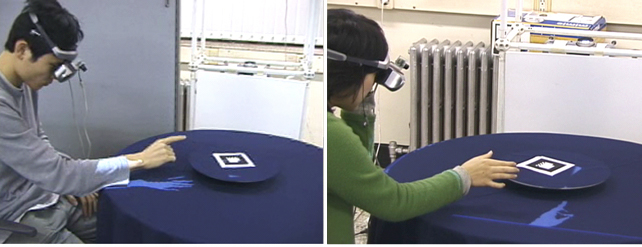

When people are in a meeting together, gestures or acts such as leaning against the table can be observed frequently. The proposed technique uses the silhouettes of the participants on the table (mostly of hands and arms) to create a Virtual Shadow (Figure 5). With Virtual Shadows, users can easily be aware of where their collaborators are in the collaboration environment, and the actions and gestures of pointing at a part of the virtual 3D model and explaining the workings of the objects can be expressed naturally. This makes unspoken and continuous awareness of others’ states possible without disturbing the main tasks. Just as people can understand each other’s thoughts more clearly with gestures, Virtual Shadows can complement verbal communication in a tele-collaboration situation.

Figure 5. Virtual Shadow visualized in a collaboration environment.

In an augmented reality environment, there can be many methods to support awareness of remote participants with visual representations such as full live video streams and virtual avatars. These methods require accurate tracking equipment such as data gloves and optical markers that users should put on. Moreover, registration of the visual representation of the remote users on the augmented reality view requires heavy computational loads for processing and data transfer. Although Virtual Shadows are limited in expressing spatial gesture information accurately in that they only show two dimensions traced on the table surface, they do address the tracking and registration problems.

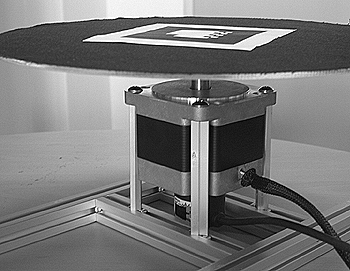

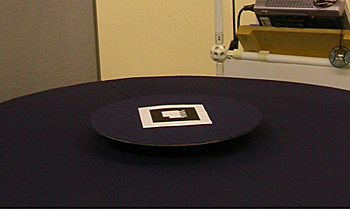

Prototype Implementation

To synchronize the movement of the Sync-turntables in distributed locations, stepper motors with two-way encoders were developed (Figure 6). The installation involved placing the motors underneath the round table, while the height of the turntable was aligned with the table surface (Figure 7). By doing so, the degree of rotation can be detected through the input encoder which converts the rotation angle into a pulse signal. That signal is then transmitted to the motor at a remote location. The output encoder on the other end reads this signal and generates the same pulse to turn the motor by the same amount. The prototype was tested with two motors connected with a cable. To apply to long distance distributed collaboration environments, the connection between encoders of the motors should be made through the network in real-time.
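
The pulse-based synchronization described above can be illustrated with a short simulation. This is a minimal sketch under stated assumptions, not the prototype's firmware: the encoder resolution (`PULSES_PER_REV`), the `Turntable` class and the `sync` helper are all hypothetical, and the cable or network link is reduced to a direct function call.

```python
from dataclasses import dataclass

# Hypothetical resolution: the paper does not state the encoder's pulses per revolution.
PULSES_PER_REV = 400


@dataclass
class Turntable:
    """One Sync-turntable, tracked as a signed pulse count."""
    pulses: int = 0

    def rotate_by_hand(self, degrees: float) -> int:
        """Input encoder: convert a local rotation into a signed pulse count."""
        delta = round(degrees * PULSES_PER_REV / 360.0)
        self.pulses += delta
        return delta

    def apply_remote(self, delta: int) -> None:
        """Output side: step the motor by the same number of pulses."""
        self.pulses += delta

    @property
    def angle(self) -> float:
        """Current angle in degrees, wrapped to [0, 360)."""
        return (self.pulses % PULSES_PER_REV) * 360.0 / PULSES_PER_REV


def sync(source: Turntable, target: Turntable, degrees: float) -> None:
    """Rotate `source` locally and replay the resulting pulses on `target`."""
    target.apply_remote(source.rotate_by_hand(degrees))


local, remote = Turntable(), Turntable()
sync(local, remote, 90.0)    # the local user turns the table
sync(remote, local, -45.0)   # the remote user turns it back; control is open to all
```

Because only pulse counts are exchanged, both turntables end at the same angle regardless of which side initiated the rotation. A real deployment would also need to handle simultaneous input from both sides and network delay, which this sketch ignores.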

Figure 6. Turntable with encoder and stepping motor attached.

Figure 7. The Sync-Turntable and the table surface were aligned.

To visualize the Virtual Shadow, real-time image processing techniques were used. Using a digital camera attached above the table, the image of the area surrounding the turntable was continuously captured. This image was converted into the form of a shadow, and then it was projected onto the other user’s table. The image captured from the camera was first converted into a black and white image to reduce visual noise (Figure 8). Adjusting the brightness and the contrast, the user’s arm posture was extracted. To reduce visual noise, a dark blue cover was used on the table. The software was developed in Visual C++. Because the Virtual Shadow is a form of digital information, it can be easily transformed into different forms of information other than the provided monochromatic shadow. For multiple participants, colors could be added to show user identity of the Virtual Shadows.
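
The extraction steps described above (grayscale conversion, brightness and contrast adjustment, and separation of the arm from the dark table cover) can be sketched as follows. This is an illustration in Python rather than the Visual C++ used for the prototype, and the contrast, brightness and threshold values, as well as the sample colours, are assumptions chosen for the example, since the paper does not report them.

```python
# Hypothetical parameters: the paper does not report its contrast or threshold settings.
CONTRAST = 1.5
BRIGHTNESS = -20.0
THRESHOLD = 128.0


def to_gray(pixel):
    """Luminance of an (R, G, B) pixel using the common Rec. 601 weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def adjust(value):
    """Apply contrast and brightness, clamped to the 0-255 range."""
    return max(0.0, min(255.0, CONTRAST * value + BRIGHTNESS))


def extract_shadow(frame):
    """Return a mask with 1 where the arm is and 0 where the table cover is.

    Assumes the arm appears brighter than the dark blue cover.
    """
    return [[1 if adjust(to_gray(p)) > THRESHOLD else 0 for p in row]
            for row in frame]


COVER = (20, 30, 90)     # dark blue table cover (illustrative colour)
SKIN = (210, 170, 140)   # arm pixel (illustrative colour)

frame = [
    [COVER, COVER, COVER, COVER],
    [COVER, SKIN, SKIN, COVER],
    [COVER, COVER, SKIN, COVER],
]
mask = extract_shadow(frame)
```

The resulting mask is what would be rendered as a dark silhouette and projected onto the remote table. The dark cover matters here because it keeps the background well below the threshold, so a single global cut-off is enough.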

Figure 8. Image captured to extract Virtual Shadow (left) and processed shadow image (right).

The processed image of the Virtual Shadow is transmitted through the computer network to be projected onto the other participant’s table. Figure 9 shows the structure of the system for visualizing Virtual Shadows. A mirror was installed to reflect the projected image to achieve the greatest projection area possible and to minimize the space needed to install the camera and the projector.

Figure 9. Structure of the prototype for the visualization of Virtual Shadows.

To implement the augmented reality system where the virtual 3D model is mixed with the image of the real world, DART (the Designer’s Augmented Reality Toolkit) was used. Running on Macromedia Director, it is a set of software tools that supports rapid design and implementation of augmented reality experiences and applications (MacIntyre, Gandy, Bolter, Dow, & Hannigan, 2003). ARToolkit type markers (Billinghurst & Kato, 1999) were created and placed on top of the turntable. The digital graphic of the virtual 3D model was accurately overlaid onto the user’s view of the marker in the physical world. Users could see this synthesized video stream through an HMD. By using the HMD with a camera attached, it was possible to see a video that accurately represented both the physical and virtual realities. By placing a marker with the same 3D model assigned for all the users at remote places, it was possible to let them see the same view. The process of recognizing the marker and placing the 3D model on the screen happens in the system of each user, so the amount of data that needs to be transferred through the network to synchronize the image of the 3D model is minimized. While further optimizations of the network connection and image processing in the prototype system were required for higher performance, the intention of this research was to test and illustrate our concepts rather than to produce a production-level implementation.
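
The idea that each site places the model locally from its own marker can be sketched as below. This is an illustration only and does not use DART or ARToolkit: the marker table `MODELS`, the function `place_model` and the three-vertex model are hypothetical, and marker tracking is reduced to a single rotation angle about the vertical axis.

```python
import math

# Hypothetical marker-to-model table; every site holds its own copy of the model data.
MODELS = {"marker_A": [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 1.0, 0.0)]}


def place_model(marker_id, marker_angle_deg):
    """Place the model assigned to a marker, rotated about the vertical (y) axis
    by the angle at which the local tracker sees the marker."""
    a = math.radians(marker_angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in MODELS[marker_id]]


# Each site tracks its own marker; the synchronized turntables keep the angles equal,
# so no model geometry or rendered image has to cross the network.
site_a = place_model("marker_A", 90.0)
site_b = place_model("marker_A", 90.0)
```

Under these assumptions both sites arrive at identical geometry without exchanging any model data, which is the property the paragraph above relies on to keep network traffic small.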

User Study

A laboratory based user study was carried out to examine efficiency, effectiveness and user acceptance of the distributed collaborative 3D design workspace and interaction techniques. It was also intended to identify the impact and problems of applying the new workspace and techniques to collaborative product design review meetings with 3D models.

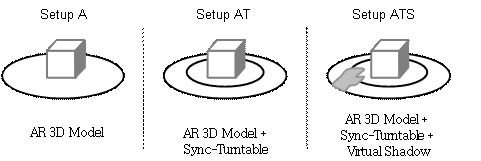

Method

For the experiment, two round tables equipped with Sync-turntables and Virtual Shadows were set up in a simulated distributed setting. The two workspaces were in the same room but divided by a wall, which allowed the participants to communicate with each other verbally (see Figure 3 and Figure 9). Three conditions that differed in the technology used were tested, as shown in Figure 10. The 3D models used in each condition were all of the same complexity to avoid a learning effect.

Figure 10. Three experimental setups for the user study.

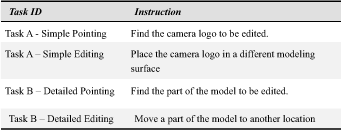

The tasks assigned to the distributed participants were chosen to involve the review and discussion of a virtual 3D model (Table 4). Task A was a simple task of first locating and pointing at a label, and then relocating it. Task B was a detailed and complicated task of locating and pointing at a component of the model, and then modifying it. One participant in each team was asked to give instructions to the other. The instructions consisted of both pointing at particular areas and editing in terms of location or size. Both the pointing and the actual completion of the tasks were observed.

Table 4. Task instruction and features

In a within-subjects study, six paired teams of graduate student designers participated in the testing. The order of the tasks and the conditions was alternated. Before the testing, the participants completed a questionnaire and user profile. A simple pre-experiment exercise was given so that they could become familiar with the augmented reality setting and the HMD. The actual tasks were followed by a post questionnaire and an interview on user satisfaction and acceptability of the system.

Effectiveness and efficiency were measured with respect to how accurate and fast the collaboration was. The independent variables of the experiments were the different types of tasks, the complexity of the tasks, and the presence of interaction technology provided in the settings. The dependent variables were the time taken to complete the task and the level of accuracy. The level of accuracy for pointing and editing requests in the collaborative workspace was measured on a 3-point scale by evaluating the difference between the completed 3D model of the remote partner and that of the reference model used by the other partner. Users’ subjective feedback on the preference of workspace and interaction techniques was also measured through the post questionnaire and interview. The entire meeting sessions were video recorded for exploratory qualitative analysis.
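The two dependent variables described above can be summarized per setting and task with a simple aggregation. The sketch below is illustrative only: the `Trial` record, its field names, the coding of the 3-point scale as 1 to 3, and the sample values in the usage lines are hypothetical placeholders and are not data from the study.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Tuple


@dataclass
class Trial:
    setting: str    # label of the experimental setting, e.g. "ATS"
    task: str       # e.g. "pointing" or "editing"
    seconds: float  # time taken to complete the task
    accuracy: int   # rating on the 3-point scale, coded 1-3 (assumed coding)


def summarize(trials: List[Trial]) -> Dict[Tuple[str, str], Tuple[float, float]]:
    """Return {(setting, task): (mean seconds, mean accuracy)}."""
    groups: Dict[Tuple[str, str], List[Trial]] = defaultdict(list)
    for t in trials:
        if not 1 <= t.accuracy <= 3:
            raise ValueError(f"accuracy must be 1-3, got {t.accuracy}")
        groups[(t.setting, t.task)].append(t)
    return {
        key: (mean(t.seconds for t in ts), mean(t.accuracy for t in ts))
        for key, ts in groups.items()
    }


# Placeholder values for illustration only; not measurements from the study.
example = [
    Trial("ATS", "pointing", 80.0, 3),
    Trial("ATS", "pointing", 90.0, 2),
    Trial("A", "pointing", 100.0, 2),
]
summary = summarize(example)
```

Grouping by both setting and task mirrors the way the results are reported, with time and accuracy shown separately for pointing and editing.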

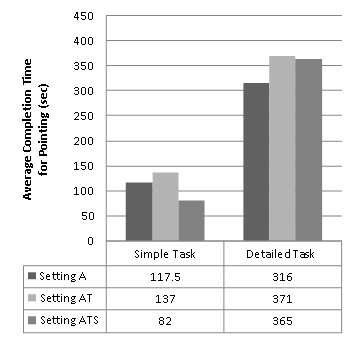

Results

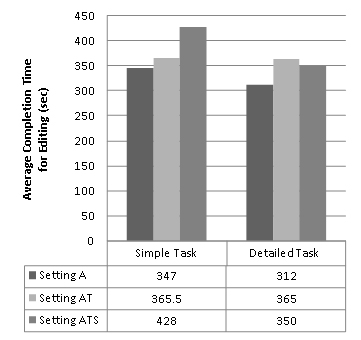

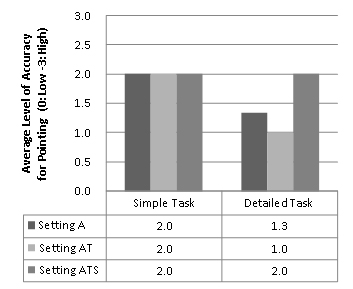

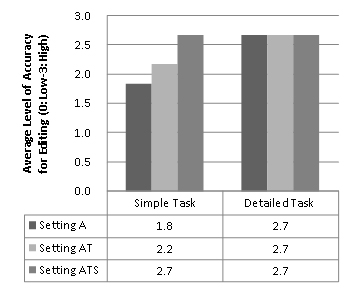

Figure 11 and Figure 12 show the time taken for the two tasks in the three different settings. There was no significant difference in completion time between the settings, while task types did produce significant differences. For the simple pointing task, the completion time was shortest in the ATS setting (82 seconds). For the detailed tasks, the completion time was shortest in setting A (316 seconds). This suggests that Virtual Shadows were effectively used for the simple pointing task. The complex task, however, required more accuracy, so participants tended to communicate verbally, and Virtual Shadows seemed to provide the participants with more information to discuss. As a result, the completion time was longer in the ATS setting.

Figure 11. Time taken for pointing (sec).

Figure 12. Time taken in editing (sec).

For the editing task, the completion time was, somewhat surprisingly, slightly longer regardless of the task complexity when the interaction techniques were provided (Figure 12). It was noticed, however, that the participants tended to explain the task more when the interaction techniques were provided. Also, because Virtual Shadows only provide 2D information, more verbal explanation had to be added in most cases. This indicates that the interaction techniques may not provide a direct benefit to the completion time of detailed 3D modeling tasks.

In most conditions, the settings with the interaction techniques showed the highest accuracy. The accuracy of the pointing task is shown in Figure 13. The ATS setting achieved the highest accuracy for detailed pointing, where Virtual Shadows were frequently used to show hand gestures and fingers as in face-to-face meetings. There was also a difference between the settings on the simple editing task, as shown in Figure 14. In particular, in the settings without the interaction techniques, participants had difficulty describing exact locations with verbal communication alone. Virtual Shadows reduced this difficulty in the simple editing task. For the detailed editing task, the participants tended to communicate verbally and did not rely on gesturing and pointing. As a result, the direct impact of the interaction techniques was not visible.

Figure 13. Accuracy of pointing task.

Figure 14. Accuracy of editing task.

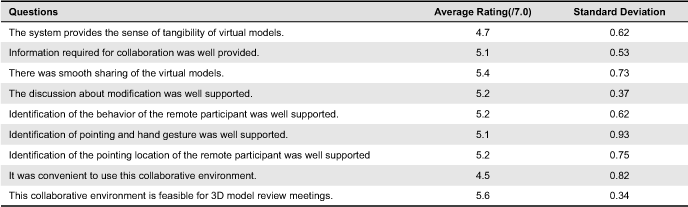

Participants rated their satisfaction with the Sync-turntable and Virtual Shadows on a 7-point scale. The nine questions were derived from the identified requirements, usability, and feasibility of the system (Table 5). Ratings were highly positive on most questions, in particular on those regarding the feasibility of the system in design review meetings (5.6) and virtual object sharing (5.4). Relatively low ratings were reported on the sense of physical presence (4.7) and the convenience of the system (4.5). A high standard deviation was found for gesture recognition (0.93).

Table 5. Ratings of user satisfaction about the experience of the Sync-turntable and Virtual Shadows

Discussion

The setting with the Sync-turntable and Virtual Shadows showed the highest usability for simple pointing tasks in terms of speed and accuracy. This suggests that the proposed interaction techniques are effective for reviewing or discussing general matters when editing a 3D model. In particular, collaborative review tasks were smoother when verbal communication was accompanied by a Sync-turntable. The techniques can also enrich synchronous distributed product design reviews with collaborative 3D tasks involving simple pointing and editing. Users responded that the new interaction methods had a positive effect on remote collaborative review of a 3D model (5.6/7.0 points). The methods were especially effective for pointing to a general part of the model. However, they had difficulty supporting precise editing tasks such as adjusting the length or position of a component of the 3D model. It is suspected that user interface problems during collaboration were compounded by the general 3D interface issues that affect detailed editing tasks in design reviews.

The proposed interaction techniques can be considered as a method to support workspace awareness (Gutwin & Greenberg, 1998). It can be concluded from the questionnaire that the Sync-turntable and Virtual Shadows contributed to enhancing the sharing of control and the feeling of the other’s presence. Awareness of pointing and gesture is mainly supported by Virtual Shadows, while presence awareness is supported by a Sync-turntable. These two awareness elements are complementary in that showing the position of the remote partner relative to the 3D model improves the awareness of presence as well as of pointing. The movement of hands can not only indicate a part of the model, but can also deliver information about what the partner is doing. When the physical cue from the Sync-turntable and the visual lexical information from Virtual Shadows are used together in synchronous distributed 3D workspaces, overall awareness can be enhanced. The accuracy in task completion was highest in the ATS condition (Figures 13 and 14). On the other hand, the task completion time varied in the ATS condition (Figures 11 and 12). More active communication was observed for the editing task in the ATS setting as the difficulty of the tasks increased, which indicates that workspaces supported by Virtual Shadows and a Sync-turntable trigger more active collaboration.

Sync-turntables and Virtual Shadows enhanced the sense of tangibility by providing physical cues of the other participant’s actions, thus promoting collaboration. The participants reported that full appreciation of the tangibility of the 3D model was limited (4.7/7.0 points), but gave positive feedback on the way the system enables users to handle the model in a more realistic environment than a 2D desktop environment. In the interview after the experiment, the users mentioned that the synchronized physical movement of the Sync-turntable gave them a strong impression that the model was being shared with the other participants. They also added that the additional locating and pointing information available through Virtual Shadows was useful in sharing each other’s work.

The results of the experiment show that pointing during simple tasks took the shortest amount of time in an environment using both Sync-turntables and Virtual Shadows, although the difference in completion time between the settings was not significant. In the same environment, the editing task took longer but showed increased accuracy. There was also user feedback that the simple rotating interface of the Sync-turntable was more convenient and intuitive for reviewing a model while rotating it, compared to the existing desktop-based modeling tools, where one has to use buttons and a mouse in a complex manner to do the same task. It was also mentioned that this collaboration environment removed the trouble of navigating windows in desktop environments when chatting and using a modeling tool at the same time in tele-collaboration.

It was observed that participants had a tendency to sit in the same location at their respective tables to share the same view. As can be seen in Figure 15, the Virtual Shadow stretched out from where the users were sitting as they gradually got closer to the same location. This was different from our initial expectation that the participants would sit around the round table, as in a co-located meeting. This suggests that the distributed collaboration environment can be reconstructed in a new way that is different from a co-located situation.

Figure 15. Two participants discussing the model in the same absolute location.

Areas of improvement for the proposed techniques were also revealed by the study. For instance, the participants tried to use Virtual Shadows to point at a specific location on the model, but because the shadows only provide 2D information, they were limited in pointing out vertical locations. In the post-experiment interview, the participants mentioned the need for an additional interface tool for this, such as a shared virtual 3D pointer. Introducing such 3D pointers, however, may bring the technical and user interface problems that commonly accompany them. The main technical problems of other virtual reality representations include issues of tracking the human body, realistic real-time display, registration of the real video display with a 3D pointer, and occlusion. Solutions to these technical problems need to be carefully considered. Another problem pointed out was that when multiple users tried to control the turntable, there was a conflict over authority of control. To resolve this problem, it is necessary to provide information as to which of the participants is in control of the turntable at a given moment.
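One possible way to expose who is controlling the turntable is a single control token that a participant must hold before rotation commands are accepted, and that lapses after a short period of inactivity. The sketch below is a hypothetical illustration of that idea and is not part of the prototype; the class `TurntableControl`, the idle timeout, and the participant identifiers are assumptions.

```python
from typing import Optional


class TurntableControl:
    """Tracks which participant currently controls the shared turntable."""

    def __init__(self, idle_timeout: float = 2.0) -> None:
        self.idle_timeout = idle_timeout  # seconds of inactivity before release (assumed)
        self.holder: Optional[str] = None
        self.last_active = 0.0

    def request(self, participant: str, now: float) -> bool:
        """Grant control if the table is free, idle, or already held by the caller."""
        expired = (
            self.holder is not None
            and now - self.last_active >= self.idle_timeout
        )
        if self.holder is None or expired or self.holder == participant:
            self.holder = participant
            self.last_active = now
            return True
        return False

    def current_holder(self, now: float) -> Optional[str]:
        """Return the controlling participant, or None if control has lapsed."""
        if self.holder is not None and now - self.last_active >= self.idle_timeout:
            self.holder = None
        return self.holder
```

The value returned by `current_holder` could be displayed at each site, for example as a label or colour cue next to the turntable, so that both participants can see who is rotating the model at a given moment.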

The sense of volume of the 3D model could be felt through augmented reality, and the virtual model could be controlled with the turntable. Despite this, limits in the interface still exist because it was not possible to control the model directly. For instance, participants looking at the augmented view reported that they sometimes experienced confusion as to whether the other participants were looking at the same side. This is probably due to wearing an HMD while looking at a 3D model, which does not provide a very realistic scene and makes users feel that they are looking at the screen of a program. It is possible to know the position of the other participant with Virtual Shadows, but only when the user puts his or her hand on the table to provide a marker of their location at the other participant’s workspace. This could be considered as an alternative way to solve this problem while optimizing tracking and visual representation in the augmented reality setting.

Conclusion

This study investigates a way of supporting geographically distributed designers, or experts of other professions in need of collaboration with designers, to collaborate more effectively. Requirements for a collaborative 3D workspace were derived from a literature review in related areas and from an observational study on design collaboration behavior. A collaborative 3D workspace and interaction techniques were developed to enable designers to collaborate smoothly using nonverbal communication methods, and to let them be aware of each other’s presence while working on a 3D model. Increasing tangibility and physical control in 3D tele-collaboration environments was the main focus. These interaction methods were tested for their effect on design collaboration, especially for tasks related to design review. The results indicate that the interaction techniques enriched synchronous distributed product design reviews.

Using augmented reality technology made it possible to control a virtual 3D model in a 3D space. Through the use of a Sync-turntable, a more intuitive and physical control of the virtual model became possible. The simultaneous use of the Sync-turntables and Virtual Shadows was proposed as a method of increasing awareness of a remote user’s presence. It is expected that the proposed interaction techniques and workspace can facilitate smooth collaboration between professionals (designer-designer, designer-engineer, designer-modeler, etc.) who use 3D models.

For more natural collaboration in a synchronous distributed shared 3D workspace, further research on workspace awareness and shared control schemes should be conducted. An efficient tracking method for users of the shared object in 3D space is required to improve the system. The user testing took place in a laboratory with a small number of teams, as the aim of this study was to examine the general impact of the proposed concept. Further exploration should be done to accurately measure the impact of the interaction techniques and to investigate more specific issues, such as the impact of time delay and the sense of tangibility.

Acknowledgements

This work was supported by the Korea Research Foundation Grant funded by the Korean Government (MOEHRD) (R08-2003-000-10179-0).

References

- Azernikov, F. (2004). Efficient surface reconstruction method for distributed CAD. Computer-Aided Design, 36(9), 799-808.

- Baecker, R. M., Nastos, D., Posner, I., & Mawby, K. (1993). The user-centered iterative design of collaborative writing software. In Proceedings of the INTERCHI ‘93 Conference on Human Factors in Computing Systems (pp. 399-405). Amsterdam, The Netherlands: IOS Press.

- Baecker, R. M. (Ed.). (1993). Readings in groupware and computer supported cooperative work: Assisting human-human collaboration. San Mateo, CA: Morgan Kaufmann Publishers.

- Billinghurst, M., & Kato, H. (1999). Collaborative mixed reality. In Proceedings of the 1st International Symposium on Mixed Reality (pp. 261-284). Berlin: Springer Verlag.

- Bly, S. A. (1988). A use of drawing surfaces in different collaborative settings. In Proceedings of the 1988 ACM Conference on Computer-Supported Cooperative Work (pp. 250-256). New York: ACM Press.

- Brave, S., Ishii, H., & Dahley, A. (1998). Tangible interfaces for remote collaboration and communication. In Proceedings of the 1998 ACM Conference on Computer Supported Cooperative Work (pp. 169-178). New York: ACM Press.

- Buxton, W. (1992). Telepresence: Integrating shared task and person spaces. In Proceedings of the Conference on Graphics Interface (pp. 123-129). San Francisco: Morgan Kaufmann Publishers.

- Churchill, E. F., Snowdon, D. N., & Munro, A. J. (2001). Collaborative virtual environments: Digital places and spaces for interaction. London: Springer.

- Everitt, K. M., Klemmer, S. R., Lee, R., & Landay, J. A. (2003). Two worlds apart: Bridging the gap between physical and virtual media for distributed design. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 553-560). New York: ACM Press.

- Gisi, M. A., & Sacchi, C. (1994). Co-CAD: A collaborative mechanical CAD system. Presence, 3(4), 341-350.

- Gribnau, M. W. (1999). Two-handed interaction in computer supported 3D conceptual modelling. Unpublished doctoral dissertation, Delft University of Technology, Delft, The Netherlands. Retrieved June 15, 2008, from http://www2.io.tudelft.nl/id-studiolab/gribnau/thesis.html

- Gutwin, C., & Greenberg, S. (1998). Effects of awareness support on groupware usability. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 511-518). New York: ACM Press.

- Harrison, S., & Minneman, S. (1996). A bike in hand: A study of 3D objects in design. In N. Cross, H. Christiaans, & K. Dorst (Eds.), Analysing design activity (pp. 417-436). New York: Wiley.

- Ishii, H., & Kobayashi, M. (1998). Integration of interpersonal space and shared workspace: ClearBoard design and experiments. ACM Transactions on Information Systems, 11(4), 349-375.

- Ishii, H., Kobayashi, M., & Grudin, J. (1995). Integration of interpersonal space and shared workspace: ClearBoard design and experiments. In S. Greenberg, S. Hayne, & R. Rada (Eds.), Groupware for real-time drawing: A designer’s guide (pp. 96-125). New York: McGraw-Hill.

- Kao, Y. C., & Lin, G. C. (1996). CAD/CAM collaboration and remote machining. Computer Integrated Manufacturing Systems, 9(3), 149-160.

- Klemmer, S. R., Newman, M. W., Farrell, R., Bilezikjian, M., & Landay, J. A. (2001). The designers’ outpost: A tangible interface for collaborative web site. In Proceedings of the 14th Annual ACM Symposium on User Interface Software and Technology (pp. 1-10). New York: ACM Press.

- Leland, M. D. P., Fish, R. S., & Kraut, R. E. (1988). Collaborative document production using quilt. In Proceedings of the 1988 ACM Conference on Computer-Supported Cooperative Work (pp. 206-215). New York: ACM Press.

- Li, W. D., Lu, W. F., Fuh, J. Y. H., & Wong, Y. S. (2005). Collaborative computer-aided design-research and development status. Computer-Aided Design, 37(9), 931-940.

- MacIntyre, B., Gandy, M., Bolter, J., Dow, S., & Hannigan, B. (2003). DART: The designer’s augmented reality toolkit. In Proceedings of the 2nd IEEE/ACM International Symposium on Mixed and Augmented Reality (pp. 329-339). Washington, DC: IEEE Computer Society.

- Minsky, M. (1980, May). Telepresence. Omni Magazine, 2(9), 45-51.

- Nam, T., & Wright, D. (2001). Syco3D: A real-time collaborative 3D CAD system. Design Studies, 22(6), 557-682.

- Nelson, L. M. (1999). Collaborative problem solving. In C. M. Reigeluth (Ed.), Instructional-design theories and models: A new paradigm of instructional theory (Vol. 2, pp. 241-267). Mahwah, NJ: Erlbaum.

- Pang, A., & Wittenbrink, C. M. (1997). Collaborative 3D visualization with cspray. IEEE Computer Graphics and Applications, 17(2), 32-41.

- Qiu, Z. M., Wong, Y., Fuh, J., Chen, Y., Zhou, Z., Li, W. D., & Lu, Y. (2004). Geometric model simplification for distributed CAD. Computer-Aided Design, 36(9), 809-819.

- Regenbrecht, H. T., & Wagner, M. T. (2002). Interaction in a collaborative augmented reality environment. In CHI ‘02 Extended Abstracts on Human Factors in Computing Systems (pp. 504-505). New York: ACM Press.

- Steuer, J. (1995). Defining virtual reality: Dimensions determining telepresence. In F. Biocca & M. R. Levy (Eds.), Communication in the age of virtual reality (pp. 33-56). Hillsdale, NJ: Lawrence Erlbaum.

- Szykman, S., Racz, J. W., Bochenek, C., & Sriram, R. D. (2000). A web-based system for design artifact modeling. Design Studies, 21(2), 145-165.

- Tang, J. C., & Leifer, L. J. (1998). A framework for understanding the workspace activity of design teams. In Proceedings of the 1988 ACM Conference on Computer-Supported Cooperative Work (pp. 244-249). New York: ACM Press.

- Tang, A., Neustaedter, C., & Greenberg, S. (2006). Videoarms: Embodiments for mixed presence groupware. In N. Bryan-Kinns, A. Blanford, P. Curzon, & L. Nigay (Eds.), Proceedings of the 20th British HCI Group Annual Conference (pp. 85-102). London: Springer.

- Tovey, M. (1989). Drawing and CAD in industrial design. Design Studies, 10(1), 24-39.

- Turoff, M. (1991). Computer mediated communication requirements for group support. Journal of Organizational Computing, 1(1), 85-113.

- Vertegaal, R. (1999). The GAZE groupware system: Mediating joint attention in multiparty communication and collaboration. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 294-301). New York: ACM Press.