The Interaction-Attention Continuum: Considering Various Levels of Human Attention in Interaction Design

Saskia Bakker* and Karin Niemantsverdriet

Industrial Design department, Eindhoven University of Technology, Eindhoven, the Netherlands

Interactive systems are traditionally operated with undivided attention. Recently, such systems have begun to involve autonomous system behavior that takes place outside the user’s behest and attentional field. In everyday life, people perform actions with varying levels of attention. For example, we routinely wash our hands in our periphery of attention while focusing on having a conversation, or we might consciously focus on washing our hands if trying to remove paint from them. We argue that interactive systems currently cover only two extreme ends of a full spectrum of human attention abilities. With computing technology becoming ubiquitously present, the need increases to seamlessly fit interactions with technology into everyday routines. Inspired by influential early visions on ubiquitous computing (Weiser, 1991; Weiser & Brown, 1997), we believe that interfaces should facilitate interaction at varied levels of attention to achieve this, these being focused interaction, peripheral interaction and implicit interaction. The concept of the interaction-attention continuum presented here aims to support design researchers in facilitating Human-Computer Interaction to shift between these interaction types. We use four case studies on the design of interfaces for interactive lighting systems to illustrate the application of the interaction-attention continuum and to discuss considerations for design along the continuum.

Keywords – Calm Technology, Divided Attention, Interactive Lighting Systems, Peripheral Interaction, Tangible Interaction, Ubiquitous Computing.

Relevance to Design Practice – The article discusses the need to develop interaction designs that are usable at various levels of attention and provides a continuum to support design-researchers in applying this notion. This continuum and the design considerations we derive from case studies are relevant when designing interactive systems for everyday routines.

Citation: Bakker, S., & Niemantsverdriet, K. (2016). The interaction-attention continuum: Considering various levels of human attention in interaction design. International Journal of Design, 10(2), 1-14.

Received August 3, 2015; Accepted May 16, 2016; Published August 31, 2016.

Copyright: © 2016 Bakker & Niemantsverdriet. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: s.bakker@tue.nl

Saskia Bakker is an assistant professor in the Industrial Design department of the Eindhoven University of Technology. In 2015, she held a visiting lectureship at the University College London Interaction Center. She obtained her PhD in 2013 from the Eindhoven University of Technology for a dissertation entitled “Design for Peripheral Interaction”. With a background in industrial design, her expertise sits in research-through-design in the areas of tangible interaction, peripheral interaction and classroom technologies. Saskia is a co-organizer of international workshops on peripheral interaction and a member of the steering committee of the conference series on Tangible, Embedded and Embodied Interaction (TEI).

Karin Niemantsverdriet is a PhD student in the Industrial Design department of the Eindhoven University of Technology. After obtaining her MSc degree in 2014, she started her PhD project in collaboration with Koninklijke Philips N.V. on multi-user interaction with domestic lighting systems. Karin takes a research-through-design approach in her work, led by explorative design cases and in-context evaluations. Her interests include multi-user interaction, shared environments, awareness systems and tangible interaction.

Introduction

Over two decades ago, Weiser (1991) set out his influential vision for the 21st century in which computers of all sizes and functions are integrated in the everyday environment. In recent decades, the presence of computing technology in everyday environments has rapidly increased and much of Weiser’s vision has turned into reality, with digital technology integrated into many devices, from door knobs to mobile touchscreen-enabled devices. Nowadays, the digital world is omnipresent and available to be interacted with at any time. Perceiving and interacting with this digital information usually requires the user’s undivided attention, e.g., when looking at a smartphone screen or controlling a tablet through a graphical user interface. As an alternative to these focused interactions, many interactive systems are currently being developed that act autonomously based on sensor data, such as smart thermostats that adapt to users’ routines (Nest, n.d.). In contrast to attention-demanding, focused interactions, these interactive systems rely on implicit interactions (Ju, 2015; Ju & Leifer, 2011; Schmidt, 2000) that do not require any attention from the user and happen outside the user’s behest or intention.

When comparing focused and implicit interactions with computing technology to the way people interact with everyday physical environments, a remarkable difference can be observed. People can easily perceive and interact with the physical world without consciously thinking about it. For example, we do not have to consciously look outside to have an impression of the weather or time of day and we can easily tie our shoelaces while having a conversation. These actions and perceptions happen on a routine basis and neither require focused attention nor take place entirely outside of the attentional field; we can easily focus our attention on them whenever this is desired or required (e.g., when it unexpectedly starts to rain or when our shoelaces are entangled such that we need to focus to untangle them). These activities take place in the background or so called periphery of attention (Bakker, van den Hoven, & Eggen, 2010).

Weiser’s discussion of ubiquity in the computer for the 21st century (Weiser, 1991) also broached the need for computing devices to seamlessly blend into everyday life by operating in the periphery of attention (Weiser & Brown, 1997). This vision of ‘calm technology’ (Weiser & Brown, 1997) inspired many researchers in the domain of Human-Computer Interaction (HCI) to study digital information displays that can be perceived at the periphery of attention (Hazlewood, Stolterman, & Connelly, 2011; Heiner, Hudson, & Tanaka, 1999; Ishii et al., 1998; Matthews, Dey, Mankoff, Carter, & Rattenbury, 2004; Mynatt, Back, Want, Baer, & Ellis, 1998; Pousman & Stasko, 2006). Recently, the focus of such research has shifted towards studying both the perception of and interaction with digital information at the periphery of attention, enabling peripheral interaction with computing technology (Bakker, van den Hoven, & Eggen, 2015b; Edge & Blackwell, 2009; Hausen, Tabard, von Thermann, Holzner, & Butz, 2014; Olivera, García-Herranz, Haya, & Llinás, 2011). Despite these efforts, barely any computing devices enable peripheral interactions.

With the increasing ubiquity of technology, we believe that the vision of making interactive systems available in people’s periphery of attention is of growing relevance in order to seamlessly integrate computing technology into people’s everyday lives and environments. To achieve this, we argue that calm technology or peripheral interaction should not be seen as an alternative to focused or implicit interaction, but as a part of a continuum of interaction possibilities corresponding with varied levels of human attention. If interactive systems could seamlessly shift between focused, peripheral and implicit interaction, users would have the flexibility to choose the level of attention they wish to devote to the interaction depending on their context, goals and desires. Such flexibility was envisioned to be part of calm technology (Weiser & Brown, 1997), but current interactive systems seem to cover only two ends of the spectrum of human attention abilities, only offering scope for focused interaction and implicit interaction.

This article presents the interaction-attention continuum, which is aimed at supporting interaction design researchers in facilitating Human-Computer Interaction (HCI) at different levels of attention and in enabling shifts along this continuum as the user desires. The interaction-attention continuum is grounded in theories of human attention and aims to provide a cross-over between these theories and theories of HCI. The continuum aims to provide interaction design researchers and practitioners with a practical handle to view and explore interaction design from the angle of human attention. We illustrate the application of the interaction-attention continuum through four case studies on interaction design for interactive lighting systems. We conclude the article by discussing considerations for interaction design exemplified in the case studies.

Interaction Design along the Interaction-Attention Continuum

To seamlessly fit technology into our everyday lives, we believe it is crucial to offer interaction possibilities at various levels of attention. We start by grounding our work in attention theory. Following this, we introduce the interaction-attention continuum, review related interaction design work and discuss why it is crucial to bridge the gap between focused and implicit interaction, before continuing to present our design cases.

Theoretical Background: Varying Levels of Human Attention

While focused interactions engage a user’s center of attention, peripheral interactions occupy a user’s periphery of attention. In this subsection, we address the theoretical background on divided attention to further describe the distinction between the center and periphery of attention.

Divided attention theory defines attention as the division of a limited amount of mental resources over different activities (Kahneman, 1973; Wickens & Hollands, 2000). These can be bodily activities (e.g., cycling), sensorial activities (e.g., listening to music), cognitive activities (e.g., thinking about an upcoming agenda item), or combinations of the three. At any moment, many such activities are available to be undertaken, but we cannot undertake all of these so-called potential activities (Kahneman, 1973) at once; activities can only be executed when mental resources are allocated to them. Hence, when our limited mental resources are divided over two or more activities, we are performing these activities simultaneously (i.e., concurrent multitasking; Salvucci, Taatgen, & Borst, 2009). In line with divided attention theory, we describe the center of attention as the one activity to which most mental resources are allocated at any moment in time. The periphery of attention, often described in HCI literature as “what we are attuned to without attending to explicitly” (Weiser & Brown, 1997, p. 79), consists of all other activities (see also Bakker et al., 2010). Peripheral activities are always performed with a low amount of mental resources during a different main activity.
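The definitions of center and periphery can be made concrete with a small sketch. It is illustrative only: the numeric resource shares and the function name `split_attention` are assumptions of this sketch, since divided attention theory as summarized here does not prescribe how mental resources would be quantified.

```python
def split_attention(allocation):
    """Split ongoing activities into center and periphery of attention.

    `allocation` maps each activity to a hypothetical share of mental
    resources. The numbers are illustrative assumptions, not measurements.
    """
    if not allocation:
        return None, []
    # Center: the one activity to which most resources are allocated.
    center = max(allocation, key=allocation.get)
    # Periphery: all other activities that receive some resources.
    # Activities with no resources are only potential, not performed.
    periphery = [
        activity
        for activity, share in allocation.items()
        if activity != center and share > 0
    ]
    return center, periphery


center, periphery = split_attention(
    {"conversation": 0.7, "washing hands": 0.2, "hearing traffic": 0.1}
)
```

In this hand-washing example, the conversation would be the center of attention, while washing hands and hearing traffic would sit in the periphery.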

As detailed in the divided attention literature, performing multiple activities is only possible under particular conditions (Juola, 2016). Firstly, concurrent multitasking can only happen when the resource demand of the different activities is small. Resource demand decreases when operations have low difficulty (e.g., solving a complex equation requires more mental effort than listening to background music) and when operations become automated (Schneider & Chein, 2003) or habituated (Aarts & Dijksterhuis, 2000; Wood & Neal, 2007). Much less mental effort is needed for habituated activities such as walking than for activities that are performed for the first time, such as when learning how to type. Research on habits links to theories of learning (Aarts & Dijksterhuis, 2000) and skill acquisition (Newell, 1991), indicating that the time needed to perform an action generally decreases with practice and that this learning process involves an almost continuous interplay between action and perception (Newell, 1991). The interplay between action and perception is commonly suggested to improve the learnability and usability of interactive systems (Norman, 1998).

Secondly, the type of resources required to undertake potential activities determines whether multiple activities can be performed (Salvucci & Taatgen, 2008; Wickens & McCarley, 2008). For example, reading a book while driving a car is not feasible, whereas driving while that book is being read to you is not problematic. When two activities require visual attention, a bottleneck occurs (Salvucci & Taatgen, 2008) and the first activity must be stopped before the second can be executed. Given that many of our everyday activities happen on a routine basis, everyday scenarios such as having dinner, walking to the office or getting ready for work in the morning usually involve multiple habituated activities performed simultaneously (Bakker, van den Hoven, & Eggen, 2012). Some of these take place in the center of attention and others in the periphery of attention.

The process of dividing mental resources over various activities is highly dynamic. For example, when preparing dinner you might focus on cutting vegetables while listening to the news at one moment, briefly stop cutting to focus on a thought triggered by the news in the next instance and then shift focus toward the news while washing your hands. Activities regularly shift back and forth between the center and periphery of attention in everyday life, but most present interactive systems barely take such shifts into account in their interaction design.

Introducing the Interaction-Attention Continuum

The central message of this paper is the premise that in order to fit interactions with computing technology more seamlessly into our everyday routines, interactions with interactive systems should be available at various levels of attention. This section introduces the interaction-attention continuum, which is developed to support design-researchers in applying this notion to interaction design. Figure 1 depicts the interaction-attention continuum, which we illustrate by discussing the example of interactions with a modern lighting system in an office building at various levels of attention:

Imagine an office building in which an automatic lighting system using motion detectors is installed in the hallways. When office worker Joe enters the building, a motion sensor detects his presence and the lights in all the hallways on the ground floor automatically turn on. When he walks through the door into the hallway leading to his office, the hallway is nicely lit, which makes him feel welcomed and helps him to easily find his way. When the system was installed for the first time, Joe and his colleagues purposefully walked past the sensors a couple of times to explore how quickly the system reacted and what the range of the sensors was. Joe regularly has phone conversations for work and he always uses the benches located in the hallway to have these calls. During those phone conversations, it often happens that the lights turn off automatically when the sensors no longer detect Joe’s movement. It has happened so often that quickly moving his arm up and down to trigger the light to turn back on has become a routine action for Joe, which he regularly performs during a phone conversation.

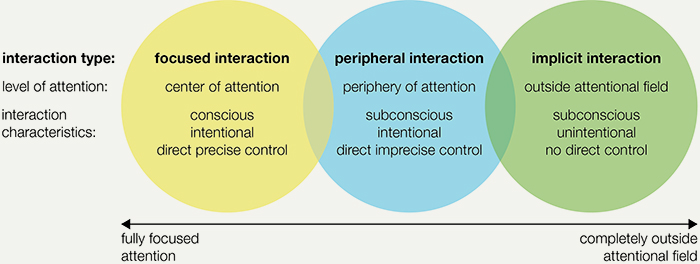

Figure 1. The interaction-attention continuum.

In this scenario we observe three different types of interaction with the lighting system: implicit, focused and peripheral. These interaction types form the core of the interaction-attention continuum (see Figure 1).

First, Joe enters the building on a regular morning, triggering the lights to turn on in all hallways on the ground floor through implicit interaction (Ju, 2015; Ju & Leifer, 2011; Schmidt, 2000). This interaction is subconscious: Joe is not consciously thinking about the fact that his actions trigger lights to turn on in other parts of the building. Furthermore, the interaction is unintentional. Joe does not enter the building with the intention of turning on the lights, but rather with the intention of starting his working day; the system nevertheless interprets this behavior as input. Such interactions thus happen outside the attentional field of the user.

Second, Joe purposefully walks around the sensing area to explore the system’s working, thus engaging in focused interaction. The interaction is clearly conscious, as Joe is fully aware that his actions trigger the lights to turn on; it is intentional, as turning the lights on is the purpose of his actions; and it happens in the center of attention.

Third, Joe routinely moves his arm up and down during a phone conversation to turn the lights on through peripheral interaction (Bakker et al., 2015b; Edge & Blackwell, 2009; Hausen et al., 2014). This interaction is performed habitually and is therefore to some extent subconscious. It happens in the periphery of attention, while being clearly intentional as the movement is performed with the intention of turning on the lights.

As becomes clear from the scenario of Joe’s interaction with his office’s lighting system, the exact same interactive system can be operated through focused interaction at one moment, through peripheral interaction at another and through implicit interaction at a third moment. Although the three types of interaction are presented as separate circles in Figure 1, the illustration should be seen as a continuum. Depending on the user’s mindset and their context, computing technology may at one moment be interacted with through focused interaction, in the next moment through peripheral interaction and in yet another case through implicit interaction. In fact, such interactions can quickly and easily shift between the different types while occurring. The borders between the different types of interaction in the interaction-attention continuum should, therefore, be seen as overlapping grey areas. Earlier research on peripheral interaction design for primary school teachers (Bakker et al., 2015b) has indeed indicated that shifts in the grey area between focused and peripheral interaction can take place after a learning period in which the user becomes accustomed to the interface. The earlier mentioned interplay between action and perception (Norman, 1998), which is crucial in skill acquisition (Newell, 1991), plays an important role in realizing such shifts.
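The three characterizations from Joe’s scenario can be summarized in a minimal sketch. It is a deliberate simplification: the two yes/no inputs and the names `InteractionType` and `classify` are assumptions made for illustration, whereas the continuum itself has overlapping grey areas rather than sharp borders.

```python
from enum import Enum


class InteractionType(Enum):
    FOCUSED = "focused"
    PERIPHERAL = "peripheral"
    IMPLICIT = "implicit"


def classify(intentional, in_center_of_attention):
    """Label a single moment of interaction.

    Hypothetical helper: it reduces the continuum to two binary
    properties, which ignores the grey areas between the types.
    """
    if not intentional:
        # Input the user did not intend to give, outside the attentional field.
        return InteractionType.IMPLICIT
    if in_center_of_attention:
        # Intentional and receiving most mental resources.
        return InteractionType.FOCUSED
    # Intentional, but performed alongside a different main activity.
    return InteractionType.PERIPHERAL


entering_building = classify(intentional=False, in_center_of_attention=False)
exploring_sensors = classify(intentional=True, in_center_of_attention=True)
waving_during_call = classify(intentional=True, in_center_of_attention=False)
```

Under these assumptions, entering the building is labeled implicit, exploring the sensors focused, and waving an arm during a call peripheral. A graded notion of attention, rather than a binary flag, would be closer to the continuum as described.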

Related Interaction Design and Research Work

The three types of interaction described in the interaction-attention continuum are not novel interaction styles. The continuum simply poses a view on them to illustrate the need to consider multiple interaction types and the possibility of shifting between them in the design of interactive systems for everyday life routines. In this section, we discuss examples of each of these three interaction types separately.

Focused Interaction

Focused interaction is the most common interaction type of the interaction-attention continuum. Most applications on laptops or desktop computers are developed for focused interaction, for example, text-editing, photo-viewing, instant messaging and gaming applications, as are most applications on smartphones and tablet computers, for example, weather, news, social media and travel planning applications. The user needs to focus attention toward the screen and input devices such as mouse, keyboard or touchscreen to obtain information from the applications or to operate them. This also holds for most other everyday interactive devices such as automated teller machines (ATMs), microwaves, coffee machines or ticket vending machines. These devices, which are a part of our everyday environment, require focused attention during interaction, as it is rather difficult to operate them while doing another activity such as having a conversation or reading the newspaper.

Implicit Interaction

Inspired by Weiser’s (1991) influential work on Ubiquitous Computing, in recent decades numerous researchers have studied systems that act autonomously based on sensor input as a particular alternative to focused interaction. The field of Ambient Intelligence (Aarts & Marzano, 2003), for example, studies connected electronic devices in the home environment that can sense and autonomously respond to the presence of people. The related term Internet of Things (Atzori, Iera, & Morabito, 2010) was introduced to describe future scenarios in which all types of everyday objects are enhanced with embedded computing technology and interconnected in a network, being able to identify users and offer appropriate interaction opportunities. The term context aware computing (Abowd et al., 1999) is used to identify how ubiquitous sensors can be used to determine and take into account information from the environment in actions taken by a computer.

All of the above-described fields of research rely on automatic sensing of people’s activity or presence as input for computer-initiated activities. Such sensors operate implicitly, without the user being aware of them. This type of Human-Computer Interaction is called implicit interaction (Ju, 2015; Ju & Leifer, 2011; Schmidt, 2000), which is defined as “an action, performed by the user that is not primarily aimed to interact with a computerized system but which such a system understands as input” (Schmidt, 2000, p. 192) and which happens “outside of the user’s notice or initiative” (Ju & Leifer, 2011, p. 80). Numerous examples of implicit interaction are discussed in research literature. For example, Ballendat et al. (2010) studied public displays that automatically adapt content based on the sensed proximity of potential users. Gullstrom et al. (2008) studied background tracking and analysis of computer users’ document-handling activities to optimize later document retrieval. Researchers in the field of ‘ambient assisted living’ (de Ruyter & Pelgrim, 2007; Muñoz, Augusto, Villa, & Botía, 2011) studied the use of hidden sensors in the homes of elderly and disabled people to provide input for computing systems that can support these users in their daily lives.

Apart from implicit interaction systems studied in research contexts, we see an increasing number of such systems becoming commercially available. Examples include the automatic lighting in the earlier-described scenario of Joe’s office, windshield wipers that start to move when driving through the rain, doors that open when detecting a person in front of them and cars that unlock when the owner bearing the car key is near. More recent examples include smart thermostats that detect and adapt themselves to a user’s routine (e.g., Nest), alarm clock applications that wake people at the most appropriate moment in their sleep cycle (e.g., Sleep Cycle alarm clock) and reminders that alert users to undertake intended activities based on their GPS location (e.g., Google Now).

Peripheral Interaction

Although both focused and implicit interactions have made their way into our everyday lives, peripheral interaction has mainly been explored in research studies. The field of peripheral interaction originates from calm technology (Weiser & Brown, 1997), a vision that inspired various related areas of research, including ambient information systems (Pousman & Stasko, 2006), ambient media (Ishii & Ullmer, 1997), peripheral displays (Matthews et al., 2004) and awareness systems (Markopoulos, 2009). These areas each aim to present information from computing systems to users in a subtle manner, such that it can be perceived in their periphery of attention. An early example is the dangling string (Weiser & Brown, 1997), a string connected to the ceiling of an office building that subtly spins to increase office users’ background awareness of the network activity in the office. Similarly, water lamps (Dahley, Wisneski, & Ishii, 1998) project shadows of water ripples on the ceiling to subtly display the heart rate of a significant other to promote a feeling of connectedness. More recent examples include Data Fountain (Eggen & Mensvoort, 2009), which presents relative currency values through the height of its water jets, enabling users to perceive this information at a glance, and Move-it sticky notes (Probst, Haller, Yasu, Sugimoto, & Inami, 2013), a system that augments physical sticky notes by adding motion to subtly remind users of upcoming tasks.

In recent years, some researchers have added to this field by aiming to enable users to both perceive digital information in their periphery of attention and to interact with this data at the periphery. These latter peripheral interactions (Bakker et al., 2015b; Edge & Blackwell, 2009; Hausen et al., 2014; Olivera et al., 2011) are inspired by peripheral activities in everyday life such as hand washing, drinking coffee or tying shoelaces. Edge and Blackwell (2009), for example, developed a tangible interaction design in which small tokens representing ongoing tasks could be manipulated by computer users at the side of their workspace, outside their visual field. Hausen et al. (2013) explored using gestures at the side of a computer worker’s desk space to enable direct access to frequently-used applications or functionalities in the periphery of attention. Wolf et al. (2011) explored various small hand-gestures that are suitable to quickly and unobtrusively provide coarse input to a computing system. Bakker et al. (2015a) studied peripheral interaction in a classroom context, enabling teachers to quickly communicate short messages to children while engaged in other teaching activities.

With only a few examples of peripheral interaction designs known, most current interactive systems occupy either the left or the right end of the interaction-attention continuum (Figure 1). Interaction designs in the middle of the continuum are sparse, which indicates a gap between these two extremes.

Bridging the Gap between Focused and Implicit Interaction

We believe that bridging the potential gap between focused and implicit interaction is necessary to complete the full continuum and thereby allow interactive technologies to be fluently embedded into our everyday routines. We hypothesize that this can be achieved by creating interactive systems that can be operated at various levels of attention, enabling interactions to shift along the interaction-attention continuum as desired by the user or appropriate to the context.

An Example: Interacting with an Interactive Lighting System

To illustrate the existence of and the need to bridge this gap in the middle of the interaction-attention continuum, we describe an example of everyday use of modern interactive lighting systems in the form of smartphone applications that can control the lighting at home. Various such systems with comparable functionality are commercially available (e.g., Belkin WeMo, Elgato Avea, LIFX, Mi.Light, or Philips Hue), being designed to be operated with either focused or implicit interaction. These interactive systems consist of networked light sources for which the color, intensity and saturation can be controlled wirelessly. Users can control the lighting through focused interactions with a smartphone application, for example, by selecting pre-set scenes or by dragging icons that represent individual light bulbs over a color gradient map. Alternatively, these systems can be programmed to enable implicit interaction. For instance, the systems can be coupled to the GPS location of the user’s smartphone to switch on the lights automatically when a user nears their house.

Although such systems provide many opportunities in terms of personalization, adaptation and flexibility in lighting use, we foresee some difficulties in the interaction. Imagine a person comes home at night to a house in which such an interactive lighting system is installed. With no pre-programmed implicit interactions, the only way to turn on the lights is through the smartphone application. This requires the person to take their smartphone out of their bag, unlock the phone, find the application and either select a preset or carefully place icons on selected light colors. This provides the user with very precise control over the lighting, but it seems like a needlessly laborious and complex action sequence to control the light in simple scenarios such as coming home at night. If the earlier mentioned implicit interactions were pre-programmed, the system would recognize that the user is about to enter and the person would come home to an already lit house. This makes the action of turning on the light effortless as it happens subconsciously, but such implicit interactions afford no direct control over the light change. For example, when coming home with the intention of going to bed immediately, we may not require all lights in the living room to turn on, in which case we need to again use the smartphone application to adjust the lighting to our needs. As a person’s lighting needs differ in different situations, depending on desires, intentions, or social context (Offermans, Essen, & Eggen, 2014), it seems unlikely that interactive systems could become fully aware of all these nuances through sensor data. Thus, implicit interaction with one’s lighting system will not always provide the most appropriate manner of interaction.

The scenarios of use described above show that focused interactions may be needlessly laborious and the resulting precise control potentially unnecessary. On the other hand, the implicit interaction will offer too little control in numerous situations. The gap between these two extremes is apparent; a possibility to have direct control over the light in an effortless, but imprecise manner is missing. The scope to control the lights through peripheral interaction is lacking, although this would likely support the system in seamlessly blending with people’s everyday routines. Please note that although we use the example of interactive lighting systems in line with our following case studies, this is only one example of the many modern interactive systems in which this gap is apparent.

In Conclusion

Although physical actions are often performed without focused attention as part of everyday routines in daily life, most modern interactive systems are designed for either focused interaction or implicit interaction. Interactive systems designed for peripheral interactions are rare. With interactive systems becoming more and more present in our daily lives, we argue that to fit computing technology seamlessly into everyday routines requires interactive systems to be developed such that interactions at all three levels of attention are possible. To further explore this premise, we present four case studies on interaction design for lighting in the home environment.

Case Studies: Conceptualizing Novel Interaction Design for Lighting

Through the work presented in this article, we argue that to fit computing technology seamlessly into our everyday routines, interactive systems need to offer interaction possibilities at various levels of attention. To explore this premise and its implications for interaction design, we conducted a number of exploratory case studies on interaction design for lighting in the home environment. These case studies explicitly explored the use of the interaction-attention continuum in the early stages of a design process to allow an open exploration of possible interaction styles suitable for shifts along the continuum. We chose to explore interfaces from the domain of interactive lighting because the earlier mentioned gap between focused and implicit interaction becomes apparent here, as is recognized in related literature (Offermans et al., 2014). The four case studies were conducted by teams of graduate students with a degree in industrial design or related subjects. The resulting designs are conceptual, developed as tangible prototypes without functioning sensing and actuation capabilities, but they surface interesting considerations for interaction design along the interaction-attention continuum.

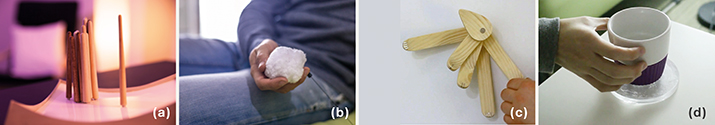

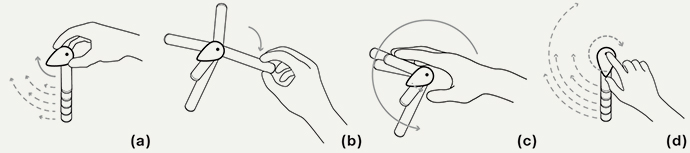

The four interfaces that resulted from the case studies are entitled sLight, Squeeze, Swivel and Coaster (see Figure 2). Each interface is designed for peripheral interaction and intends to enable shifts to one or both ends of the interaction-attention continuum. By presenting these interfaces, we lay out the challenges and opportunities in designing for shifts along the interaction-attention continuum. We first address the design and selection process that resulted in the four interfaces.

Figure 2. The mock-up prototypes of the four designs: (a) sLight, (b) Squeeze, (c) Swivel and (d) Coaster.

Design and Selection Process

A different design team conducted each of the four case studies as part of a graduate level interaction design course. In total, 16 teams of three students participated in two editions of the course. Each team was asked to design an interface with which users could control a connected lighting system in the home environment. The interface needed to be designed for peripheral interaction, while also enabling shifts along the interaction-attention continuum, a concept that was thoroughly discussed with each design team.

To gain additional insights into the human ability to act in the periphery of attention and to fluently shift activities between the center and periphery of attention, each designer engaged in a short session of autoethnography (O’Kane, Rogers, & Blandford, 2014). Each designer recorded a thirty-minute video of themselves while performing an everyday activity such as cooking, working on a computer or eating while watching a TV show. The designers viewed their recordings individually and within their teams to identify activities that were performed in the periphery of attention. All identified peripheral activities were captured in screenshots and served as inspiration during the design process.

The design teams iteratively developed their interfaces in a process in which building low-fidelity prototypes and evaluating these in informal user-evaluation sessions quickly followed each other, a process commonly practiced in interaction design (Hummels & Frens, 2008). For quick evaluation purposes, all design teams had access to a room equipped with a Philips Hue system that could be controlled either through a tablet interface or through a provided Processing sketch. Given the availability of this room, the design teams were able to quickly evaluate their designs using a Wizard-of-Oz setup (Dahlbäck, Jönsson, & Ahrenberg, 1993). Each team conducted at least three design iterations. The results of each iteration were discussed with the authors. Each design team produced a thoroughly iterated design proposal in the form of a physical mock-up prototype (see Figure 2).

The authors thematically clustered the sixteen design proposals to arrive at generalized insights regarding interaction design along the interaction-attention continuum. First, the authors discussed where each interface could fit on the continuum and how shifts along the continuum might potentially take place. Following this, the authors discussed the underlying interaction design principles that the interfaces relied on to potentially facilitate peripheral interaction and/or shifts along the interaction-attention continuum. This discussion led to the formulation of the design considerations presented in this article. Four case studies, which together cover a wide range of interaction styles and design principles, were then selected to illustrate these design considerations.

Case Study 1: sLight

sLight is a tabletop interface that allows for different levels of detail in controlling the light (see Figure 2a and Figure 3). sLight consists of a square surface with ascending corners and wooden sticks each representing one lamp available in the environment. The entire surface of sLight is slightly tilted so that the four corners can be distinguished from each other. The three lower corners of the surface each represent a different light preset, for example, a ‘dinner preset’, a ‘cooking preset’ and a ‘watching TV preset’. The presets are pre-programmed by the user, for example through a smartphone application, the interaction design of which is outside the scope of this design exploration. By placing all the sticks in a certain corner, the corresponding preset is applied to all lamps. For example, when placing all sticks in the corner representing the ‘dinner preset’, the user sets all lights in the room for dinner lighting. The one higher corner of the surface can be used to set bright white light. The placement of the stick on the slope represents the brightness. The higher the stick is positioned on the slope and the steeper the angle, the brighter the white light in that lamp will be (Figure 3c). To turn lights off, the sticks need to be pushed over until they lie flat on the surface. The layout of the sticks thus always gives a clear visual representation of the current light setting.

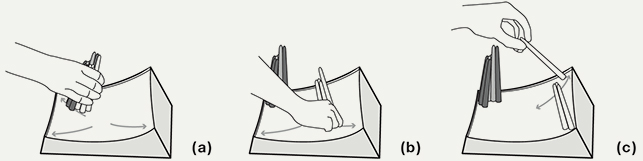

Figure 3. Interactions with sLight: (a) Move all sticks together to change the lighting of the whole room to a certain preset. (b) Change sticks of a single color to apply a different preset to one certain area of the room. (c) Position one stick on the highest slope to set individual lamps to white light of a certain brightness.

To distinguish the different sticks and thus the lamps they represent, the sticks vary in color and length. Colors represent the area of the room in which the lamps are located. For example, dark sticks represent lamps in the kitchen area and white sticks represent lamps in the lounge area of the room. The length of each stick represents the frequency of use of that particular lamp. For example, a reading lamp in the lounge area will be adjusted in brightness more often than the small atmospheric lamp in the corner of the room. In this example, the reading lamp would be assigned to a longer stick such that it is easier to grab individually.

sLight invites different types of interaction, which vary in level of attention and in level of detail:

- (a) All the sticks can be grabbed and placed in one corner at once to change the whole room’s atmosphere (Figure 3a). This quick move offers a low level of detail and is expected to be possible as a peripheral interaction. The angle of the surface slope provides haptic feedback with regard to position on the surface, diminishing visual attention required.

- (b) All sticks of a certain color can be selected to change only one area of the room (Figure 3b). This action requires visual attention to select the correct sticks, while also offering more detail in interaction. It is therefore likely less peripheral than the previous one and moves to the right, toward focused interaction, along the interaction-attention continuum.

- (c) Every lamp can be adjusted in brightness individually by selecting and moving the corresponding stick (Figure 3c). This more detailed interaction style requires visual attention to both select and place the stick in the right location and seems a clear example of a focused interaction.
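The mapping from stick placement to light setting described above can be sketched in a few lines of Python. This is an illustration only: the sLight mock-up had no sensing or actuation, so the `Stick` reading format, the corner names and the single normalized `height` value (standing in for position and angle on the slope) are all assumptions introduced here, not part of the design.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical names throughout: the sLight mock-up had no sensing, so the
# stick readings below are an assumed input format, not the authors' design.
PRESET_CORNERS = {"corner_1": "dinner", "corner_2": "cooking", "corner_3": "watching TV"}
WHITE_CORNER = "high_corner"


@dataclass
class Stick:
    lamp: str                 # lamp this stick stands for
    corner: Optional[str]     # corner the stick is placed in, if any
    height: float = 0.0       # 0.0 (bottom of slope) .. 1.0 (top), white corner only
    flat: bool = False        # True when the stick is pushed over


def lamp_command(stick: Stick) -> dict:
    """Translate one stick reading into a command for its lamp."""
    if stick.flat:
        # A stick lying flat switches its lamp off.
        return {"lamp": stick.lamp, "on": False}
    if stick.corner in PRESET_CORNERS:
        # One of the three lower corners: apply that corner's preset.
        return {"lamp": stick.lamp, "on": True, "preset": PRESET_CORNERS[stick.corner]}
    if stick.corner == WHITE_CORNER:
        # Higher corner: white light, brighter the higher the stick sits.
        brightness = min(1.0, max(0.0, stick.height))
        return {"lamp": stick.lamp, "on": True, "white": True, "brightness": brightness}
    # Stick is not in a recognized position: leave the lamp unchanged.
    return {"lamp": stick.lamp, "unchanged": True}
```

Interaction (a) then corresponds to calling `lamp_command` for every stick with the same corner, whereas interaction (c) changes the `height` of a single stick in the higher corner.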

Case Study 2: Squeeze

Squeeze consists of a soft ball that is attached to the couch via a cord and pulley (see Figure 2b and Figure 4). It can be used in two ways: (1) functionally to actively control the lighting or (2) as a fidgeting object to fiddle with while being engaged in other activities such as reading or watching TV.

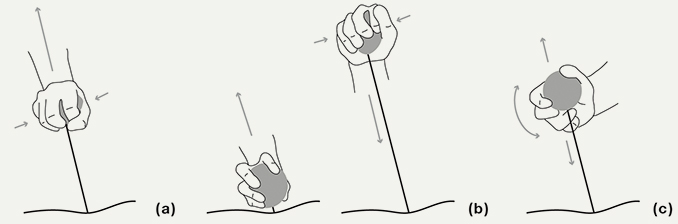

Figure 4. Interaction with Squeeze: (a) Squeeze and pull to increase brightness. The amount of pressure applied while squeezing determines the number of lamps that are affected. (b) Pull without squeezing and apply pressure when releasing the cord to dim the light. (c) Fidget with Squeeze by applying little pressure. This results in subtle, but dynamic brightness changes in the ambient lamps.

Squeeze can be pulled to increase brightness or released to decrease brightness of lights in the vicinity of the interface (Figure 4a). The pulling distance determines the amount of change in brightness. By squeezing the interface during the interaction, users determine which lamps are affected. When little pressure is applied, a few ambient lamps closest to the interface are adjusted, while maximum pressure allows all lamps in the room to be adjusted. When intending to increase the brightness in the entire room, the user grabs Squeeze firmly, pulls it away from the couch until the desired brightness level is reached and then releases the ball. To dim the lights, one should first pull out the cord without pressure, then apply the amount of pressure that represents the desired number of lamps and subsequently release the cord while maintaining this pressure to dim the lights (Figure 4b). This sequence of interaction might require some experience to achieve, but since the number of light parameters is limited and the actions themselves are easy to execute, we expect that it should be easy to learn, potentially enabling peripheral interaction.
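One way to read the pressure and pulling rules above is as a single update step, sketched below in Python. The sketch is hypothetical: Squeeze was a mock-up without sensors, so the normalized `pressure` and `pull_delta` values, the `gain` parameter and the rule that the number of affected lamps grows linearly with pressure are assumptions made for illustration.

```python
import math


def affected_lamps(lamps_by_distance, pressure):
    """Pick the lamps closest to Squeeze; more pressure reaches more lamps.

    lamps_by_distance: lamp names ordered nearest first (assumed input).
    pressure: normalized squeeze pressure, 0.0 (none) .. 1.0 (maximum).
    """
    pressure = min(1.0, max(0.0, pressure))
    count = math.ceil(pressure * len(lamps_by_distance))
    return lamps_by_distance[:count]


def apply_pull(brightness, lamps_by_distance, pressure, pull_delta, gain=1.0):
    """Return updated brightness levels after the cord moves by pull_delta.

    pull_delta > 0 means the ball is pulled away from the couch, < 0 means
    the cord is released. Brightness values are kept within 0.0 .. 1.0.
    """
    updated = dict(brightness)
    for lamp in affected_lamps(lamps_by_distance, pressure):
        updated[lamp] = min(1.0, max(0.0, updated[lamp] + gain * pull_delta))
    return updated
```

Under these assumptions, pulling without pressure changes nothing, which is why the dimming gesture starts with an unsqueezed pull followed by a squeezed release (a negative `pull_delta` with non-zero `pressure`).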

Next to its functional purpose, Squeeze is designed to invite fidgeting and is thus located on the couch and given a soft and squeezable shape. Squeeze can be moved around, pulled, stroked and tilted, for example (Figure 4c). Naturally, the light effects of such fidgeting interactions are the same as when functionally interacting with Squeeze. However, we expect that when fidgeting, only little pressure is applied and the moving distance is small so these interactions would result in only very small adjustments of brightness in the ambient lamps, causing a playful, dynamic lighting effect. These interactions may to some extent become subconscious and can be unintentional. For example, the user might not intend to interact with the light, but simply to fidget, moving such interactions towards the implicit interaction end of the interaction-attention continuum.

Case Study 3: Swivel

Swivel is a light interface positioned on the wall next to the entrance of the living room. It allows the quick selection of one preset for all lamps and more detailed brightness control for individual lamps or groups of lamps (see Figure 2c and Figure 5). Swivel consists of five pointers and a preset dial, all positioned around a central pivot. The pointers each represent either one individual lamp or a group of lamps to be controlled together, for example, multiple lamps above one dinner table. Similar to the sLight design, the mapping between the pointers and the lamps as well as the presets for the preset dial are assumed to be preprogrammed through, for example, a smartphone application, although this aspect is outside the scope of this exploration. The orientation of each pointer represents the brightness of the corresponding lamp or group of lamps; downward directed pointers represent lamps that are turned off while turning the pointers clockwise increases the corresponding lamps’ brightness.

Figure 5. Interaction with Swivel: (a) By rotating the preset dial, one out of five pre-programmed presets can be selected. After selection, the pointers automatically move to match the brightness as defined by the preset. (b) Users can adjust the brightness of a (group of) lamp by rotating the pointers. (c) Turning pointers downward will turn (groups of) lamps off. (d) Swivel keeps track of use patterns. Pressing the dial activates the autonomous mode, in which Swivel predicts which preset might be most appropriate for the time of day.

Using the preset dial, users can select one of the five preprogrammed lighting presets. By selecting a preset (Figure 5a), all pointers automatically rotate towards the position that corresponds to the brightness settings from that preset, so that the position of the pointers always gives an accurate visual representation of the selected brightness settings. Users can adjust the brightness of a lamp or group of lamps by rotating the corresponding pointer by hand (Figure 5b and c). Conceptually, Swivel enables users to directly manipulate the lighting and also to have the lighting adjusted autonomously. By tracking use patterns over time, the user’s routines could be estimated. By pressing the preset dial, the interface will automatically select the preset estimated to be most appropriate at that moment (Figure 5d). If the user does not desire the presented preset, they can adjust the light setting manually. The manual adjustments could be used as input for the learning algorithm.
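The learning algorithm behind the autonomous mode is not specified in this conceptual design. As a minimal stand-in, the Python sketch below counts how often each preset was chosen at each hour of the day and proposes the most frequent one. The class name `PresetPredictor`, its methods and the hourly granularity are assumptions for illustration and do not describe an implemented part of Swivel.

```python
from collections import Counter, defaultdict


class PresetPredictor:
    """Frequency-count stand-in for Swivel's unspecified learning algorithm."""

    def __init__(self):
        # hour of day (0-23) -> how often each preset was chosen in that hour
        self._counts = defaultdict(Counter)

    def record(self, hour, preset):
        """Log a manual preset selection or correction as a training example."""
        self._counts[hour % 24][preset] += 1

    def predict(self, hour, default=None):
        """Return the preset chosen most often at this hour, or default."""
        counts = self._counts.get(hour % 24)
        if not counts:
            return default
        return counts.most_common(1)[0][0]
```

In this reading, pressing the dial would call `predict` with the current hour, and any manual adjustment that follows would be passed to `record`, so that corrections feed back into later predictions.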

Swivel offers detailed, yet quick access to presets, which, when the position of a preset on the dial is memorized, should require little visual attention and might therefore move to the periphery of attention. Adjusting brightness of groups of lamps individually is likely to require more effort as a cost of offering more detailed control and is expected to shift towards focused interaction on the interaction-attention continuum. Pressing the dial and setting Swivel in autonomous mode is likely the most effortless since it triggers the system to decide on the most suitable preset based on data gathered earlier through implicit interaction. In contrast to regular implicit interactions such as turning on the lights automatically when the user comes home, Swivel rotates its pointers to indicate the selected preset, which allows the user to take direct control by adjusting these pointers and thereby the brightness of the lights they represent. Swivel thus explores a shift between implicit and peripheral interaction.

Case Study 4: Coaster

Coaster is a lighting interface and functional coaster in one (see Figure 2d and Figure 6) and consists of a flat round interface that can be turned and pushed. When Coaster is upside down, nearby lamps are turned off. Flipping Coaster over turns the light in the surroundings on in the last-used preset (Figure 6a). By rotating Coaster clockwise, the brightness of the lights increases relative to the current setting. Rotating the device counterclockwise decreases the brightness (Figure 6b). By pressing down while turning, the hue of the lights can be adjusted (Figure 6c). Coaster only controls lighting in the direct surroundings of the interface. Taking the interface to a different location moves the light setting to that new location.

Figure 6. Interaction with Coaster: (a) When upside down, all lamps are off. Turning Coaster over will make the lights turn on in their last-used setting. (b) By rotating Coaster, brightness of the local lamps can be adjusted. (c) By applying pressure when rotating, the color of local lamps can be adjusted. Interactions can be done by rotating the cup or the coaster itself.
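The behavior of Coaster described above amounts to a small state model, sketched here in Python. The sketch rests on assumptions that are not part of the mock-up: brightness is normalized to 0.0..1.0, hue is expressed in degrees, the `degrees_per_full_range` parameter sets how far Coaster must be turned to cover the full brightness range, and rotation is ignored while Coaster is upside down.

```python
class Coaster:
    """Toy state model of Coaster; units and gains are assumptions."""

    def __init__(self, brightness=0.5, hue=0.0, degrees_per_full_range=180.0):
        self.face_up = False          # upside down: nearby lamps are off
        self.brightness = brightness  # last-used brightness, 0.0 .. 1.0
        self.hue = hue                # last-used hue in degrees, 0 .. 360
        self._range = degrees_per_full_range

    def flip(self):
        """Turn Coaster over; lights resume their last-used setting."""
        self.face_up = not self.face_up

    def rotate(self, degrees, pressed=False):
        """Clockwise is positive. Pressing while turning adjusts hue instead."""
        if not self.face_up:
            return  # lamps are off, so rotation is ignored in this sketch
        if pressed:
            self.hue = (self.hue + degrees) % 360.0
        else:
            change = degrees / self._range
            self.brightness = min(1.0, max(0.0, self.brightness + change))

    def lamp_state(self):
        """State to send to the lamps near Coaster's current location."""
        if not self.face_up:
            return {"on": False}
        return {"on": True, "brightness": self.brightness, "hue": self.hue}
```

Because the setting is stored in the object rather than in the lamps, carrying Coaster to another location and sending `lamp_state()` to the lamps there reproduces the described effect of the light setting moving along with the interface.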

Although detail in control is limited with Coaster in that it only offers control over lights in the surroundings and only enables adjusting two light parameters, the interactions are straightforward and quick and might thus easily move to the periphery of attention. With the interface being a functional coaster, adjusting the light is also hypothesized to easily become a part of the routine of drinking coffee.

Discussion and Considerations for Interaction Design

This article presents the interaction-attention continuum, which covers three types of interaction with computing technology, each at a different level of human attention: focused interaction, peripheral interaction and implicit interaction. We explored the application of this continuum through four design case studies. Although the resulting interaction designs were only developed as mock-up prototypes and evaluated informally during design iterations using demonstrations with a Wizard-of-Oz setup and group discussions, the designs revealed relevant considerations for interaction design along the interaction-attention continuum that can potentially be used to support interaction design-researchers in bridging the gap between focused and implicit interaction.

Tangible Gesture Interaction

All of the presented designs make use of tangible interaction (Hornecker & Buur, 2006; Ullmer & Ishii, 2000) in that each design enables the lighting to be controlled by manipulating one or more physical artifacts. Most known interactive lighting systems rely either on graphical user interfaces that offer focused interaction possibilities or on background sensing of human activity for implicit interaction. Through our design explorations, we have experienced tangible interaction as a suitable means for peripheral interaction, as confirmed in related literature (e.g., Bakker et al., 2015b; Edge & Blackwell, 2009). Because these interfaces are tangible, we imagine them being readily available for interaction within users’ everyday routines. This is vastly different from smartphone applications that are currently used to interact with modern lighting systems, for which users need to unlock their phone, find the application and browse through a menu before they are able to interact with their lighting.

Reflecting on the presented designs, we furthermore imagine their tangibility to support seamless shifts along the interaction-attention continuum. In the designs of sLight and Swivel, the physical appearance of the interface forms a direct representation of the current digital state of the system. This overlap in control and representation, a key characteristic of tangible user interfaces as defined by Ullmer and Ishii (2000), may help to support transitions between interactions at different levels of attention. With Swivel, for example, when users select a preset using the preset dial, Swivel autonomously adjusts the locations of its pointers so that all elements of the interface visually represent the new lighting state. Because controls and representation overlap, the user can immediately take detailed control over the lighting by adjusting the preset or each of the pointers manually, either through focused or peripheral interaction. At such moments, interactions thus shift along the continuum from implicit to peripheral or focused interaction, which is facilitated by the tangibility of the interface.

In contrast to sLight and Swivel, the physical controls of Squeeze and Coaster do not directly represent the system’s digital state. In Squeeze, this state is represented in the expressive gestures such as squeezing and pulling that a user performs with the interface. Squeeze is thereby an example of tangible gesture interaction (Hoven & Mazalek, 2011). The interaction style in the Squeeze design is intended to invite playful fidgeting, potentially enabling it to shift along the continuum towards implicit interaction; a user might perform fidgeting interactions subconsciously and unintentionally while still providing input to the system. Tangible gesture interactions might in this way support shifts along the continuum from peripheral to implicit interaction.

Supporting Learning through Interaction

Routinely understanding input-output relations is important to enable peripheral interaction (Bakker et al., 2015b). Theories of habituation and skill acquisition furthermore indicate that activities usually only become habitual (Aarts & Dijksterhuis, 2000) after a learning period characterized by a process of action and perception of intermediate results (Newell, 1991). We expect that the previously described overlap in representations and controls, as seen in sLight and Swivel, might facilitate a similar learning process. The visual appearance of these interfaces always represents the digital state of the system, that is, the current setting of the lighting. Since this digital state is also visually available in the room, looking or glancing at the interface of, for example, sLight, even without the intention to start interacting can support a user in gaining an understanding of the interface’s input-output relations. Similarly, seeing the location and orientation of the pointers in Swivel might facilitate the user’s understanding of the system’s autonomous behavior. When Swivel autonomously shifts to a different preset, the interface ‘explains’ its behavior by moving the pointers and dial to the new setting.

Another interaction design element that might support the user’s understanding of input-output relations is the combination of visual and haptic information embedded in the presented tangible interfaces. Each of the designs can potentially be operated with minimal visual attention, guided by haptic cues such as elevated surface edges (sLight), different pointer lengths (Swivel), resistance while pressing (Squeeze) or the number of physical interface elements (sLight and Swivel). These haptic cues are consistent with the visual appearance of the interfaces. For example, the elevated surface corners of sLight can be both felt and seen. When starting to use such interfaces, users will likely operate them with visual attention. In such cases, the haptic cues may not consciously be felt, but when interacting with these interfaces more often, we expect that users would gradually get used to both the appearance and the feel of the interfaces without consciously thinking about it. Potentially, interfaces in which haptic and visual elements are coherently combined might support the process of learning to use the interfaces without visual focus in the periphery of attention.

Lastly, Squeeze is designed to enable and invite playful fidgeting interactions. Fidgeting as a form of interaction has been explored in the HCI literature to improve productivity and concentration on a main task (Karlesky & Isbister, 2013). Although the fidgeting interactions in Squeeze have not been designed for such a concrete goal, we envisage that fidgeting with the interface could support habituation to the nature of interaction. When absentmindedly fidgeting with Squeeze, for example, squeezing and pulling the interface has the same effect on the lighting as when such interactions are performed to functionally adjust the lighting. Since the time and effort required to conduct an activity tends to decrease with practice (Newell, 1991), these fidgeting interactions could be seen as practice without the user consciously thinking about it, which might later support functional interactions shifting from the center to the periphery of attention.

Coherently Offering Various Levels of Control

As a result of the tangibility of our designs, we have realized that offering various levels of control within one coherent interaction design might facilitate shifts along the interaction-attention continuum between focused and peripheral interaction. Both sLight and Swivel employ this. By moving the sticks of the sLight interface, for example, users can (a) change all lights at once, (b) change a group of lights or (c) change each individual light to exactly the desired brightness. Clearly option (c) offers much more detailed control compared to option (a). However, as a trade-off, option (c) likely demands more attention and effort and therefore must be performed through focused interaction whereas (a) is most likely a possible peripheral interaction as part of one’s everyday routine. Similarly, with Swivel users can decide whether they quickly rotate all pointers in one movement, possibly through peripheral interaction, or they may select individual pointers to rotate to exactly the right brightness. Clearly, when more precise control is needed, more focus is required and the interaction will shift along the interaction-attention continuum from being peripheral towards being focused. This is in line with related literature (Offermans et al., 2014), which recommends that increased focus and effort should be properly balanced with a resulting reward, that is, it should lead to a more suitable light setting. In both our examples, the interaction and input-output relations are coherent across different interaction types. We believe this coherence might support shifting along the continuum, since it diminishes time and effort to get used to multiple interaction styles.

Contextual Considerations

Throughout the design process leading to each of the presented interaction designs, we realized that next to the earlier-mentioned tangible interaction related design considerations, taking context and location of the interface into account is crucial to enable interaction at various levels of attention. For example, Swivel needs a central place in the room as its functionality involves implicit interaction and global control, requiring users to monitor Swivel while in autonomous mode and to have an overview of the room and light setting when explicitly interacting with the interface. Squeeze partially relies on users fidgeting with the interface, an action that likely takes place during another main activity. We hypothesize that this could work in a lounge area where people are watching TV or are engaged in conversation, but it would likely not work in an office or kitchen context where people’s main activities require the hands to manipulate other tools or interfaces. Coaster is likely to result in more peripheral interactions when it is located around a coffee table and when it can be used to make small lighting adjustments while drinking, but it seems much less appropriate as a global lighting interface. Considering context, both location and people’s activities and routines at those locations (Schmidt, Beigl, & Gellersen, 1998), seems crucial for interaction designs that aim to shift along the interaction-attention continuum.

Combining Interfaces

Although all the presented interfaces are designed to shift along the continuum, clearly none of them offer the ideal interaction design to cover the full range of possible interaction types on the interaction-attention continuum. This is clearest in the case of Coaster, which offers relatively detailed control over two parameters through interactions in the periphery of attention, but only for light in the direct surroundings. Interacting with the global light setting in the room would only be possible by walking around, which is likely too laborious for daily lighting interactions. Coaster could therefore be a suitable interface for particular moments in the everyday routine, for example, when sitting at the table, but it seems unsuitable to be the only interface in a room. sLight offers potential for focused and peripheral interaction, but not for implicit interaction. There might also be situations where users would like more detailed control possibilities through focused interaction than sLight offers, for example, to determine specific colors or color temperatures for each lamp. Squeeze offers possibilities for focused and peripheral interaction, but yet again more detailed control may be desired in certain situations. Furthermore, different kinds of implicit interaction could be beneficial as well, such as automatically adjusting the light according to learned user routines. Although the latter functionality is included in Swivel, this interface offers only detailed control of brightness through focused interaction. Additionally, both sLight and Swivel rely on preset lighting scenes that a user needs to determine through a separate interface on a smartphone application. We see none of the presented interfaces as single solutions to lighting interaction, but envisage them to function in parallel to other lighting interfaces that together cover the full range of the interaction-attention continuum.

As mentioned earlier, smartphone applications as known in present interactive lighting systems (e.g., Philips Hue) are very suitable for creating lighting scenes with precisely the right colors, brightness and saturation through focused interaction, but lack the scope to shift interaction to the periphery of attention. A combination of multiple interfaces, such as one for occasional detailed control and one for everyday interaction, might therefore be used to offer the full range of possibilities along the interaction-attention continuum. None of the four presented design concepts offer the ideal interface for each situation and context. We see them as an initial step to explore the design space around the middle of the interaction-attention continuum, facilitating peripheral interaction as well as shifts to focused and implicit interactions.

Conclusions

The work presented in this article centers around the observation that everyday interactive systems are usually designed for either focused interaction or implicit interaction. However, these two interaction styles cover only the two ends of a continuum of human attention abilities, a continuum that also includes peripheral interaction. We believe that with computing technology becoming omnipresent, it is essential to design interactive systems that are to become part of our everyday life routines such that they can be operated at various levels of attention, that is, through focused interaction when detailed control is required, through peripheral interaction when operated as a routine activity in which imprecise control suffices and through implicit interaction when no control is required or no attention is available. Moreover, interactive systems can blend into our everyday routines when they are designed to enable shifts between these interaction types, requiring designers and researchers to consider various levels of attention in their interaction designs. To support this, we have presented the interaction-attention continuum and grounded this continuum in attention theory and related work. We have further illustrated this continuum through four case studies of interaction designs for interactive lighting systems that are to become integrated in people’s everyday environments and routines.

From these design explorations, we have extracted a number of considerations for designs that aim to shift along the interaction-attention continuum. As such, we found that tangible gesture interaction, particularly when coherently offering various levels of control, is a suitable interaction style to facilitate shifts along the continuum. Additionally, we concluded that interfaces that support learning through interaction potentially enable users to easily gain an understanding of input-output relations, which may support the interaction to shift to the periphery of attention. Moreover, we found that contextual considerations are key to fitting interfaces seamlessly into people’s everyday routines and that combining interfaces can further facilitate this process.

The work presented in this article contributes to interaction design-research by posing a view on interaction design through a lens of human attention abilities. With human attention becoming a scarce resource in present everyday environments, we believe that this view, which builds on foundational work on ubiquitous computing (Weiser, 1991; Weiser & Brown, 1997), is of increasing relevance today and in the near future. Although our designs only present an initial exploration of the design space opened up by the interaction-attention continuum, we believe this offers numerous opportunities for further work on interaction design for everyday life in the present and future.

Acknowledgments

We thank the students who designed the case studies that we presented in this work: Eef Lubbers, Jesse Meijers and Tom van ‘t Westeinde (sLight); Akil Mahendru, Koen Scheltenaar and Matthijs Willems (Squeeze); Carlijn Valk, Debayan Chakraborty and Wendy Dassen (Swivel); and Nanna Kristiansen, Marjolein Schets and Yudian Jin (Coaster). We also thank Berry Eggen, Serge Offermans, Dzmitry Aliakseyeu and Remco Magielse for their input and constructive feedback during the course.

References

- Aarts, E. H. L., & Marzano, S. (2003). The new everyday: Views on ambient intelligence. Rotterdam, the Netherlands: 010.

- Aarts, H., & Dijksterhuis, A. (2000). Habits as knowledge structures: Automaticity in goal-directed behavior. Journal of Personality and Social Psychology, 78(1), 53-63.

- Abowd, G. D., Dey, A. K., Brown, P. J., Davies, N., Smith, M., & Steggles, P. (1999). Towards a better understanding of context and context-awareness. In Proceedings of the 1st International Symposium on Handheld and Ubiquitous Computing (pp. 304-307). Berlin Heidelberg, Germany: Springer-Verlag.

- Atzori, L., Iera, A., & Morabito, G. (2010). The internet of things: A survey. Computer Networks, 54(15), 2787-2805.

- Bakker, S., van den Hoven, E., & Eggen, B. (2010). Design for the periphery. In Proceedings of the Eurohaptics Symposium on Haptic and Audio-Visual Stimuli (pp. 71-80). Amsterdam, the Netherlands: Enschede Twente.

- Bakker, S., van den Hoven, E., & Eggen, B. (2012). Acting by hand: Informing interaction design for the periphery of people’s attention. Interacting with Computers, 24(3), 119-130.

- Bakker, S., van den Hoven, E., & Eggen, B. (2015a). Evaluating peripheral interaction design. Human-Computer Interaction, 30(6), 473-506.

- Bakker, S., van den Hoven, E., & Eggen, B. (2015b). Peripheral interaction: Characteristics and considerations. Personal and Ubiquitous Computing, 19(1), 239-254.

- Ballendat, T., Marquardt, N., & Greenberg, S. (2010). Proxemic interaction: Designing for a proximity and orientation-aware environment. In Proceedings of the International Conference on Interactive Tabletops and Surfaces (pp. 121-130). New York, NY: ACM.

- Dahlbäck, N., Jönsson, A., & Ahrenberg, L. (1993). Wizard of Oz studies: Why and how. In Proceedings of the 1st International Conference on Intelligent User Interfaces (pp. 193-200). New York, NY: ACM.

- Dahley, A., Wisneski, C., & Ishii, H. (1998). Water lamp and pinwheels: Ambient projection of digital information into architectural space. In Summary of the SIGCHI Conference on Human Factors in Computing Systems (pp. 269-270). New York, NY: ACM.

- de Ruyter, B., & Pelgrim, E. (2007). Ambient assisted-living research in Carelab. Interactions, 14(4), 30-33.

- Edge, D., & Blackwell, A. F. (2009). Peripheral tangible interaction by analytic design. In Proceedings of the 3rd International Conference on Tangible and Embedded Interaction (pp. 69-76). New York, NY: ACM.

- Eggen, B., & van Mensvoort, K. (2009). Making sense of what is going on “around”: Designing environmental awareness information displays. In P. Markopoulos, B. de Ruyter, & W. Mackay (Eds.), Awareness systems: Advances in theory, methodology and design (pp. 99-124). London, UK: Springer.

- Gyllstrom, K., Soules, C., & Veitch, A. (2008). Activity put in context: Identifying implicit task context within the user’s document interaction. In Proceedings of the 2nd International Symposium on Information Interaction in Context (pp. 51-56). New York, NY: ACM.

- Hausen, D., Boring, S., & Greenberg, S. (2013). The unadorned desk: Exploiting the physical space around a display as an input canvas. In P. Kotzé, G. Marsden, G. Lindgaard, J. Wesson, & M. Winckler (Eds.), Human-Computer Interaction – INTERACT 2013 (pp. 140-158). Berlin Heidelberg, Germany: Springer.

- Hausen, D., Tabard, A., von Thermann, A., Holzner, K., & Butz, A. (2014). Evaluating peripheral interaction. In Proceedings of the 8th International Conference on Tangible, Embedded and Embodied Interaction (pp. 21-28). New York, NY: ACM.

- Hazlewood, W. R., Stolterman, E., & Connelly, K. (2011). Issues in evaluating ambient displays in the wild: Two case studies. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 877-886). New York, NY: ACM.

- Heiner, J. M., Hudson, S. E., & Tanaka, K. (1999). The information percolator: Ambient information display in a decorative object. In Proceedings of the 12th Annual ACM Symposium on User Interface Software and Technology (pp. 141-148). New York, NY: ACM.

- Hornecker, E., & Buur, J. (2006). Getting a grip on tangible interaction: A framework on physical space and social interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 437-446). New York, NY: ACM.

- Hummels, C., & Frens, J. (2008). Designing for the unknown: A design process for the future generation of highly interactive systems and products. In Proceedings of the 10th International Conference on Engineering and Product Design Education (pp. 204-209). Barcelona, Spain.

- Ishii, H., & Ullmer, B. (1997). Tangible bits: Towards seamless interfaces between people, bits and atoms. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 234-241). New York, NY: ACM.

- Ishii, H., Wisneski, C., Brave, S., Dahley, A., Gorbet, M., Ullmer, B., & Yarin, P. (1998). AmbientROOM: Integrating ambient media with architectural space. In Summary of the SIGCHI Conference on Human Factors in Computing Systems (pp. 173-174). New York, NY: ACM.

- Juola, J. F. (2016). Theories of focal and peripheral attention. In S. Bakker, D. Hausen, & T. Selker (Eds.), Peripheral interaction: Challenges and opportunities for HCI in the periphery of attention. London, UK: Springer.

- Ju, W. (2015). The design of implicit interactions. Synthesis Lectures on Human-Centered Informatics, 8(2), 1-93.

- Ju, W., & Leifer, L. (2008). The design of implicit interactions: Making interactive systems less obnoxious. Design Issues, 24(3), 72-84.

- Kahneman, D. (1973). Attention and effort. Englewood Cliffs, NJ: Prentice Hall.

- Karlesky, M., & Isbister, K. (2013). Designing for the physical margins of digital workspaces: Fidget widgets in support of productivity and creativity. In Proceedings of the 8th International Conference on Tangible, Embedded and Embodied Interaction (pp. 13-20). New York, NY: ACM.

- Markopoulos, P. (2009). A design framework for awareness systems. In P. Markopoulos, B. de Ruyter, & W. Mackay (Eds.), Awareness systems: Advances in theory, methodology and design (pp. 49-72). London, UK: Springer.

- Matthews, T., Dey, A. K., Mankoff, J., Carter, S., & Rattenbury, T. (2004). A toolkit for managing user attention in peripheral displays. In Proceedings of the 17th Annual ACM Symposium on User Interface Software and Technology (pp. 247-256). New York, NY: ACM.

- Muñoz, A., Augusto, J. C., Villa, A., & Botía, J. A. (2011). Design and evaluation of an ambient assisted living system based on an argumentative multi-agent system. Personal and Ubiquitous Computing, 15(4), 377-387.

- Mynatt, E. D., Back, M., Want, R., Baer, M., & Ellis, J. B. (1998). Designing audio aura. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 566-573). New York, NY: ACM.

- Nest. (n.d.). Meet the Nest learning thermostat. Retrieved April 14, 2016, from https://nest.com/thermostat/meet-nest-thermostat/

- Newell, K. M. (1991). Motor skill acquisition. Annual Review of Psychology, 42, 213-237.

- Norman, D. A. (1998). The design of everyday things. New York, NY: Basic Books.

- Offermans, S. A. M., van Essen, H. A., & Eggen, J. H. (2014). User interaction with everyday lighting systems. Personal and Ubiquitous Computing, 18(8), 2035-2055.

- O’Kane, A. A., Rogers, Y., & Blandford, A. E. (2014). Gaining empathy for non-routine mobile device use through autoethnography. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 987-990). New York, NY: ACM.

- Olivera, F., García-Herranz, M., Haya, P. A., & Llinás, P. (2011). Do not disturb: Physical interfaces for parallel peripheral interactions. In P. Campos, N. Graham, J. Jorge, N. Nunes, P. Palanque, & M. Winckler (Eds.), Human-Computer Interaction – INTERACT 2011 (pp. 479-486). Berlin Heidelberg, Germany: Springer.

- Pousman, Z., & Stasko, J. (2006). A taxonomy of ambient information systems: Four patterns of design. In Proceedings of the Working Conference on Advanced Visual Interfaces (pp. 67-74). New York, NY: ACM.

- Probst, K., Haller, M., Yasu, K., Sugimoto, M., & Inami, M. (2013). Move-it sticky notes providing active physical feedback through motion. In Proceedings of the 8th International Conference on Tangible, Embedded and Embodied Interaction (pp. 29-36). New York, NY: ACM.

- Salvucci, D. D., & Taatgen, N. A. (2008). Threaded cognition: An integrated theory of concurrent multitasking. Psychological Review, 115(1), 101-130.

- Salvucci, D. D., Taatgen, N. A., & Borst, J. P. (2009). Toward a unified theory of the multitasking continuum: From concurrent performance to task switching, interruption, and resumption. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1819-1828). New York, NY: ACM.

- Schmidt, A. (2000). Implicit human computer interaction through context. Personal Technologies, 4(2-3), 191-199.

- Schmidt, A., Beigl, M., & Gellersen, H. (1998). There is more to context than location. Computers and Graphics, 23, 893-901.

- Schneider, W., & Chein, J. M. (2003). Controlled & automatic processing: Behavior, theory, and biological mechanisms. Cognitive Science, 27(3), 525-559.

- Ullmer, B., & Ishii, H. (2000). Emerging frameworks for tangible user interfaces. IBM Systems Journal, 39(3-4), 915-931.

- van den Hoven, E., & Mazalek, A. (2011). Grasping gestures: Gesturing with physical artifacts. Artificial Intelligence for Engineering Design, Analysis and Manufacturing, 25(3), 255-271.

- Weiser, M. (1991). The computer for the 21st century. Scientific American, 265(3), 94-104.

- Weiser, M., & Brown, J. S. (1997). The coming age of calm technology. In P. J. Denning & R. M. Metcalfe (Eds.), Beyond calculation: The next fifty years (pp. 75-85). New York, NY: Springer.

- Wickens, C. D., & Hollands, J. G. (2000). Engineering psychology and human performance (3rd ed.). Upper Saddle River, NJ: Prentice Hall.

- Wickens, C. D., & McCarley, J. S. (2008). Applied attention theory. Boca Raton, FL: CRC Press.

- Wolf, K., Naumann, A., Rohs, M., & Müller, J. (2011). Taxonomy of microinteractions: Defining microgestures based on ergonomic and scenario-dependent requirements. In P. Campos, N. Nunes, N. Graham, J. Jorge, & P. Palanque (Eds.), Human-Computer Interaction – INTERACT 2011 (pp. 559-575). Berlin Heidelberg, Germany: Springer-Verlag.

- Wood, W., & Neal, D. T. (2007). A new look at habits and the habit-goal interface. Psychological Review, 114(4), 843-863.