Perceptual Information for User-Product Interaction:

Using Vacuum Cleaner as Example

Graduate School of Design, National Yunlin University of Science and Technology, Douliou, Taiwan

The purpose of this study is to identify which product designs for parts and directions are most effective, and then to propose how perceptual information could best be designed to facilitate user-product interaction. Three categories of perceptual information for product operational tasks were proposed in this study. Task analysis and usability evaluations were carried out to analyze what information users required while they performed the operational tasks. Finally, a primary model was proposed that defined specific types of entities and three kinds of perceptual information, namely Behavioural Information (BI), Assemblage Information (AI), and Conventional Information (CI), as significant elements of the model. The information required for the various types of vacuum cleaner parts is as follows: 1) for the user-part category, the applications for operability, functionality, and operational directions are required, and BI and CI provide effective support for these applications; 2) for the part-part category, the application for assembly-ability is required, and AI and CI provide effective support for this application; and 3) for the user-part-part category, the applications for operability, functionality, operational directions, and assembly-ability are required. BI and CI provide effective support for the applications for operability, functionality, and operational directions, whereas AI and CI provide effective support for the application for assembly-ability.

Keywords - Interaction, Perceptual Information, Product Design.

Relevance to Design Practice - The model of perceptual information application to the product design proposed and discussed in this research provides an approach for designing interfaces that facilitate user-product interaction.

Citation: Chen, L. H., & Lee, C. F. (2008). Perceptual information for user-product interaction: Using vacuum cleaner as example. International Journal of Design, 2(1), 45-53.

Received May 16, 2007; Accepted February 24, 2008; Published April 1, 2008

Copyright: © 2008 Chen & Lee. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: hao55@mail2000.com.tw

Introduction

Product functions have become more complex as technology progresses, and the design of the product interface is often not intuitive. In product design, designers give products an innovative form and, even more importantly, narrow the gap between users and a product in operation. Clearly, user-friendly products are preferable to those that are not (Courage & Baxter, 2005). Product designers need to deal carefully with possible interaction problems between users and product interfaces. Furthermore, Dix, Finlay, Abowd and Beale (2004) stated that a person’s interaction with the outside world occurs through the exchange of information. Today, an important concern for designers is how product design can convey information to better help users understand product functions.

In general, users interact with products through an interface. The controls, as well as the labels and signs, on the product hardware are part of the user interface (Hackos & Redish, 1998), which acts as a user-product information channel (Thimbleby, 1990; Baumann & Thomas, 2001). While designing user interfaces, designers may employ techniques such as affordance or signs as cues for operations. The term affordance, which was coined by Gibson (1979), describes an intrinsically behavioural relationship between users and objects (You & Chen, 2006) and refers to the function or usefulness of an object (Vihma, 1995; Reed, 1988; Hartson, 2003; Galvao & Sato, 2005). As Norman (1990) stated, affordance provides strong cues to the operations of objects. For example, just by looking at them, users know that plates can be pushed with the hands and knobs can be turned with the fingers. Furthermore, the affordance of an object can be specified by perceptual information (Reed, 1988; Gaver, 1991; McGrenere & Ho, 2000). Thus, the physical features are the information that relays the possible behaviours to users. In addition to physical properties, signs, icons, indexes, texts, and the like are used to illustrate the functions and operations of a product. As with signs, icons need to be represented in an appropriate form to facilitate the perception and recognition of their underlying meaning (Rogers & Sharp, 2002). Well-designed icons can help users to act quickly and confidently, because their meanings are immediately recognized (Horton, 1994). Some products have displays that show their functions and statuses with graphical icons and/or texts. Typographies and images are created by designers to communicate information (Howlett, 1996). These human-created signs are effective in facilitating user-product interaction.

In design, perception is designed for action (Ware, 2000) and can lead to appropriate action (Djajadiningrat, 1998). Perception refers to how information is extracted from the environment; vision is the most dominant sense for sighted individuals (Rogers & Sharp, 2002). We argue that the attributes of an object should show specific visual information to assist users in operating a product. The aim of this study is to create visual information to facilitate user-product interaction. This study employs a case study to illustrate how users perceive the attributes of parts on a product and then proposes a model for applying the information to product design.

Information in Operating Products

The physical properties and signs of objects play different and important roles in presenting specific information to users. The physical properties of an object can be regarded as the physical-perceptual information that specifies the operability or assembly-ability of an object, while the signs on an object can be regarded as the conventional information that specifies functionality and operational directions.

Behavioural Information

The physical-perceptual information refers to the physical properties of an object (form, material, and size) that correspond to user size and capacity. As Frens (2006) pointed out, the term “information-for-use” means that the form of the object shows how the object can be operated, and it is directly related to the users’ physical abilities. Such physical properties of an object are the information that guides users in performing certain operations. For example, in Figure 1, the part marked with a rectangle shows the front of a digital camera, and users can see that their right hand would work better (Spolsky, 2001). The diameter and height of a control knob should be between 10 mm and 30 mm if it is to be operated with two or three fingers (Baumann & Thomas, 2001). The parts of a product are particularly important because users generally act directly upon them (Borghi, 2004). In this study, the physical properties of the product parts that users directly perceive and operate with their body parts are termed Behavioural Information (BI), which indicates the operability of the parts. Furthermore, the physical-property notion has been applied to the design of elements on screen, in which the size, shape, and relationship to other elements help users to easily understand their correct function (Howlett, 1996).

Figure 1. Kodak digital camera (Spolsky, 2001, p. 26). Image by Joel Spolsky, used with permission.

Assemblage Information

Aside from the relationship between physical properties and the body parts, it is necessary to consider the assembling-physical relationship among the product parts as well. For example, the mapping between the top of a cup and its lid shows where the lid should be placed on the cup. The physical-corresponding relationship refers to the physical properties shared between two objects, namely size and form. Many objects are made of many parts (Norman, 1990). To help users figure out the relationship among product parts and how to use them properly, the “physical constraints” between one part and another should be designed to match each other (Norman, 1990). Krippendorff (2006) stated that constraints restrict the actions that an artifact can accept. The physical-corresponding properties of object-object relationships not only offer physical constraints for operation but also offer visual cues as information for the operational behaviours; these cues are termed Assemblage Information (AI). AI indicates the assembly-ability of two individual parts and helps users to understand how to operate the object properly. However, in general, the physical feature is not the only perceptual information found in recent products. Images, symbols, texts, and so forth also serve as important pieces of perceptual information in relation to the experiences, conventions, or knowledge of the users.

Conventional Information

Conventional Information (CI) refers to the signs and texts on products that serve as perceptual information, helping users to quickly become aware of certain operations and functions of products. Designers can use icons on the cover of a product to illustrate its capabilities, and memories and associations in the users’ minds interpret the meanings of the icons (Horton, 1994). For instance, conventions that users are familiar with can help them to understand what an icon stands for, such as an image of a printer representing “print” (Constantine & Lockwood, 1999). The operation of a product can also be represented with signs; for example, arrows or texts on the buttons of a remote control can illustrate how to adjust the volume on a television. In such cases, users know the meaning of the signs because of their experiences or the conventions that they have learned. As McGrenere and Ho (2000) and Hartson (2003) stated, words or symbols on a button are a kind of “cognitive affordance,” and perceiving them may depend on the user’s experience and culture. Therefore, in this study, the signs are referred to as Conventional Information and present certain operations and functions of products.

BI, AI, and CI play different and important roles in assisting user operations. Firstly, BI indicates how users can act upon the parts with their bodies and in what operational posture, such as grasping with the hands or pressing with the feet. The BI of a part should fit the size of the human body, and there should be a behavioural-physical relationship between the body region and the product part. Next, the matching-physical relationship between two particular parts can be determined from their AI. The physical properties of the parts (size and form) are significant cues for users to understand the matching relationship of the two parts and how to assemble them in the proper orientation. Finally, CI relates to individual experience or convention. It can present operational directions and functions clearly through indexes, signs, or texts, expressing how to operate a product’s parts or functions. For instance, an arrowhead or text on the knob of a device can indicate its operational directions and functions.
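The definitions above can be restated as a small lookup structure. The following is a minimal Python sketch for illustration only; the names `InfoType`, `CUES`, `APPLICATIONS`, and `describe` are hypothetical and do not correspond to any tool used in this study.

```python
from enum import Enum


class InfoType(Enum):
    """Three categories of perceptual information (identifiers are illustrative)."""
    BI = "Behavioural Information"
    AI = "Assemblage Information"
    CI = "Conventional Information"


# How each type is presented on a part, following the descriptions in the text.
CUES = {
    InfoType.BI: ("form", "material", "size"),
    InfoType.AI: ("form", "size"),
    InfoType.CI: ("signs", "texts", "indexes"),
}

# What each type specifies for the user.
APPLICATIONS = {
    InfoType.BI: ("operability",),
    InfoType.AI: ("assembly-ability",),
    InfoType.CI: ("functionality", "operational direction"),
}


def describe(info: InfoType) -> str:
    """Return a one-line summary of an information type."""
    cues = ", ".join(CUES[info])
    apps = ", ".join(APPLICATIONS[info])
    return f"{info.name} ({info.value}): shown by {cues}; specifies {apps}"


for info in InfoType:
    print(describe(info))
```

Keeping the cues and the applications in separate tables mirrors the distinction drawn in the text between how information is presented and what it tells the user.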

Method

A vacuum cleaner is used as a case study in this section to show how different perceptual information can be presented in product parts and what roles the information plays in guiding user operations. This section includes two phases, “user task analysis” and “usability evaluation.” Based on the results, a model for applying information to product design is proposed.

Task Analysis

Procedure and Material

In the first step, five observations were carried out to analyze user operational behaviours, as well as to assess the related parts on the product. Moreover, in this step, the perceptual information that the subjects encountered during the operational process was analyzed. This study was designed with seven stationary tasks. This limited the users’ behavioural possibilities. The vacuum cleaner and related assemblies were arranged on the floor, and the subjects were allowed to view the parts and to perform the tasks without time constraint.

Subjects and Tasks

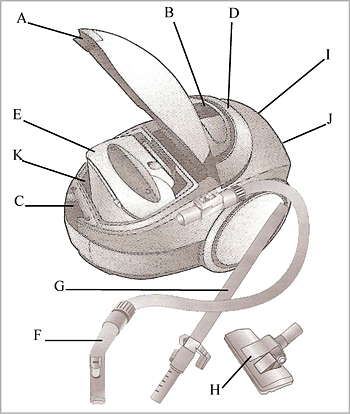

Five subjects (mean age 31.4, SD=3.0) participated in the study and were asked to perform the tasks set up for this study. While they performed these tasks, their operational processes were videotaped. All subjects had experience using vacuum cleaners. The data from the observations were analyzed principally based on the way users operated the vacuum cleaner and its related parts. Here, the related parts were determined based on how the subjects proceeded with the tasks. To begin with, the main body of the vacuum cleaner was divided into several parts, which were then reassembled as required to complete the tasks. Figure 2 shows the related parts of the vacuum cleaner for the tasks. The seven tasks set up in this study are as follows: 1) connect the flexible pipe with the inhalant pipe and the floor brush; 2) adjust the button on the floor brush and the length of the inhalant pipe; 3) plug in the power cord, turn on the power, adjust the suction, and begin using the vacuum cleaner; 4) turn off the power and unplug the power cord; 5) clean the dust box; 6) clean the filter behind the body of the vacuum cleaner; and 7) return the body of the vacuum cleaner to the upright position.

A: Cover grip; B: Function controls; C: Socket for inhalant pipe; D: Handle; E: Dust box; F: Flexible pipe; G: Inhalant pipe; H: Floor brush; I: Filter; J: Power cord plug; K: Fillister for dust box.

Figure 2. Parts of a vacuum cleaner.

Usability Evaluation

The usability evaluation assessed whether the perceptual information identified in the task analysis was sufficient for the designated tasks in this study. Ten subjects, five males and five females (mean age 32.1, SD=5.2), were asked to perform the tasks and then to rate subjectively the difficulty of each subtask within the tasks. A seven-level Likert scale was used to measure the difficulty level of each subtask, with 1 for quite difficult and 7 for quite easy. All subjects had experience using vacuum cleaners. In addition to rating the difficulty level, the subjects were asked to write down the reasons for any difficulties that they encountered after completing the tasks. In this way, whether adequate perceptual information was provided for completing the tasks could be evaluated immediately.
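The per-subtask score described above is a mean of ratings on the 1 to 7 scale. The following is a minimal Python sketch of that arithmetic; the ratings are hypothetical placeholders and are not data from this study, and the function name `summarize` is an assumption made for illustration.

```python
from statistics import mean, stdev

# Hypothetical ratings from ten subjects for one subtask (NOT data from this study).
# Scale: 1 = quite difficult ... 7 = quite easy.
ratings = [5, 6, 4, 7, 5, 6, 5, 4, 6, 5]


def summarize(scores):
    """Return (mean, sample SD) after checking every score is on the 1-7 scale."""
    if not all(1 <= s <= 7 for s in scores):
        raise ValueError("ratings must lie between 1 and 7")
    return mean(scores), stdev(scores)


avg, sd = summarize(ratings)
print(f"mean = {avg:.1f}, SD = {sd:.2f}")
```

Because a higher score means an easier subtask on this scale, a low mean points to a subtask for which the available perceptual information may have been inadequate.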

Analysis and Results

Task Analysis

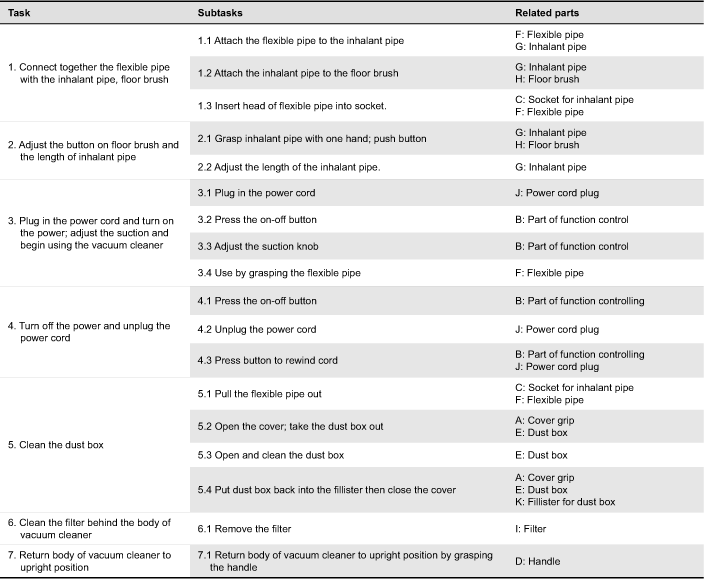

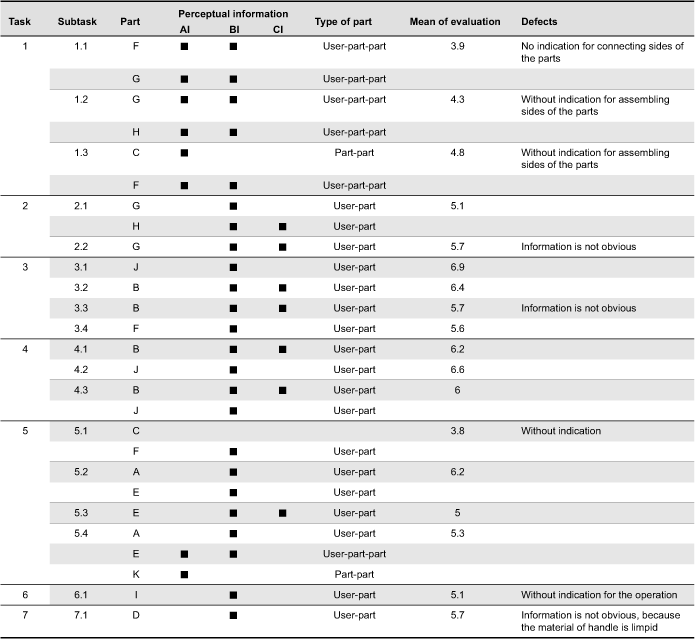

In this study, the seven tasks were further separated into subtasks based on the data from observations made while the users completed the tasks. Each subtask involved related parts of the vacuum cleaner, as shown in Table 1. For example, task 1 consisted of three subtasks and involved four parts: C, F, G, and H. For each subtask, the subjects performed operational behaviours upon the related parts. It is necessary to analyze the perceptual information of the parts that the subjects encountered in the operational process in order to clarify how well the perceptual information explained the specific applications.

Table 1. Tasks and related parts

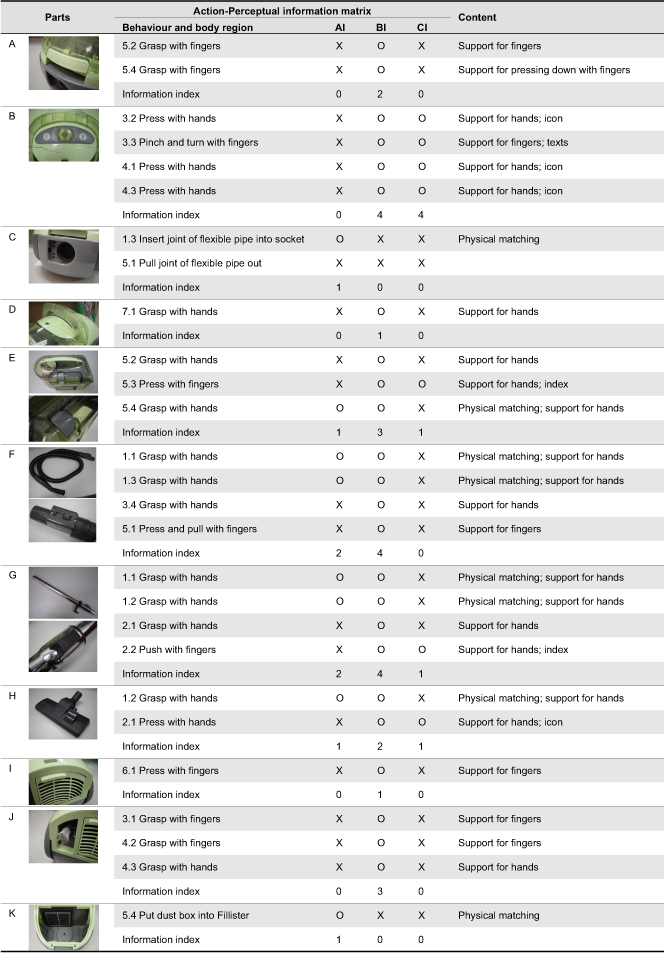

Through task analysis, the behaviours and the body regions involved for each part during the operational process, as well as the types of perceptual information presented for each part by subtasks, can be extracted and are shown in Table 2. The information index in Table 2 reports the sum of the information for each part of the whole task.

Table 2. Analysis of information for the tasks

Analyzing Table 2, we found that BI, AI, and CI played different roles in assisting users’ operations. BI shows the physical properties of the parts (form and size) and indicates how users should act on the parts and with what postures and body regions. AI shows the physical and assembling relationship between one part and another; these are the visual cues that indicate the assembling position. CI relates to user knowledge, experience, and conventions; in this case, it was shown with icons, texts, or indexes on the parts, which indicate the functionality and operational directions of the parts. Furthermore, the different kinds of perceptual information must be coordinated with one another for a task to be completed. For example, for the grip on part B, which controls the function, BI and CI display the pinch-ability and the function, which users manipulate by pinching with the fingers to adjust the degree of suction. Thus, the degree of suction is controlled by pinching and turning in a clockwise or counterclockwise direction according to the texts on the part.

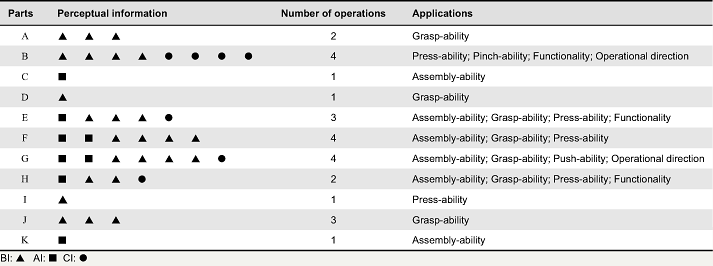

Table 3 shows a summary of the perceptual information that the subjects encountered while performing the tasks and the applications that this information specifies. An operational behaviour can be regarded as an intention that corresponds to the perceptual information the user needs. In this case, two separate pieces of perceptual information were sometimes needed for one operation. For example, BI and CI of part B were both present to express the press-ability and functionality required for performing the operational tasks. From this viewpoint, as shown in Table 3, BI, AI, and CI specify the applications of the parts: operability, assembly-ability, and functionality. That is, BI presents the applications for operability, such as grasp-ability and press-ability; AI displays the application for assembly-ability, that is, the corresponding-physical relationship between two specific parts; and CI shows the application for functionality and operational direction, such as what happens when a button is pressed or which way to turn the knob on a radio to adjust the volume.

Table 3. Parts, perceptual information, and applications

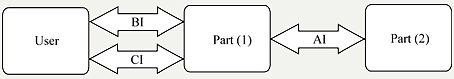

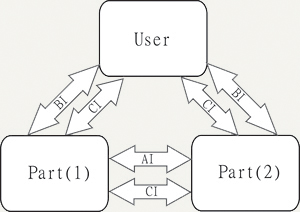

Table 3 shows, from the relationship of perceptual information between users and the parts, that BI and CI are necessary for users to understand the parts, whereas AI was needed between two particular parts, as shown in Figure 3. Figure 3 illustrates the relationship between the various types of entities (user-part, part-part, and user-part-part) and the perceptual information (BI, AI, and CI) for the tasks. For the user-part type, a certain part may need to be grasped by both hands while the index on the part indicates the operational direction; hence, both BI and CI need to be presented to users for specific operational tasks. For the part-part type, AI is essential for presenting the physical-assembling relationship between two specific parts; an example is subtask 1.3 in Table 1, where part F needed to be inserted into part C. For this subtask, the users did not need to operate part C directly by hand; instead, they grasped part F to insert it into part C. The user-part-part type combines the two types above: the part is not only operated by the user’s body but also needs to be assembled with another part for a certain task to be completed. Therefore, BI, CI, and AI were all used to present the applications of the parts to users.

Figure 3. Information about entity types.

Usability Evaluation

Depending on the subtasks involved, a part may be classified as user-part-part, user-part, or part-part. This section uses the usability evaluation to assess whether the perceptual information presented was adequate to guide users in operating the parts correctly and quickly. Table 4 shows, for each subtask, the parts involved, the type of each of those parts, the mean of the subjective evaluation, and the reason for operational faults based on results from the ten subjects. For subtask 1.1, parts F and G belong to the user-part-part category. The subjects had difficulty understanding how to assemble them properly, since there were no proper instructions indicating which ends of the two parts should be connected. The mean of the evaluation based on the subjects’ responses is 3.9. A similar operational fault occurred in subtasks 1.2 and 1.3, in which the subjects could not easily understand the instructions for assembling parts G and F. In task 5, most of the subjects did not know that part F had to be pulled out before part E could be removed from part K. Part I is classified as a user-part in task 6, and some subjects could not figure out that it had to be pressed or lifted with their fingers to remove it from the main body of the vacuum cleaner. As a result, there was not sufficient perceptual information on the parts for the operational tasks to be finished effectively. In addition, for subtasks 2.2, 3.3, and 7.1, CI and BI were not obvious enough, and this resulted in some subjects not knowing how to complete the subtasks. In contrast, most of the subjects could perform the operational tasks efficiently when the perceptual information was sufficient, as in subtasks 3.1, 3.2, 4.1, 4.2, and 5.2.

Some operational faults are caused by a lack of sufficient information on the parts. Therefore, Figure 3 can be redrawn reasonably as Figure 4. That is, for specific operational tasks, CI should be added to the part-part classification to indicate immediately the assembling relation between certain parts. Finally, a model of perceptual information application to a product design can be proposed. In terms of operational tasks in this study, the product parts can be classified into three categories as shown in Figure 4 and mentioned above: user-part, part-part, and user-part-part. They are described below:

- User-part: The user-part is only operated by users manually for specific operational tasks. Hence, the perceptual information is necessary for operability, functionality, and operational direction, and BI and CI are needed to be able to effectively show how the part is to be operated. For instance, for the task of adjusting the suction in this case, BI indicated what postures and body regions should act on the knob; CI indicated the functionality and operational direction of the knob for the task.

- Part-part: The part-part does not need to be operated manually but does need to be assembled with another part. Hence, it needs perceptual information for assembly-ability for specific operational tasks, and AI and CI need to be able to show effectively how the parts are to be assembled.

- User-part-part: The user-part-part may not only need to be operated by users but also assembled with another part for specific operational tasks. Hence, it may need to present various kinds of perceptual information. CI and BI can show operability, functionality, and operational direction of the part; AI and CI need to be able to effectively support assembly-ability of the part.

Figure 4. Model of information for entities.
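The three categories described above amount to a mapping from entity category to required perceptual information. The following is a minimal Python sketch of that mapping under stated assumptions: the names `REQUIRED_INFO`, `classify`, and `missing_info` are hypothetical, and the check for missing information is an illustration rather than a procedure carried out in this study.

```python
# Perceptual information called for by each entity category in the revised model.
REQUIRED_INFO = {
    "user-part": {"BI", "CI"},
    "part-part": {"AI", "CI"},
    "user-part-part": {"BI", "AI", "CI"},
}


def classify(operated_by_user: bool, assembled_with_part: bool) -> str:
    """Assign an entity category to a part for one operational task."""
    if operated_by_user and assembled_with_part:
        return "user-part-part"
    if operated_by_user:
        return "user-part"
    if assembled_with_part:
        return "part-part"
    raise ValueError("part is not involved in this task")


def missing_info(category: str, present: set) -> set:
    """Return the information types the model calls for but the part lacks."""
    return REQUIRED_INFO[category] - set(present)


# Hypothetical example: a part that is grasped and inserted into another part
# but carries no sign indicating which end to connect.
category = classify(operated_by_user=True, assembled_with_part=True)
print(category, sorted(missing_info(category, {"BI", "AI"})))
```

A part's category depends on the task at hand, so the same part may be classified differently from one subtask to the next.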

Discussions

In terms of product design, designers care more about what actions the users perceive to be possible than what is true (Norman, 1999). Information is not serviceable if users cannot perceive it. Therefore, when perceptual information is applied to product design, it is important to consider how the perceptual information of a product is perceived and realized by the users. When the information is not obvious or sufficient, the users cannot immediately perceive the functionalities of the parts. As Table 4 shows, for subtasks 2.2, 3.3, and 7.1, some subjects thought that the information was not obvious for the subtasks; for subtask 6.1, some subjects thought that there was not sufficient information for the subtask. However, the means of the evaluation based on subject responses are 5.7 and 5.1, respectively. Perhaps the subtasks were not difficult for the users, and all that they needed was to spend a little time figuring out the information or to try operating in different ways to complete the subtasks. Even so, designers should enhance the perceptual information for specific operational tasks and show it more clearly.

Table 4. Usability evaluation

The application of BI in this study is similar to the affordance concept. Affordance is the intrinsically behavioural relation between users and objects (You & Chen, 2006). Nevertheless, in general, past experience or convention cannot be ignored entirely, especially when products are operated by users in an artificial environment. As Silver (2005) noted, people have learned most of the conventions: buttons are for pushing, knobs are for turning, switches are for flicking, and strings are for pulling. By convention, users also know that turning the knob on a device clockwise means to increase and that turning it counterclockwise means to decrease. Sometimes, signs can reinforce the information for proper operation. For modern product design, not only do designers need to take into account the information about physical-behavioural relationships between users and objects, but they also need to understand the users’ cultures, social settings, experiences, and knowledge of conventions. Furthermore, the different pieces of perceptual information should present the proper operational cues to users.

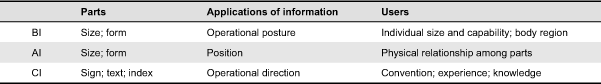

As the model illustrates, the perceptual information plays different roles in presenting specific applications for user-product interaction. The model refers to objects, applications of the information, and users. It also points out what roles the different types of information play in terms of objects, their applications, and users, as shown in Table 5. BI can be presented through the size, form, and material of parts, which indicate what operational postures should act on the parts relative to individual size, capability, and body regions. AI can also be presented through the size and form of parts; it shows the proper way to assemble the various parts and explains how the parts are related. CI of a part can be presented by semiotic techniques, such as signs, texts, and indexes, that refer to users’ experiences and knowledge of conventions. BI, AI, and CI can be regarded as approaches to presenting the applications of an object. CI indicates the operational direction and function of a part, but sometimes the operational directions are performed based on users’ habitual behaviours or visual cues from an ambient event. Cooper (1995) noted that users are able to understand how objects are manipulated by hand, not because of an instinctive understanding, but because of their tool-manipulating nature. Similarly, when you grasp the handle of a drawer and realize that the drawer is set in a desk, you may naturally pull the drawer out. The habitual behaviours and the realization of the ambient event in part refer to users’ conventions or experiences; in general, simple operations do not need CI, whereas particular or complex operations do. In general, products are designed for the majority of people. Designers assume that nearly everyone has similar capabilities and limitations. However, designers should be aware of individual differences, such as gender, physical capabilities, and intellectual capabilities (Dix et al., 2004). For the design of CI, designers need to consider cultural backgrounds, as each culture has its own way of dealing with certain situations or cues (Howlett, 1996).

Table 5. Perceptual information in parts, applications and users

As the results show, the model proposed is specific to this case study. The perceptual information that specifies the applications and the types of entities proposed in this study may differ from those in other products. Entity types depend on the kinds of products. For instance, a mobile phone may not need to be assembled or connected with other parts during the operational process, which makes it different from a vacuum cleaner. Hence, the part-part category would not exist for this product. Therefore, the perceptual information should be designed for specific purposes; sometimes not all information is essential for a specific task, and superfluous information may cause confusion. For instance, in subtask 5.2, BI in part A was sufficient for the operational task. The habitual behaviours arising from the operational posture indicated by BI, together with the visual cue from the ambient event (the layout of the cover on the main body), could lead users to carry out the operational direction of the part, which required it to be drawn upward with the fingers. It is worth studying further whether the model proposed in this study can be applied extensively to all kinds of products and whether the information in the model is sufficient to make user-product interaction more intuitive.

Conclusion

In this study, three categories of perceptual information have been proposed that play different roles in presenting their applications for facilitating user-product interaction. This study explored how the information applies to product design by analyzing the information of parts for specific operational tasks. We arrived at a primary model of how the different kinds of information present the applications that are significant for the various types of entities of a product. The types of entities proposed in this study may be extensible to other kinds of products. It should be noted that this study focused purely on visual information. It is worth investigating further the types of entities in different kinds of products and other types of product information, such as sounds, to address the deficiencies of the model. The primary model can be developed further into an evaluation model for assessing whether the information is sufficient for the parts in a product. As mentioned above, such a model would serve as a design reference and contribute to designing and improving user-product interaction.

References

- Baumann, K., & Thomas, B. (2001). User interface design for electronic appliances. London: Taylor & Francis.

- Borghi, A. M. (2004). Object concepts and action: Extracting affordances from objects parts. Acta Psychologica, 115(1), 69-96.

- Constantine, L. L., & Lockwood, L. A. D. (1999). Software for use: A practical guide to the models and methods of usage-centered design. Reading, MA: Addison Wesley.

- Cooper, A. (1995). About face: The essentials of user interface design. Foster City, CA: IDG Books Worldwide.

- Courage, C., & Baxter, K. (2005). Understanding your users: A practical guide to user requirements methods, tools, and techniques. Boston: Morgan Kaufmann Publishers.

- Dix, A., Finlay, J., Abowd, G. D., & Beale, R. (2004). Human-computer interaction (3rd ed.). Harlow, England: Prentice-Hall.

- Djajadiningrat, J. P. (1998). Cubby: What you see is where you act: Interlacing the display and manipulation spaces. Unpublished doctoral dissertation, Delft University of Technology. Delft, The Netherlands.

- Frens, J. W. (2006). Designing for rich interaction: Integrating form, interaction, and function. Unpublished doctoral dissertation, Eindhoven University of Technology, Eindhoven, The Netherlands.

- Galvao, A. B., & Sato, K. (2005). Affordance in product architecture: Linking technical functions and users’ tasks. In Proceedings of 17th International Conference on Design Theory and Methodology (pp. 1-11). Long Beach: ASME Press.

- Gaver, W. (1991). Technology affordances. In Proceedings of the SIGCHI Conference on Human Factors in Computing System: Reaching Through (pp.79-84). New York: ACM Press.

- Gibson, J. J. (1979). The ecological approach to visual perception. Boston: Houghton Mifflin Company.

- Hackos, J. T., & Redish, J. C. (1998). User and task analysis for interface design. New York: John Wiley & Sons.

- Hartson, H. R. (2003). Cognitive, physical, and perceptual affordances in interaction design. Behaviour and Information Technology, 22(5), 315-338.

- Horton, W. (1994). The icon book: Visual symbols for computer systems and documentation. New York: John Wiley & Sons.

- Howlett, V. (1996). Visual interface design for Windows: Effective user interfaces for Windows 95, Windows NT, and Windows 3.1. New York: John Wiley & Sons.

- Krippendorff, K. (2006). The semantic turn: A new foundation for design. Boca Raton: Taylor & Francis.

- McGrenere, J., & Ho, W. (2000). Affordance: Clarifying and evolving a concept. In Proceedings of Graphics Interface 2000 Conference (pp.179-186). Montreal: Lawrence Erlbaum Associates Press.

- Norman, D. A. (1990). The design of everyday things. London: MIT.

- Norman, D. A. (1999). Affordance, conventions, and design. Interactions, 6(3), 38-42.

- Reed, E. (1988). James J. Gibson and the psychology of perception. New Haven: Yale University Press.

- Rogers, Y., & Sharp, H. (2002). Interaction design: Beyond human-computer interaction. New York: John Wiley & Sons.

- Silver, M. (2005). Exploring interface design. New York: Thomson.

- Spolsky, J. (2001). User interface design for programmers. Berkeley, CA: Apress.

- Thimbleby, H. (1990). User interface design. New York: ACM Press.

- Vihma, S. (1995). Products as representations: A semiotic and aesthetic study of design products. Helsinki: University of Art and Design.

- Ware, C. (2000). Information visualization: Perception for design. San Francisco: Morgan Kaufmann.

- You, H., & Chen, K. (2006). Application of affordance and semantics in product design. Design Studies, 28(1), 22-38.