Exploring Problem-framing through Behavioural Heuristics

Experiential Engineering, WMG, University of Warwick, Coventry, UK

Cleaner Electronics Research, Brunel Design, School of Engineering & Design, Brunel University, London, UK

Transportation Research Group, Engineering & the Environment, University of Southampton, Southampton, UK

Design for behaviour change aims to influence user behaviour, through design, for social or environmental benefit. Understanding and modelling human behaviour has thus come within the scope of designers’ work, as in interaction design, service design and user experience design more generally. Diverse approaches to how to model users when seeking to influence behaviour can result in many possible strategies, but a major challenge for the field is matching appropriate design strategies to particular behaviours (Zachrisson & Boks, 2012). In this paper, we introduce and explore behavioural heuristics as a way of framing problem-solution pairs (Dorst & Cross, 2001) in terms of simple rules. These act as a ‘common language’ between insights from user research and design principles and techniques, and draw on ideas from human factors, behavioural economics, and decision research. We introduce the process via a case study on interaction with office heating systems, based on interviews with 16 people. This is followed by worked examples in the ‘other direction’, based on a workshop held at the Interaction ’12 conference, extracting heuristics from existing systems designed to influence user behaviour, to illustrate both ends of a possible design process using heuristics.

Keywords – Interaction Design, Design for Behaviour Change, Design for Sustainable Behaviour, Heuristics, Modelling, Mental Models, Problem-Framing.

Relevance to Design Practice – Heuristics could be a useful tool for helping designers to frame ‘behavioural problems’ via contextual research with users, and with mapping to relevant design principles and examples. They can help to ensure that users themselves, and their understanding of situations, are included in the design process when seeking to influence behaviour.

Citation: Lockton, D., Harrison, D. J., Cain, R., Stanton, N. A., & Jennings, P. (2013). Exploring problem-framing through behavioural heuristics. International Journal of Design, 7(1), 37-53.

Received August 7, 2012; Accepted February 6, 2013; Published April 30, 2013.

Copyright: © 2013 Lockton, Harrison, Cain, Stanton, & Jennings. Copyright for this article is retained by the authors, with first publication rights granted to the International Journal of Design. All journal content, except where otherwise noted, is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 2.5 License. By virtue of their appearance in this open-access journal, articles are free to use, with proper attribution, in educational and other non-commercial settings.

*Corresponding Author: dan@requisitevariety.co.uk

Introduction

Design for behaviour change (Lilley, 2009; Wever, 2012) is part of what Redström (2005) calls “a progression towards the user becoming the subject of design” (p. 124), with “design as an intervention into multiple and interpenetrating technical, material and social systems” (Mazé & Redström, 2008, p. 55). Dubberly and Pangaro (2007, p. 1302) note design’s growing concern with “ways of behaving”, and popular guidebooks and toolkits explaining the practical applicability of a range of behavioural principles to design have become common in recent years, particularly in interaction design, service design and user experience design (e.g., Anderson, 2010, 2011; Dirksen, 2012; Lockton, Harrison, & Stanton, 2010a; Pfarr, Cervantes, Lavey, Lee, Hintzman, & Vuong, 2010; Weinschenk, 2009, 2011).

Much of this focuses on changing behaviour: the aim generally is to translate psychological principles or effects into strategies or techniques which can be applied via the design of products and services, to influence users’ behaviour, often for social benefit (e.g., Tromp, Hekkert, & Verbeek, 2011; Visser, Vastenburg, & Keyson, 2011) or environmental benefit (e.g., Lockton, Cain, Harrison, Giudice, Nicholson, & Jennings, 2011; Mazé & Redström, 2008). The field overlaps substantially with the aims of persuasive technology (Fogg, 2003), and may contribute to transformation design (Sangiorgi, 2011) as design is employed to facilitate change.

As design practice becomes increasingly focused on people, modelling human behaviour is becoming an explicit aspect of designers’ responsibilities (Keinonen, 2010). The different approaches taken to understanding behaviour can result in a wide spectrum of strategies, framing both problems and solutions in a variety of ways (e.g., Brand, 2004; Lidman & Renström, 2011; Lockton et al., 2010a; Tang & Bhamra, 2008; Tromp et al., 2011; Wever, van Kuijk, & Boks, 2008; Zachrisson & Boks, 2012). What is still needed is an approach to modelling which links insights from contextual research (with users themselves) to possible design strategies (Lockton, Harrison, & Stanton, 2012). This has the potential to be particularly valuable in design for behaviour change, giving designers greater understanding of users’ behaviour and how to influence it.

The research question explored in this paper is whether it is possible to develop an approach that links insights from user research with applicable design techniques in the context of design for behaviour change, by modelling both interaction behaviour and design techniques in terms of simple abstracted ‘rules’–behavioural heuristics. The approach complements but differs from other nascent modelling approaches used within design for behaviour change and persuasive technology, drawing on ideas from human factors, behavioural economics, and decision research to explore and frame behavioural problem-solution pairs (Dorst & Cross, 2001) in-context, rather than starting with classifying design strategies.

This paper takes an exploratory approach to examine the potential of using behavioural heuristics as part of the design process in design for behaviour change: we are not claiming this to be a definitive solution to the question of how to specify relevant design techniques, but rather suggesting an approach which may be useful to practitioners seeking to model user behaviour through insights from user research. Further, we wish to stimulate discussion in the area of design for behaviour change. We start by reviewing how models of human behaviour play an important part in design for behaviour change, and how they link to different ways of framing problem/solution spaces. We then consider the potential of investigating users’ mental models, and, drawing on ideas from a number of fields, propose behavioural heuristics as a lower-level approach to modelling in comparison to conventional mental models, which seek to model behaviour in a more comprehensive way.

Via a case study concerning users’ interaction with heating systems, we introduce the idea of heuristics, followed by worked examples in the ‘other direction’, extracting heuristics from case studies of existing systems designed to influence user behaviour, to illustrate both ends of a possible design process using heuristics. We then discuss the potential place of behavioural heuristics within a design process for behaviour change, including their use in conjunction with other approaches.

The Place of Modelling in Design for Behaviour Change

In the academic literature on design for behaviour change, some critical reflection has centred on issues such as ethics (Gram-Hansen, 2010; Pettersen & Boks, 2008) or on users’ understanding of the ‘persuasive’ intentions of the designers (Crilly, 2011), but perhaps the central research challenge in this emerging field, at least from the perspective of design practice, is that of matching the most effective and appropriate design strategies to particular behaviours (Zachrisson & Boks, 2012).

Diverse approaches to how to model and ‘treat’ users when seeking to influence behaviour can result in a wide spectrum of strategies, a phenomenon familiar to ‘non-design’ fields which regularly deal with behavioural interventions, such as healthcare (Nuffield Council on Bioethics, 2007; Michie, van Stralen, & West, 2011). Some researchers have built programmes around the exploration of particular models and strategies for influencing behaviour and user experience (e.g., affective and emotional design: Ozkaramanli & Desmet, 2012; Desmet & Hekkert, 2009). There are also many ‘design for behaviour change’ approaches that involve classifying multiple techniques via different dimensions and categories with the aim of helping designers explore the strategies available (Brand, 2004; Lidman & Renström, 2011; Lockton et al., 2010a; Tang & Bhamra, 2008; Tromp et al., 2011; Wever et al., 2008; Zachrisson & Boks, 2012). Others (Fogg & Hreha, 2010; Lockton, Harrison, & Stanton, 2010b) have aimed to guide designers towards strategies which may be most applicable to particular ‘target behaviours’, more along the lines of TRIZ (Altshuller, 1994).

Models and Frames

As Froehlich, Findlater, and Landay (2010) put it, “even if it is not explicitly recognised, designers [necessarily] approach a problem with some model of human behaviour” (p. 2000)–modelling is central to design (Alexander, 1964; Ayres, 2007; Dubberly & Pangaro, 2007), though the models employed may be diverse. The range of designers’ models of human behaviour can be especially evident in design for behaviour change, where every concept proposed inherently embodies a model of how users will behave as a result of the design (Lockton, Harrison, & Stanton, 2012). Argyris and Schön (1974, p. 28) suggested that “an interventionist is a man struggling to make his model of man come true”, and each of the interventionists’ models represents a particular way of framing both ‘problem’ and ‘solution’ in terms of what affects and is affected by human behaviour. We should, of course, remember that “all models are wrong, but some are useful” (Box & Draper, 1987, p. 424); likewise, concerns with the very notion of ‘the user’ (e.g., Keinonen, 2010; Krippendorff, 2007) are noted, but we argue that it remains a useful construct in this kind of modelling.

Rasmussen (1986) refers to different models of users each “support[ing] the choice of an interface design that will activate a category of behaviour” (p. 61), and this arguably applies to all kinds of intervention, not just interface design. For example, a designer modelling users as primarily ‘lazy’ or uninterested may try to influence users’ decisions by making the ‘target’ behaviour the easiest choice, such as a default setting (Frederick, 2002; Johnson & Goldstein, 2003; Kesan & Shah, 2006), whereas a designer modelling users as being motivated by intrinsic factors such as social relatedness (Deci & Ryan, 1985) or connectedness (Visser et al., 2011) may apply techniques emphasising that a user is contributing to the well-being of the wider community in making particular choices. The resulting design solutions may aim to influence the same ultimate target behaviour, but can differ substantially through modelling humans “at several levels of abstraction” (Rasmussen, 1983, p. 266).

The models used by designers thus provide some structure to problem/solution ‘spaces’ (Maher, Poon, & Boulanger, 1996), framing and redefining the “problematic situation” (Schön, 1983, p. 68) in a variety of ways, each of which exposes new aspects of the problem (Rith & Dubberly, 2007; Rittel & Webber, 1973). Hey (2008) found that design teams will re-frame problem/solution spaces repeatedly over the course of a project, negotiating an evolving ‘common’ frame which aims to reconcile the perspectives and needs of both designers and users. Design becomes “a process of enquiry during which meaning is constructed with diverse stakeholders” (Kimbell, 2011, p. 49); ‘solutions’ will not be ‘perfect’ resolutions (Ebenreuter, 2007) but will address certain perspectives and interpretations of meaning.

Problem-solution Pairs

Dorst and Cross (2001) suggest that the emergent formation of ‘bridges’–pairing one representation of the problem with an apposite solution–is what defines the insight of the ‘creative leap’. Cross (2006) describes an example of a design brief concerning a way to transport a hiker’s backpack on a mountain bike: this included a number of potential sub-problems. The designers in the study “oscillat[ed] between sub-solution and sub-problem areas… partial models of the problem and solution are constructed side-by-side” (p. 57), with each concept addressing particular representations of the problem, creating problem-solution pairs. A particular sub-solution (a moulded plastic tray) enabled a number of the partial models to be mapped onto each other.

As Cross (2006) explains, this interpretation differs from models of the design process emphasising clear ‘stage’-based separation between a defined problem specification and the generation of multiple alternative solutions; the generation of partial solutions enables the problem to be modelled and examined in different ways and the original brief reframed. Likewise, problem-solution pairs could also be a valuable unit of analysis in design for behaviour change, explicitly incorporating a particular model of the problematic situation, and indeed the context in which the solution is applicable. A problem-solution pair rooted in a problem context itself can be seen to be ‘solving’ the problem in a different way compared with a solution which only addresses its ‘symptoms’ (something which arguably characterises many approaches to behaviour change, for example those based solely on rewarding or punishing people for particular behaviours).

Using this framework, if designers are going to try to address particular behavioural ‘problems’, they need to do sufficient research with users to develop models matching the contextual reality of their situated decision-making (Suchman, 2007). This means that contextual user research is essential. Moreover, as design for behaviour change comes to be seen as less about influencing broad public opinions and attitudes (e.g., towards the environment, or health), and much more about understanding and developing empathy (Postma, Zwartkruis-Pelgrim, Daemen, & Du, 2012), user participation as described by Ho and Lee (2012) also becomes essential. User participation in this sense allows designers to take into account the contextual factors affecting how people make situated decisions about how to act in everyday life, which include expectations of outcomes, perceptions of meaning and perceived affordances.

Similar ideas have been discussed in cybernetics, which offers a number of useful constructs for designers working with behaviour (Dubberly & Pangaro, 2007). Conant and Ashby’s (1970) ‘Good Regulator’ theorem states that “every good regulator of a system must be a model of that system”, with the corollary that modelling is a necessary part of regulating a system’s behaviour; indeed, Beer (1974, p. 34) notes that “no regulator can actually work unless it contains a model of whatever is to be regulated”. Designers may not see themselves as ‘regulators’–even those explicitly involved in behaviour change–but as Scholten (2009-10, p. 3) has argued, an implication of Conant and Ashby is simply that “every good solution must be a model of the problem it solves”: not too far from Dorst and Cross’s (2001) concept of problem-solution pairs.

Mental Models and Heuristics in Design for Behaviour Change

One approach to understanding and modelling user behaviour in context is to investigate users’ own understanding and mental models of the systems with which they are interacting; as Krippendorff (2007) puts it, “designers who intend to design something that has the potential of being meaningful to others need to understand how others conceptualize their world” (p. 1386).

There are different ways of defining and representing mental models (Jones, Ross, Lynam, Perez, & Leitch, 2011; Moray, 1996). One definition commonly used in human-computer interaction (HCI) is described broadly by Carroll, Olson, and Anderson (1987) as: “knowledge of how the system works, what its components are, how they are related, what the internal processes are, and how they affect the components” (p. 6). Users’ mental models thus allow them “not only to construct actions for novel tasks but also to explain why a particular action produces the results it does” (p. 6).

In the context of influencing behaviour change, mental models could be important if a user’s current model leads him or her to behave or interact with a system in a way which is undesirable, dangerous, inefficient or otherwise deemed deserving of intervention. For example: Besnard, Greathead, and Baxter (2004) discuss how an erroneous mental model held by the flight crew can be considered a major factor in the 1989 Kegworth air crash in Leicestershire, England; Kempton’s (1986) investigation of home heating control found that patterns of thermostat use (some more wasteful of fuel than others) were largely consistent with two different mental models of how thermostats work (the ‘valve’ model and the ‘threshold’ or ‘switch’ model); Thaler’s (1999) work on ‘mental accounting’ suggests that different ways of modelling and categorising everyday financial transactions can lead people to make irrational or myopic budgeting decisions to their own detriment; Senge (1990) looks at how tacit but wrong mental models can lead to poor organisational performance, and how ‘surfacing’ and changing them can improve matters.

The aim of a designer seeking to change behaviour via mental models would usually be to shift the user’s mental model (if incorrect) to a more accurate one, perhaps by making the system model evident (an aim of ecological interface design: Burns & Hajdukiewicz, 2004), via a series of analogical steps bridging the two models (Clement, 1991), or by increasing the repertoire of models available to users–as Papert (1980) put it, “[learning] anything is easy if you can assimilate it to your collection of models” (p. vii). Alternatively, an aim could be to redesign a system so that it appears to work in the way that the user assumes, working with the existing model even if incorrect. In both cases, the effectiveness of the approach could be examined by measuring behaviour changes that have occurred as a result of intervention.

Both approaches require investigating users’ current mental models of the systems they use–within design research and in human factors, methods such as interviews, verbal protocol analyses, structured tasks (e.g., Payne, 1991), eye-tracking, cultural probes (Gaver, Dunne, & Pacenti, 1999), ethnography and shadowing can all help reveal aspects of subjects’ understandings and internal representations of situations, via examining and mapping interaction behaviour, routines, shifts in focus or even the errors people make. Even with an extensive palette of methods, the fundamental difficulty nevertheless remains; mental models are “not available for direct inspection or measurement” (Jones et al., 2011, p. 46).

Lower-level Modelling: Behavioural Heuristics as Problem-solution Pairs

As part of an industrial collaboration project, we have investigated aspects of people’s mental models of heating, ventilation and air conditioning (HVAC) and energy use, and perceptions of the role played by behaviour, at home and at work. The aim was to use the insights gained to design feedback systems which lead to lower energy use through behaviour change. We interviewed people about their behaviour and usage patterns of heating systems. From these interviews we found that, in many cases, interviewees did not, in context, consider much of the system beyond their immediate interactions with it.

This issue has been recognised in some treatments of mental models, such as Collins and Gentner’s (1987) concept of ‘pastiche models’ (which recognises that the models applied at different levels may be inconsistent). Rather than making decisions based on macro-level comprehension of a ‘whole’ mental model (such as it may be), it often appeared that people’s reported interaction decisions were determined by sets of individual ‘rules’ considered at the micro-level–“If this is the situation, do that”, “If I have that problem, this is how I fix it”–intended to satisfice (Simon, 1956, 1969) for the situation at hand. The idea is reminiscent of Argyris and Schön’s (1974) theories-in-use, or Minsky’s (1983) suggestion that “the only way a person can understand anything very complicated is to understand it at each moment only locally” (p. 189)–and raises questions about how appropriate it is to consider designing ‘whole system’ solutions as opposed to decomposition into lower-level sub-problems and sub-solutions. From a design perspective, should designers try to understand users on the level of individual behaviours, or in larger ‘units’ such as personas? This issue is considered further in the section ‘Discussion: fitting into design practice’.

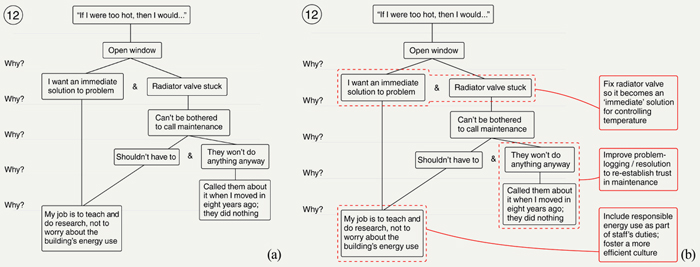

Returning to the interviews, many explanations given by interviewees were something closer to Dorst and Cross’s (2001) problem-solution pairs than full-blown mental models: they were, essentially, heuristics for how to interact with the system in a range of situations rather than explanations of how the whole system was believed to work. Each heuristic presented a certain way of framing the problematic situation perceived by the interviewee, and the immediate behavioural solution undertaken (Figure 1). The section of this paper titled ‘Case study: extracting heuristics around interaction with heating’ elaborates on the study.

Figure 1. A heuristic represented as a simple ‘problem-solution pair’.

Using Heuristics to Simplify the Problem / Solution Space

What are heuristics? There are a number of definitions, but the term as used in current behavioural economics and decision science generally refers to simple, frugal ‘rules of thumb’, tacit or explicit, for making decisions and solving problems. Groner, Groner, and Bischof (1983) note that they are “used to reduce the search space of a given problem” (p. 16), and this is a common thread through research with heuristics in different disciplines, including mathematical problem-solving (Pólya, 1945), education (Papert, 1980), artificial intelligence (Newell, 1983), cooperation in groups (Gardner, Ostrom, & Walker, 1992), behavioural economics (Gilovich, Griffin, & Kahneman, 2002; Tversky & Kahneman, 1974) and decision research (Gigerenzer & Selten, 2001; Gigerenzer, Todd, & ABC Research Group, 1999).

Heuristics are qualitatively different to other components of behavioural models such as attitudes and habits: instead they characterise the phenomenon of people bounding or simplifying the problem/solution spaces they experience, framing situations in terms of a particular perspective, tentative assumption or salient piece of information, rather than undertaking an exhaustive analysis of all possible courses of action. For example, Tversky and Kahneman’s (1973) availability heuristic involves deciding on the likelihood of an event based on how easy it is to bring to mind similar events, and Goldstein and Gigerenzer’s (1999) recognition heuristic involves attributing a higher value to objects which are recognised than those which are not; just as Pólya’s (1945) exhortation to “Look at the unknown! And try to think of a familiar problem having the same or a similar unknown” (p. 73) involves starting to solve a mathematical problem by finding a point of comparison with other problems.

In different circumstances, the application of heuristics has been considered both harmful and potentially beneficial. Much behavioural economics discourse (e.g., Thaler & Sunstein, 2008) concerns ways to overcome biases introduced by the use of heuristics, whereas the ‘ecological rationality’ approach (e.g., Todd & Gigerenzer, 2012) focuses on situations where the use of particular heuristics is actually adaptive, and thus on how to improve decision-making through exploiting (or changing) the information structure of people’s decision environments. From a design perspective, it seems clear that there are circumstances where simplification is valuable to users (Colborne, 2010; Krug, 2006), and others where it can cause problems (just as with mental models).

Within ‘design for behaviour change’, to date little attention has been paid to the possibilities and implications of heuristics. Rogers and her collaborators (Kalnikaitė et al., 2011; Rogers, 2011; Todd, Rogers, & Payne, 2011) have used an ecological rationality approach in the design of a shopping trolley-mounted system giving supermarket shoppers simple, salient information on the ‘food miles’ of products, but there is also the possibility for heuristics to be used as part of user research and problem-framing for design, as the remainder of this paper will discuss.

Simple Rules and Levels of Abstraction

The notion of modelling behaviour in terms of ‘if... then...’ rules has been explored in a number of disciplines, not necessarily under the ‘heuristics’ banner.

Aside from the obvious parallels in computer programming itself, examples include: von Wright’s (1972) treatment of practical inference mechanisms, e.g., “I intend to make it true that E. Unless I do A, I shall not achieve this. Therefore I must do A” (p. 44); Argyris and Schön’s (1974) discussion of representing theories of action in the form “in situation S, if you want to achieve consequence C, do A” (p. 5); and the ‘fuzzy’ rule-based problem-solving method developed by Hunt and Rouse (1982) and Rouse (1983), which includes context-specific S-rules [e.g., “if: The engine will not start and the starter motor is turning and the battery is strong, then: Check the gas gauge” (Hunt & Rouse, 1982, p. 293)] and more generally applicable context-free T-rules [e.g., “if: The output of I is bad and I depends on Y and Z, and Y is known to be working, then: Check Z” (Hunt & Rouse, 1982, p. 294)]. Minsky (2006) compares a ‘rule-based reaction machine’ approach of following basic “If situation → Do action” rules with goal-driven “If situation and goal → Do action” and deliberative “If situation + Do action → Then result” mental simulations.
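The distinction between context-specific S-rules and context-free T-rules can be made concrete with a minimal Python sketch. It paraphrases the two Hunt and Rouse (1982) examples quoted above; the function names, the dictionary of dependencies and the generalisation of the T-rule to return every dependency not yet known to be working are assumptions made for illustration, not part of the original method.

```python
from typing import Dict, List, Optional, Set


def s_rule_engine(engine_starts: bool, starter_turning: bool,
                  battery_strong: bool) -> Optional[str]:
    """Context-specific S-rule: only meaningful for this one situation."""
    if not engine_starts and starter_turning and battery_strong:
        return "check the gas gauge"
    return None  # the rule does not fire


def t_rule(component: str, output_bad: bool,
           depends_on: Dict[str, List[str]],
           known_working: Set[str]) -> List[str]:
    """Context-free T-rule (hypothetical generalisation): if a component's
    output is bad, check the inputs it depends on that are not already
    known to be working."""
    if not output_bad:
        return []
    return [dep for dep in depends_on.get(component, [])
            if dep not in known_working]


print(s_rule_engine(engine_starts=False, starter_turning=True, battery_strong=True))
print(t_rule("I", True, {"I": ["Y", "Z"]}, {"Y"}))
```

The S-rule encodes knowledge of one particular device, whereas the T-rule needs only the structure of dependencies and so could, in principle, be reused across systems.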

Within human factors, Rasmussen’s (1983, 1986) Skills, Rules and Knowledge (SRK) framework situates rule-based behaviour in the context of other kinds of human performance. As opposed to skill-based behaviour, which comprises automatic patterns of response with simple feedback and without conscious attention, and knowledge-based behaviour, which makes use of stored knowledge, conscious analysis and mental simulations, rule-based behaviour is essentially the use of heuristics. It is “consciously controlled by a stored rule or procedure that may have been derived empirically during previous occasions, communicated from other persons’ know-how as an instruction or cookbook recipe, or it may be prepared on occasion by conscious problem solving and planning” (Rasmussen, 1986, p. 102). Rule-based behaviour is informed by signs in the environment, which “indicate a state in the environment with reference to certain conventions for acts...they serve to activate stored patterns of behaviour” (p. 108). Rasmussen gives the example of a flowmeter gauge in a pipeline with a valve, with four particular positions identified on the scale. The rules or “stereotype acts” are “If valve open, and: if indication C: OK; if indication D: adjust flow; If valve closed, and: if indication A: OK; if indication B: recalibrate meter” (p. 107). In rule-based behaviour, when errors (‘incorrect’ behaviour) occur, they may be due to misclassifying the situation and hence applying the wrong rule (Reason, 1990).
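Rasmussen's flowmeter example amounts to a small lookup from perceived signs to stored acts, which the following Python sketch illustrates. The four rules are taken from the quotation above; the table and function names, and the "no stored rule" fallback for unlisted combinations, are assumptions added for illustration.

```python
# Stored "stereotype acts" quoted from Rasmussen (1986, p. 107).
# Keys are (valve state, gauge indication); values are the stored response.
STEREOTYPE_ACTS = {
    ("open", "C"): "OK",
    ("open", "D"): "adjust flow",
    ("closed", "A"): "OK",
    ("closed", "B"): "recalibrate meter",
}


def rule_based_response(valve_state: str, indication: str) -> str:
    """Return the stored act for a perceived sign.

    The fallback string is an assumption: the source does not say what
    happens when no rule matches the situation.
    """
    return STEREOTYPE_ACTS.get((valve_state, indication), "no stored rule")


print(rule_based_response("open", "D"))
print(rule_based_response("closed", "B"))
```

In this framing, the misclassification error described by Reason (1990) corresponds to calling the lookup with the wrong key: the stored rule is applied faithfully, but to a situation that is not the one actually present.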

Another aspect of Rasmussen’s (1983, 1986) framework relevant to modelling user behaviour is the treatment of abstraction in task analysis, including users’ decision-making when interacting with systems. An abstraction hierarchy–in human factors research often created as a phase of cognitive work analysis (Jenkins, Stanton, Salmon, & Walker, 2008; Vicente, 1999)–outlines multiple representations of a system, from functional purpose to physical form, with different models of the system (means and ends) applicable at each level of abstraction. From a design perspective, what occurs is “a process of iteration between considerations at the various levels [of abstraction] rather than an orderly transformation from a description of purpose to a description in terms of physical form” (Rasmussen, 1983, p. 265); similar approaches to abstraction and decomposition have been discussed by Alexander (1964), de Bono (1993) and Straker and Rawlinson (2002), while Dörner (1983), specifically in the context of heuristics, discusses the use of multiple levels of ‘resolution’ of problematic situations to enable the selection of appropriate strategies. Ishikawa’s (1990) fishbone cause-and-effect diagrams, as used in quality management, also have much in common with this modelling approach, and we make use of a similar ‘5 Whys’ (e.g., Pylipow & Royall, 2001) or ‘laddering’ (Hawley, 2009) technique below in the section ‘Elaborating the heuristics: 5 Whys and further abstraction’.

The abstraction notion, and the resolution into ‘means and ends’ as a representation of the system at each level, again has parallels with Dorst and Cross’s (2001) problem-solution pairs in design and the concept of simple behavioural heuristics. By identifying the levels of abstraction at which users’ heuristics can be considered to be operating, it is possible to understand better the context in which the behaviour is occurring, and frame the problem accordingly as part of the design process.

Case Study: Extracting Heuristics Around Interaction with Heating

This case study illustrates one practical application of the heuristics idea within user research, as part of a design for behaviour change project.

Sixteen office workers at two organisations (a government department and a university, both in London) were interviewed individually as part of a study of building users’ understanding of energy use. As part of a wider series of structured questions and exercises, we asked interviewees to describe what actions they would take, at work, if they were i) too hot, and ii) too cold. The answers are effectively behavioural heuristics as outlined above: simple ‘if... then...’ statements describing behaviour in response to particular contextual conditions. We do not claim or aim to imply any deeper insight into human decision-making than simply asking people to express what they would do, in this particular form. Interviewees were subsequently (as described in the section ‘Elaborating the heuristics: 5 Whys and further abstraction’) asked to elaborate the reasons behind the heuristics given, following a laddering or 5 Whys-type method–for each answer given by an interviewee, the interviewer reframed this as a further “Why?” question–see Figure 2(a) for an example. The number of times “Why?” was asked was not always five; the intention here was not necessarily to determine a ‘root cause’ (assuming such a thing exists), but instead to derive multiple, iterated levels of abstraction for each heuristic, enabling a more diverse set of representations for problem-framing.

Figure 2. (a) The 5 Whys chain for interviewee 12 and (b) with immediate ‘solutions’ for each reason.

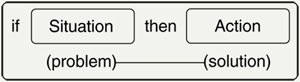
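The laddering procedure described above, in which each answer is reframed as the next “Why?” question, can be sketched in a few lines of Python. This is only an illustration of the bookkeeping involved: the function name and question wording are assumptions, and the reasons in the example are hypothetical placeholders, not the content of Figure 2 or any interviewee's actual answers.

```python
from typing import List, Tuple


def ladder(heuristic: str, answers: List[str]) -> List[Tuple[str, str]]:
    """Pair each 'Why?' question with the answer it elicited.

    The first question is asked about the heuristic itself; every later
    question reframes the previous answer, giving one level of
    abstraction per step.
    """
    chain = []
    statement = heuristic
    for answer in answers:
        chain.append((f"Why: {statement}?", answer))
        statement = answer
    return chain


# Hypothetical placeholder reasons, for illustration only.
example = ladder(
    "If I were too hot, then I would open the window",
    ["reason A (hypothetical)", "reason B (hypothetical)"],
)
for question, answer in example:
    print(question, "->", answer)
```

Because the chain is simply as long as the list of answers, it accommodates the point made above that “Why?” was not always asked five times.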

Heuristic Statements as Situated Strategies

Table 1 shows the heuristic statements given by interviewees for both the ‘too hot’ and ‘too cold’ conditions; where multiple answers are present, each is presented in the order mentioned by the interviewees, as a kind of ‘else’ clause. Interviewees 1-8 worked in a building with locked windows and ceiling-mounted HVAC controlled by a building management system (BMS); one interviewee (2) remembered that some windows had previously been unlocked (during a period when the air conditioning was switched off) and included this as part of her set of heuristics. The ‘draughty’ windows mentioned by two interviewees had a noticeable draught around the frame when closed.
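The reading of multiple answers as ordered ‘else’ clauses can be illustrated with a short Python sketch: each situated strategy is tried in the order mentioned, and the first one that the context permits is the one enacted. The data structure, the context keys and the specific ordering below are assumptions for illustration; the two actions echo behaviours mentioned in this paper but are not a transcription of any row of Table 1.

```python
from typing import Callable, List, Optional, Tuple

# Each action is (description, is_available(context)).
Action = Tuple[str, Callable[[dict], bool]]


def first_available(actions: List[Action], context: dict) -> Optional[str]:
    """Walk the ordered 'if... else...' chain and return the first action
    whose precondition holds in the given context."""
    for description, is_available in actions:
        if is_available(context):
            return description
    return None  # no stated strategy applies


# Illustrative 'too hot' heuristic set (ordering is hypothetical).
TOO_HOT: List[Action] = [
    ("open the window", lambda c: c.get("windows_unlocked", False)),
    ("sit near the draughty window", lambda c: c.get("draughty_window_nearby", False)),
]

print(first_available(TOO_HOT, {"windows_unlocked": True}))
print(first_available(TOO_HOT, {"windows_unlocked": False, "draughty_window_nearby": True}))
```

Representing the preconditions explicitly makes visible how building features, such as locked windows, determine which clause of a person's heuristic set is actually reached.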

Table 1. Interviewees’ statements about what they would do if too hot or too cold in the office.

Interviewees 9-13, 15 and 16 worked in buildings with central heating, radiators and opening windows, while interviewee 14’s office had an opening window as well as BMS-controlled HVAC, but no user controls on the floor-mounted heating vents. The only interviewee with an air conditioner under her control, 16, preferred not to use it since she was unsure of the interface.
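The ordered ‘if... then... else’ form of these statements can be sketched in code. The following is a minimal illustration only, not part of the study: the `Heuristic` class, its `first_available` method and the example action list are hypothetical names and data chosen for the sketch, and the actions shown are not copied from Table 1.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Heuristic:
    """An 'if... then...' statement with ordered fallbacks ('else' clauses)."""
    condition: str
    actions: list[str] = field(default_factory=list)

    def first_available(self, unavailable: set[str]) -> Optional[str]:
        """Return the first stated action that the context does not rule out."""
        for action in self.actions:
            if action not in unavailable:
                return action
        return None


# Illustrative entry only: this action list is invented for the sketch.
too_hot = Heuristic(
    condition="too hot",
    actions=["open the window", "turn down the radiator", "use a desk fan"],
)

# In a building with locked windows, the first recourse is unavailable,
# so the next action in the stated order is the one that applies.
print(too_hot.first_available({"open the window"}))
print(too_hot.first_available(set()))
```

Keeping the actions as an ordered list preserves the sequence in which an interviewee mentioned them, which is the only ranking information the interview question provides.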

From the point of view of understanding the links between behaviour and energy use, an important implication here is that the “If I were too hot, then I would open the window” (or variant) heuristics given by 9-12 and 14-16 have the potential to lead directly to energy inefficiency: if the radiators are on, opening the window as first recourse, rather than adjusting the radiator, will simply lead to heat being wasted, with no feedback loop to the building manager or BMS. Indeed, in the case of 14, in an office with BMS-controlled HVAC, opening the window to cool down may cause the thermocouple temperature sensor in the room to signal the BMS to increase the amount of heating. Interviewees 9, 11, 12 and 13’s use of their own electric heaters and fans would be against the policies of many building managers, as well as increasing energy use. Other heuristics of potential interest or concern to building managers include 7’s declaration that she might simply “go home” from work if she were too cold in the office, and 2 and 8 who both choose to sit near (unintentionally) draughty window frames because of the lower temperature (and fresher air) this affords. This kind of empirical ‘revealed preference’ is valuable as an insight into the real day-to-day comfort needs of building users, along the lines of some of the post-occupancy evaluation insights uncovered by Hadi and Halfhide (2009).

While the specific insights in this case are of most relevance to the HVAC context, and probably the particular workplaces studied, the aim from the perspective of this article is simply to demonstrate how the first stage of asking users for their ‘situated strategy’ heuristics in response to particular problems can be practically carried out. The next stage continues that demonstration.

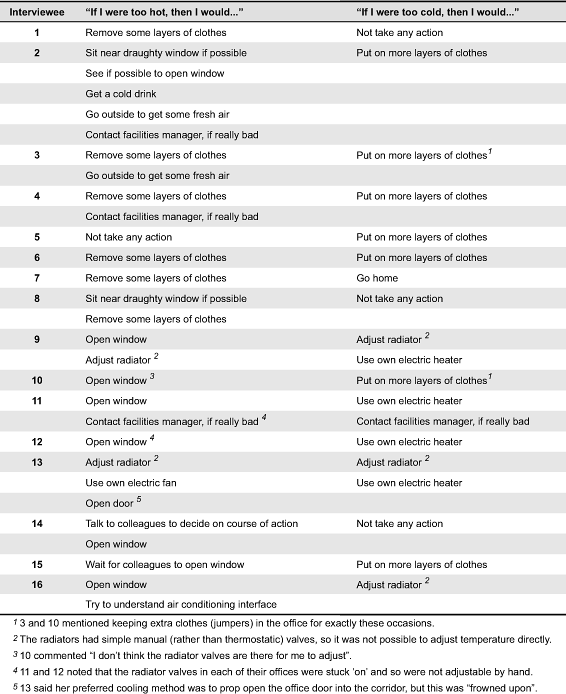

Elaborating the Heuristics: 5 Whys and Further Abstraction

After recording the simple heuristic statements, we then used a 5 Whys process to ask interviewees to elaborate their statements. The full series of five levels of abstraction was not reached with all interviewees, but some were able to give chains of reasons. From the energy use perspective, cases in which we were particularly interested were where interviewees said that they would open the window (9-12, 14-16) or, in 15’s case, wait for colleagues to open the window, if too hot, since as outlined above, this has the potential for significant energy inefficiency through behaviour.

Figure 2(a) shows an example of the 5 Whys chain for interviewee 12, based on his reasons for opening the window if too hot. In some cases, as here, the ‘causal chain’ branches and re-joins, as the same ultimate reason is given for more than one reason along the way. In this case, 12 revealed that while one of the immediate reasons he opens the window is simply that the radiator valve is stuck, there are also deeper factors around his experiences with contacting maintenance staff, and ultimately his perception of what his job entails and what is out of scope. It is our contention that each of these reasons essentially implies a problem-solution pair.

As Figure 2(b) shows, it is trivial to suggest ‘solutions’ at each level based on providing alternative ways of fixing one or more of the reasons given by the interviewee–fixing the radiator valve, improving maintenance’s problem-logging procedures and including energy use as part of staff’s responsibility–but each of these solutions is acting on a different way of framing the problematic behaviour. The ‘ultimate’ reason may be a lack of perceived responsibility for energy use, but the immediate situated reason of a stuck radiator valve is no less ‘valid’ as a problem to be addressed (it is certainly quicker to fix). Some will be ‘design’ solutions, others not.
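A branching and re-joining chain of reasons, with a candidate ‘solution’ attached at each level, can be represented as a small directed graph. The sketch below is an assumption-laden illustration: the node wording paraphrases the prose account of interviewee 12 rather than transcribing Figure 2, the exact shape of the branching is assumed, and the names `why_chain`, `solutions` and `abstraction_levels` are hypothetical.

```python
from collections import deque

ROOT = "open the window when too hot"

# Each reason maps to the deeper reasons given when "Why?" was asked again.
# The wording and the branching shape are assumptions made for illustration.
why_chain = {
    ROOT: ["the radiator valve is stuck",
           "contacting maintenance has been frustrating"],
    "the radiator valve is stuck": ["contacting maintenance has been frustrating"],
    "contacting maintenance has been frustrating": ["energy use is outside my job"],
    "energy use is outside my job": [],
}

# One candidate 'solution' per reason, following the three named in the text.
solutions = {
    "the radiator valve is stuck": "fix the radiator valve",
    "contacting maintenance has been frustrating":
        "improve maintenance's problem-logging procedures",
    "energy use is outside my job":
        "include energy use as part of staff's responsibility",
}


def abstraction_levels(chain, root):
    """Breadth-first walk: map each reason to the fewest 'Whys' needed to reach it."""
    depth = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for deeper in chain.get(node, []):
            if deeper not in depth:  # a re-joined reason is only visited once
                depth[deeper] = depth[node] + 1
                queue.append(deeper)
    return depth


for reason, level in abstraction_levels(why_chain, ROOT).items():
    if reason in solutions:
        print(f"level {level}: {reason} -> {solutions[reason]}")
```

Each entry in `solutions` acts on a different framing of the same behaviour, which is the point made above: no level of the chain is privileged as the single correct place to intervene.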

This analysis of individual interviewees’ reasoning was made more generally applicable by restating each of the reasons given, at different levels of abstraction, as a heuristic, and tabulating them so that common elements can be more easily identified. As Table 2 shows, once restated as heuristics, extracting design ‘implications’ from each is relatively simple, if subjective: the heuristic is again just a problem-solution pair, and for each, possibly applicable ‘solutions’ are easy to conceive. It is noticeable that the design implications frame the problem as being variously about changes to physical equipment (to lead to changes in behaviour) and changes to people’s behaviour through service or support changes: there is no hard distinction between what might be considered service design or product design. It is important to remember that while some of the possible ‘solutions’ listed in Table 2 appear more complex than the existing responses in Table 1, many of these existing responses were essentially ‘unacceptable’ to building managers, due to energy inefficiency. Equally, it is important to note that the interpretations in Table 2 are inherently subjective–we are not claiming that the heuristics, implications and solutions are the only way of dealing with users’ comments, but they represent a set of possible ways of framing the problems and solutions concerned. Useful framings might not emerge within five levels of questions; on the other hand, they may emerge straight away.

Table 2. Restating interviewees’ reasons for opening windows as heuristics.

In the case study in question, the next stage of the process was to identify which of the possible implications and concepts were likely to be actionable in the subsequent stages of the project, in the specific workplaces concerned. But in the wider field of design for behaviour change, something along the lines of Zachrisson and Boks’ (2012) challenge introduced at the start of section 1 is relevant: is it possible to match design strategies with particular heuristics across domains and problems? Are there other situations where, for example, the “If the group agrees with a course of action, then I’ll do it” heuristic mentioned in Table 2 also applies? And if so, do the pertinent design implications and concepts exhibit similar principles? Ultimately, could extracting and abstracting behavioural heuristics, through user research, provide a ‘common language’ of problem-solution pairs enabling translation between design principles for behaviour change and the kinds of behavioural situations in which they apply?

Reverse Engineering of Existing Examples

To explore the potential of this ‘common language’ approach, we attempted to ‘reverse engineer’ existing examples of systems known to be designed to influence user behaviour, to uncover heuristics which users are (potentially) assumed to be following for the intended behaviour to occur. To do this we applied a process similar to the 5 Whys decomposition employed in interviews with users. This is essentially a mirror image of the interview process: interrogating existing examples to obtain heuristics at different levels of abstraction, rather than interviewing users themselves. We first partially explored one example ourselves, perhaps ‘obvious’ because of its ubiquity but nevertheless successful (see ‘Example: Amazon recommendations’ below). Workshop participants at an interaction design industry conference were then invited to carry out the decomposition process on three further examples (see ‘Examples: Codecademy, OPOWER and Foodprints’ below).

Example: Amazon Recommendations

Amazon, the online retailer, is well-known for its recommendation engine (e.g., Iskold, 2007); as of 2006, 35 per cent of the company’s sales came via recommendation elements on the website (Marshall, 2006). Users (potential customers) browsing for products, such as books, are–in addition to basic product information–presented with a number of page elements using variants of ‘social proof’ (Anderson, 2010; Cialdini, 2007) to influence their decision-making, based on extensive, ongoing background analysis of shopping habits by Amazon. These can include “Frequently bought together”, “Customers who bought this item also bought”, “What other items do customers buy after viewing this item?”, “Customers also bought items by”, “Customers who bought items in your recent history also bought” and “Listmania” (which features customer-curated recommended lists of items with similar characteristics), as well as reviews of the item by other customers, who can give one to five stars and write comments (in turn, users can rate the reviews as being helpful or not, and the ‘most helpful’ reviews are promoted in page position; frequent and well-regarded reviewers receive ‘flair’ next to their names).

Each of these many elements–in a slightly different way–uses the behaviour and opinions of other users, individually or as a ‘community’, to attempt to influence the behaviour of the potential customer. They could all be classified as using ‘social proof’ as a design principle, but this misses the nuances of the approach. The fact that Amazon uses so many variants–and presumably tracks closely the effectiveness of each with different customers and market segments–suggests that slightly different models of behaviour underlie each element, and what ‘works’ for some users does not work for everyone in terms of influencing behaviour (Kaptein & Eckles, 2010). Each variant makes slightly different assumptions about how people will respond–the heuristics that are being followed. What might glibly be classified as ‘social proof’ comprises assumptions such as:

- People will do what they see other people doing.

- People will value or do what seems to be most popular.

- People value or respect others’ opinions and actions.

- People want to learn more about a subject.

- People will buy multiple items at the same time.

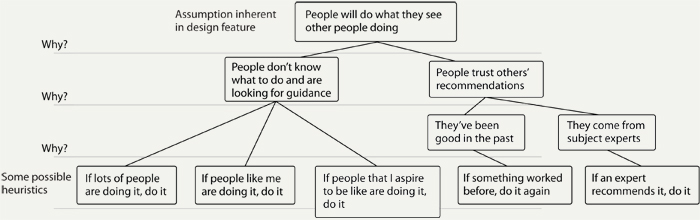

There are many other possible assumptions, of course. But taking “People will do what they see other people doing” as an example statement, if we follow a similar process of asking “Why?” repeatedly, as in the section ‘Elaborating the heuristics: 5 Whys and further abstraction’ –“Why will people do what they see other people doing?”–we are able to tease out some possible factors (Figure 3), which can easily be expressed as heuristics.

Figure 3. Extracting some possible heuristics from an assumption inherent in the use of ‘social proof’.

For the five heuristics uncovered, it is not too difficult to think of design techniques which are directly relevant:

- If lots of people are doing it, do it → Show directly how many (or what proportion of) people are choosing an option.

- If people like me are doing it, do it → Show the user that his or her peers, or people in a similar situation, make a particular choice.

- If people that I aspire to be like are doing it, do it → Show the user that aspirational figures are making a particular choice.

- If something worked before, do it again → Remind the user what worked last time.

- If an expert recommends it, do it → Show the user that expert figures are making a particular choice.
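The pairing of heuristics with design techniques in the list above amounts to a simple lookup. As a minimal sketch, assuming heuristics have been normalised to exactly matching strings (a simplification that real user research would not provide), the mapping could be expressed as follows; `TECHNIQUE_FOR` and `match_techniques` are hypothetical names.

```python
# Heuristic -> design technique pairs, taken from the list above.
TECHNIQUE_FOR = {
    "If lots of people are doing it, do it":
        "Show directly how many (or what proportion of) people are choosing an option.",
    "If people like me are doing it, do it":
        "Show the user that his or her peers, or people in a similar situation, "
        "make a particular choice.",
    "If people that I aspire to be like are doing it, do it":
        "Show the user that aspirational figures are making a particular choice.",
    "If something worked before, do it again":
        "Remind the user what worked last time.",
    "If an expert recommends it, do it":
        "Show the user that expert figures are making a particular choice.",
}


def match_techniques(observed_heuristics):
    """Split observed heuristics into (matched techniques, unmatched heuristics)."""
    matched = {h: TECHNIQUE_FOR[h] for h in observed_heuristics if h in TECHNIQUE_FOR}
    unmatched = [h for h in observed_heuristics if h not in TECHNIQUE_FOR]
    return matched, unmatched


matched, unmatched = match_techniques([
    "If people like me are doing it, do it",
    # A heuristic from the heating case study with no technique in this mapping:
    "If the group agrees with a course of action, then I'll do it",
])
print(matched)
print(unmatched)
```

Returning the unmatched heuristics separately keeps visible the cases where user research has surfaced a rule for which no design technique has yet been catalogued.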

As with the implications and concepts in Table 2, each technique has the property of framing both problem and solution in a particular way. If user research with Amazon customers led to the expression of a heuristic such as “If people that I aspire to be like are doing it, do it”, then a way of framing the specification which directly relates to the heuristic becomes immediately clear; depending on the heuristics uncovered, it might be that majority preference, authority-based messaging, friend-based recommendations, peer voting, celebrity or expert endorsement, or some other techniques entirely could match groups of users’ heuristics better. Sometimes, as with Amazon, a product or service will use more than one technique simultaneously, employing multiple elements, to try to satisfy multiple heuristics, or perhaps because the designers are not sure which heuristics are being used by individual users. Kaptein and Eckles’ (2010) work on persuasion profiling suggests a way forward which would allow directly targeted elements tailored to what influences individual users.

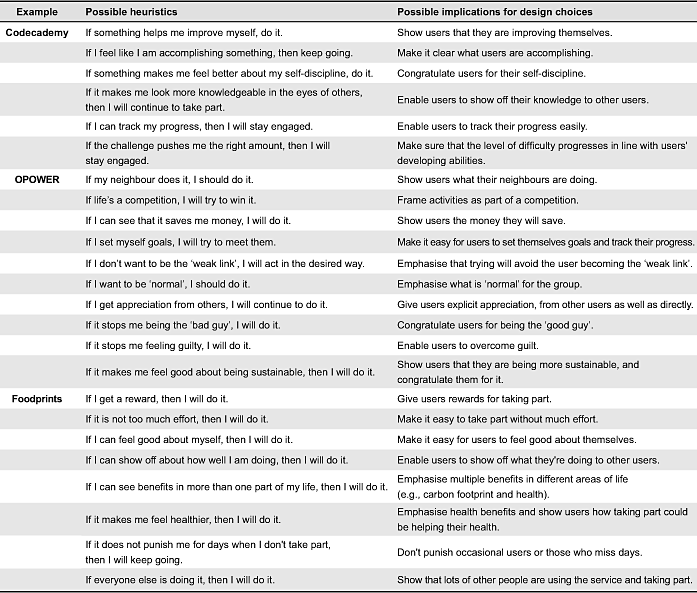

Examples: Codecademy, OPOWER and Foodprints

The analysis outlined above with the Amazon example is exploratory, rough and subjective, carried out by one of the authors. In practice, working with heuristics in design for behaviour change would need to be done as part of a design process, by designers rather than solely academics. To explore how this would work, a workshop session was run at Interaction ’12 in Dublin, Ireland, a major interaction design industry conference. Forty-one participants, including designers and researchers, worked in groups on exploring the assumptions inherent in elements of three current digital services designed to influence users’ behaviour:

Codecademy offers interactive tutorials in JavaScript and Python, using a range of design techniques to encourage participants to sign up and remain motivated. There is ‘gamification’ (Deterding, Dixon, Nacke, O’Hara, & Sicart, 2011) in terms of badges and points, but the focus of the user experience is rapid feedback and a structured progression, intended to maintain engagement. As part of the ‘2012 Code Year’ initiative (which received 300,000 sign-ups in the first week: Pavlus, 2012), other techniques such as commitment & consistency and social proof (Cialdini, 2007) come into play, as participants share their commitment.

OPOWER provides a service to electricity and gas suppliers where customers receive ‘Home Energy Reports’ featuring comparisons with the average energy used by neighbours (with similar size homes) and by the most efficient comparable neighbours. The bills then suggest improvements that customers can make in order to improve their performance. The focus of the graphics is not financial, and the energy quantities given are normative–comparisons with a customer’s ‘peer group’. The graphs are paired with ‘smiley faces’, giving an alternative way of interpreting performance–shying away from criticism, only neutral or positive terms are used (and no ‘sad faces’: Schultz, Nolan, Cialdini, Goldstein, & Griskevicius, 2007). A randomised controlled trial based on 600,000 households in the US (Allcott, 2011) found an average 2% reduction in energy use among households receiving the OPOWER reports.

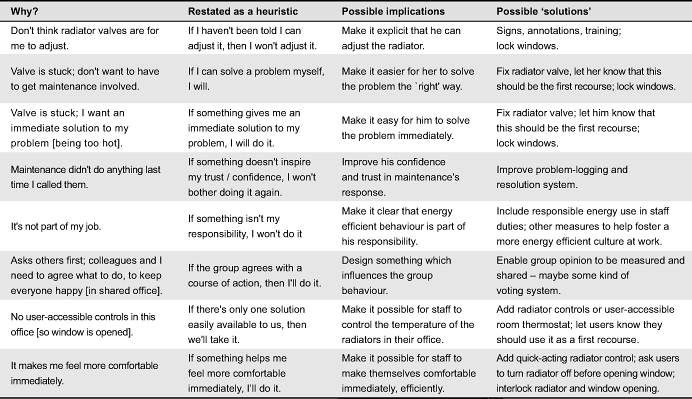

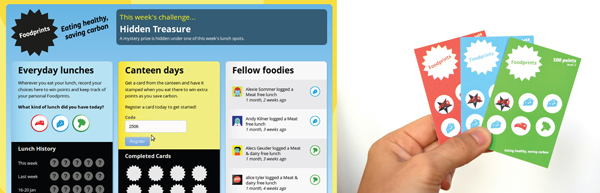

Foodprints (Figure 4), part of the CarbonCulture platform, combines a web app, ‘dashboard’, and physical loyalty cards and stamps in company canteens to help staff reduce their meat and dairy intake, for both environmental (carbon footprint) and health reasons. The app uses some gamification elements (points, ‘hidden treasure’, etc) and a ‘social proof’ ticker showing the meals that colleagues have recorded. The points contribute towards winning prizes, though not in competition with other users (Nicholson, 2011).

Figure 4. The Foodprints online dashboard and cards (Nicholson, 2011).

Through a similar decomposition process to the one described in ‘Example: Amazon recommendations’, groups extracted a handful of statements of possible heuristics for each example (Table 3), with possible implications for design choices. These are by no means necessarily criteria that the developers of Codecademy, OPOWER or Foodprints had in mind for their products, but each heuristic / implication could be exemplified with elements of the products concerned.

Table 3. Possible heuristics, and design implications, extracted by workshop participants.

Thus, if a similar behavioural heuristic–e.g., “If my neighbour does it, I should do it”–were uncovered from user research in a different context with a different product, the design element from OPOWER fulfilling the “Show users what their neighbours are doing” criterion could be used as an example on which to base a new element. In this particular case OPOWER’s visual comparisons with the energy used by neighbours is presented as part of a printed report, but as Atwood (2012) notes, the example translates well into wholly unrelated contexts, such as the design of the reputation system for online question-and-answer forums.

In the workshop, participants went on to assemble sets of heuristics into fictional ‘personas’, for whom they then generated design concepts. While outside the scope of this paper, it is worth noting in passing the possibility of using heuristics as a way of triggering designers to think outside their own perspective on a problem–“design for someone who’s following this rule rather than that one” or “design so that people following either of these rules will be satisfied”.

Discussion: Fitting into Design Practice

Where do behavioural heuristics fit into a design process for behaviour change?

The two approaches which were explained in the sections ‘Case study: extracting heuristics around interaction with heating’ and ‘Reverse engineering of existing examples’ are something like a pincer movement, arriving from two directions at heuristics as a common language of problem-solution pairs. The points where the approaches meet are at various levels of abstraction, but these help provide design practitioners with a variety of routes between user research in a particular behavioural situation, and design principles for behaviour change which are relevant in that context; the levels of abstraction are necessarily different ways of modelling the problem / solution space, rather than a deterministic single route from current behaviour to desired behaviour. Using behavioural heuristics in the design process, starting from the behavioural situation itself, could thus comprise:

- Contextual research with users of the product, service or environment in question, including ‘5 Whys’-style interviews to extract and elaborate the heuristics potentially being followed.

- Analysing and grouping the heuristics uncovered to segment users according to heuristics being followed, and to identify different levels of design intervention feasible, by mapping heuristics to relevant design techniques.

- Generating design concepts based on relevant techniques, and making a selection of those to develop further based on appropriate criteria.

- Prototyping and/or implementing solutions, and evaluating the effects on behaviour as appropriate.
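The second step, grouping users according to the heuristics they follow, can be sketched as a simple aggregation. The example below is illustrative only: the heuristic wordings are our paraphrases of behaviour reported in the heating case study (not quotations from Table 1), and `observations` and `segment_by_heuristic` are hypothetical names.

```python
from collections import defaultdict

# (interviewee number, heuristic) pairs paraphrased from the case study prose.
observations = [
    (2, "If I want cooler, fresher air, sit near the draughty window"),
    (8, "If I want cooler, fresher air, sit near the draughty window"),
    (7, "If too cold, go home"),
] + [
    (n, "If too hot, open the window (or variant)")
    for n in (9, 10, 11, 12, 14, 15, 16)
]


def segment_by_heuristic(pairs):
    """Group users by the heuristic they reported following."""
    segments = defaultdict(list)
    for user, heuristic in pairs:
        segments[heuristic].append(user)
    return dict(segments)


segments = segment_by_heuristic(observations)

# List the largest segments first, as candidates for design attention.
for heuristic, users in sorted(segments.items(), key=lambda kv: -len(kv[1])):
    print(f"{len(users)} interviewee(s): {heuristic} -> {users}")
```

Segment size is only one possible criterion for prioritising; a heuristic followed by a single user may still matter if its consequences are severe.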

The aim is for heuristics to become a tool for helping designers to frame ‘behavioural problems’ via contextual research with users, and with mapping to relevant design principles (and examples). Before the use of heuristics can be presented as a complete ‘system’, this is of course one important element requiring further development: developing a process for this mapping, in a way which is applicable by designers engaged in user research, fitting into methods and processes already used. We suggest that this will enable many of the diverse current approaches to classifying design for behaviour change techniques (e.g., Brand, 2004; Fogg & Hreha, 2010; Lockton et al., 2010a; Lidman & Renström, 2011; Tang & Bhamra, 2008; Tromp et al., 2011; Wever et al., 2008; Zachrisson & Boks, 2012) to be described in common language, and so more easily applied as part of a design process as problems are investigated and re-framed.

The focus is initially at least on the immediate context of interaction, but a laddering approach to interviewing can help elaborate elements of users’ attitudes and ‘background’ reasons for particular behaviour. This offers the potential of addressing all three of the ‘A-B-C’ of attitudes, behaviour and context inherent in behavioural models such as Guagnano, Stern, and Dietz (1995) and Stern (2000) which thus far have not received much attention within design research. It is true that the behavioural heuristics approach as presented in this paper is mainly concerned with investigating and re-designing existing systems rather than creating entirely novel ones–and this can be seen as a limitation–but as Michl (2002) suggests, most commercial design practice is ‘redesign’ in some form. Linking heuristics to design techniques for behaviour change could enable the process to become more generative, enabling the development of innovative solutions rooted in real problems.

A Possible Extension

One possible refinement of “if... then” heuristics could be structuring them along the lines of Minsky’s (1974, p. 1) concept of frames in artificial intelligence, which represent “a stereotyped situation, like being in a certain kind of living room, or going to a child’s birthday party”, with attached information about how to proceed and what can be expected.

This would capture the idea of familiar situations being recognised by users and particular heuristics being enacted, also thereby explicitly taking learned experience into account–and drawing on knowledge from usability research around interaction design patterns and conventions. For example, if someone needs to use a mobile phone he or she has never used before to make a call, the interaction process could be modelled in terms of heuristics involving recognising interface or physical form elements (or not), looking for labels and familiar (or not) icons, and so on, in order to work out how to make a call. Each heuristic has the potential for design intervention to influence the user’s behaviour. The idea also recalls Klein’s (1999) recognition-primed decision model, which considers how “decision makers size up the situation to recognise which course of action makes sense, and the way they evaluate that course of action by imagining it” (p. 24): essentially, a stage of pattern recognition, and a stage (if necessary) of rapid mental simulation before deciding to carry out the action or modify it. The elements of the model important in a design practice context are likely to be different to those in which the models were developed, but that need not stop our appropriation and adoption of models which offer us useful perspectives during the design process.
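One way of picturing a frame-like structure for the mobile phone example is as a stereotyped situation with recognisable cues and attached heuristics. The sketch below is loosely inspired by the idea of frames and is not an implementation of Minsky’s formalism or of Klein’s model; the `Frame` class, the cue names, the heuristics and the 0.5 recognition threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """A stereotyped situation, its recognisable cues, and attached heuristics."""
    situation: str
    cues: set[str]
    heuristics: list[str]

    def match(self, observed: set[str]) -> float:
        """Fraction of this frame's cues present in what the user observes."""
        return len(self.cues & observed) / len(self.cues) if self.cues else 0.0


def recognise(frames, observed, threshold=0.5):
    """Return the best-matching frame, or None if nothing is familiar enough."""
    best = max(frames, key=lambda f: f.match(observed), default=None)
    if best is not None and best.match(observed) >= threshold:
        return best
    return None


# Hypothetical frames for "making a call on an unfamiliar phone".
frames = [
    Frame("keypad phone",
          {"number keys", "green call button"},
          ["If there are number keys, dial then press the green button"]),
    Frame("touchscreen phone",
          {"app icons", "handset icon", "on-screen keypad"},
          ["If there is a handset icon, tap it to find the keypad"]),
]

familiar = recognise(frames, {"number keys", "green call button", "small screen"})
print(familiar.situation if familiar else "no familiar frame")

unfamiliar = recognise(frames, {"rotary dial"})
print(unfamiliar.situation if unfamiliar else "no familiar frame")
```

The case where no frame is recognised is the one of most interest for design, since it marks the point at which labels, icons or physical form could be changed to cue a familiar heuristic.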

Applicability, Limitations and Justification

It is true that the use of heuristics for modelling can be seen as extremely reductionist: representing users as bundles of rules is close to treating “the human as a system component” (Jääskö & Keinonen, 2006, 2010; Rasmussen, 1986, p. 61), maybe even the “trivial machine” of von Foerster (2002). The intention is, nevertheless, to represent, via a very simple modelling approach, the contexts in which interaction behaviour occurs with the products and services we design.

While simple statements of “if... then” heuristics will not capture many of the nuances of the situated strategies people use, they do permit a more granular framing of problems and possible solutions than a number of existing approaches to design for behaviour change, and indeed than conventional approaches to mental models. There are, of course, risks of oversimplification, and even of stereotyping users or user groups, but being able to elaborate multiple levels of abstraction–describing problems in multiple ways based on insights from users themselves–goes some way towards mitigating this. As with the use of personas in user-centred design, avoiding stereotyping while still producing useful descriptions of individuals (and their behaviour) is a challenge, probably best addressed through triangulation, using multiple research methods to compose more informed representations.

The heuristics approach focuses on individuals (and individual decision-making, even when referenced to others) rather than taking into account the wider ecology of interaction situations (Forlizzi, 2008), and this will affect the situations where heuristics are useful in design. For example, if research with individual users is not possible, is it feasible to extract heuristics usefully from automatically generated data such as web analytics? What about physical products where this kind of tracking is not possible? Can ‘revealed’ heuristics, without the elaboration provided by the ‘5 Whys’ stage, still be useful, or will this level of abstraction be simply too far away from the users themselves? In some circumstances, possibly–for example cases relating to safety, where designers may want to change people’s behaviour regardless of the level of users’ understanding (see Lockton et al., 2012 for a discussion)–but this misses the benefits which the process outlined in this paper can offer.

If heuristics can be derived from empirical user research, they can help ensure that users themselves, and their understanding of situations, are included in the design process. As far as designing behaviour change goes, we believe that this is not just desirable, but essential.

Acknowledgements

Thanks to Sebastian Deterding, Hans-Bredow-Institut für Medienforschung, Hamburg, Luke Nicholson of More Associates and Megan Ellinger, The National Academies, Washington, DC, for suggestions on the ideas described in this paper; Sebastiano Giudice for his interviews with University of Warwick staff; interviewees at the Department of Energy & Climate Change and Brunel University; and our workshop participants. This work was funded by the Technology Strategy Board, who played no part in the study design.

References

- Alexander, C. (1964). Notes on the synthesis of form. Cambridge, MA: Harvard University Press.

- Allcott, H. (2011). Social norms and energy conservation. Journal of Public Economics, 95(9-10), 1082-1095.

- Altshuller, G. (1994). And suddenly the inventor appeared: TRIZ, the theory of inventive problem solving. Worcester, MA: Technical Innovation Center.

- Anderson, S. P. (2010). Mental notes. Dallas, TX: Poetpainter LLC.

- Anderson, S. P. (2011). Seductive interaction design: Creating playful, fun and effective user experiences. Berkeley, CA: New Riders.

- Argyris, C., & Schön, D. A. (1974). Theory in practice: Increasing professional effectiveness. San Francisco, CA: Jossey-Bass.

- Atwood, J. (2012). For a bit of colored ribbon. Retrieved January 5, 2013, from http://www.codinghorror.com/blog/2012/11/for-a-bit-of-colored-ribbon.html

- Ayres, P. (2007). The origin of modelling. Kybernetes, 36(9/10), 1225-1237.

- Beer, S. (1974). Designing freedom. London, UK: Wiley.

- Besnard, D., Greathead, D., & Baxter, G. (2004). When mental models go wrong: Co-occurrences in dynamic, critical systems. International Journal of Human-Computer Studies, 60(1), 117-128.

- Box, G. E. P., & Draper, N. R. (1987). Empirical model-building and response surfaces. New York, NY: Wiley.

- Brand, R. (2004). Can we make people want what they ought to want – and should we? Historical lessons for sustainability planners. In P. Wilding (Ed.), Proceedings of the 6th International Summer Academy on Technology Studies: Urban Infrastructure in Transition (pp. 11-27). Graz, Austria: IFZ.

- Burns, C. M., & Hajdukiewicz, J. R. (2004). Ecological interface design. Boca Raton, FL: CRC Press.

- Carroll, J. M., Olson, J. R., & Anderson, N. S. (1987). Mental models in human-computer interaction: Research issues about what the user of software knows. Washington, DC: National Research Council.

- Cialdini, R. B. (2007). Influence: The psychology of persuasion (Rev. Ed.). New York, NY: Collins.

- Clement, J. (1991). Non-formal reasoning in experts and in science students: The use of analogies, extreme cases, and physical intuition. In J. F. Voss, D. N. Perkins, & J. W. Segal (Eds.), Informal reasoning and education (pp. 345-362). London, UK: Routledge.

- Colborne, G. (2010). Simple and usable: Web, mobile & interaction design. Berkeley, CA: New Riders.

- Collins, A., & Gentner, D. (1987). How people construct mental models. In D. Holland, & N. Quinn (Eds.), Cultural models in language and thought (pp. 243-265). Cambridge, UK: Cambridge University Press.

- Conant, R. C., & Ashby, W. R. (1970). Every good regulator of a system must be a model of that system. International Journal of Systems Science, 1(2), 89-97.

- Crilly, N. (2011). Do users know what designers are up to? Product experience and the inference of persuasive intentions. International Journal of Design, 5(3), 1-15.

- Cross, N. (2006). Designerly ways of knowing. London, UK: Springer.

- de Bono, E. (1993). Serious creativity. London, UK: HarperCollins.

- Deci, E. L., & Ryan, R. M. (1985). Intrinsic motivation and self-determination in human behaviour. New York, NY: Plenum.

- Desmet, P. M. A., & Hekkert, P. (2009). Special issue editorial: Design & emotion. International Journal of Design, 3(2), 1-6.

- Deterding, S., Dixon, D., Nacke, L. E., O’Hara, K., & Sicart, M. (2011). Gamification: Using game design elements in non-gaming contexts. In Proceedings of SIGCHI Conference on Human Factors in Computing Systems (Extended Abstracts, pp. 2425-2428). New York, NY: ACM.

- Dirksen, J. (2012). Design for how people learn. Berkeley, CA: New Riders.

- Dörner, D. (1983). Heuristics and cognition in complex systems. In R. Groner, M. Groner, & W. F. Bischof (Eds.), Methods of heuristics (pp. 89-108). Hillsdale, NJ: Lawrence Erlbaum Associates.

- Dorst, K., & Cross, N. (2001). Creativity in the design process: Co-evolution of problem-solution. Design Studies, 22(5), 425-437.

- Dubberly, H., & Pangaro, P. (2007). Cybernetics and service-craft: Language for behavior-focused design. Kybernetes, 36(9), 1301-1317.

- Ebenreuter, N. (2007). The dynamics of design. Kybernetes, 36(9), 1318-1328.

- Fogg, B. J. (2003). Persuasive technology: Using computers to change what we think and do. San Francisco, CA: Morgan Kaufmann.

- Fogg, B. J., & Hreha, J. (2010). Behavior wizard: A method for matching target behaviors with solutions. In T. Ploug, P. Hasle, & H. Oinas-Kukkonen (Eds.), Proceedings of the 5th International Conference on Persuasive Technology (pp. 117-131). Berlin, Germany: Springer.

- Forlizzi, J. (2008). The product ecology: Understanding social product use and supporting design culture. International Journal of Design, 2(1), 11-20.

- Frederick, S. (2002). Automated choice heuristics. In T. Gilovich, D. Griffin, & D. Kahneman (Eds.), Heuristics and biases. New York, NY: Cambridge University Press.

- Froehlich, J. E., Findlater, L., & Landay, J. A. (2010). The design of eco-feedback technology. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1999-2008). New York, NY: ACM.

- Gardner, R., Ostrom, E., & Walker, J. (1992). Social capital and cooperation: Communication, bounded rationality, and behavioral heuristics. In Proceedings of the 3rd Conference for the International Association for the Study of Common Property. Bloomington, IN: International Association for the Study of Common Property.

- Gaver, W., Dunne, A., & Pacenti, E. (1999). Cultural probes. Interactions, 6(1), 21-29.

- Gigerenzer, G., & Selten, R. (2001). Rethinking rationality. In G. Gigerenzer & R. Selten (Eds.), Bounded rationality: The adaptive toolbox (pp. 1-12). Cambridge, MA: MIT Press.

- Gigerenzer, G., Todd, P. M., & ABC Research Group. (1999). Simple heuristics that make us smart. New York, NY: Oxford University Press.

- Gilovich, T., Griffin, D., & Kahneman, D. (Eds.) (2002). Heuristics and biases: The psychology of intuitive judgment. New York, NY: Cambridge University Press.

- Goldstein, D. G., & Gigerenzer, G. (1999). The recognition heuristic: How ignorance makes us smart. In G. Gigerenzer, P. M. Todd, & ABC Research Group. (Eds.), Simple heuristics that make us smart (pp. 37-58). New York, NY: Oxford University Press.

- Gram-Hansen, S. B. (2010). Persuasion, ethics and context awareness: Towards a platform for persuasive design founded in the notion of Kairos. In T. Ploug, P. Hasle, & H. Oinas-Kukkonen (Eds.), Poster Proceedings of the 5th International Conference on Persuasive Technology, Persuasive 2010, Copenhagen (pp. 93-96). Oulu, Finland: University of Oulu.

- Groner, R., Groner, M., & Bischof, W. F. (1983). Approaches to heuristics: A historical review. In R. Groner, M. Groner, & W. F. Bischof (Eds.), Methods of heuristics (pp. 1-18). Hillsdale, NJ: Lawrence Erlbaum Associates.

- Guagnano, G. A., Stern, P. C., & Dietz, T. (1995). Influences on attitude-behavior relationships. Environment and Behavior, 27(5), 699-718.

- Hadi, M., & Halfhide, C. (2009). The move to low-carbon design: Are designers taking the needs of building users into account? A guide for building designers, operators and users. Watford, UK: IHS BRE Press.

- Hawley, M. (2009, July 6). Laddering: A research interview technique for uncovering core values. Retrieved April 5, 2012, from http://www.uxmatters.com/mt/archives/2009/07/laddering-a-research-interview-technique-for-uncovering-core-values.php

- Hey, J. H. G. (2008). Effective framing in design (Doctoral dissertation). Berkeley, CA: University of California.

- Ho, D. K. L., & Lee, Y. C. (2012). The quality of design participation: Intersubjectivity in design practice. International Journal of Design, 6(1), 71-83.

- Hunt, R. M., & Rouse, W. B. (1982). A fuzzy rule-based model of human problem-solving. In Proceedings of the American Control Conference (pp. 292-300). Washington, DC: IEEE Computer Society.

- Ishikawa, K. (1990). Introduction to quality control (J. H. Loftus, Trans.). Portland, OR: Productivity Press.

- Iskold, A. (2007, January 26). The art, science and business of recommendation engines. Retrieved April 5, 2012, from http://www.readwriteweb.com/archives/recommendation_engines.php

- Jääskö, V., & Keinonen, T. (2006). User information in concepting. In T. Keinonen & R. Takala (Eds.), Product concept design: A review of the conceptual design of products in industry (pp. 92-131). Berlin, Germany: Springer.

- Jenkins, D. P., Stanton, N. A., Salmon, P. M., & Walker, G. H. (2008). Cognitive work analysis: Coping with complexity. Farnham, UK: Ashgate.

- Johnson, E. J., & Goldstein, D. G. (2004). Defaults and donation decisions. Transplantation, 78(12), 1713-1716.

- Jones, N. A., Ross, H., Lynam, T., Perez, P., & Leitch, A. (2011). Mental models: An interdisciplinary synthesis of theory and methods. Ecology and Society, 16(1), article 46.

- Kalnikaitė, V., Rogers, Y., Bird, J., Villar, N., Bachour, K., Payne, S., … Kreitmayer, S. (2011). How to nudge in situ: Designing lambent devices to deliver salient information in supermarkets. In Proceedings of the 13th International Conference on Ubiquitous Computing (pp. 11-20). New York, NY: ACM.

- Kaptein, M., & Eckles, D. (2010). Selecting effective means to any end: Futures and ethics of persuasion profiling. In T. Ploug, P. Hasle, & H. Oinas-Kukkonen (Eds.), Proceedings of the 5th International Conference on Persuasive Technology (pp. 82-93). Berlin, Germany: Springer.

- Keinonen, T. (2010). Protect and appreciate: Notes on the justification of user-centered design. International Journal of Design, 4(1), 17-27.

- Kempton, W. (1986). Two theories of home heat control. Cognitive Science, 10(1), 75-90.

- Kesan, J. P., & Shah, R. C. (2006). Setting software defaults: Perspectives from law, computer science and behavioral economics. Notre Dame Law Review, 82(2), 583-634.

- Kimbell, L. (2011). Designing for service as one way of designing services. International Journal of Design, 5(2), 41-52.

- Klein, G. A. (1999). Sources of power: How people make decisions. Cambridge, MA: MIT Press.

- Krippendorff, K. (2007). The cybernetics of design and the design of cybernetics. Kybernetes, 36(9/10), 1381-1392.

- Krug, S. (2006). Don’t make me think! A common sense approach to web usability (2nd ed.). Berkeley, CA: New Riders.

- Lidman, K., & Renström, S. (2011). How to design for sustainable behaviour? A review of design strategies and an empirical study of four product concepts (Master’s thesis). Göteborg, Sweden: Chalmers University of Technology.

- Lilley, D. (2009). Design for sustainable behaviour: Strategies and perceptions. Design Studies, 30(6), 704-720.

- Lockton, D., Cain, R., Harrison, D. J., Giudice, S., Nicholson, L., & Jennings, P. (2011). Behaviour change at work: Empowering energy efficiency in the workplace through user-centred design. In Proceedings of the 5th Conference on Behavior, Energy & Climate Change. Stanford, CA: Precourt Energy Efficiency Center.

- Lockton, D., Harrison, D. J., & Stanton, N. A. (2010a). Design with intent: 101 patterns for influencing behaviour through design. Windsor, UK: Equifine.

- Lockton, D., Harrison, D. J., & Stanton, N. A. (2010b). The Design with Intent method: A design tool for influencing user behaviour. Applied Ergonomics, 41(3), 382-392.

- Lockton, D., Harrison, D. J., & Stanton, N. A. (2012). Models of the user: Designers’ perspectives on influencing sustainable behaviour. Journal of Design Research, 10(1/2), 7-27.

- Maher, M. L., Poon, J., & Boulanger, S. (1996). Formalising design exploration as co-evolution: A combined gene approach. In J. S. Gero & F. Sudweeks (Eds.), Advances in formal design methods for CAD (pp. 3-30). London, UK: Chapman & Hall.

- Marshall, M. (2006, December 10). Aggregate Knowledge raises $5M from Kleiner, on a roll. Retrieved April 5, 2012, from http://venturebeat.com/2006/12/10/aggregate-knowledge-raises-5m-from-kleiner-on-a-roll

- Mazé, R., & Redström, J. (2008). Switch! Energy ecologies in everyday life. International Journal of Design, 2(3), 55-70.

- Michie, S., van Stralen, M. M., & West, R. (2011). The behaviour change wheel: A new method for characterising and designing behaviour change interventions. Implementation Science, 6, article 42.

- Michl, J. (2002). On seeing design as redesign: An exploration of a neglected problem in design education. Scandinavian Journal of Design History, 12, 7-23.

- Minsky, M. L. (1974). A framework for representing knowledge. MIT artificial intelligence memo (no. 306). Cambridge, MA: MIT AI Laboratory.

- Minsky, M. L. (1983). Jokes and the logic of the cognitive unconscious. In R. Groner, M. Groner, & W. F. Bischof (Eds.), Methods of heuristics (pp. 171-193). Hillsdale, NJ: Lawrence Erlbaum Associates.

- Minsky, M. L. (2006). The emotion machine: Commonsense thinking, artificial intelligence, and the future of the human mind. New York, NY: Simon & Schuster.

- Moray, N. (1996). Mental models in theory and practice. In D. Gopher & A. Koriat (Eds.), Attention and performance XVII, cognitive regulation of performance: Interaction of theory and application (pp. 223-258). Cambridge, MA: MIT Press.

- Newell, A. (1983). The heuristic of George Pólya and its relation to artificial intelligence. In R. Groner, M. Groner, & W. F. Bischof (Eds.), Methods of heuristics (pp. 195-243). Hillsdale, NJ: Lawrence Erlbaum Associates.

- Nicholson, L. (2011). Changing environmental behaviour – Digitally. Public Sector Sustainability, 2(5), 30-31.

- Nuffield Council on Bioethics. (2007). Public health: Ethical issues. Retrieved April 5, 2012, from http://www.nuffieldbioethics.org/sites/default/files/Public%20health%20-%20ethical%20issues.pdf

- Ozkaramanli, D., & Desmet, P. M. A. (2012). I knew I shouldn’t, yet I did it again! Emotion-driven design as a means to subjective well-being. International Journal of Design, 6(1), 27-39.

- Papert, S. (1980). Mindstorms: Children, computers, and powerful ideas. New York, NY: Basic Books.

- Pavlus, J. (2012, January 10). Code year, a programming class for dummies that goes out via email. Retrieved April 5, 2012, from http://www.fastcodesign.com/1665779/code-year-a-programming-class-for-dummies-that-goes-out-via-email

- Payne, S. J. (1991). A descriptive study of mental models. Behaviour & Information Technology, 10(1), 3-21.

- Pettersen, I. N., & Boks, C. (2008). The ethics in balancing control and freedom when engineering solutions for sustainable behaviour. International Journal of Sustainable Engineering, 1(4), 287-297.

- Pfarr, N., Cervantes, M., Lavey, J., Lee, J., Hintzman, A., & Vuong, V. (2010). Brains, behavior & design toolkit. Retrieved April 5, 2012, from http://www.brainsbehavioranddesign.com

- Pólya, G. (1945). How to solve it. Princeton, NJ: Princeton University Press.

- Postma, C. E., Zwartkruis-Pelgrim, E., Daemen, E., & Du, J. (2012). Challenges of doing empathic design: Experiences from industry. International Journal of Design, 6(1), 59-70.

- Pylipow, P. E., & Royall, W. E. (2001). Root cause analysis in a world-class manufacturing operation. Quality, 40(10), 66-70.

- Rasmussen, J. (1983). Skills, rules, and knowledge: Signals, signs, and symbols, and other distinctions in human performance models. IEEE Transactions on Systems, Man, and Cybernetics, 13(3), 257-266.

- Rasmussen, J. (1986). Information processing and human-machine interaction: An approach to cognitive engineering. New York, NY: North-Holland.

- Reason, J. (1990). Human error. Cambridge, UK: Cambridge University Press.

- Redström, J. (2005). Towards user design? On the shift from object to user as the subject of design. Design Studies, 27(2), 123-139.

- Rith, C., & Dubberly, H. (2007). Why Horst W. J. Rittel matters. Design Issues, 23(1), 72-91.

- Rittel, H. W. J., & Webber, M. M. (1973). Dilemmas in a general theory of planning. Policy Sciences, 4(2), 155-169.

- Rogers, Y. (2011). Interaction design gone wild: Striving for wild theory. Interactions, 18(4), 58-62.

- Rouse, W. B. (1983). Models of human problem solving: Detection, diagnosis, and compensation for system failures. Automatica, 19(6), 613-625.

- Sangiorgi, D. (2011). Transformative services and transformation design. International Journal of Design, 5(2), 29-40.

- Scholten, D. L. (2009, October). Every good key must be a model of the lock it opens (The Conant & Ashby Theorem revisited). Retrieved April 5, 2012, from http://goodregulatorproject.org/images/Every_Good_Key_Must_Be_A_Model_Of_The_Lock_It_Opens.pdf

- Schön, D. (1983). The reflective practitioner: How professionals think in action. London, UK: Temple Smith.

- Schultz, P. W., Nolan, J. M., Cialdini, R. B., Goldstein, N. J., & Griskevicius, V. (2007). The constructive, destructive, and reconstructive power of social norms. Psychological Science, 18(5), 429-434.

- Senge, P. M. (1990). The fifth discipline: The art & practice of the learning organisation. New York, NY: Doubleday.

- Simon, H. A. (1956). Rational choice and the structure of the environment. Psychological Review, 63(2), 129-138.

- Simon, H. A. (1969). The sciences of the artificial. Cambridge, MA: MIT Press.

- Stern, P. C. (2000). Toward a coherent theory of environmentally significant behavior. Journal of Social Issues, 56(3), 407-424.

- Straker, D., & Rawlinson, G. (2002). How to invent (almost) anything. London, UK: Spiro Business Guides.

- Suchman, L. A. (2007). Human-machine reconfigurations: Plans and situated actions (2nd ed.). Cambridge, UK: Cambridge University Press.

- Tang, T., & Bhamra, T. (2008). Understanding consumer behaviour to reduce environmental impacts through sustainable product design. In Proceedings of the 4th Biennial Conference of the Design Research Society (pp. 183/1-183/15). Sheffield, UK: Sheffield Hallam University.

- Thaler, R. H. (1999). Mental accounting matters. Journal of Behavioral Decision Making, 12(3), 183-206.

- Thaler, R. H., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. New Haven, CT: Yale University Press.

- Todd, P. M., Gigerenzer, G., & the ABC Research Group. (2012). Ecological rationality: Intelligence in the world. New York, NY: Oxford University Press.

- Todd, P. M., Rogers, Y., & Payne, S. J. (2011). Nudging the trolley in the supermarket: How to deliver the right information to shoppers. International Journal of Mobile Human Computer Interaction, 3(2), 20-34.

- Tromp, N., Hekkert, P., & Verbeek, P. P. (2011). Design for socially responsible behavior: A classification of influence based on intended user experience. Design Issues, 27(3), 3-19.

- Tversky, A., & Kahneman, D. (1973). Availability: A heuristic for judging frequency and probability. Cognitive Psychology, 5(2), 207-232.

- Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185(4157), 1124-1131.

- Vicente, K. J. (1999). Cognitive work analysis: Toward safe, productive, and healthy computer-based work. Boca Raton, FL: CRC Press.

- Visser, T., Vastenburg, M. H., & Keyson, D. V. (2011). Designing to support social connectedness: The case of SnowGlobe. International Journal of Design, 5(3), 129-142.

- von Foerster, H. (2002). Perception of the future and the future of perception. In H. von Foerster (Ed.), Understanding understanding: Essays on cybernetics and cognition (pp. 199-210). New York, NY: Springer.

- von Wright, G. H. (1972). On so-called practical inference. Acta Sociologica, 15(1), 39-53.

- Weinschenk, S. M. (2009). Neuro web design: What makes them click? Berkeley, CA: New Riders.

- Weinschenk, S. M. (2011). 100 things every designer needs to know about people. Berkeley, CA: New Riders.

- Wever, R. (2012). Editorial: Special issue on design for sustainable behaviour. Journal of Design Research, 10(1/2), 1-6.

- Wever, R., van Kuijk, J., & Boks, C. (2008). User-centred design for sustainable behaviour. International Journal of Sustainable Engineering, 1(1), 9-20.

- Zachrisson, J., & Boks, C. (2012). Exploring behavioural psychology to support design for sustainable behaviour research. Journal of Design Research, 10(1/2), 50-66.